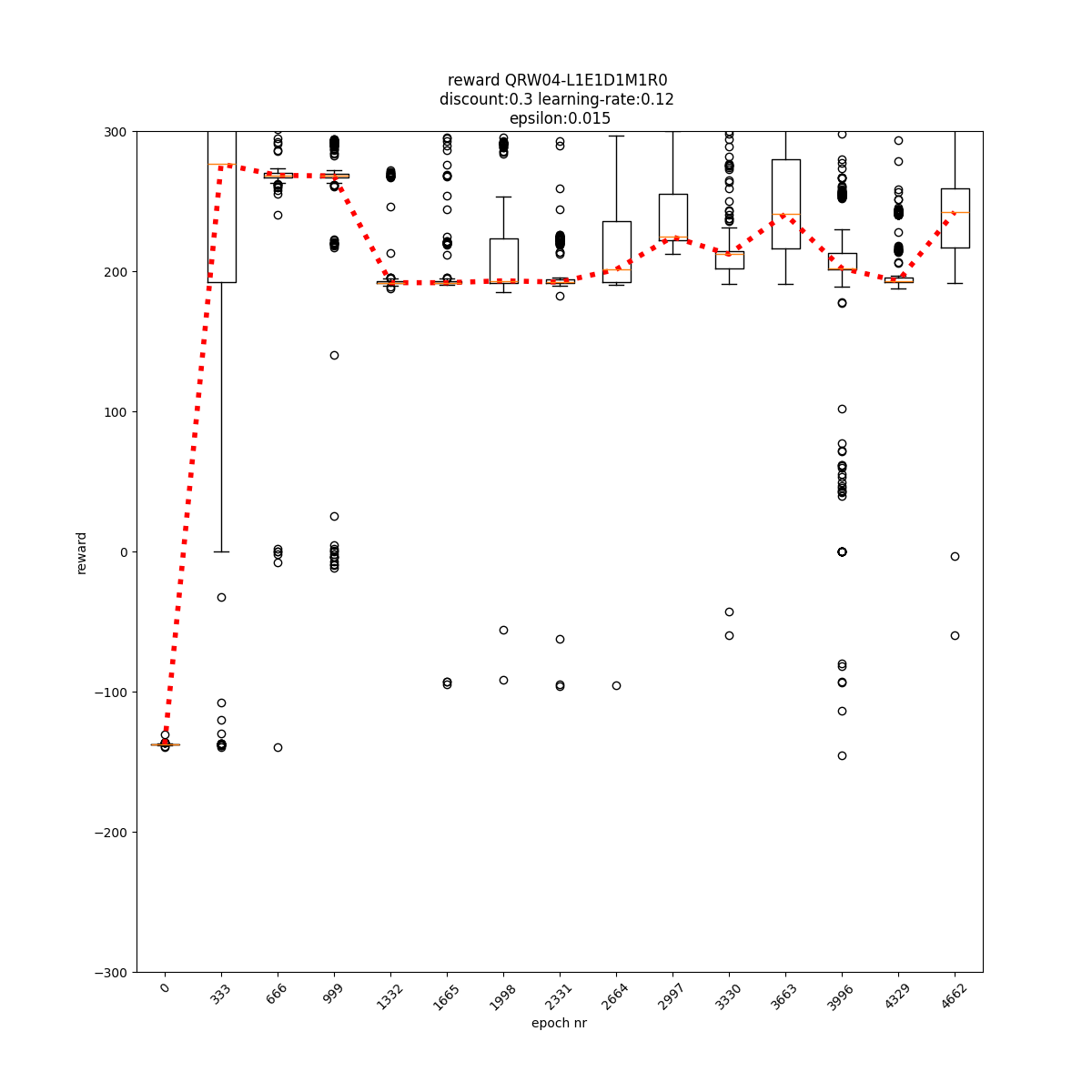

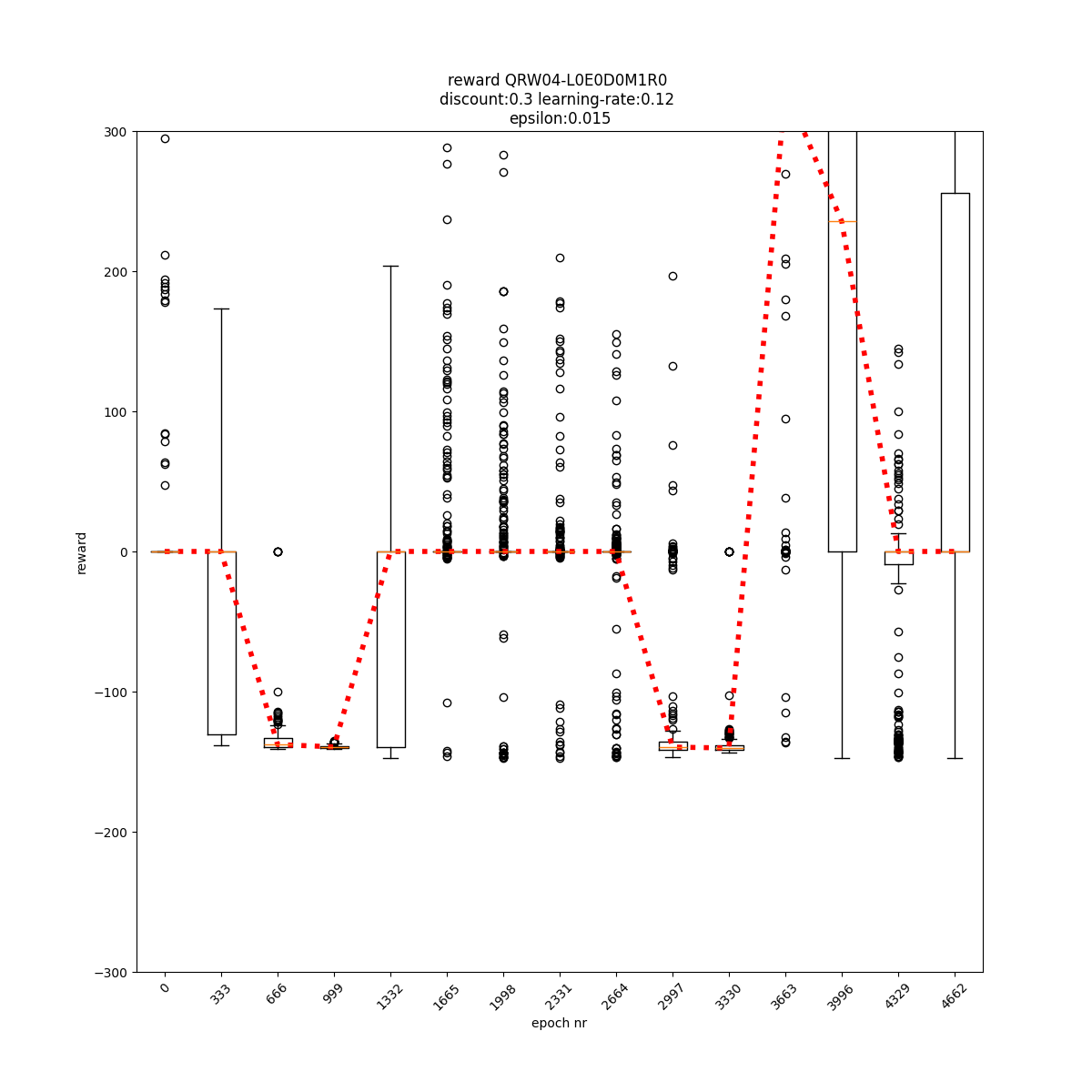

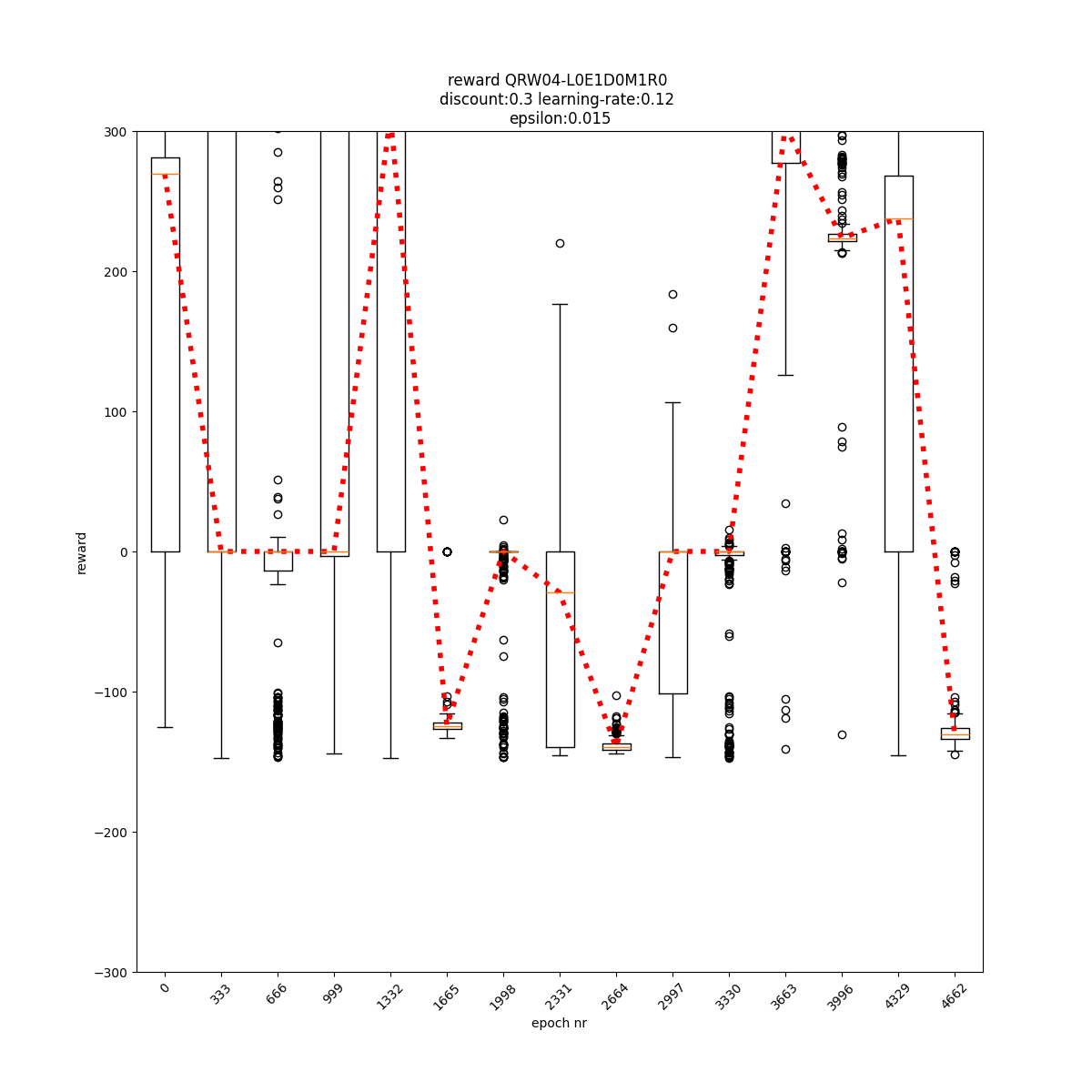

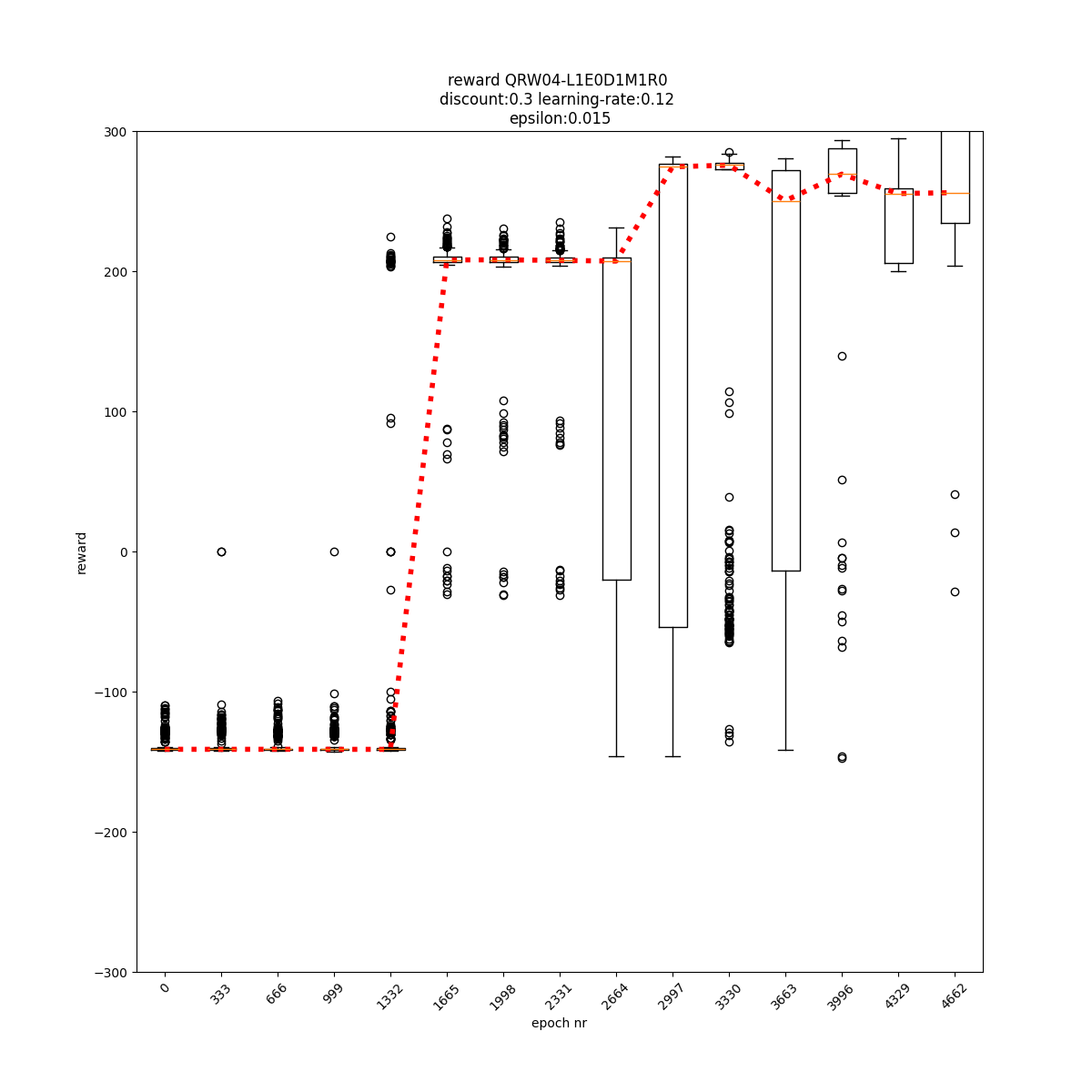

q-values (video 0 to video 11)

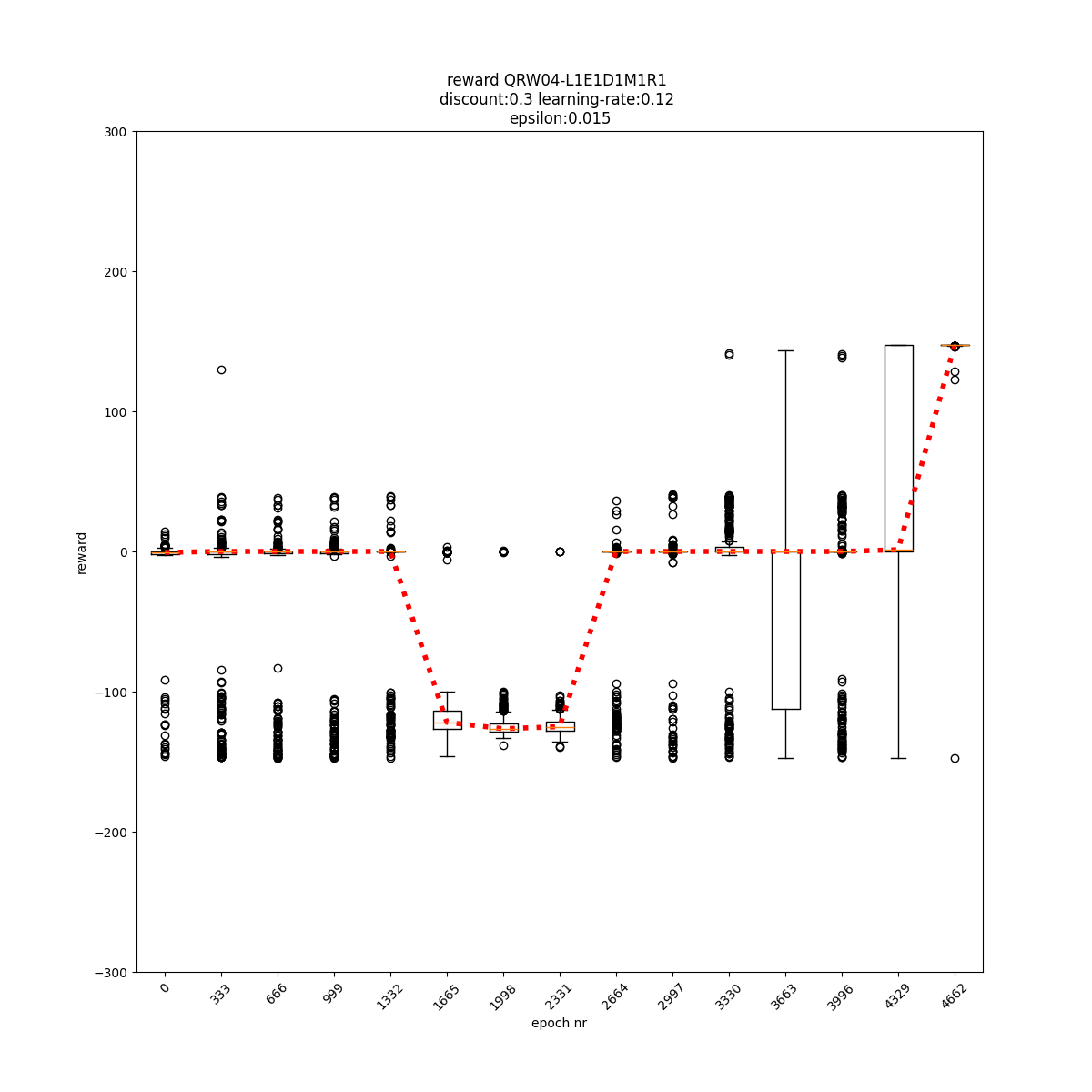

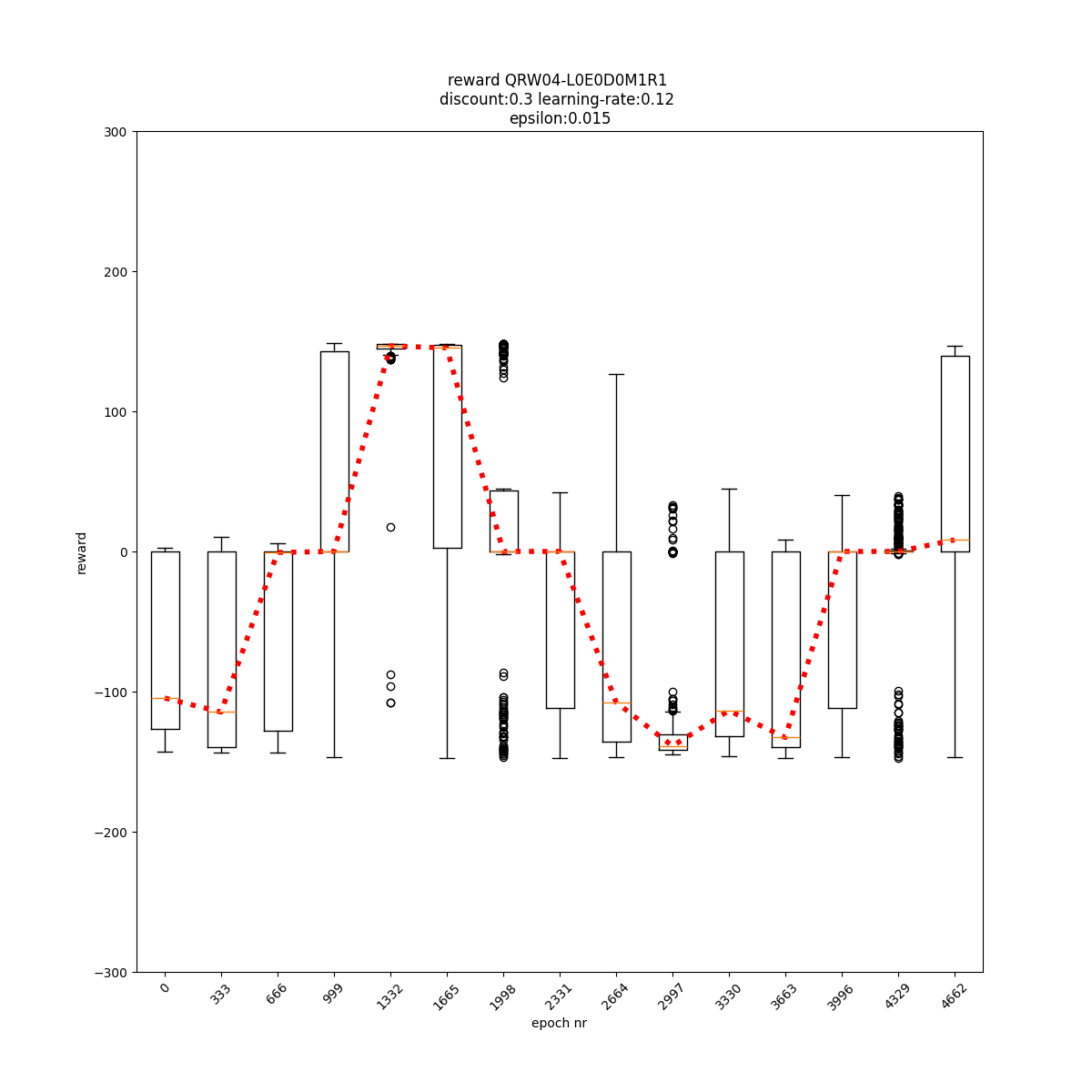

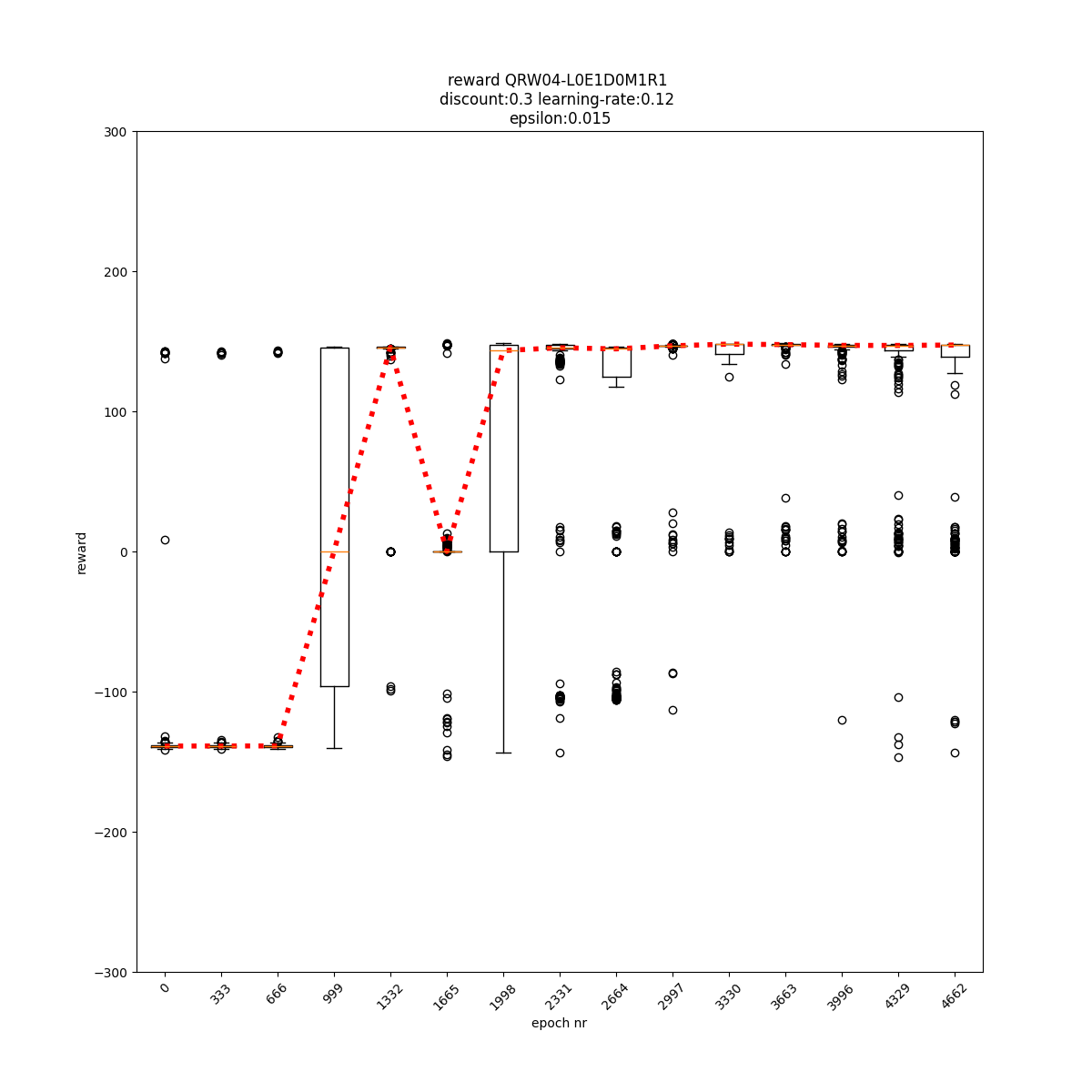

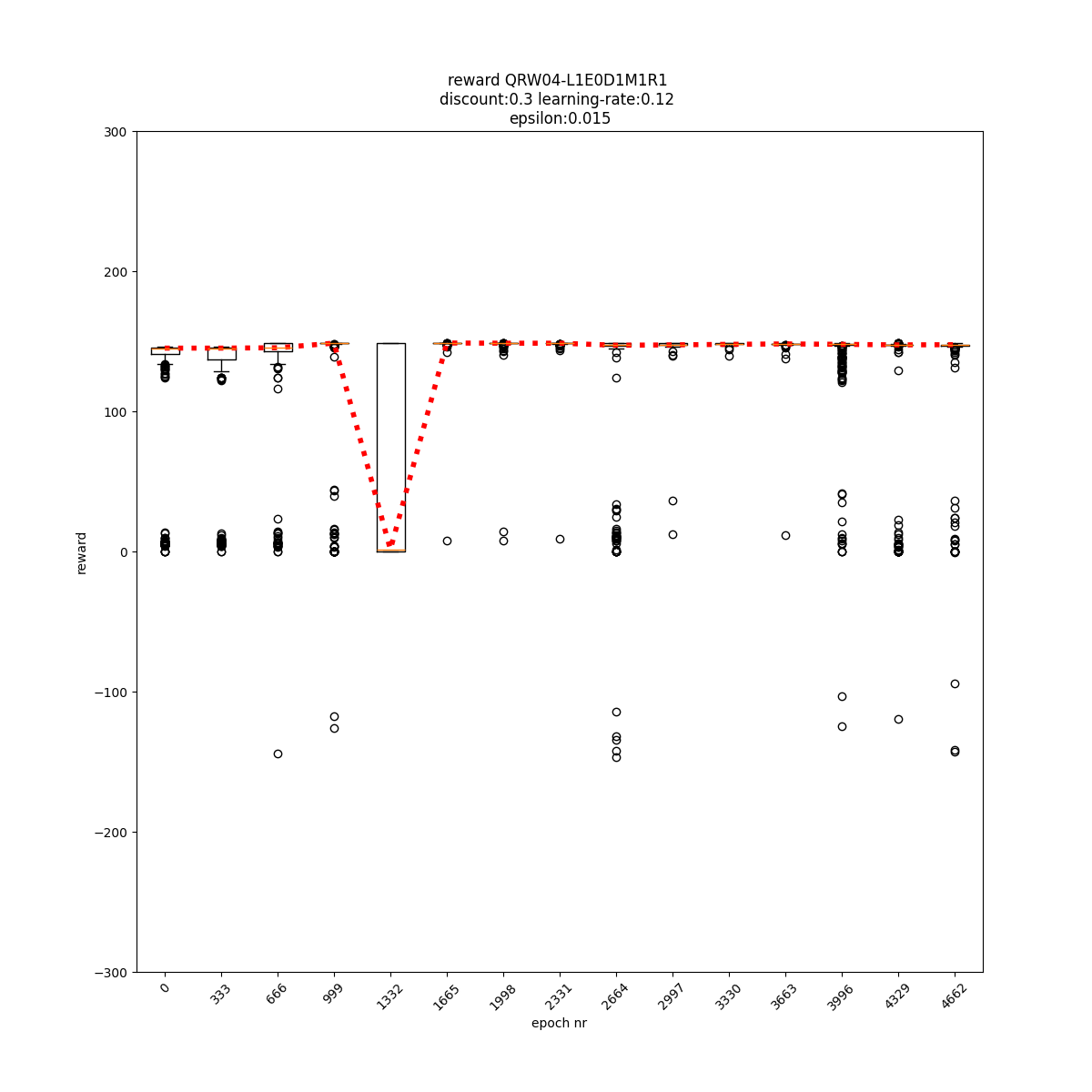

q-values (video 0 to video 11)

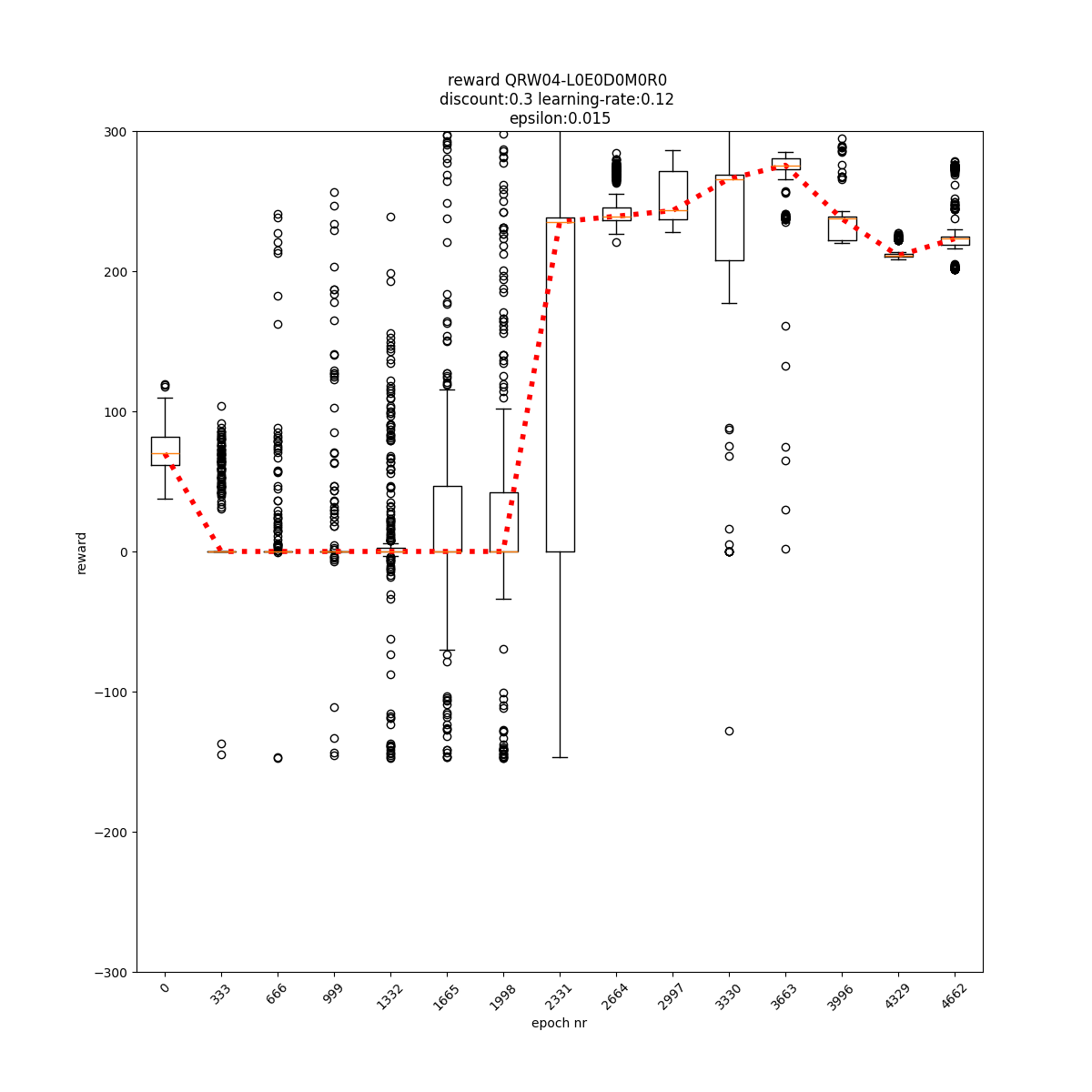

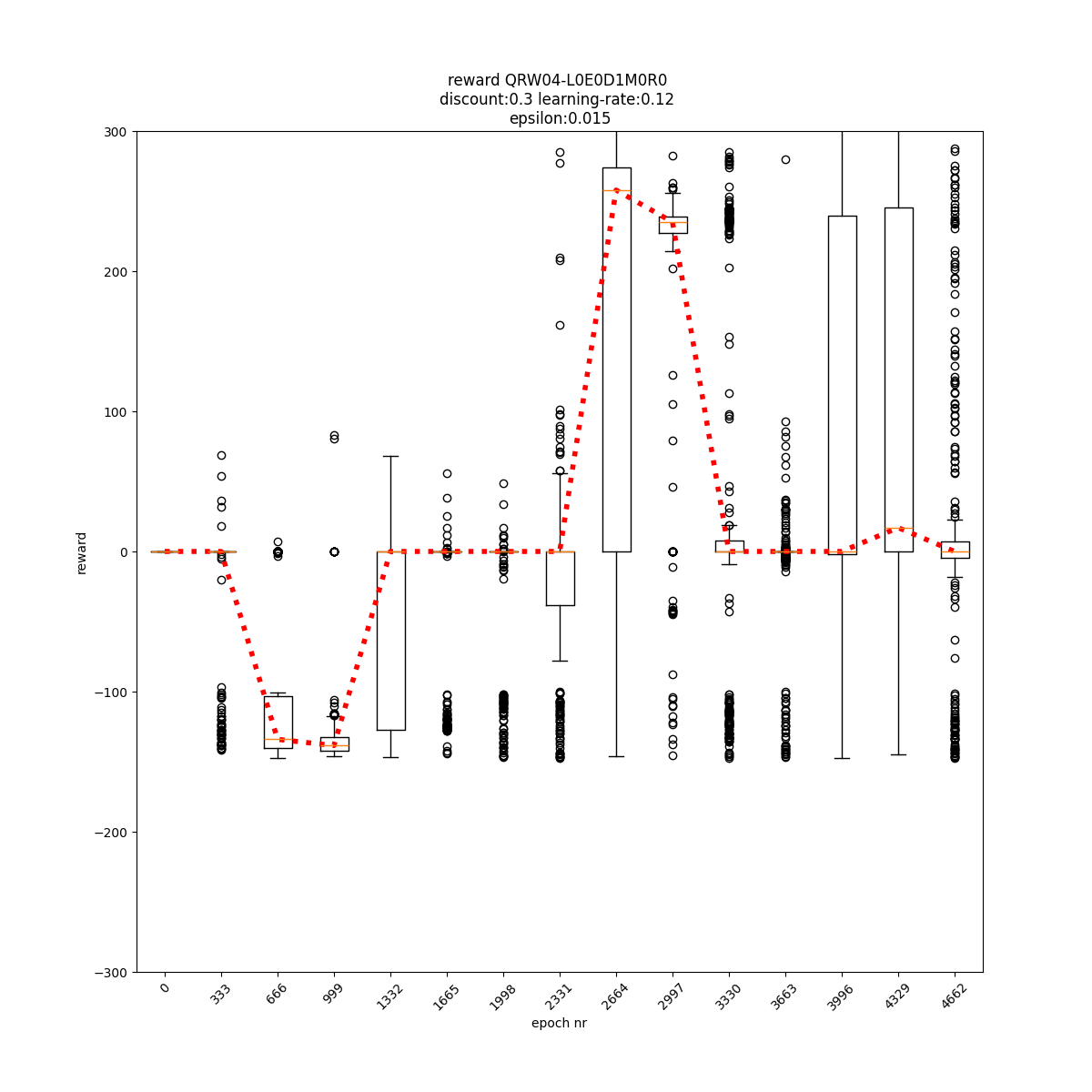

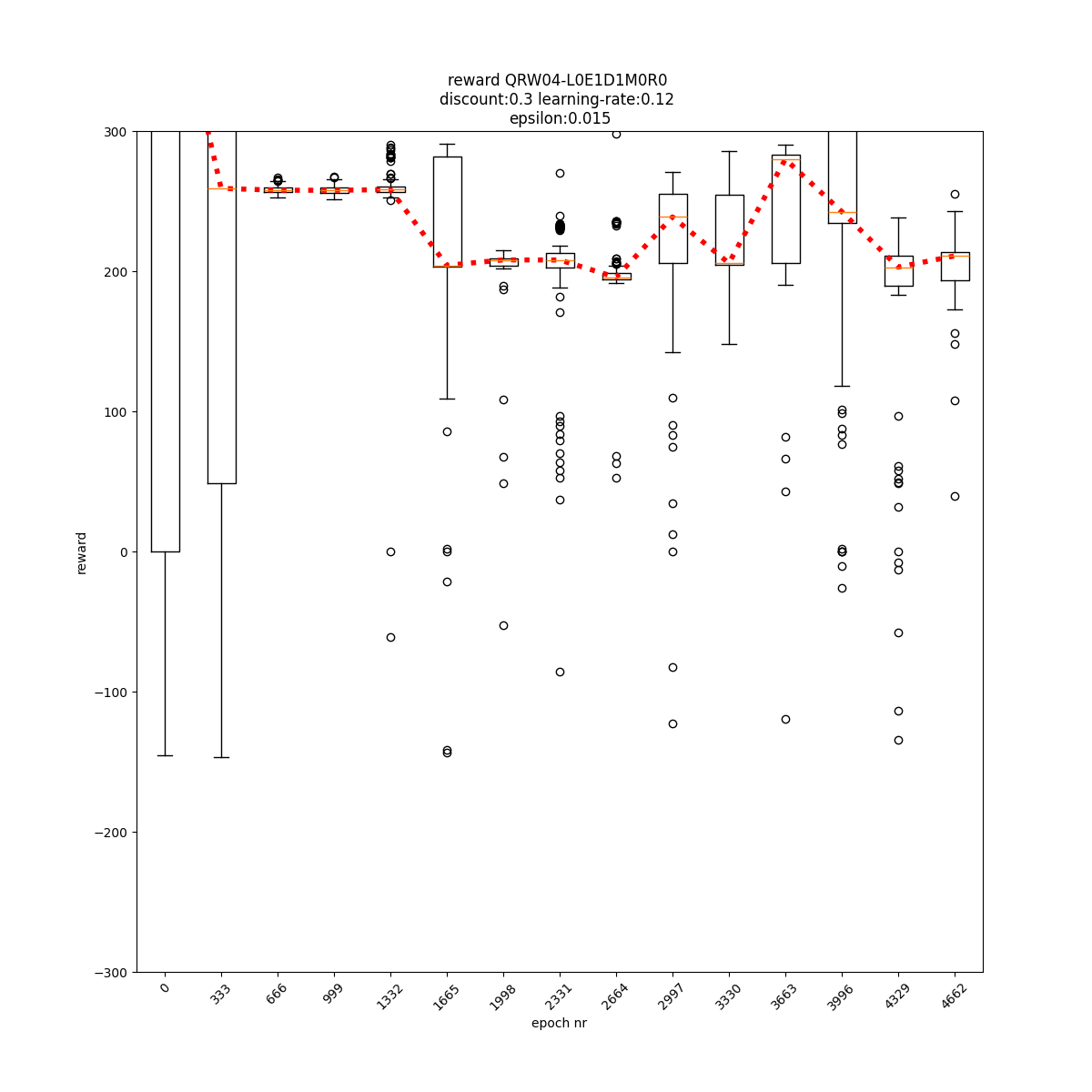

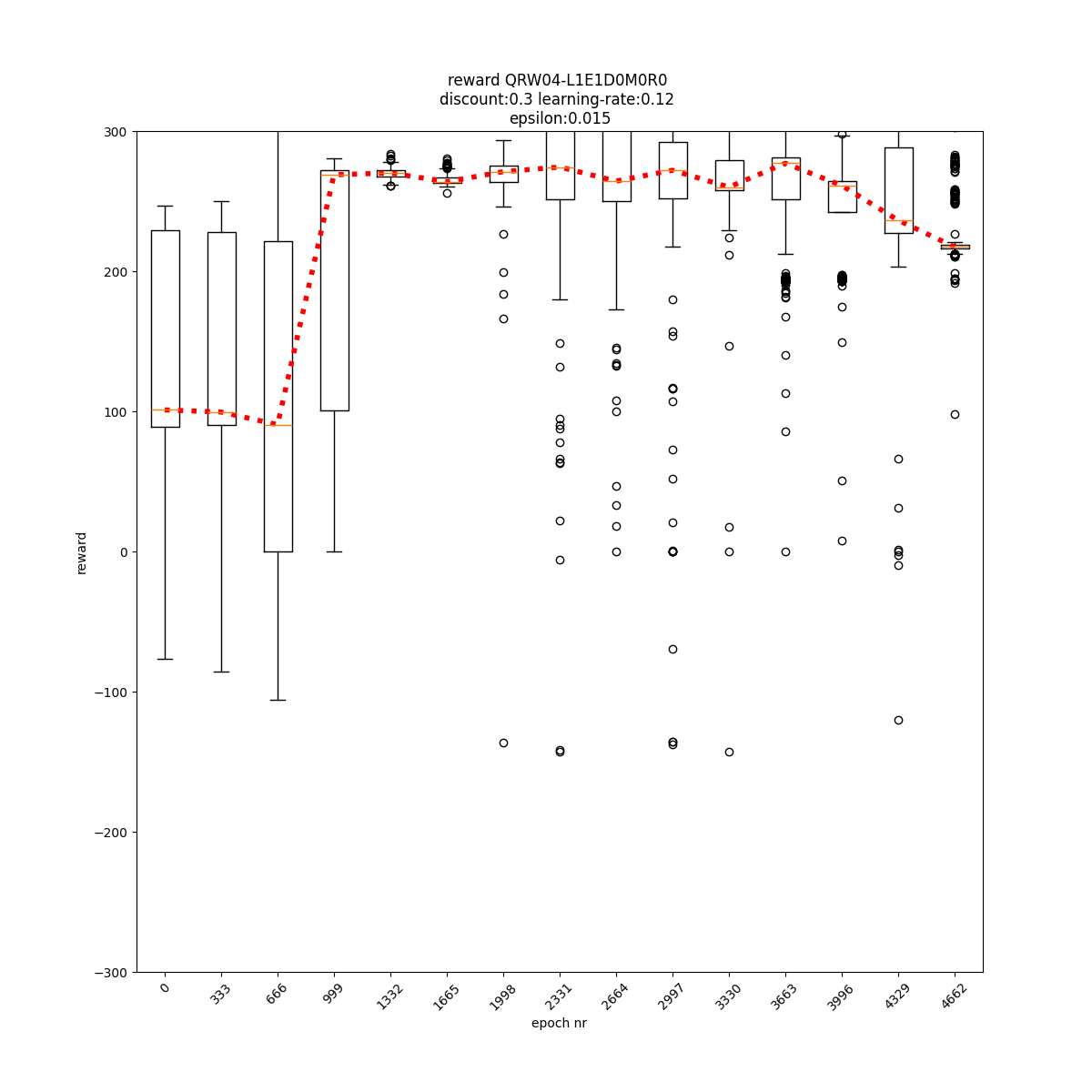

q-values (video 0 to video 11)

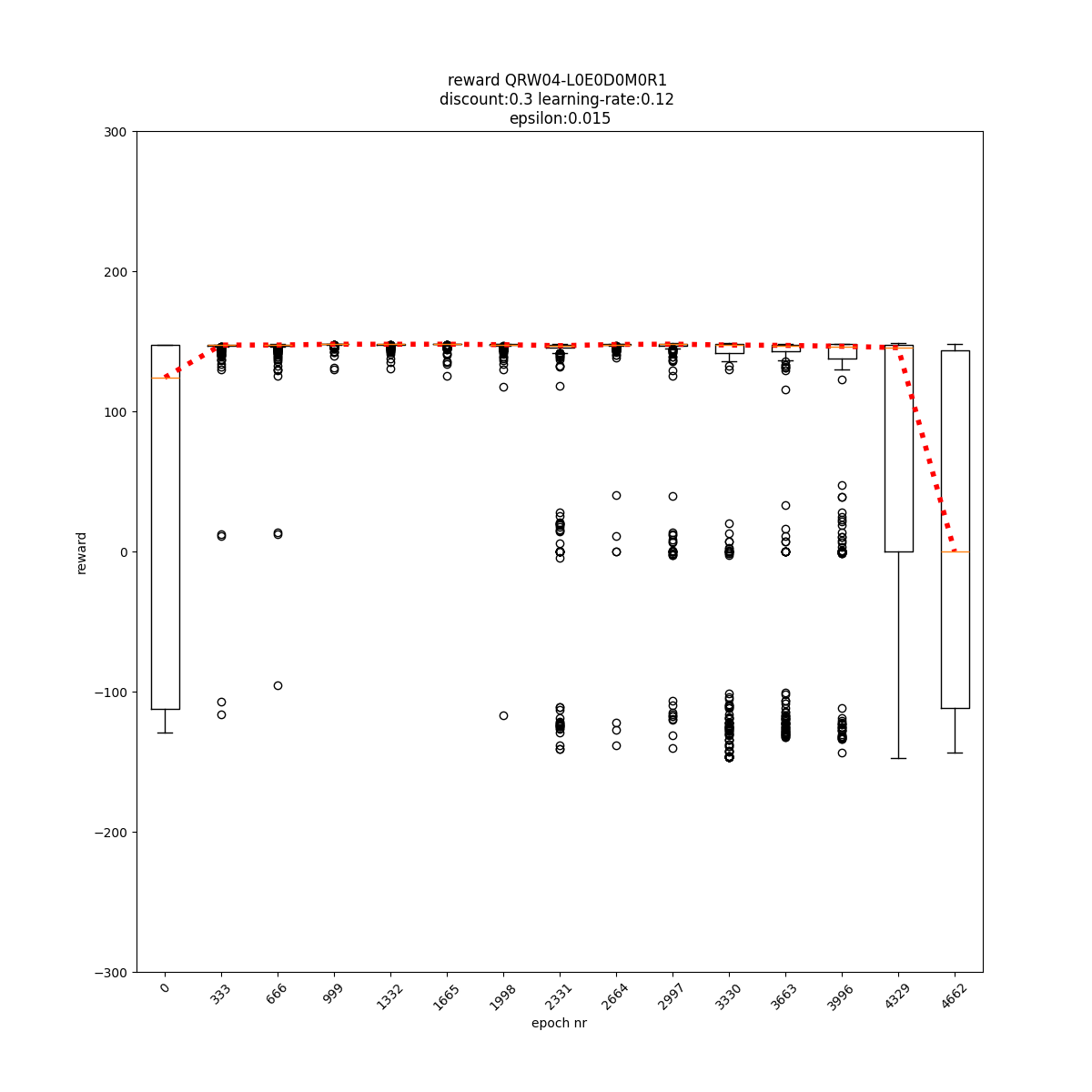

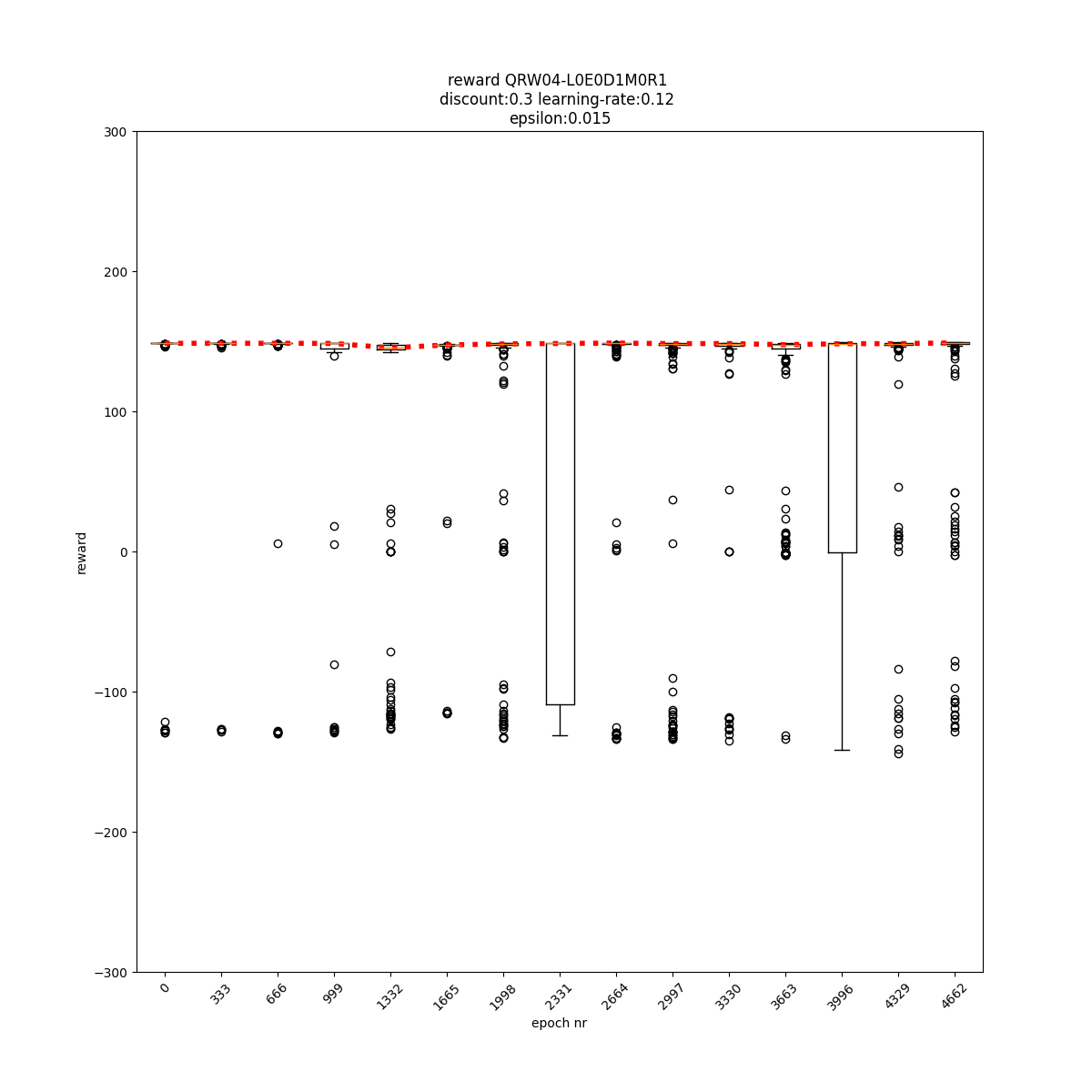

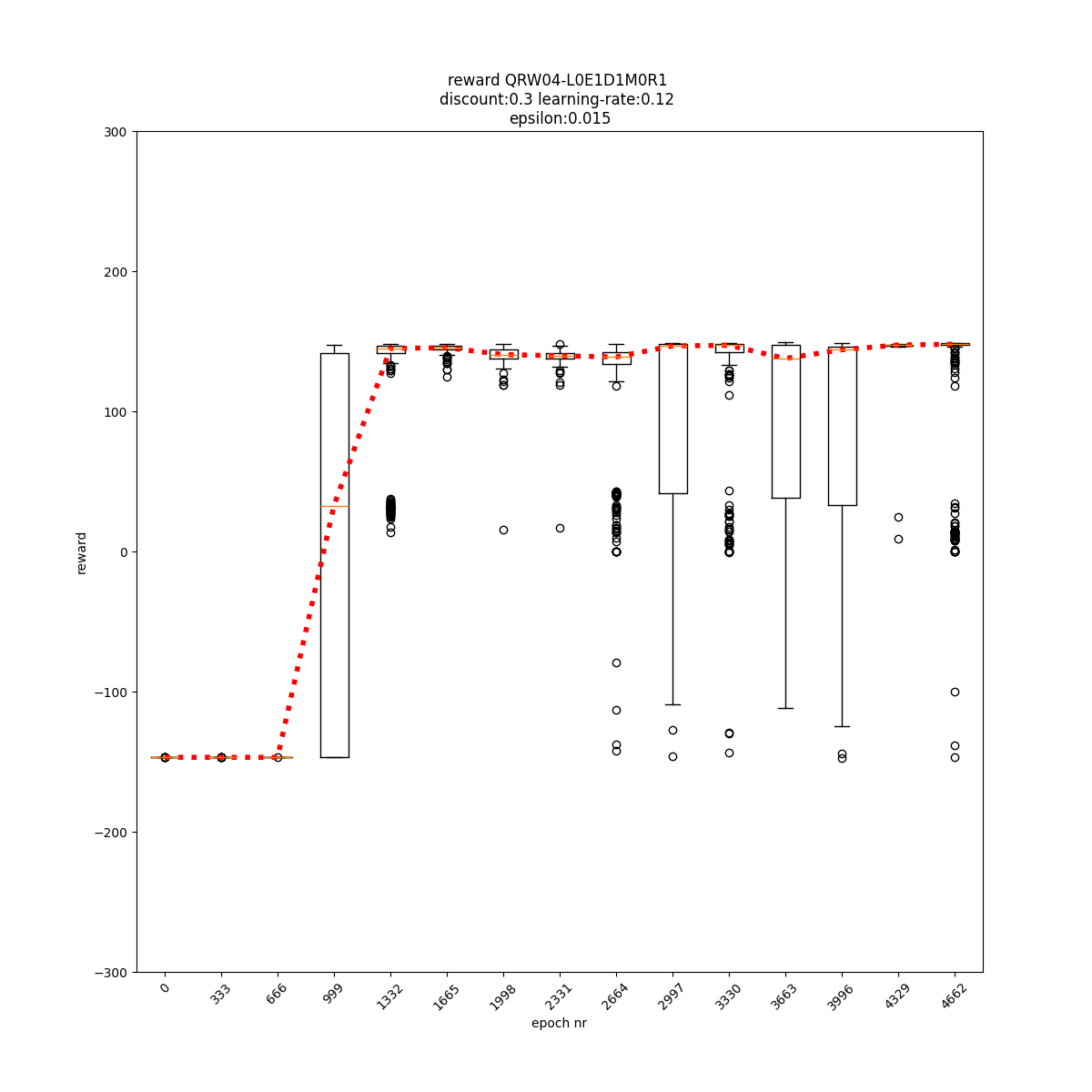

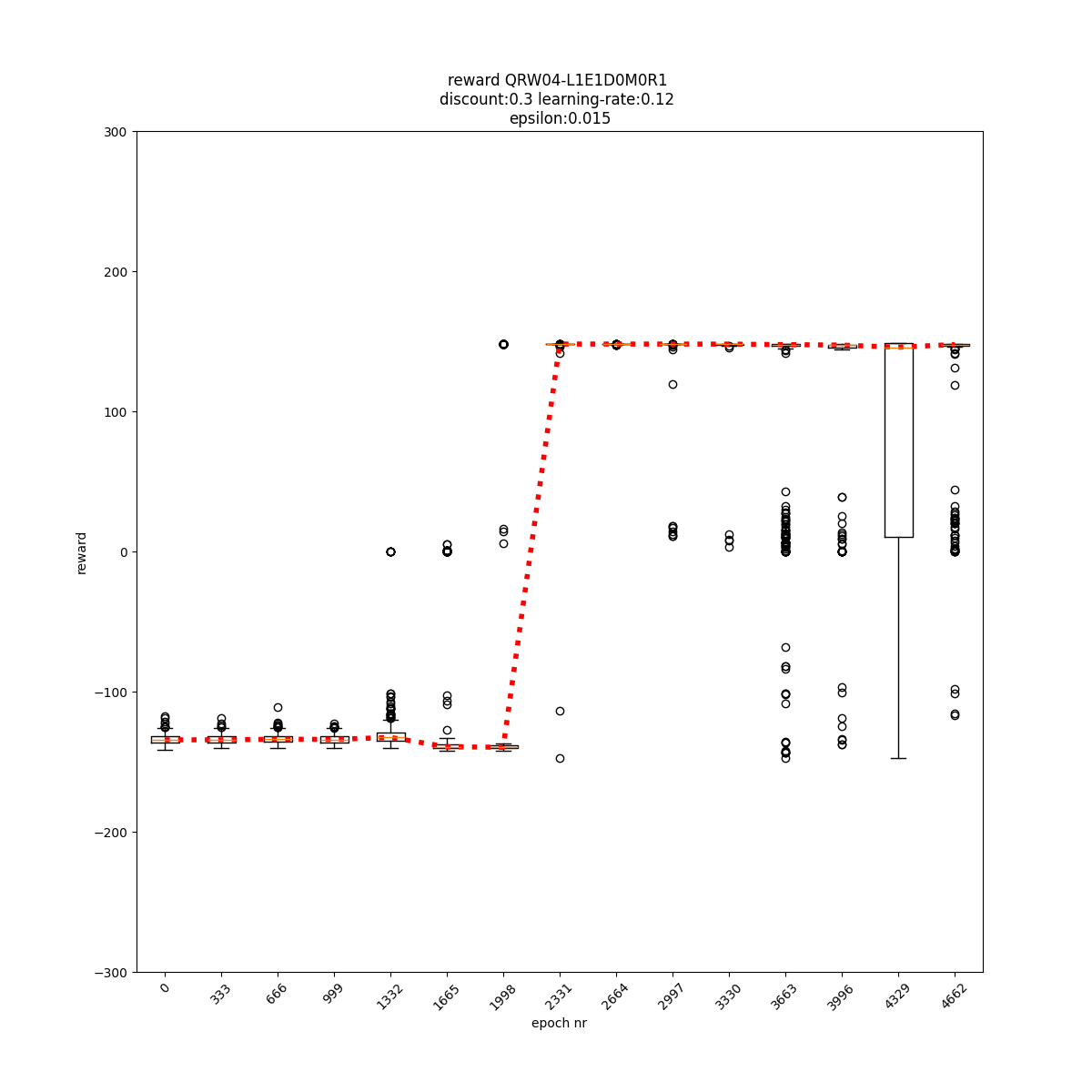

q-values (video 0 to video 11)
