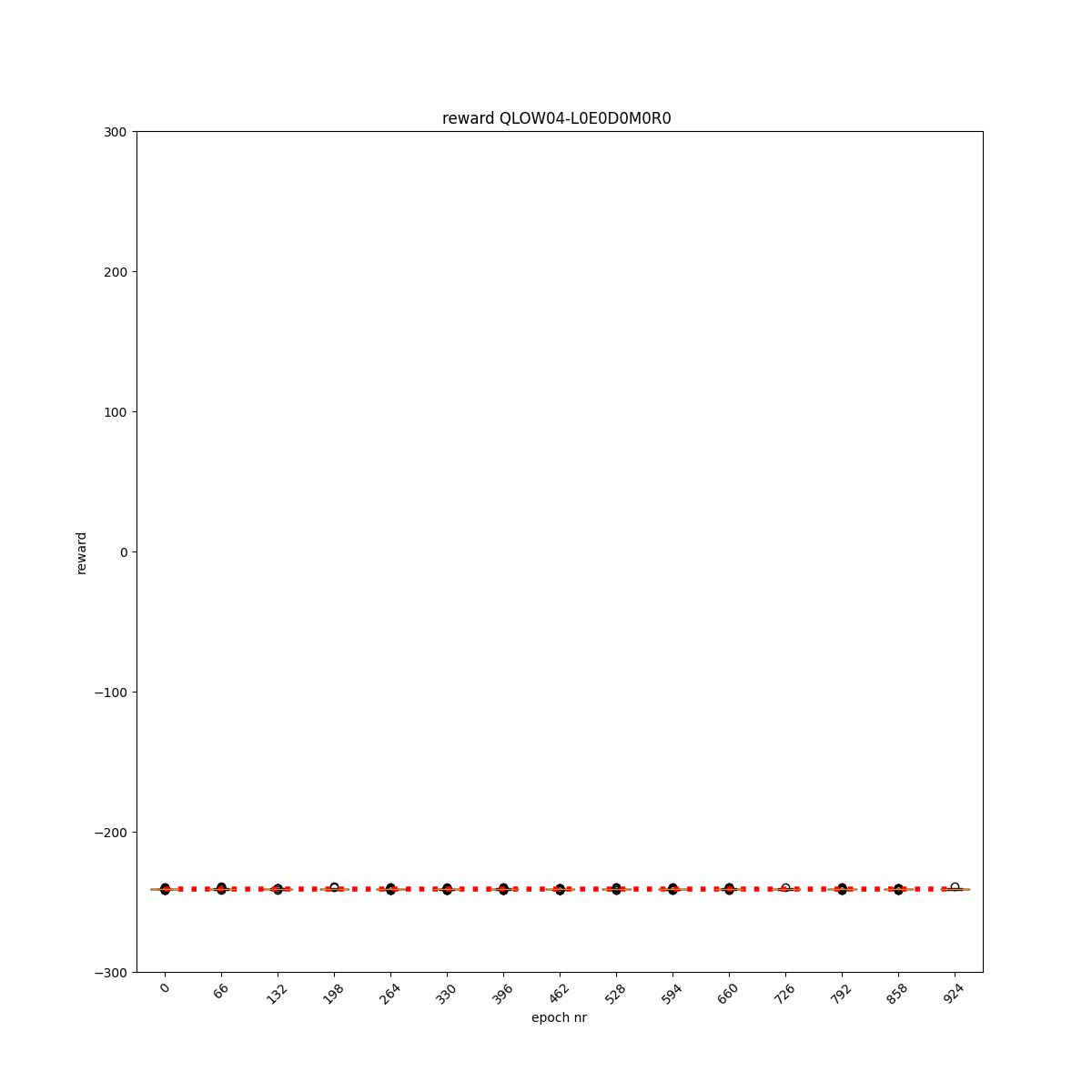

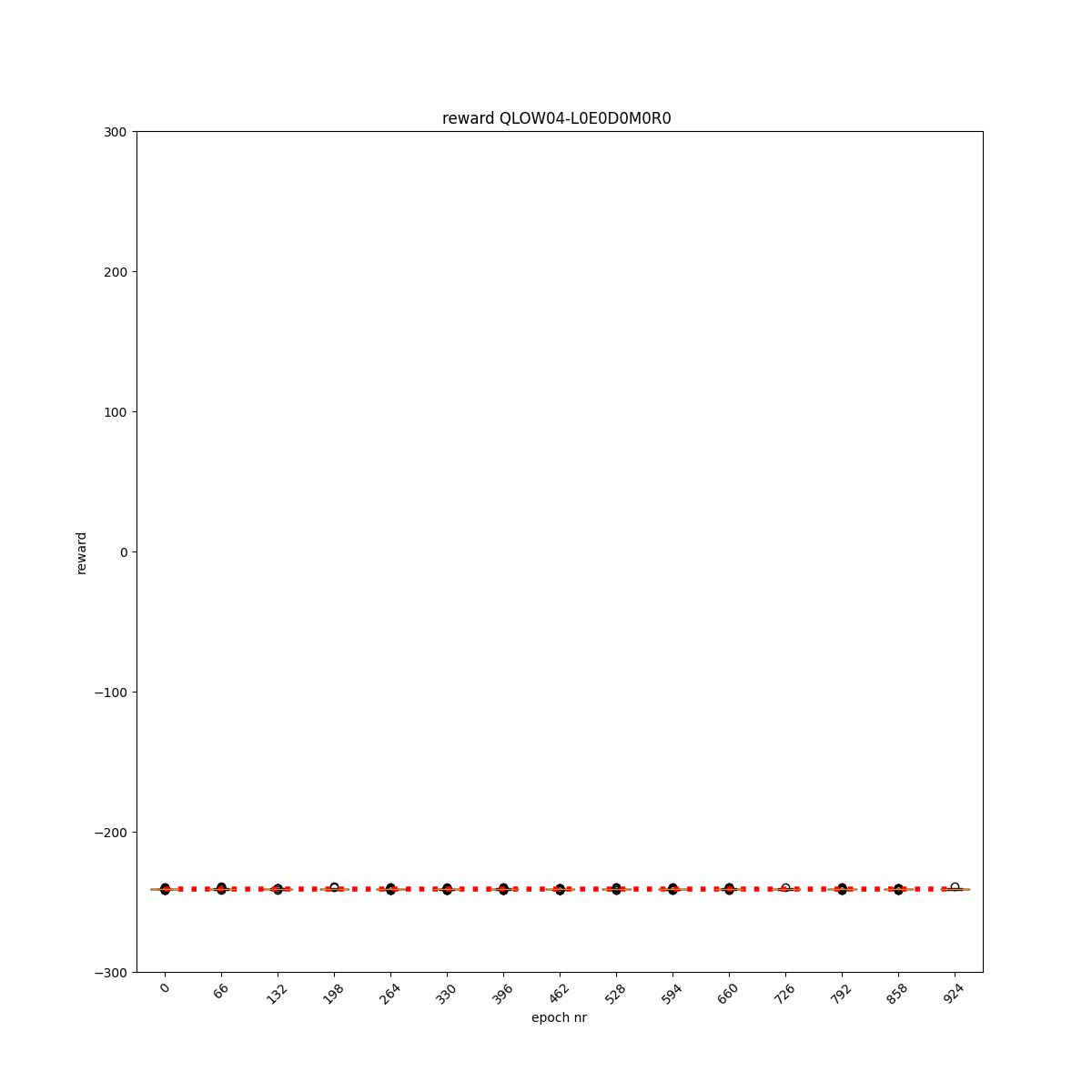

Figure panel L0 E0 D0 M0 R0: two q-values grids, each showing video 0 through video 11.

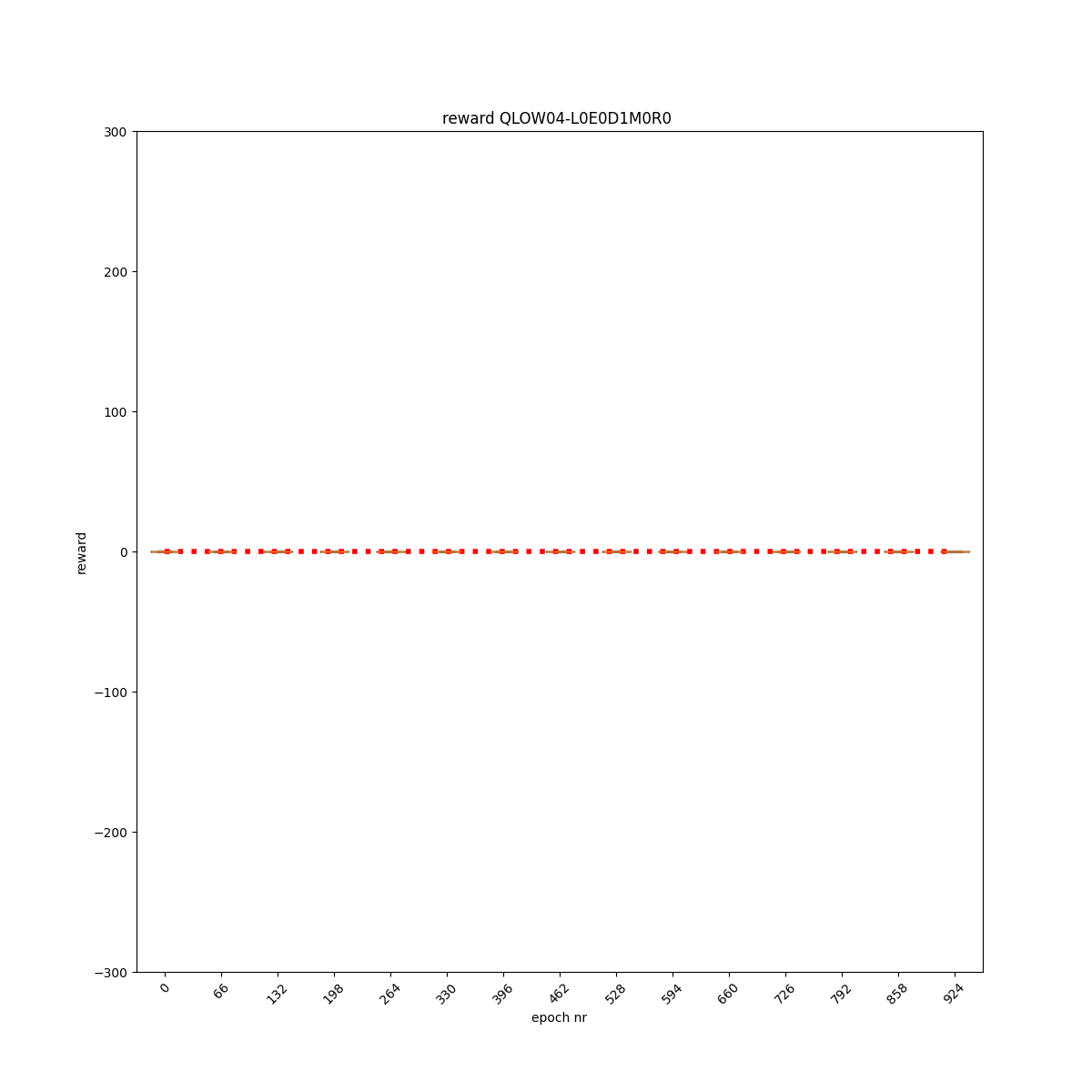

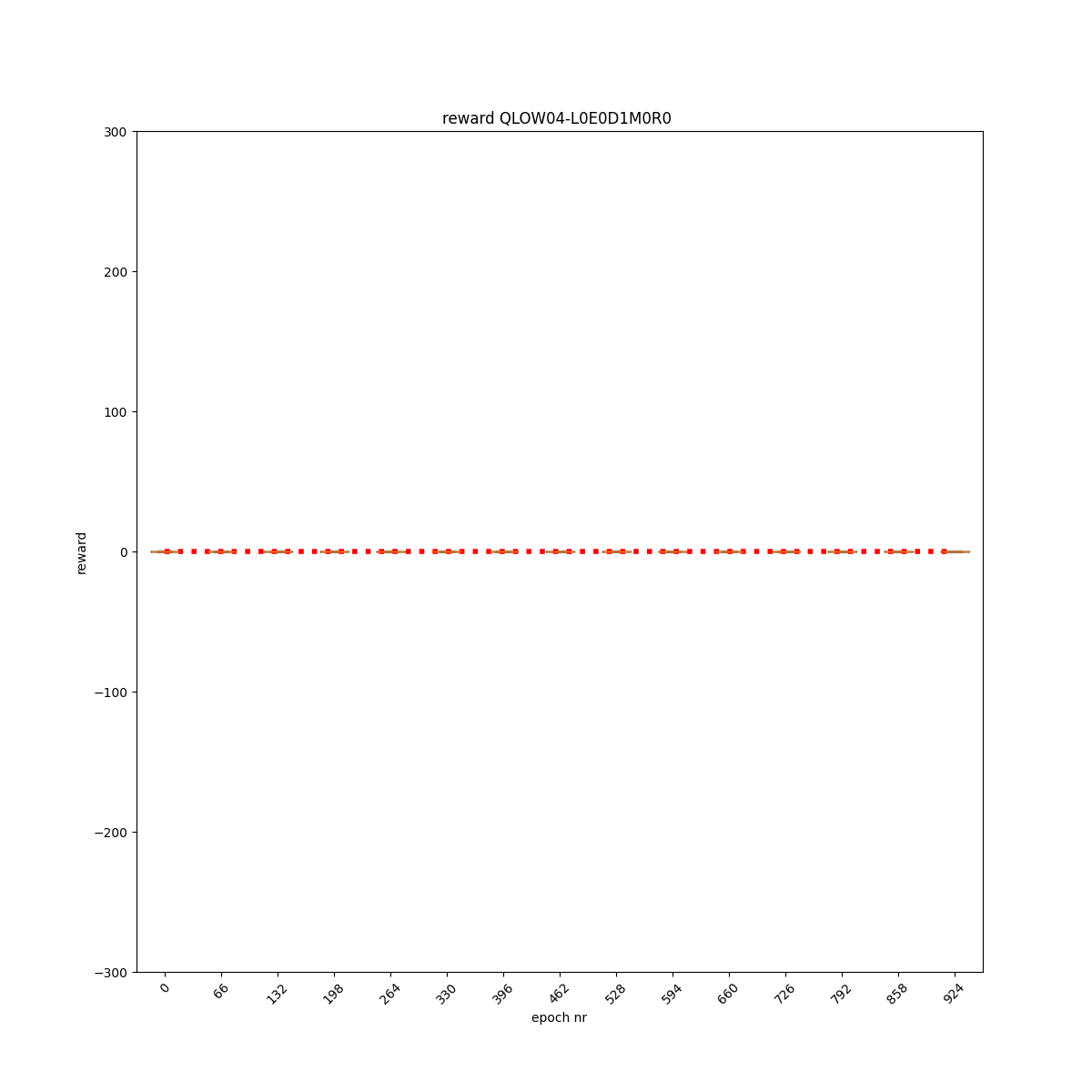

Figure panel L0 E0 D1 M0 R0: two q-values grids, each showing video 0 through video 11.

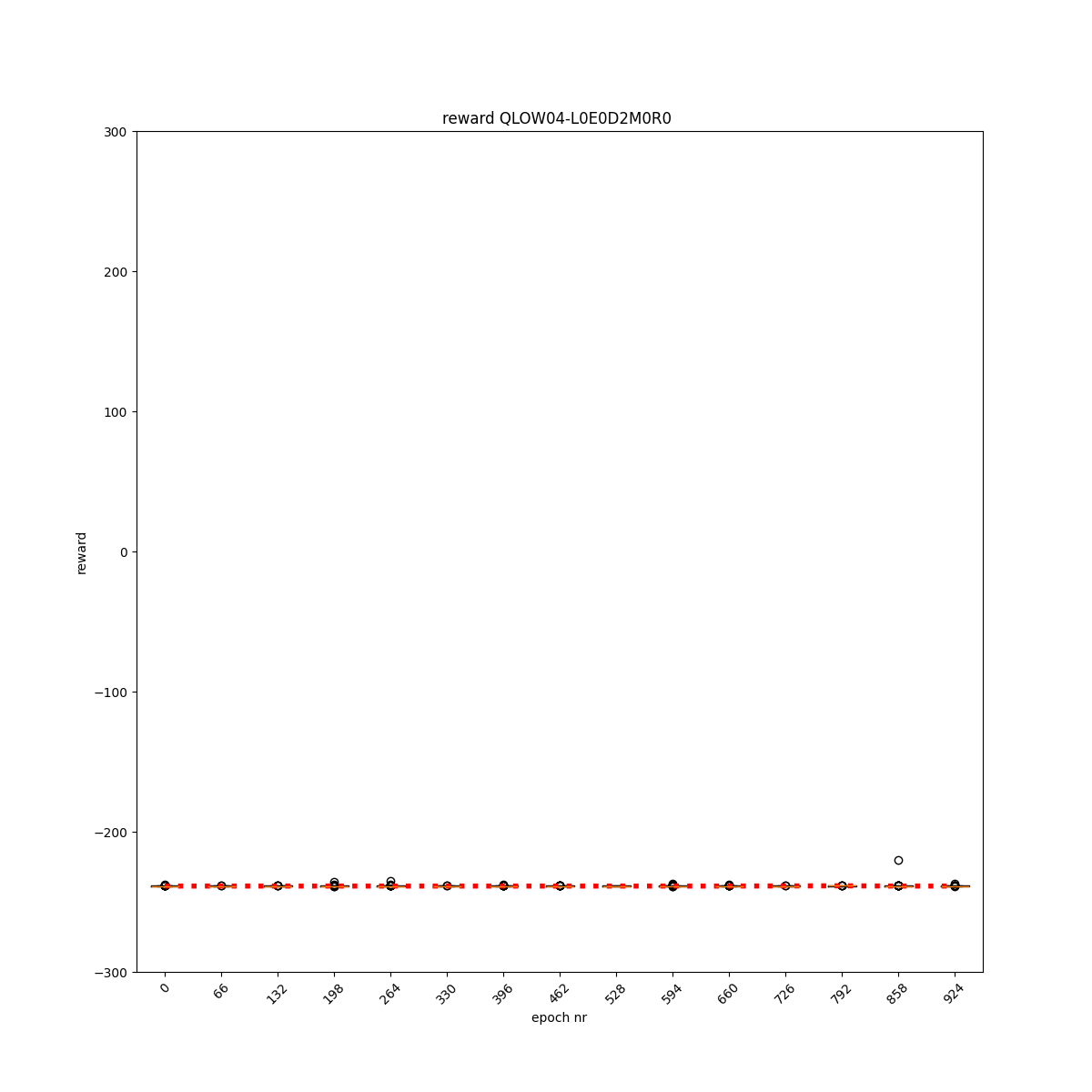

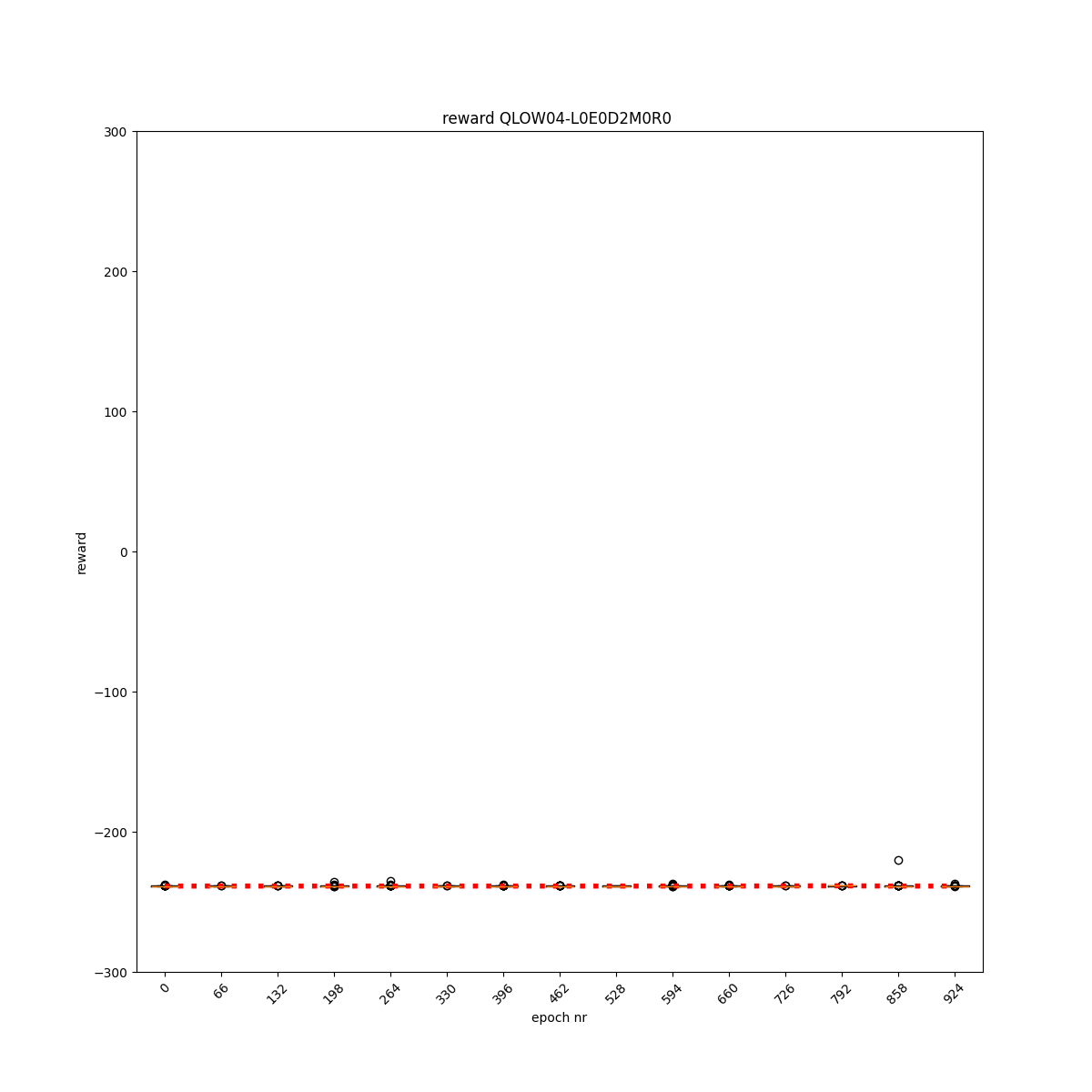

Figure panel L0 E0 D2 M0 R0: two q-values grids, each showing video 0 through video 11.

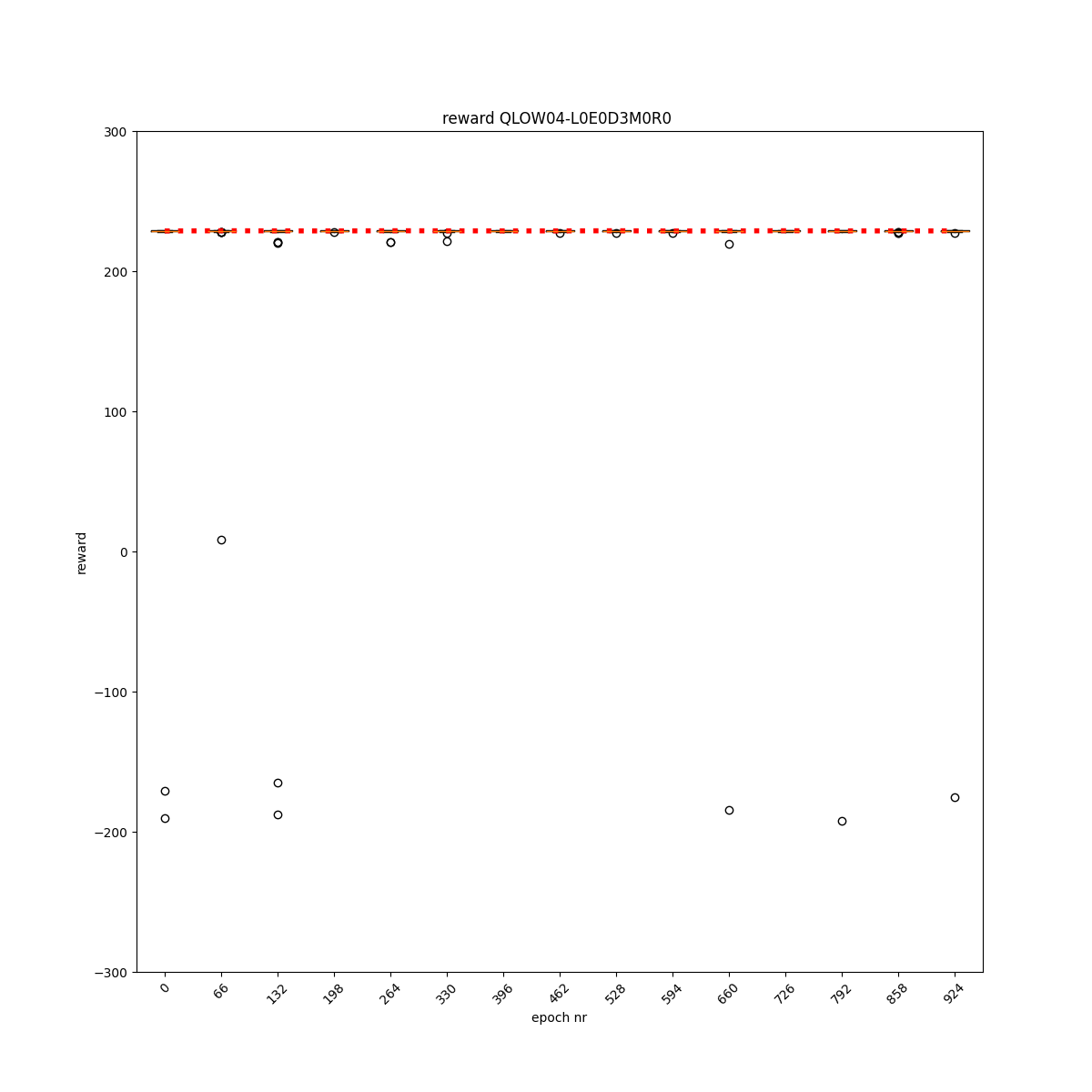

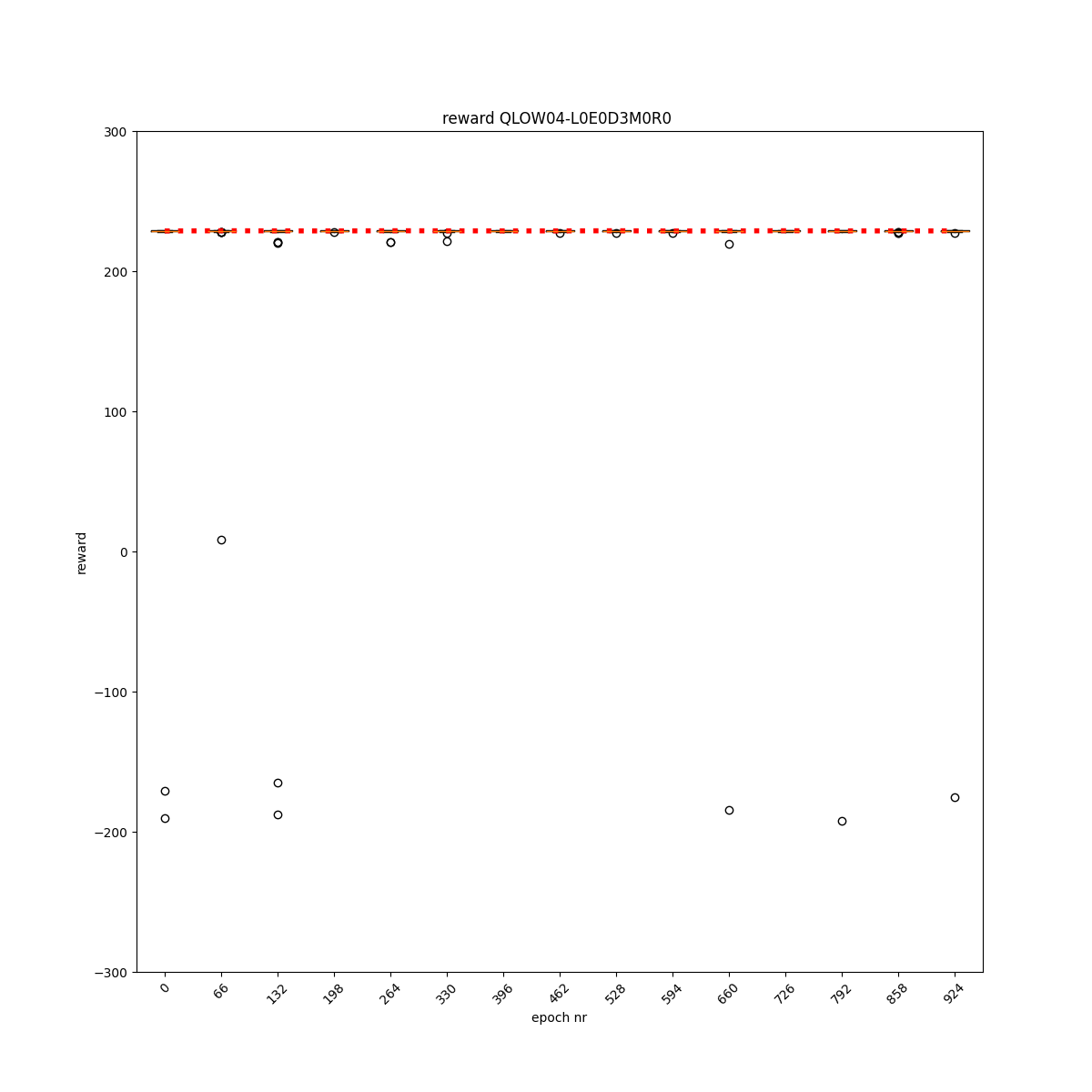

Figure panel L0 E0 D3 M0 R0: two q-values grids, each showing video 0 through video 11.

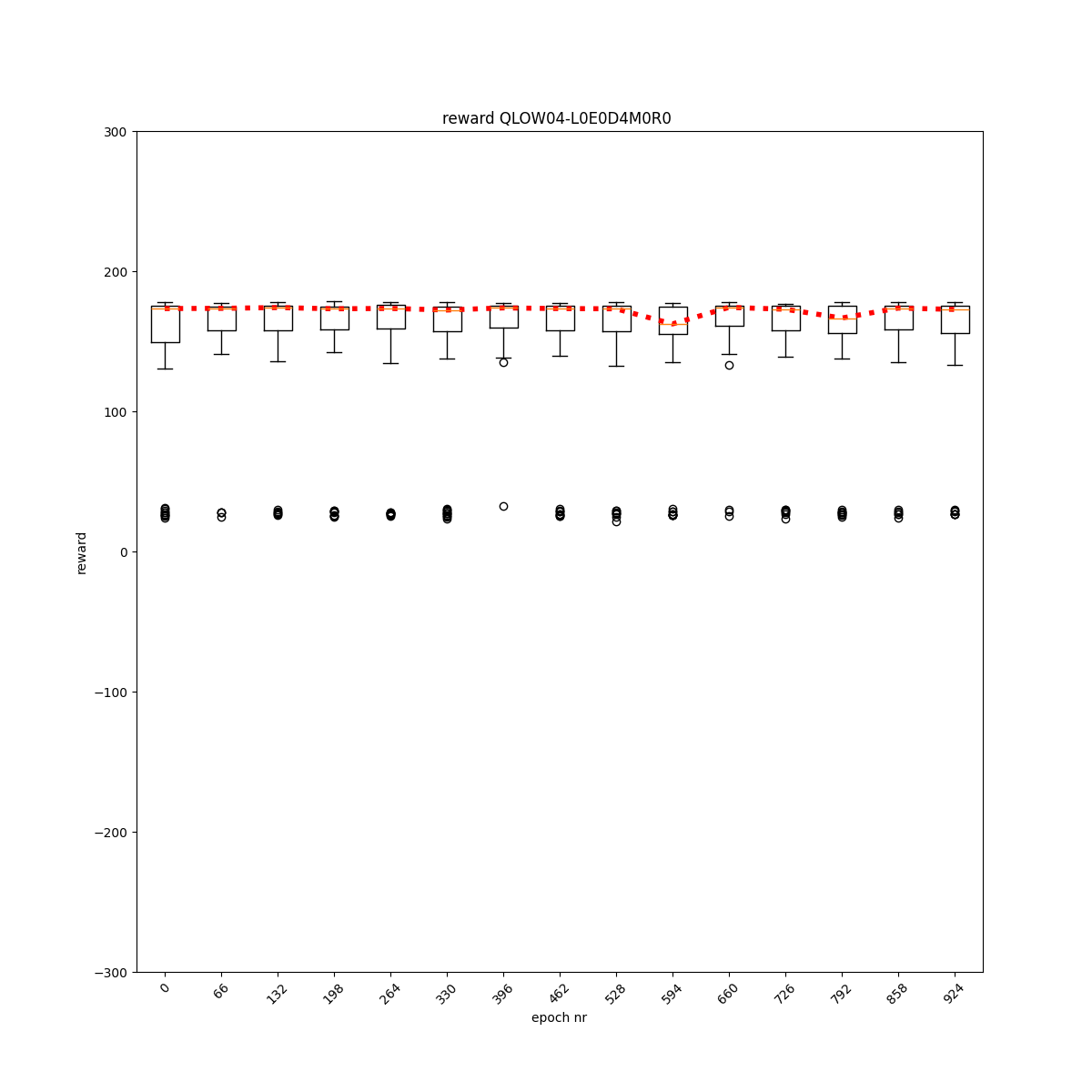

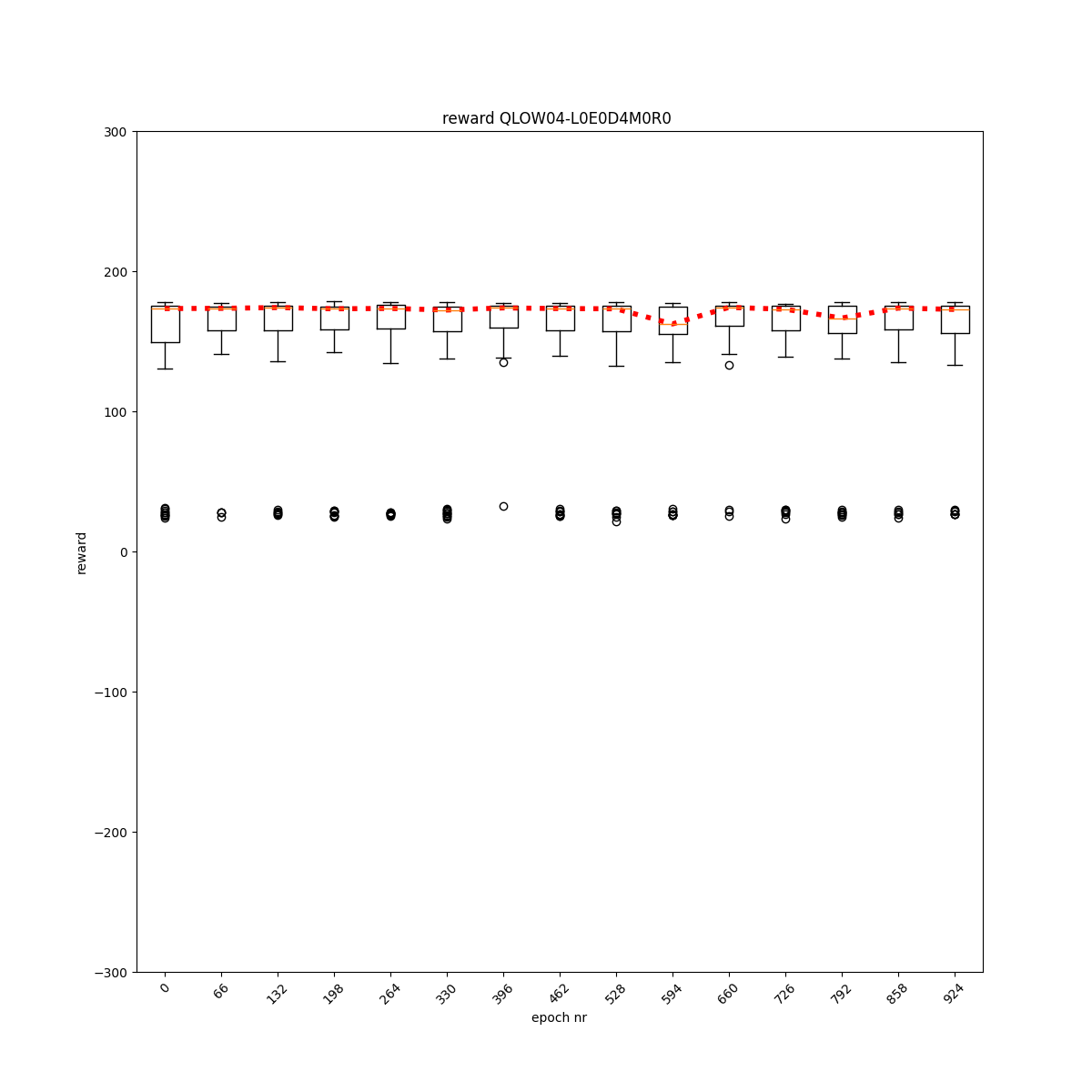

Figure panel L0 E0 D4 M0 R0: two q-values grids, each showing video 0 through video 11.

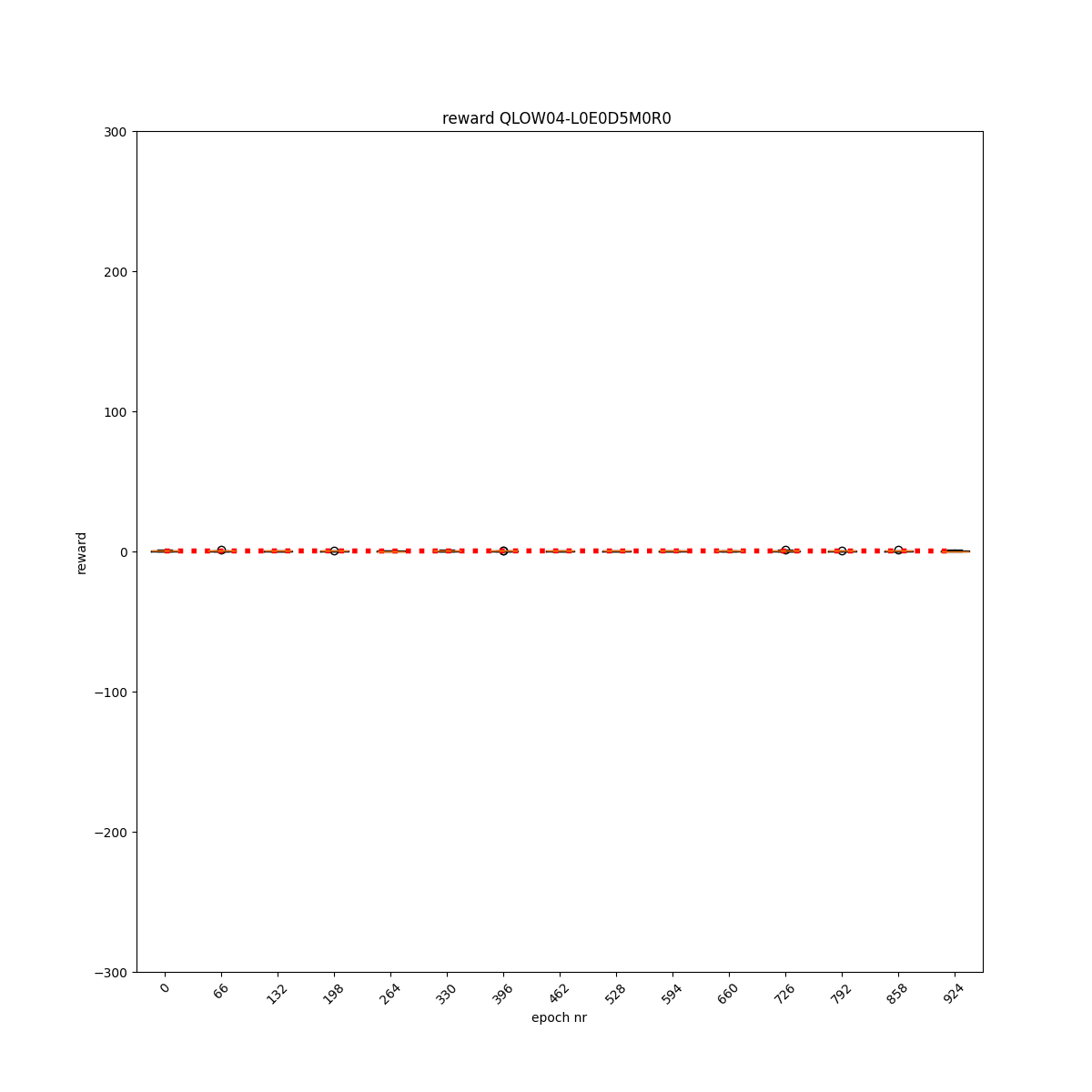

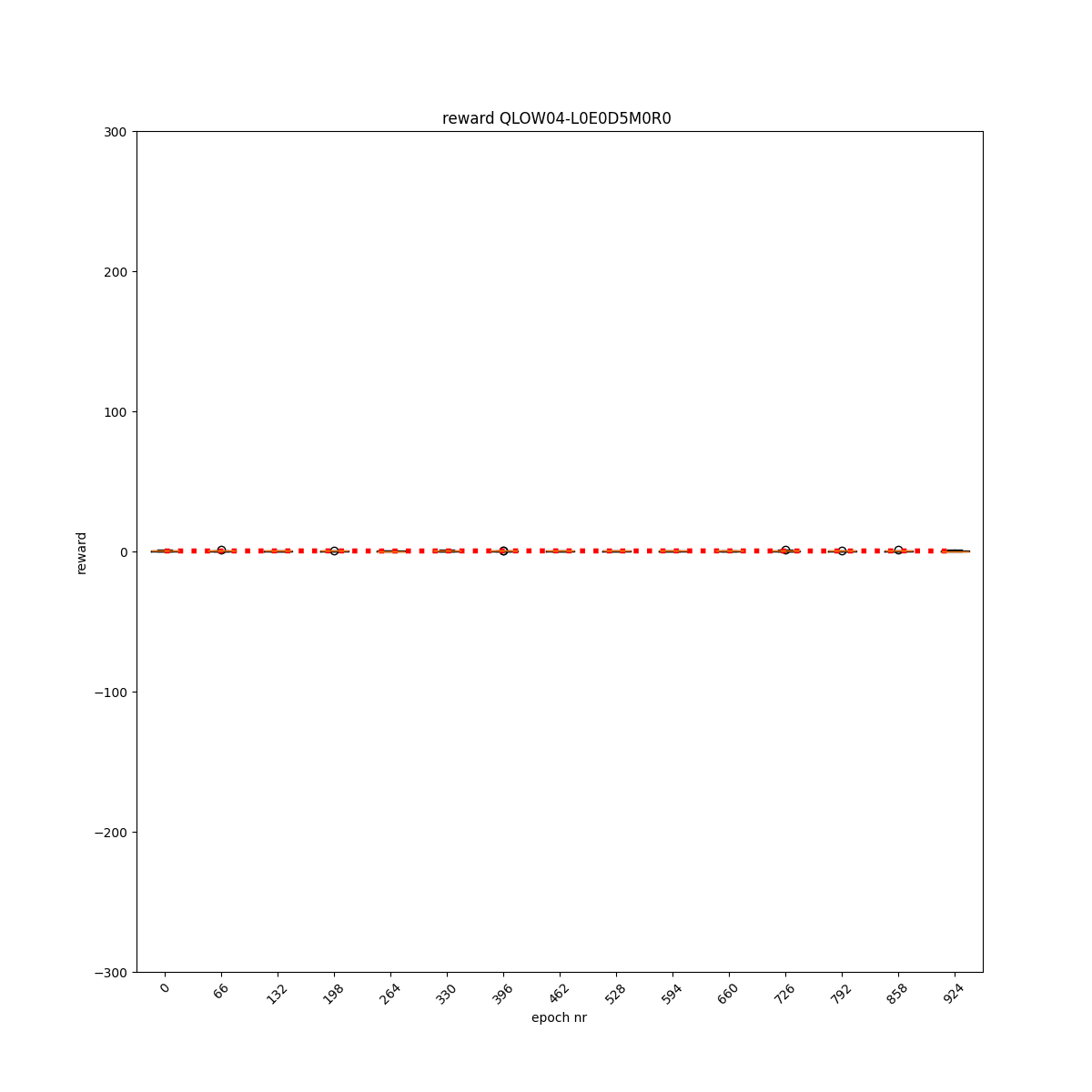

Figure panel L0 E0 D5 M0 R0: two q-values grids, each showing video 0 through video 11.

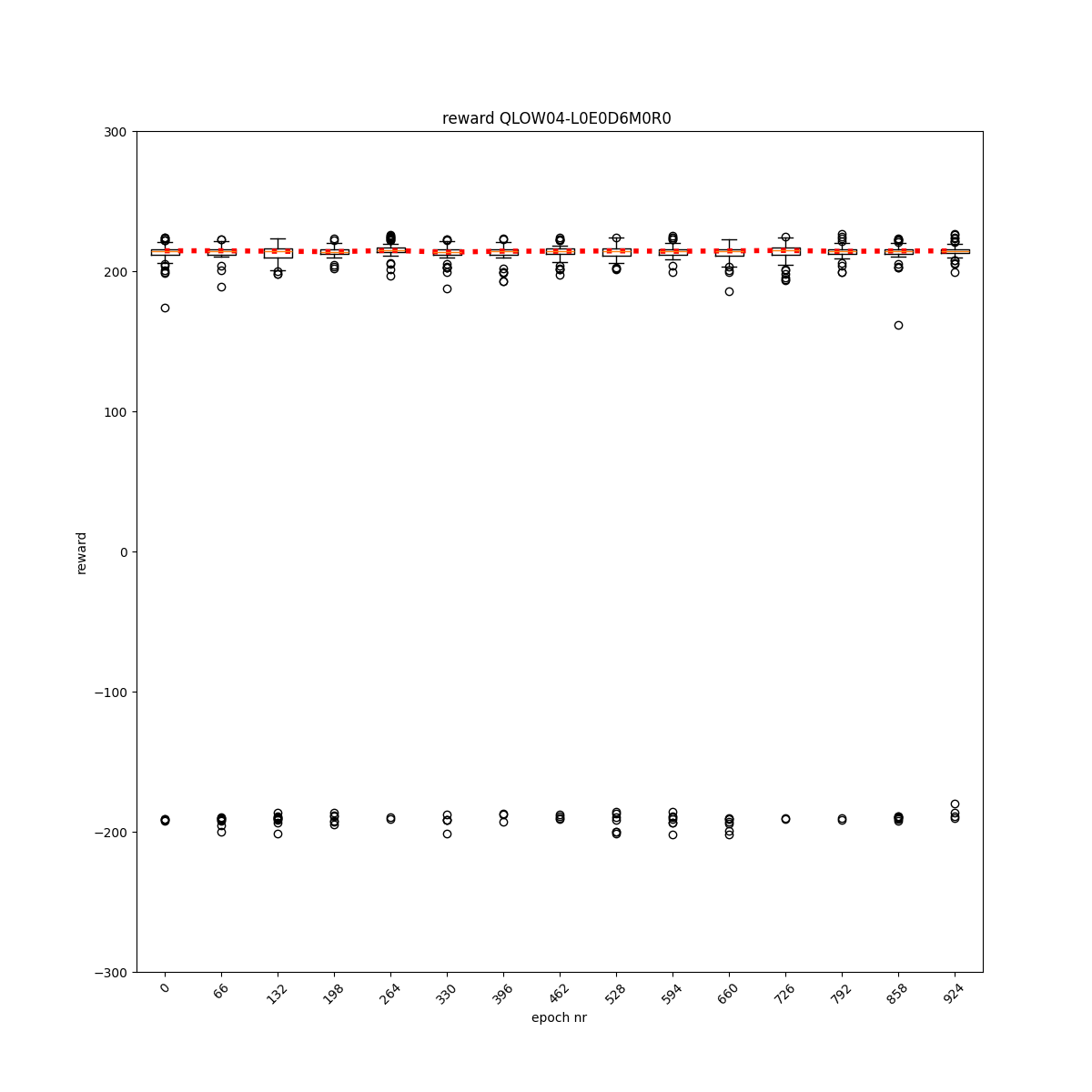

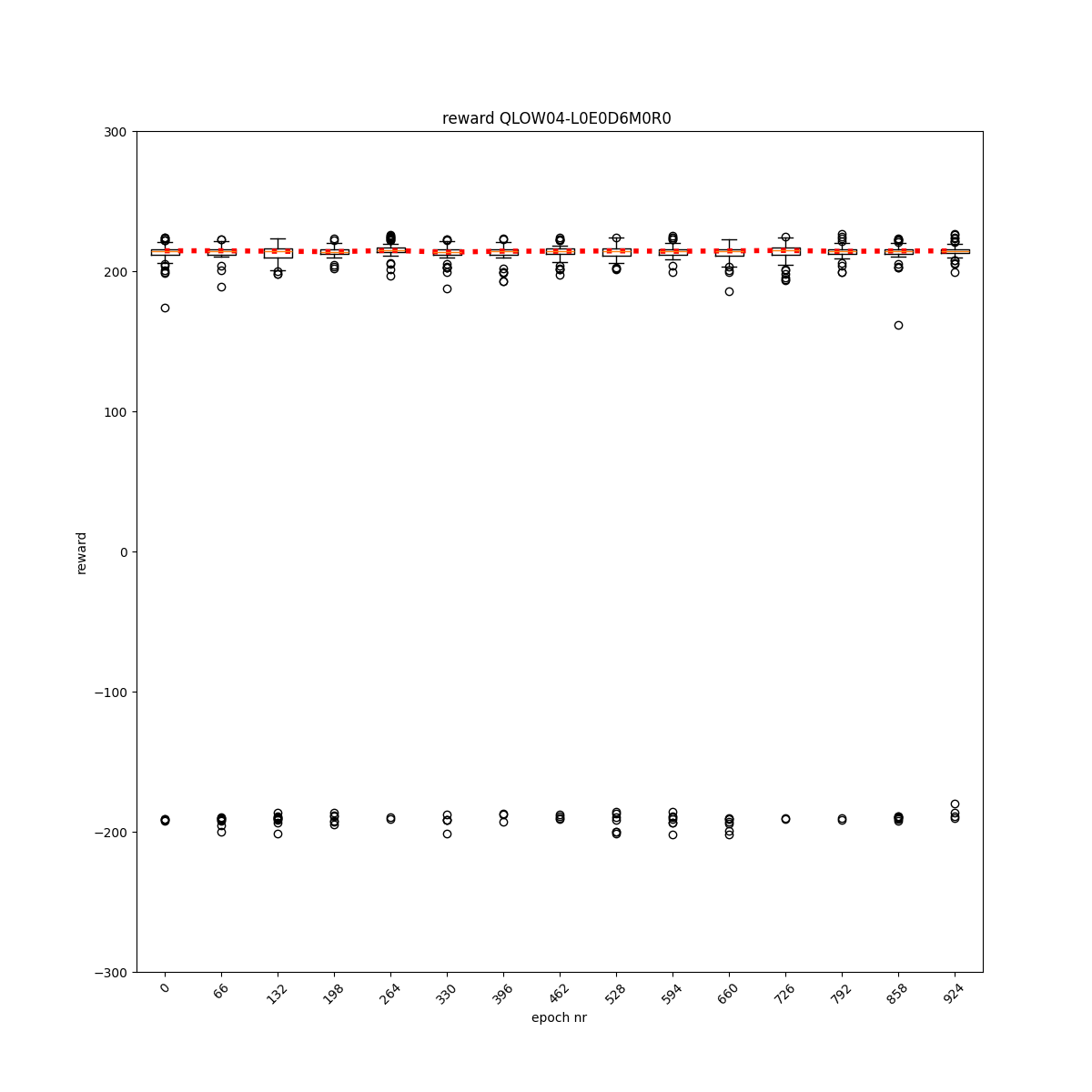

Figure panel L0 E0 D6 M0 R0: two q-values grids, each showing video 0 through video 11.

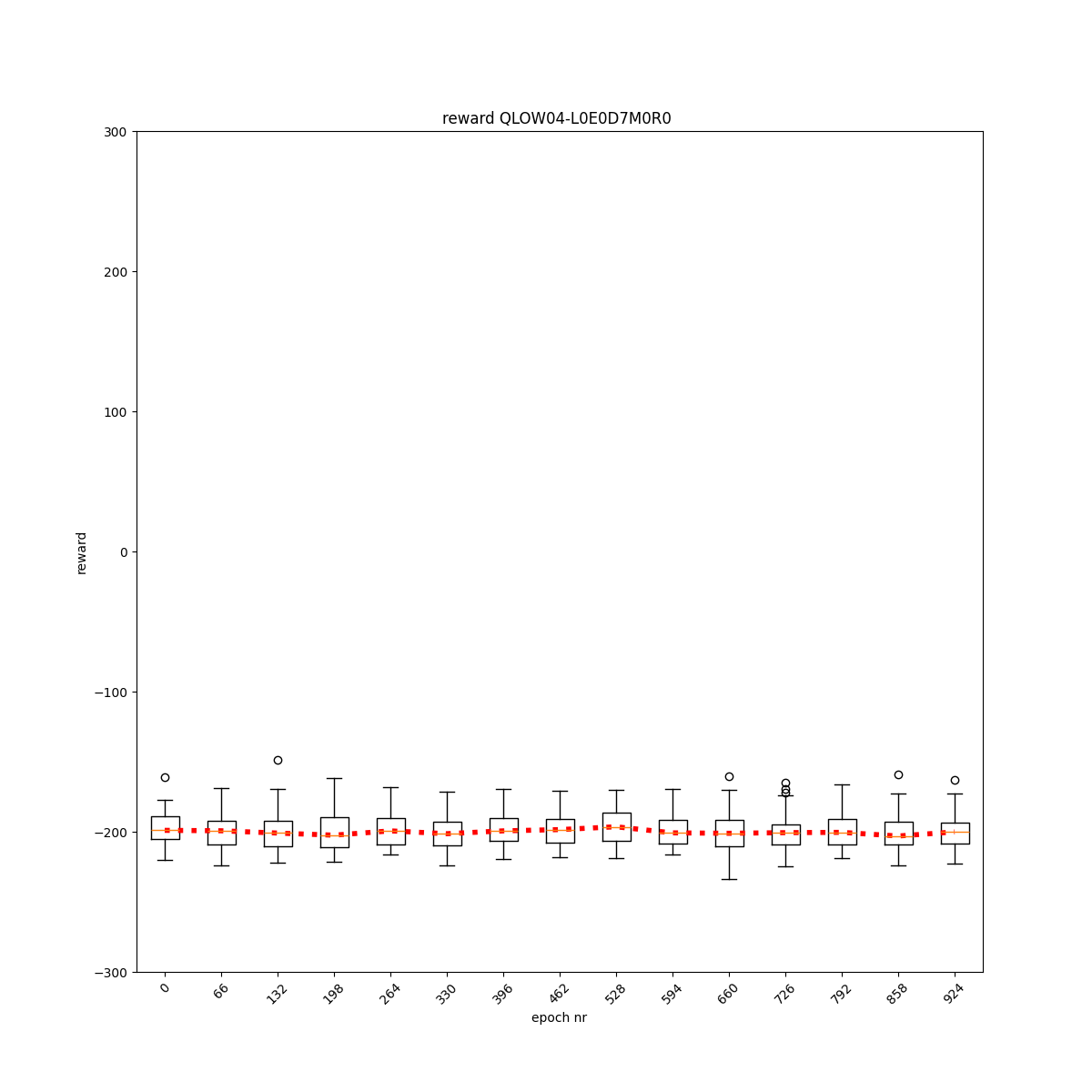

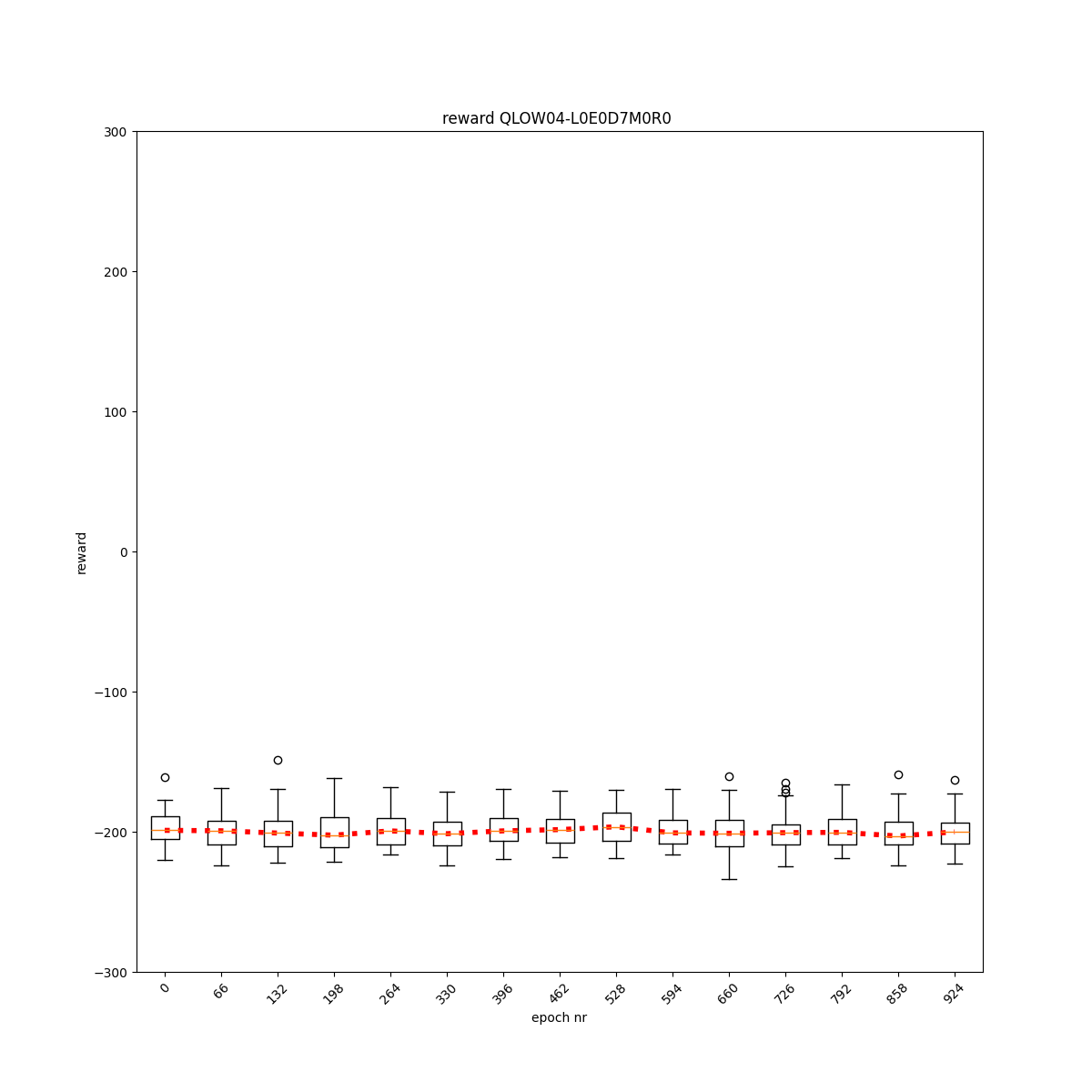

Figure panel L0 E0 D7 M0 R0: two q-values grids, each showing video 0 through video 11.

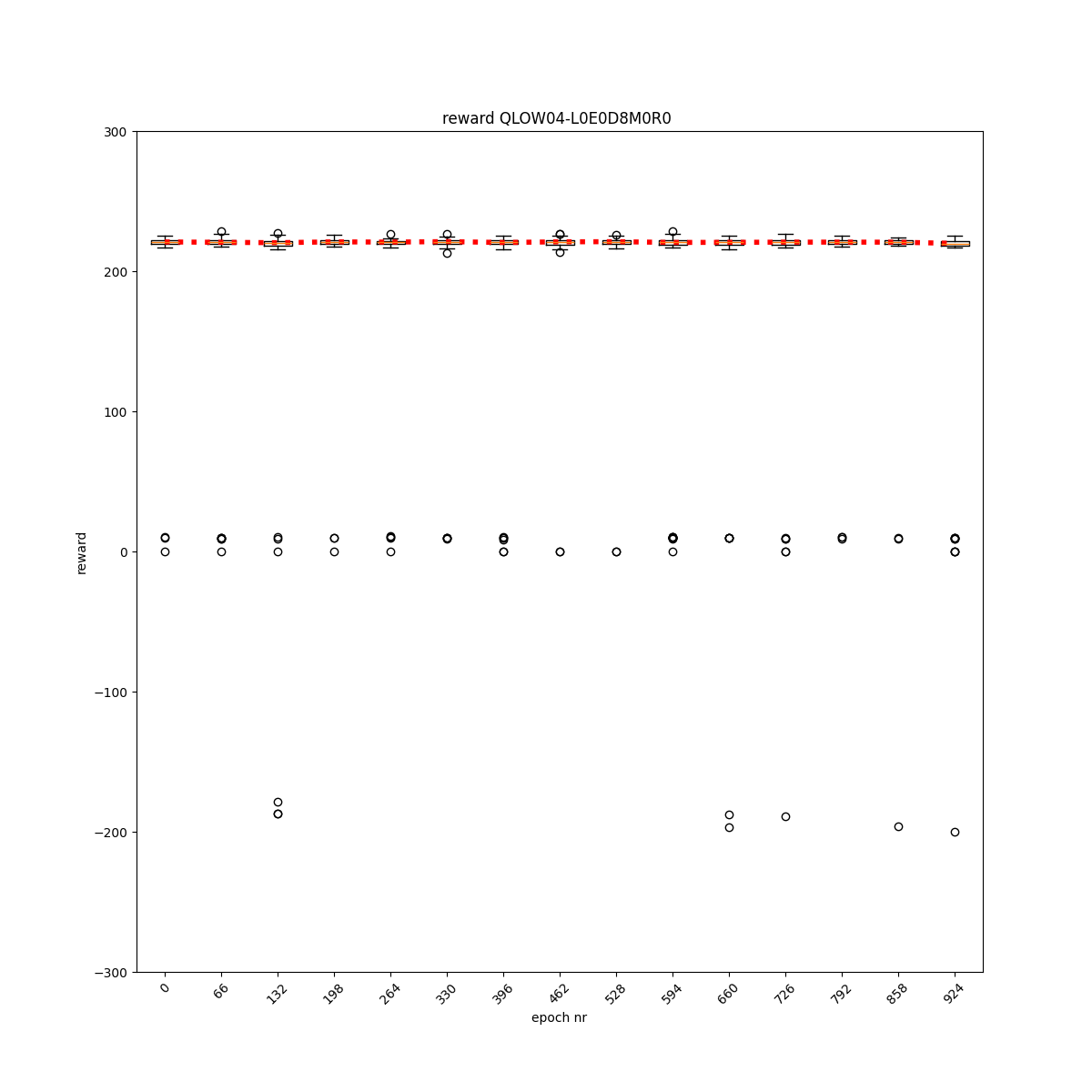

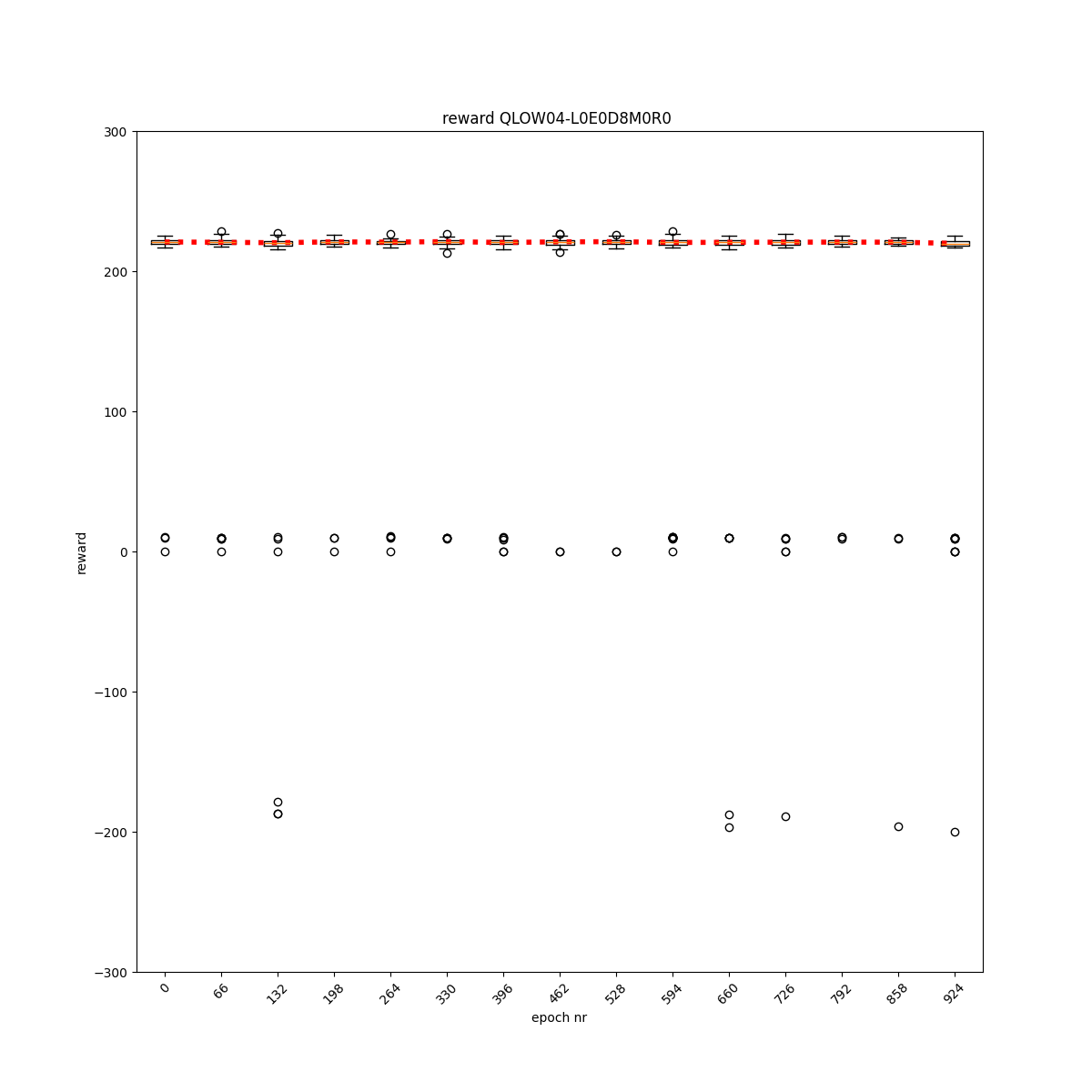

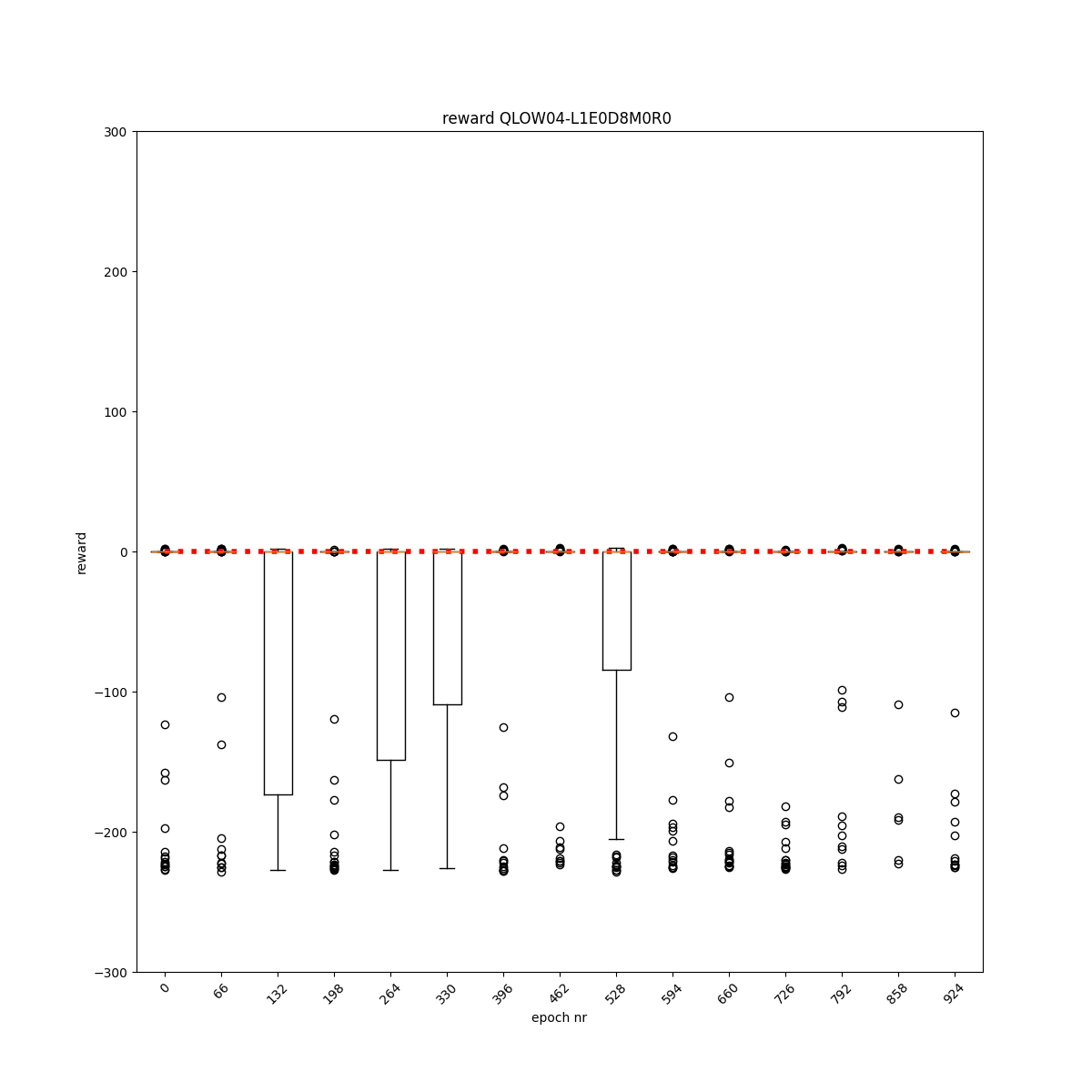

Figure panel L0 E0 D8 M0 R0: two q-values grids, each showing video 0 through video 11.

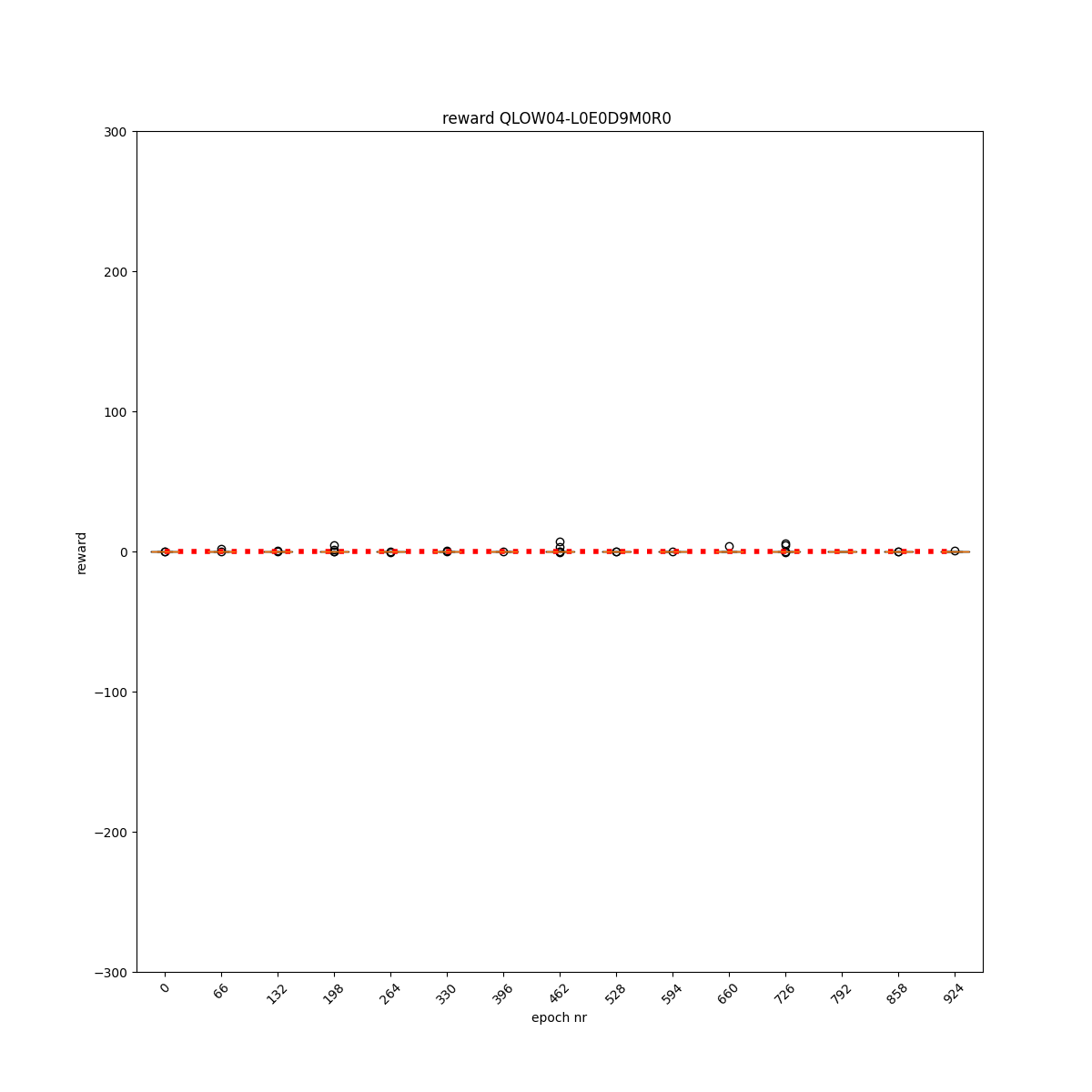

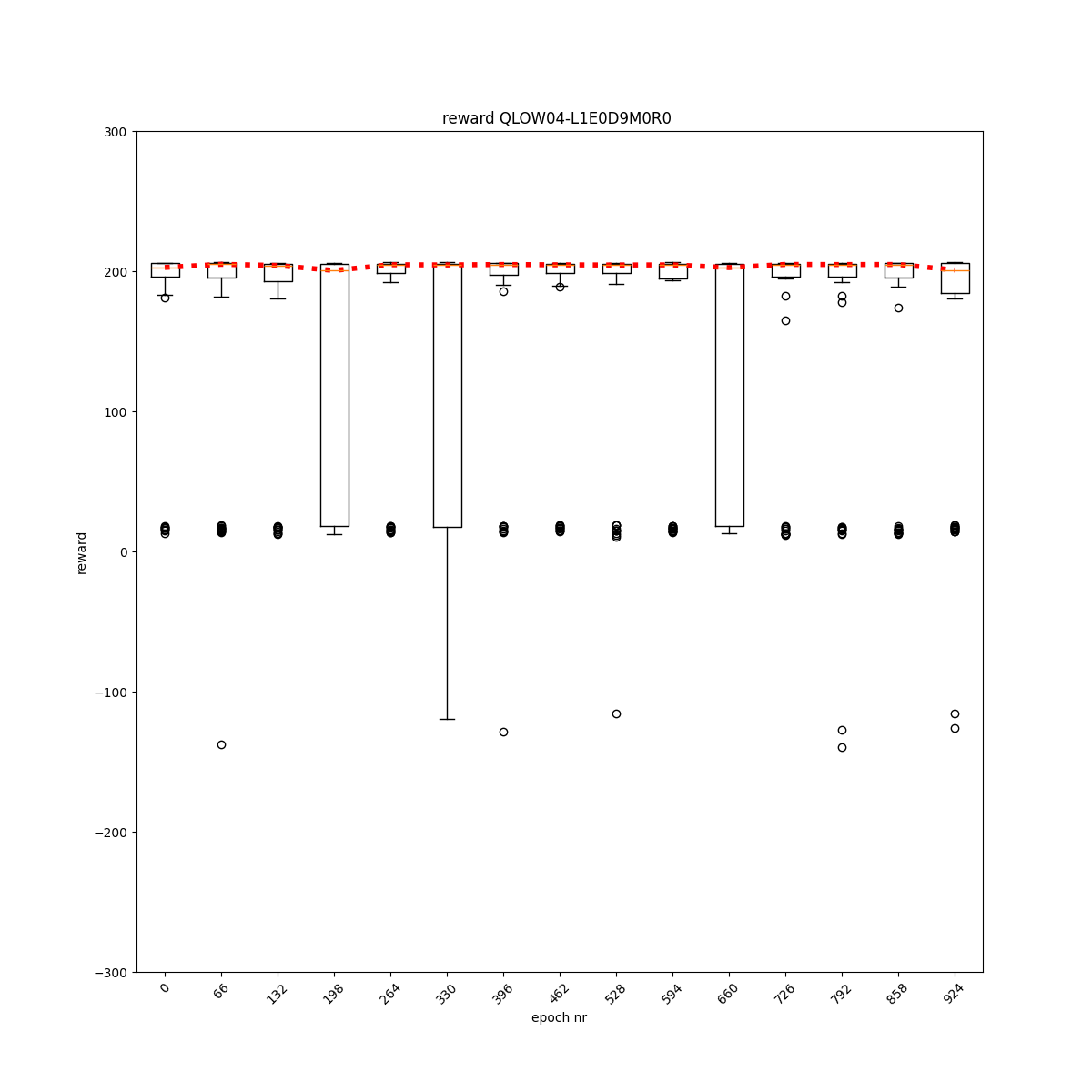

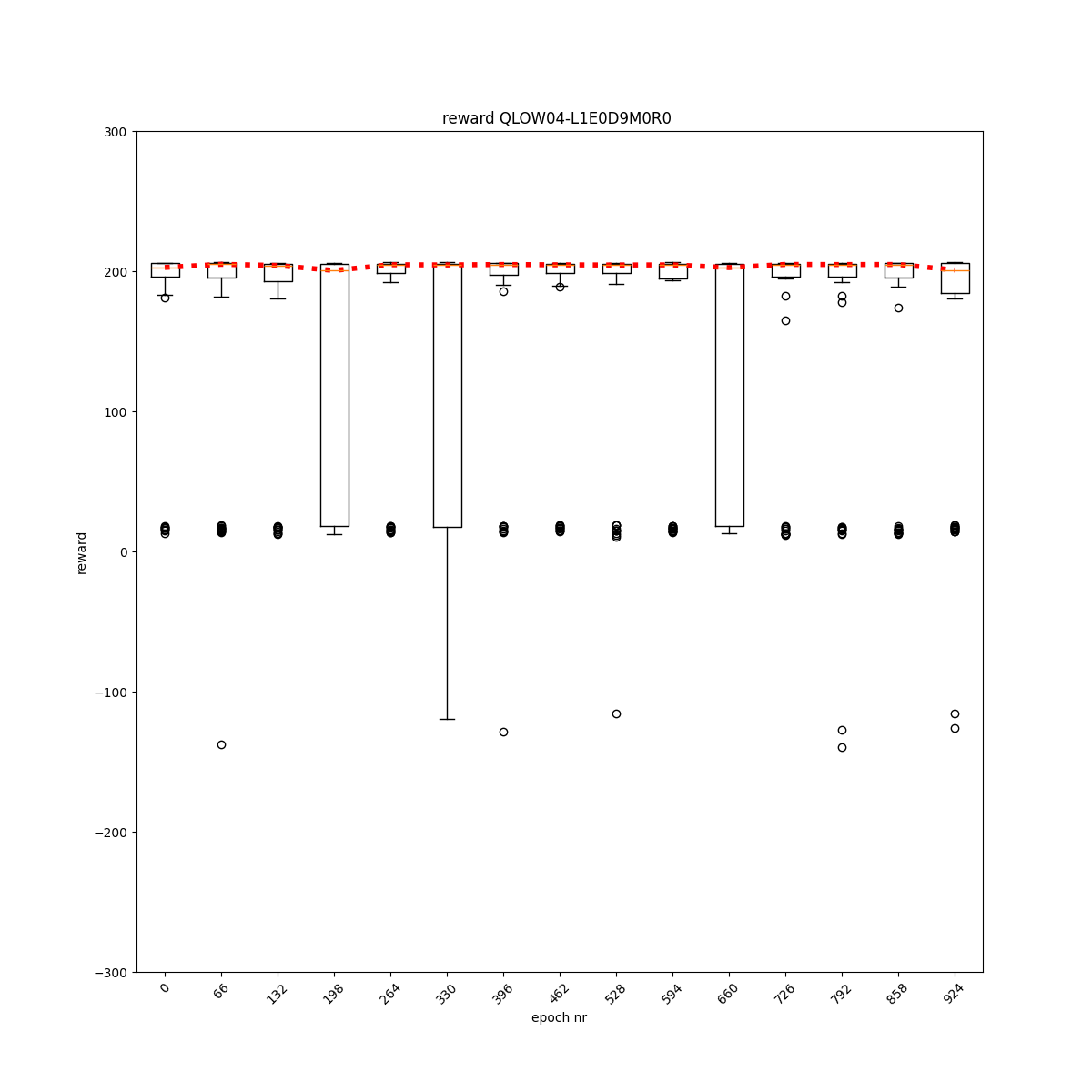

Figure panel L0 E0 D9 M0 R0: two q-values grids, each showing video 0 through video 11.

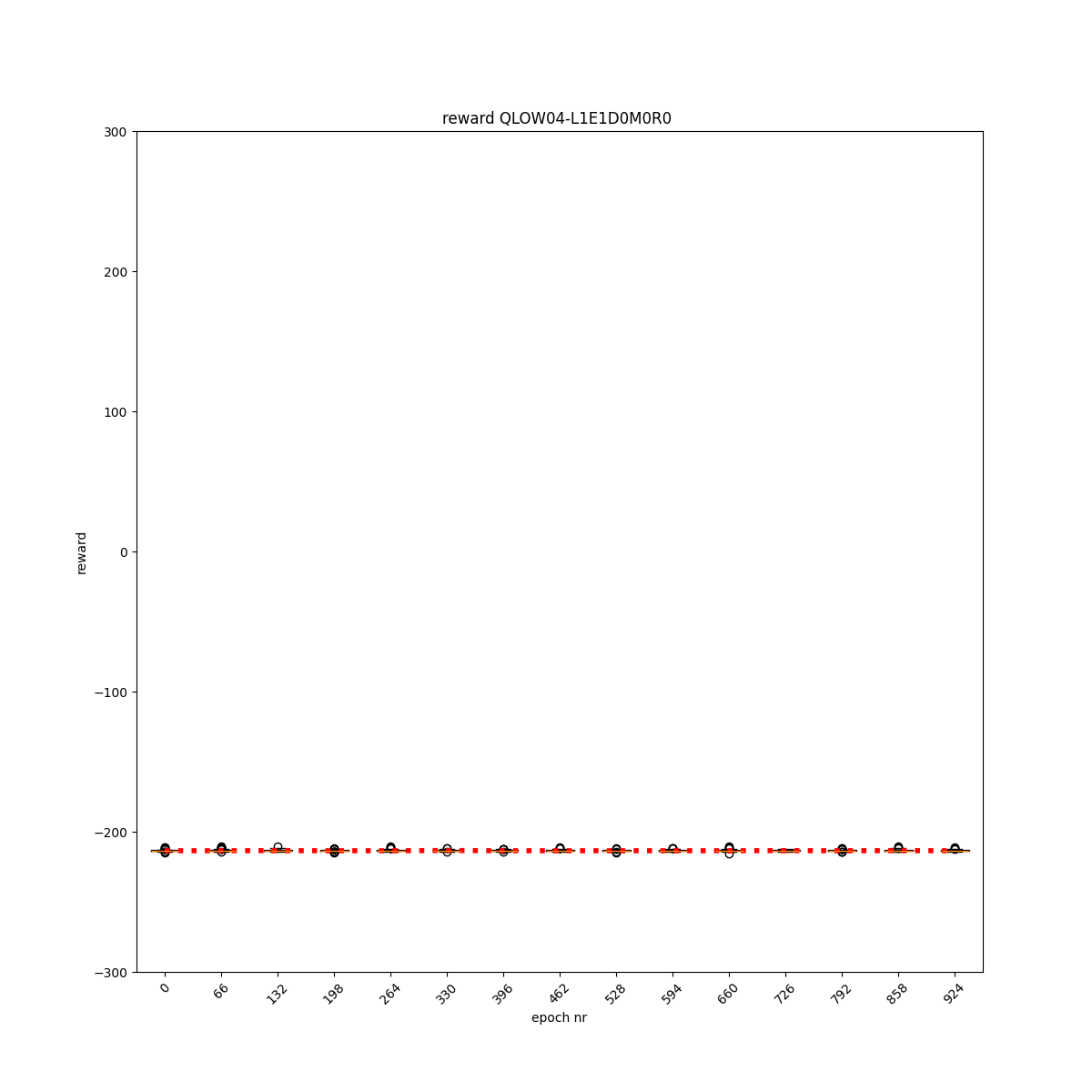

Figure panel L0 E1 D0 M0 R0: two q-values grids, each showing video 0 through video 11.

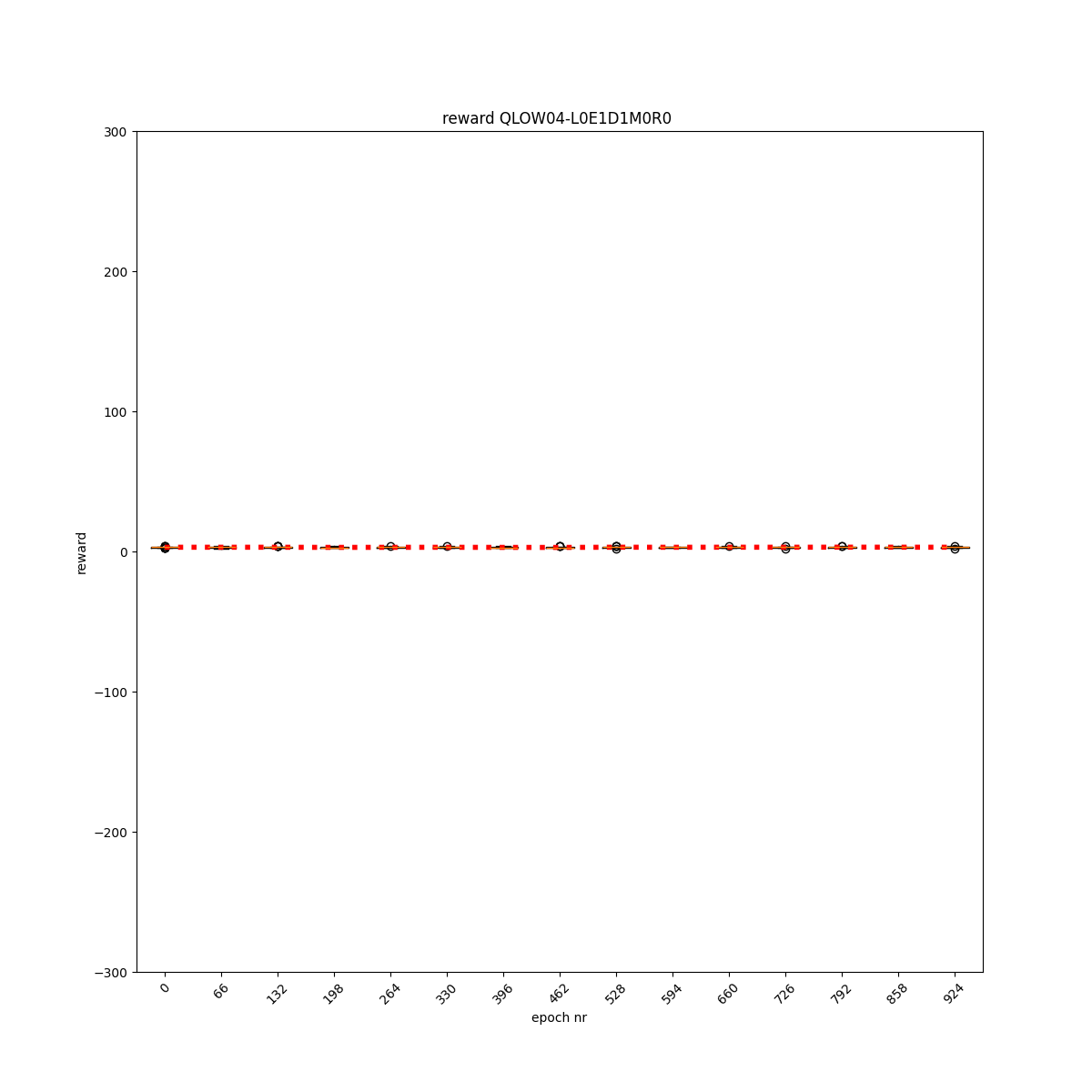

Figure panel L0 E1 D1 M0 R0: two q-values grids, each showing video 0 through video 11.

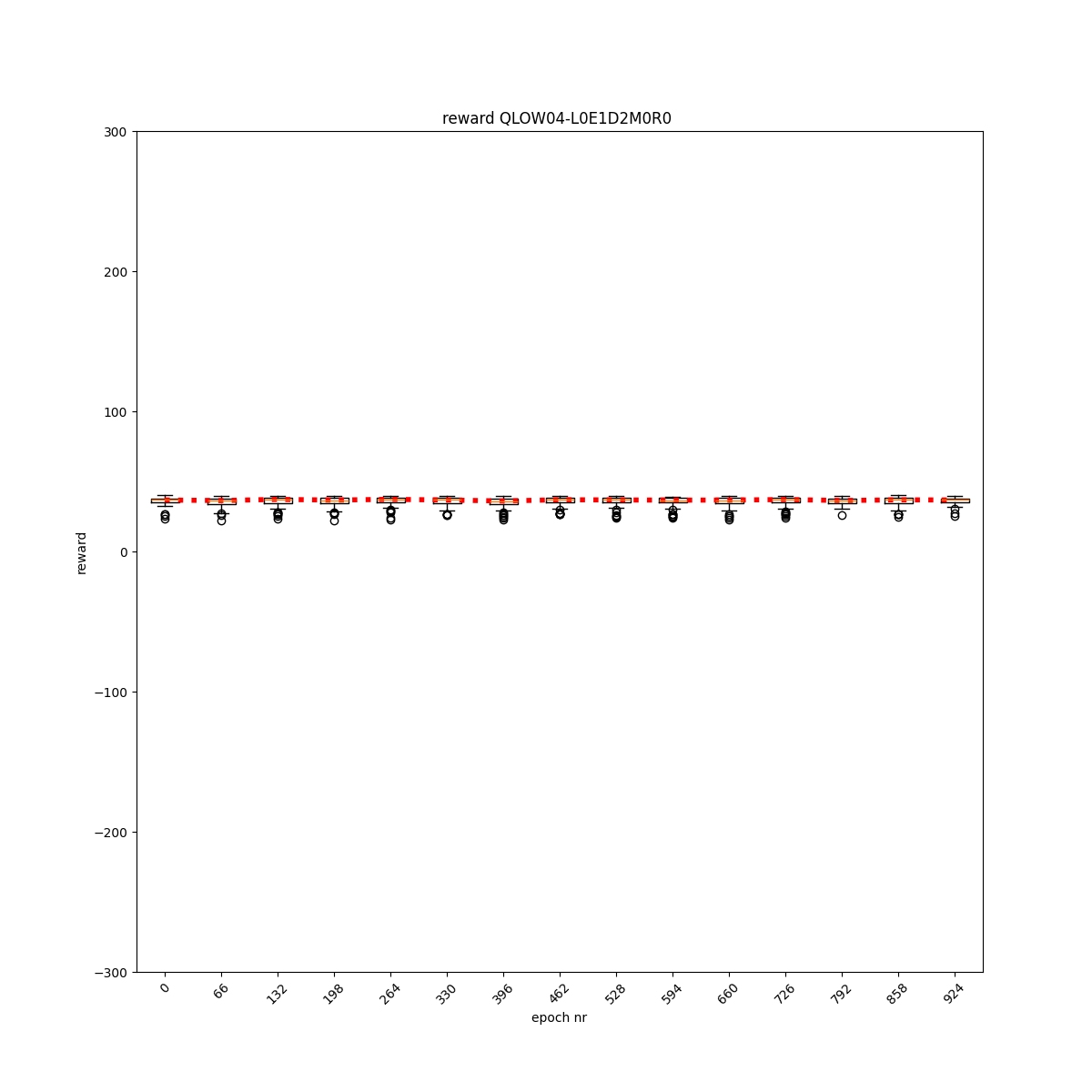

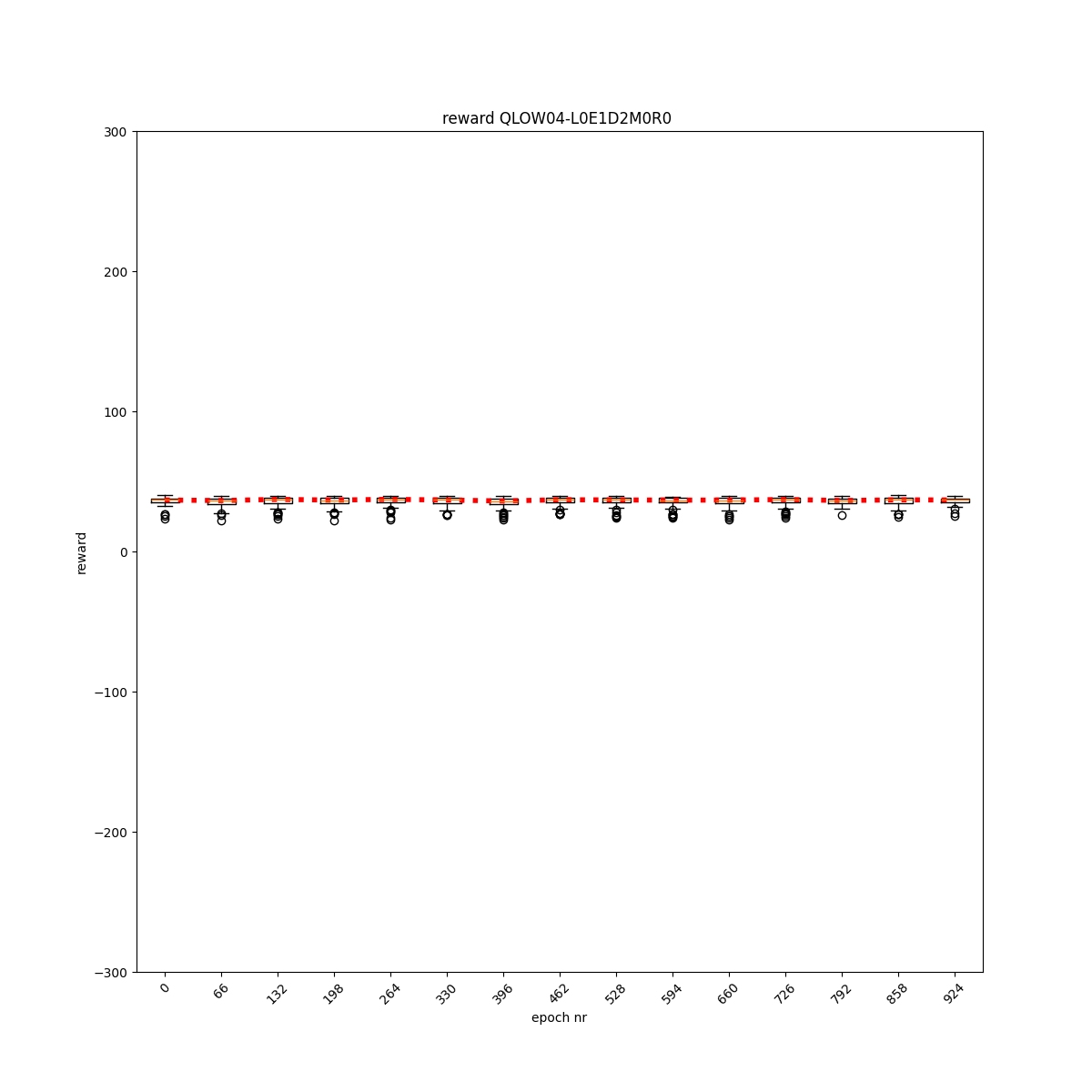

Figure panel L0 E1 D2 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L0 E1 D3 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L0 E1 D4 M0 R0: two q-values grids, each showing video 0 through video 11.

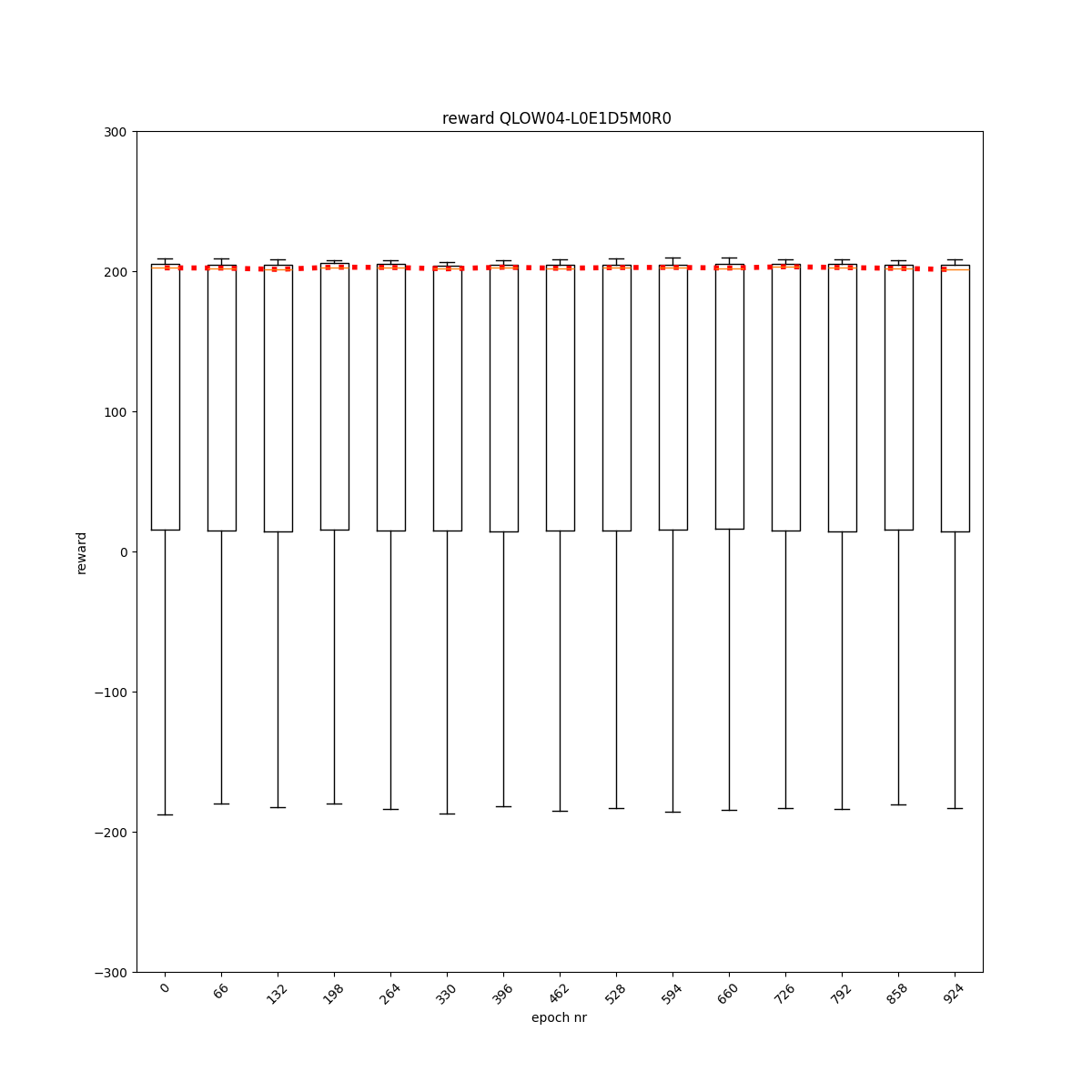

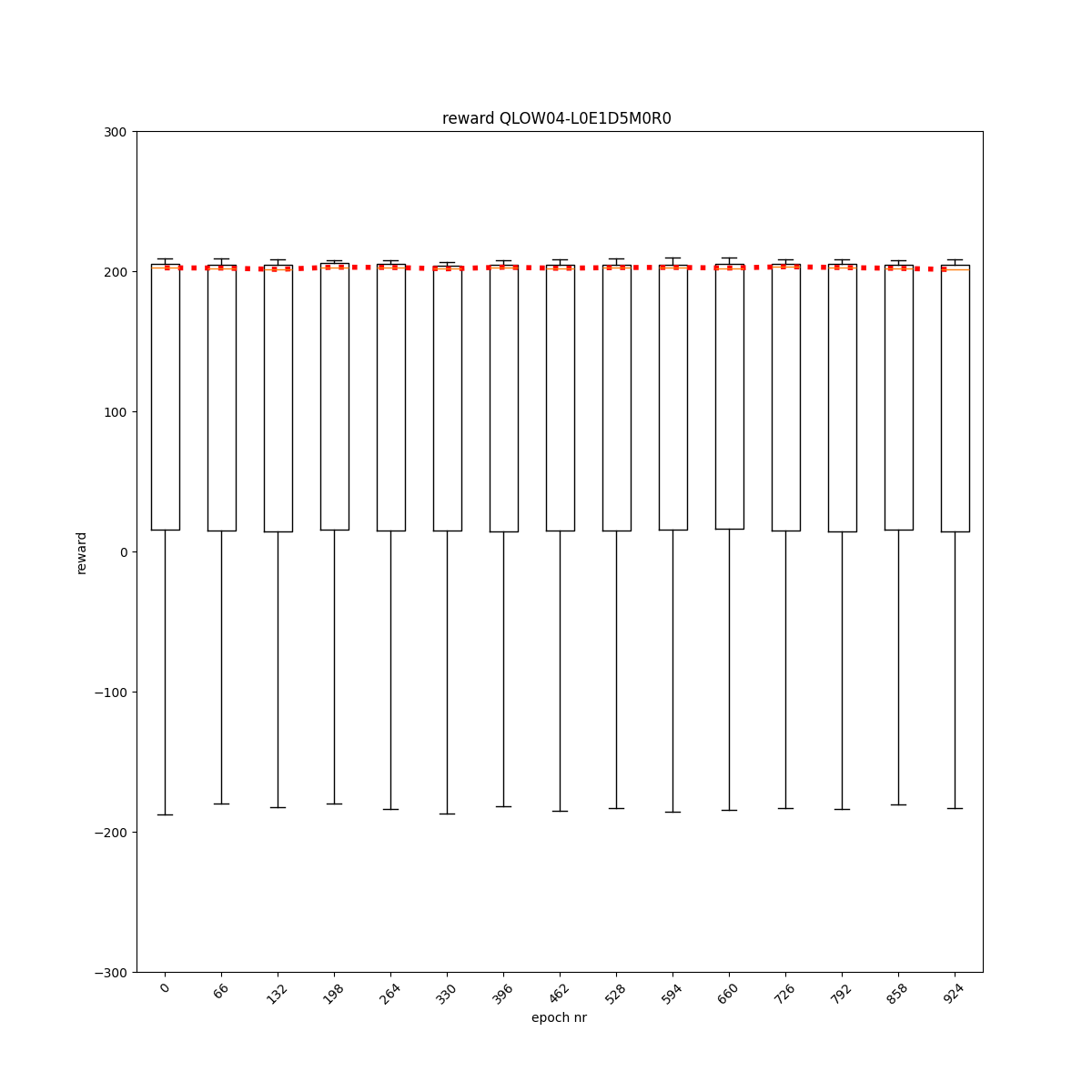

Figure panel L0 E1 D5 M0 R0: two q-values grids, each showing video 0 through video 11.

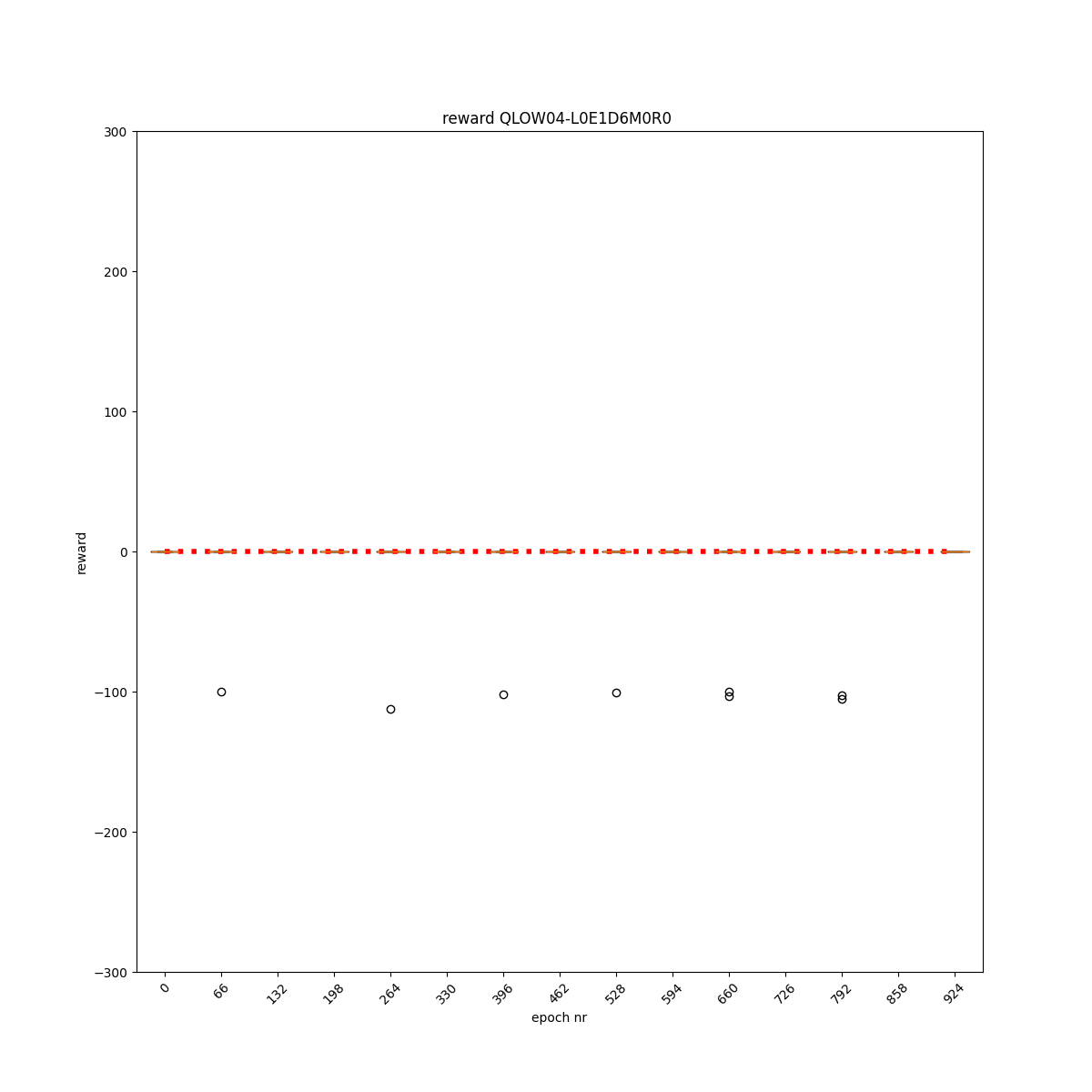

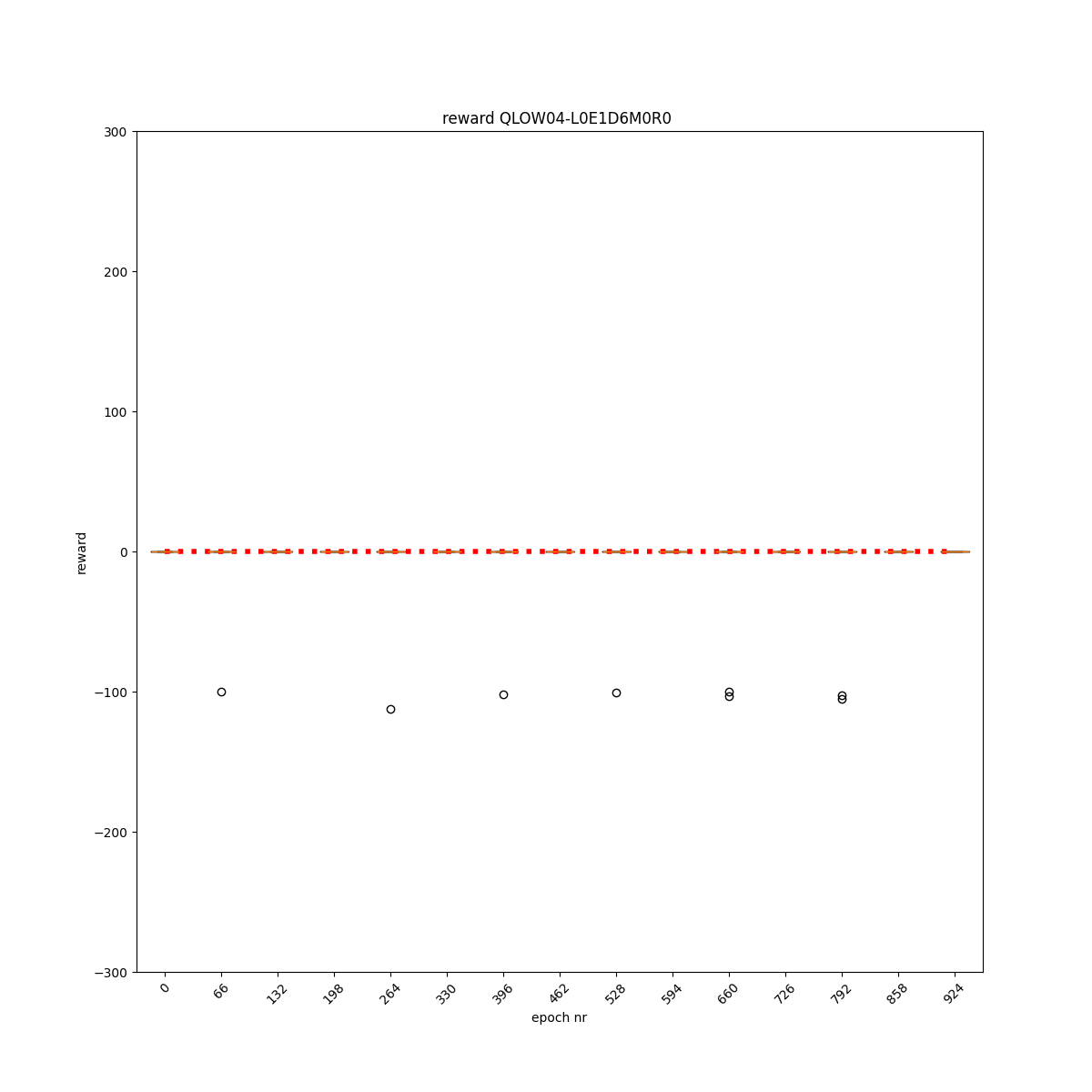

Figure panel L0 E1 D6 M0 R0: two q-values grids, each showing video 0 through video 11.

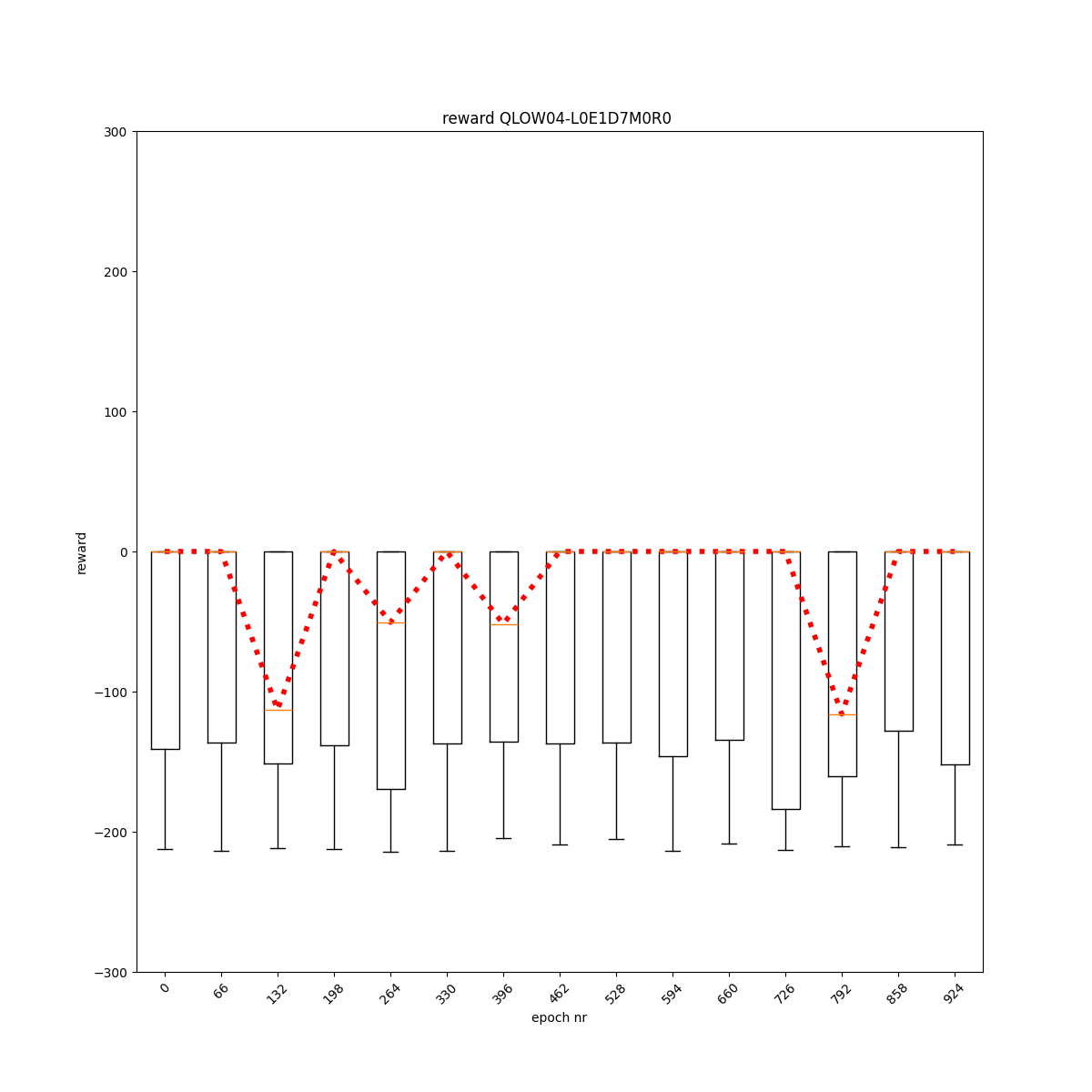

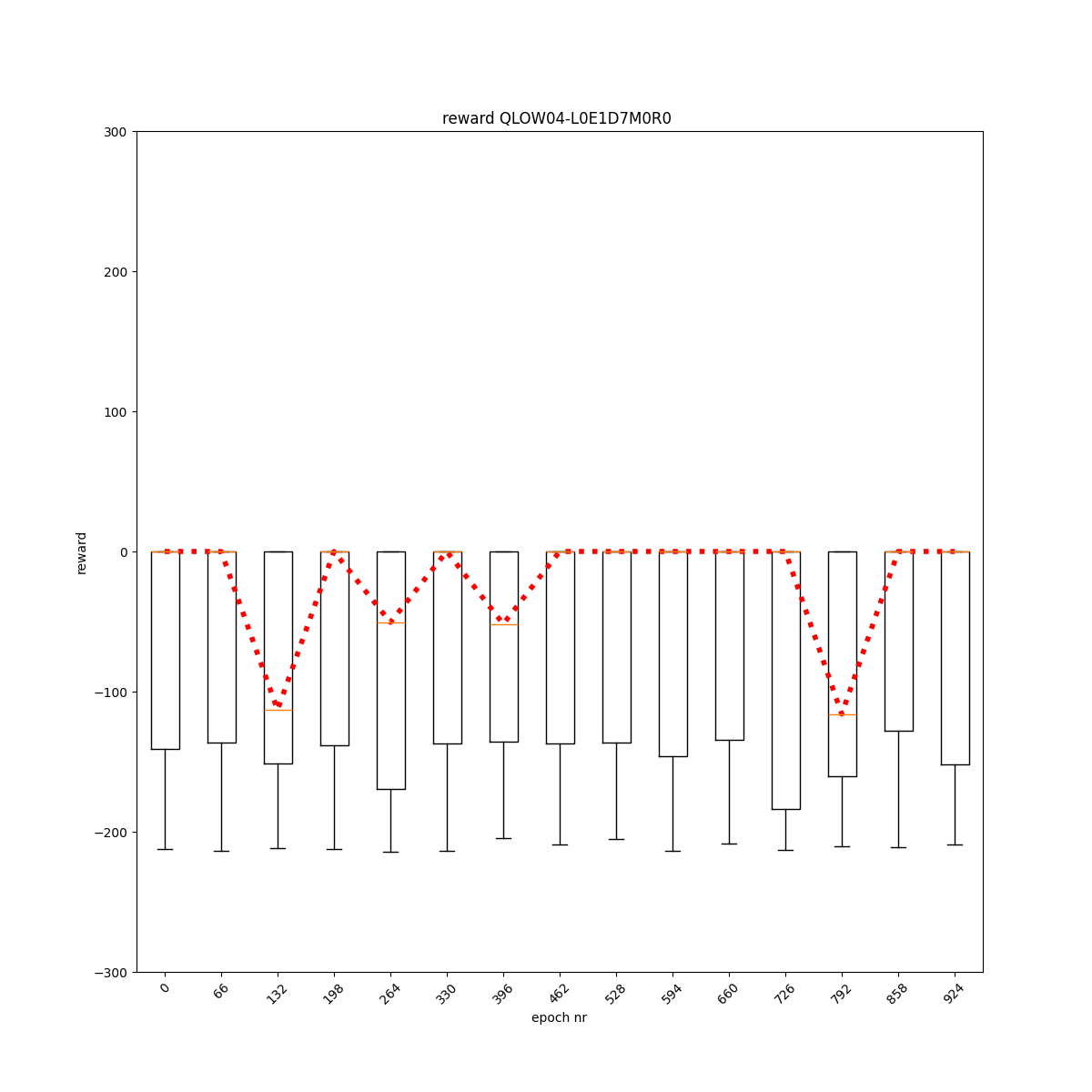

Figure panel L0 E1 D7 M0 R0: two q-values grids, each showing video 0 through video 11.

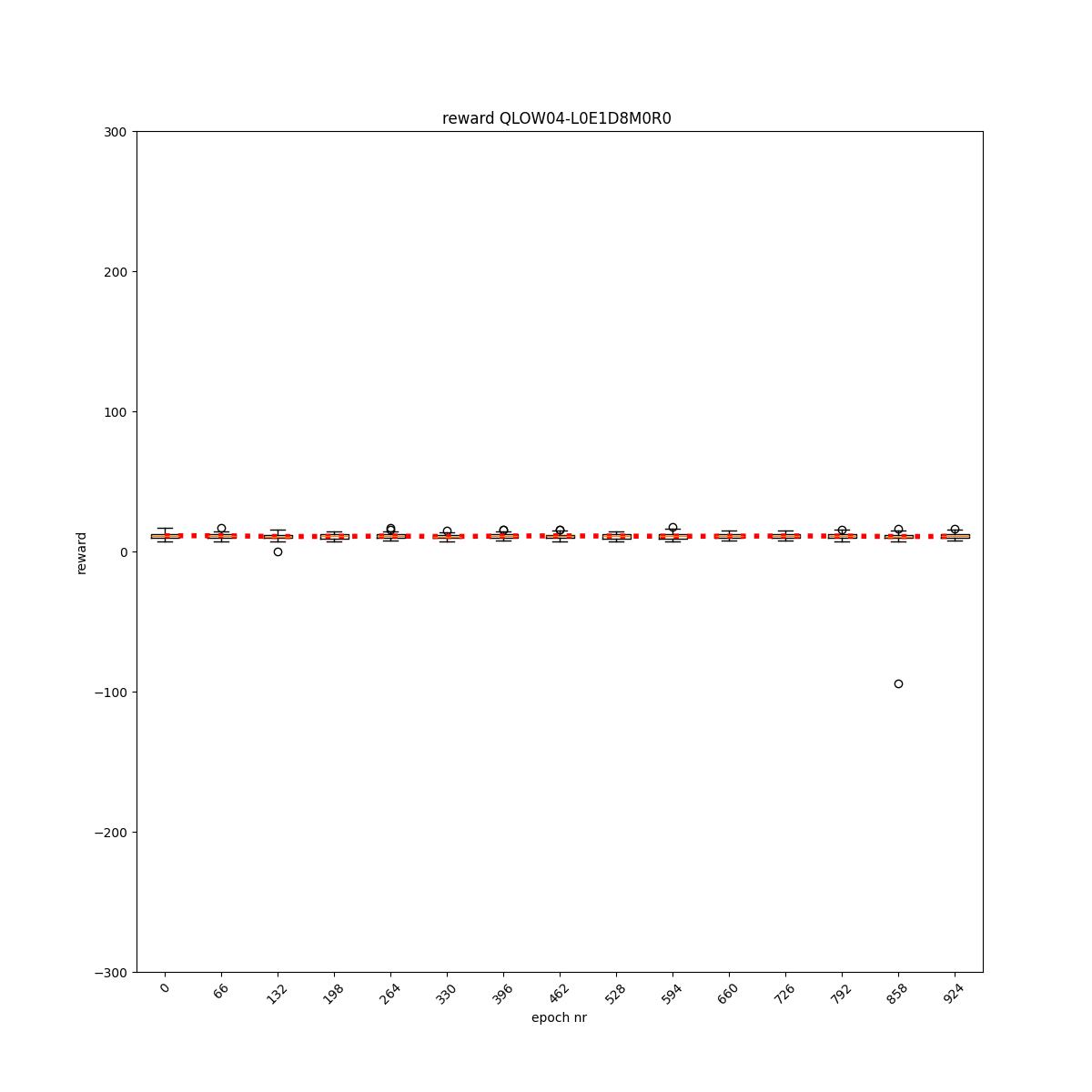

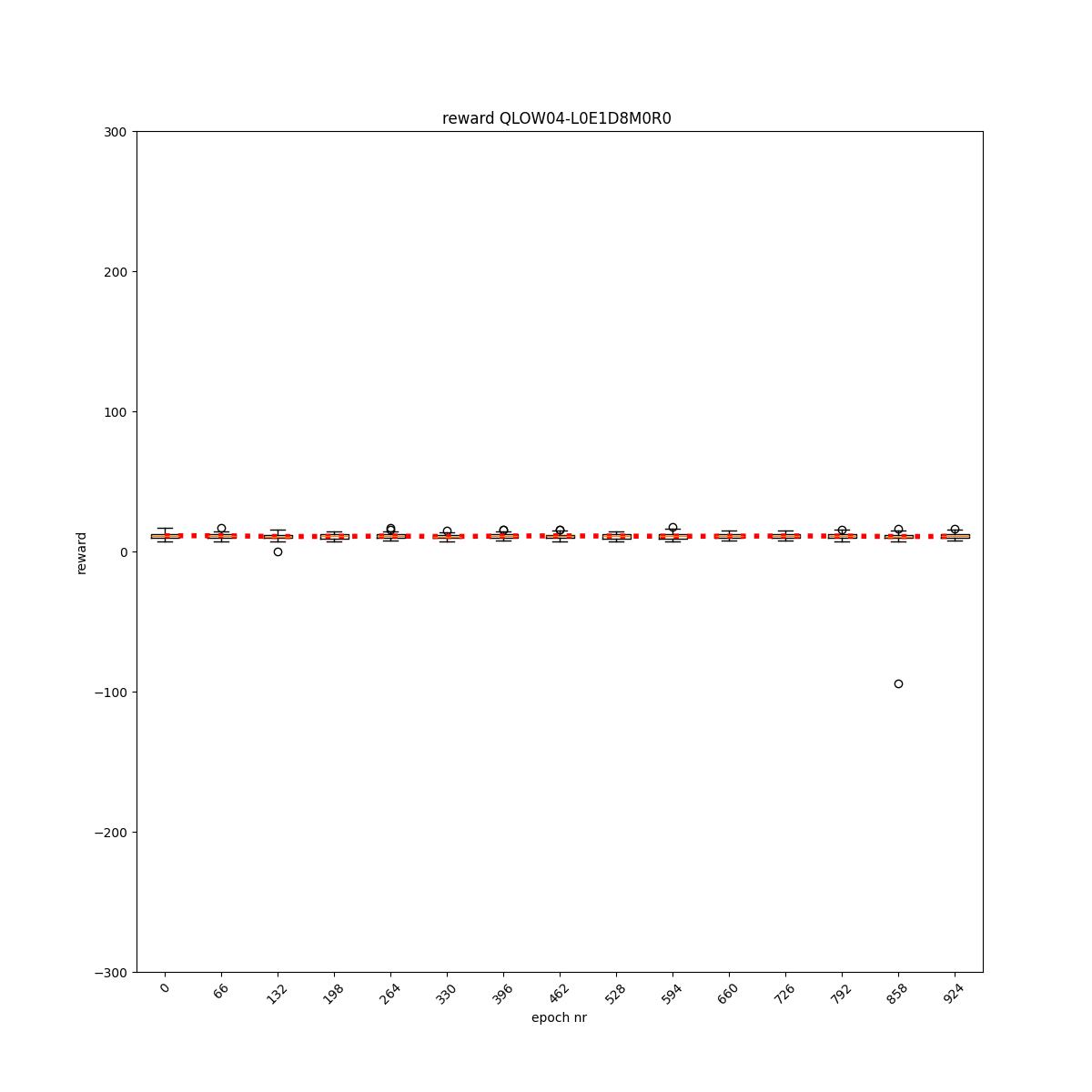

Figure panel L0 E1 D8 M0 R0: two q-values grids, each showing video 0 through video 11.

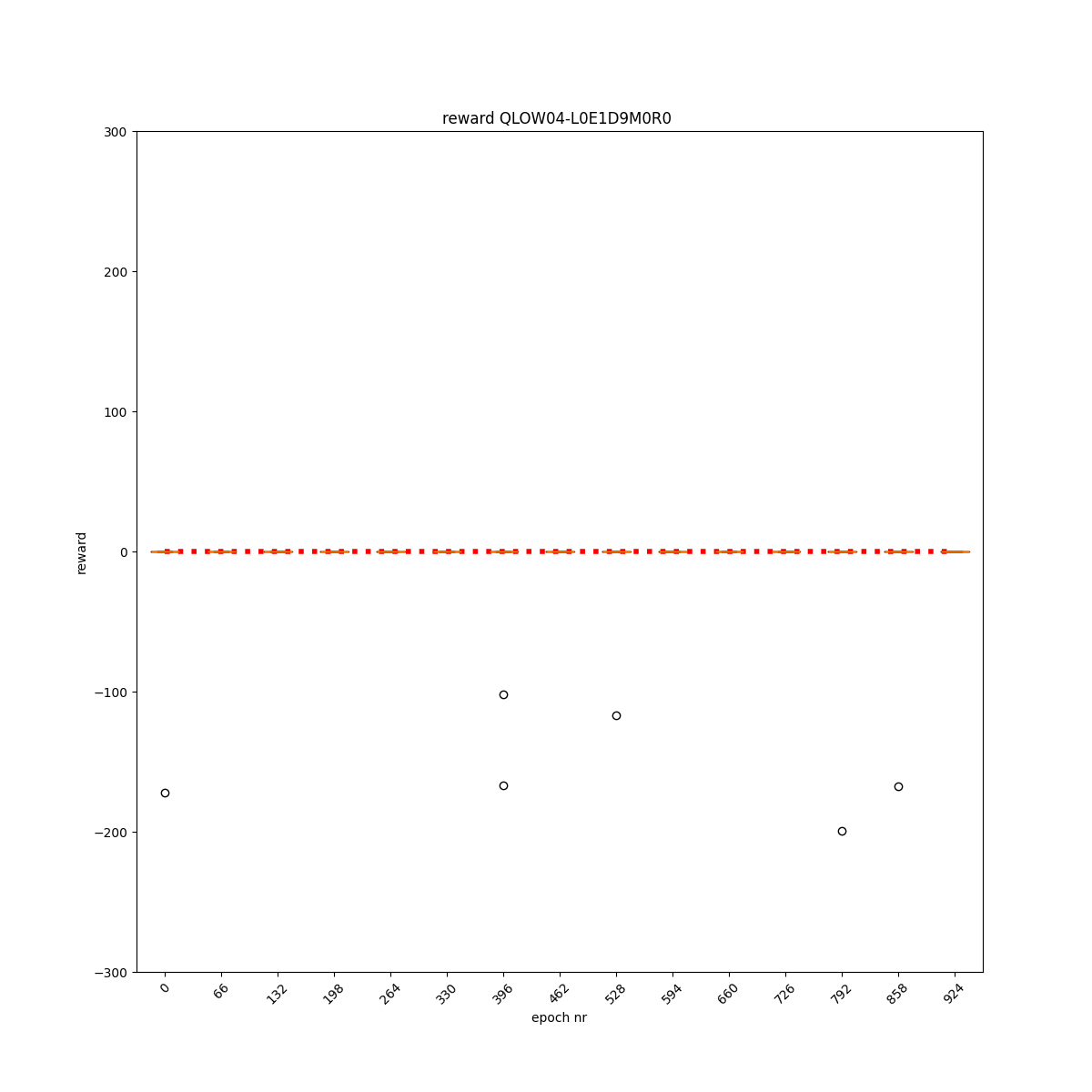

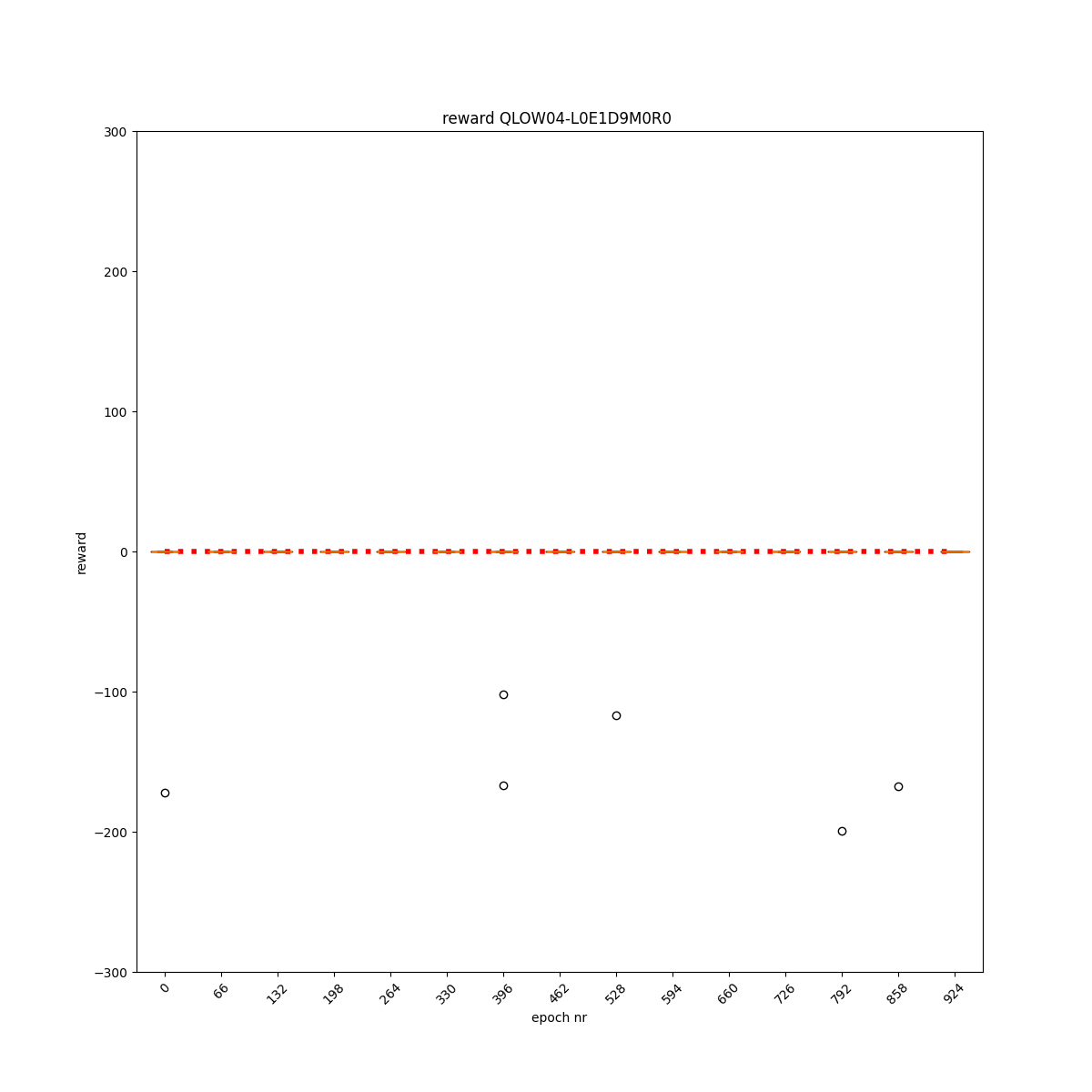

Figure panel L0 E1 D9 M0 R0: two q-values grids, each showing video 0 through video 11.

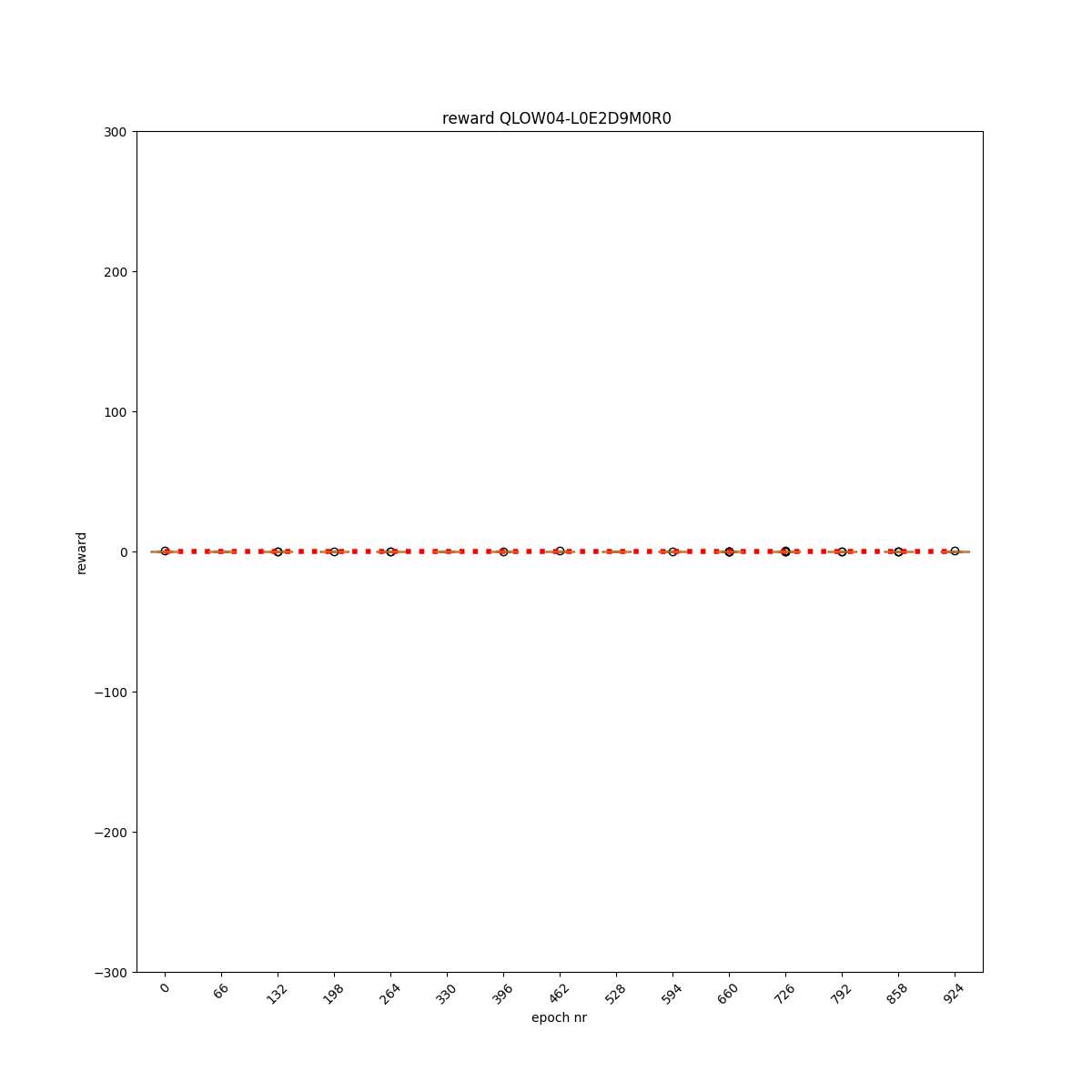

Figure panel L0 E2 D0 M0 R0: two q-values grids, each showing video 0 through video 11.

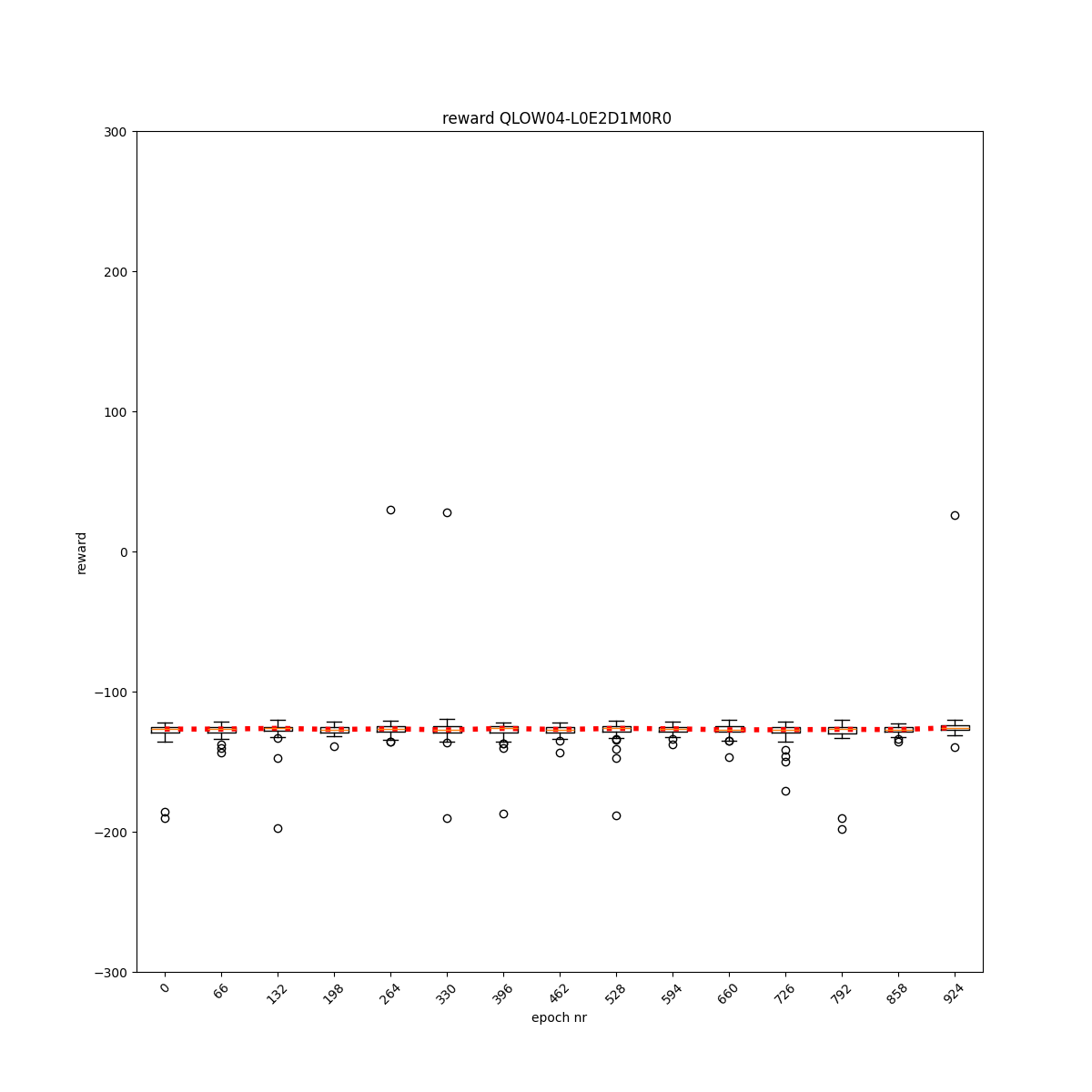

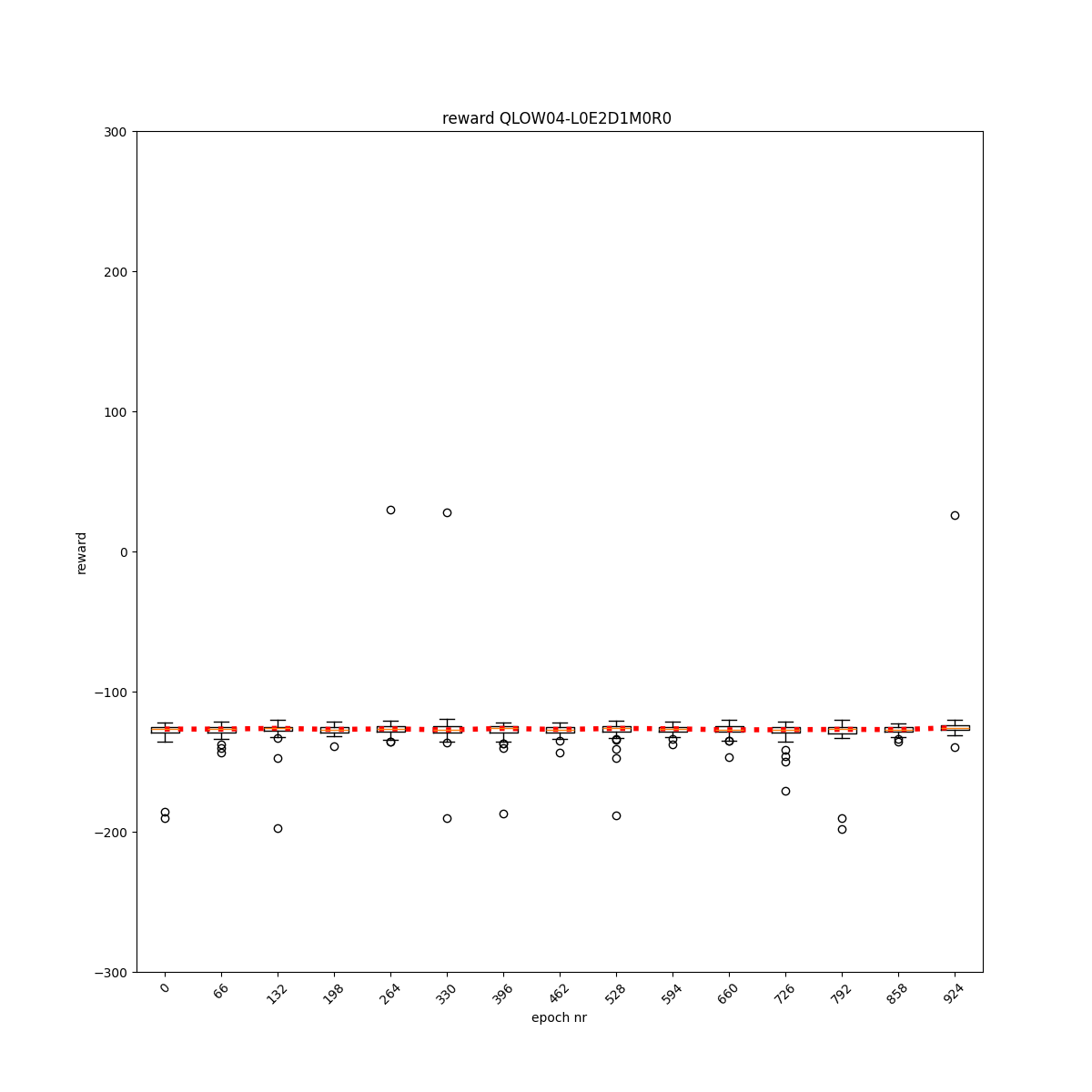

Figure panel L0 E2 D1 M0 R0: two q-values grids, each showing video 0 through video 11.

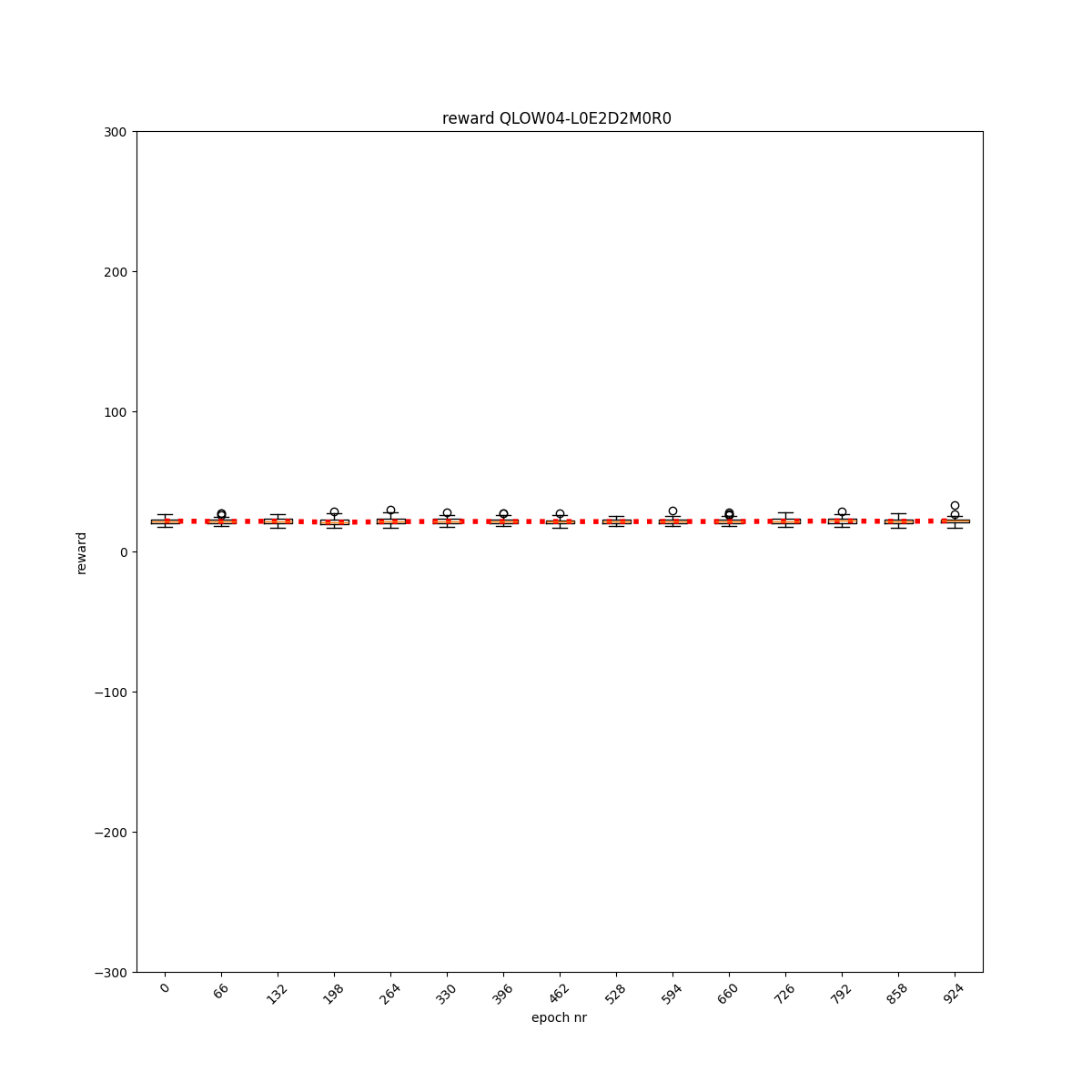

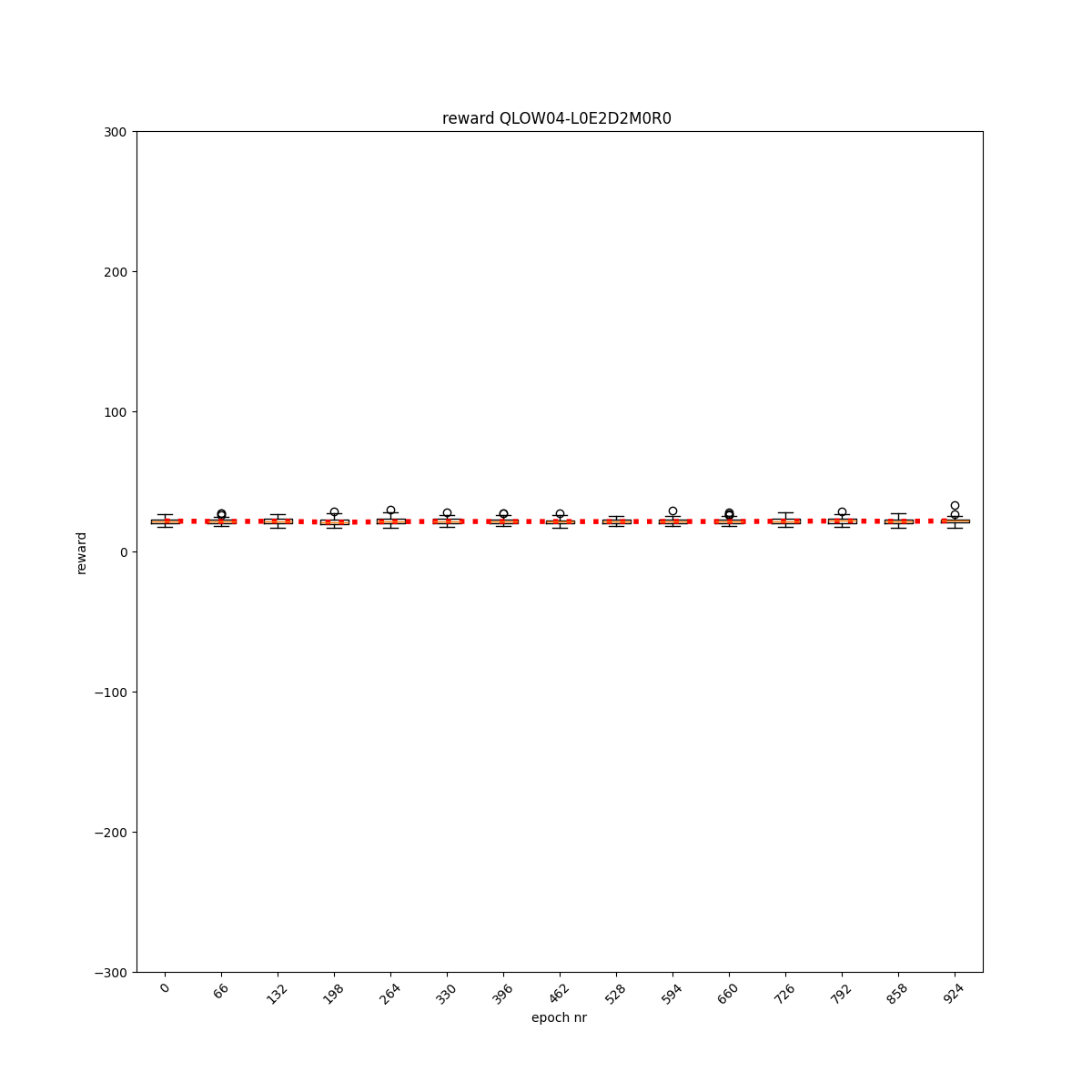

Figure panel L0 E2 D2 M0 R0: two q-values grids, each showing video 0 through video 11.

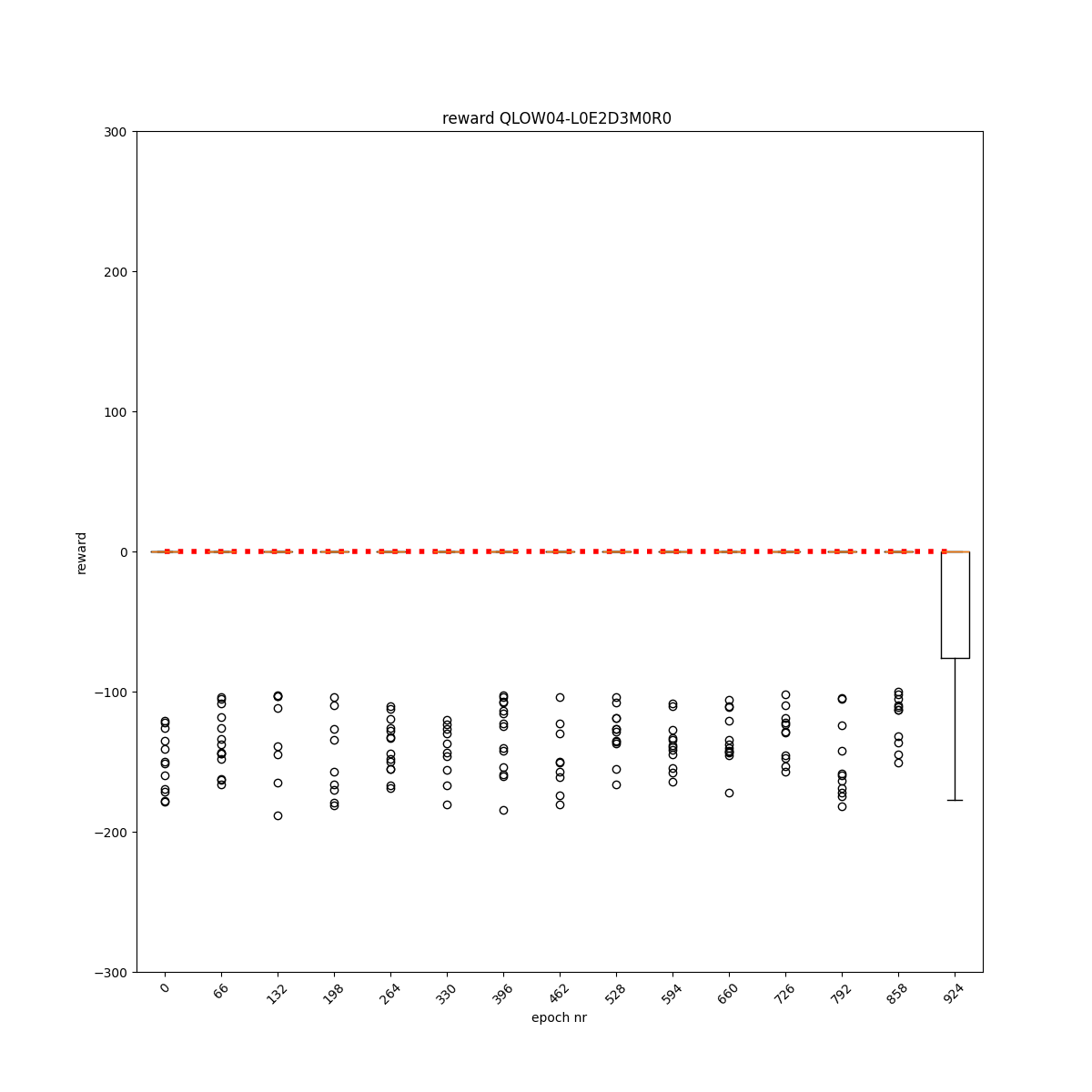

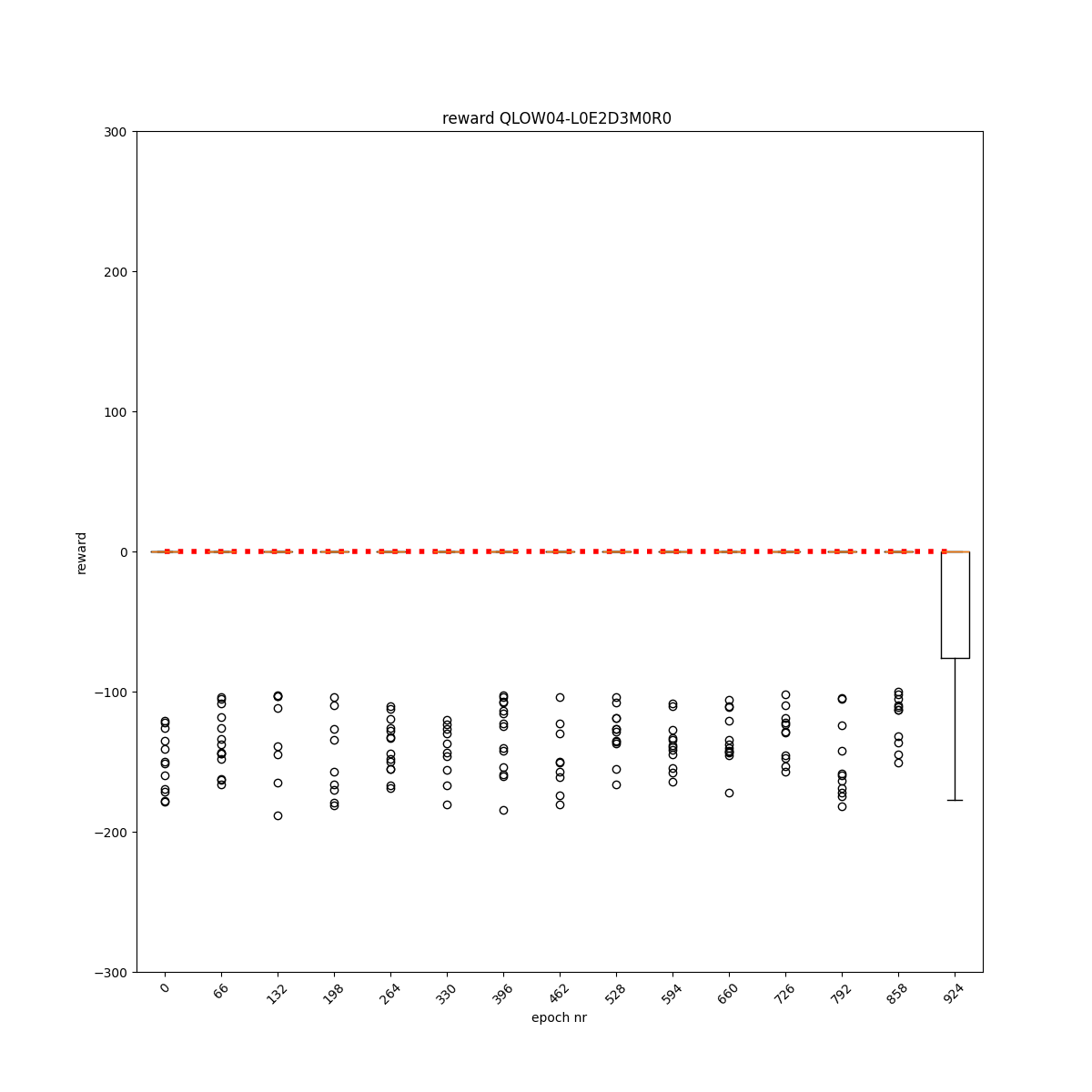

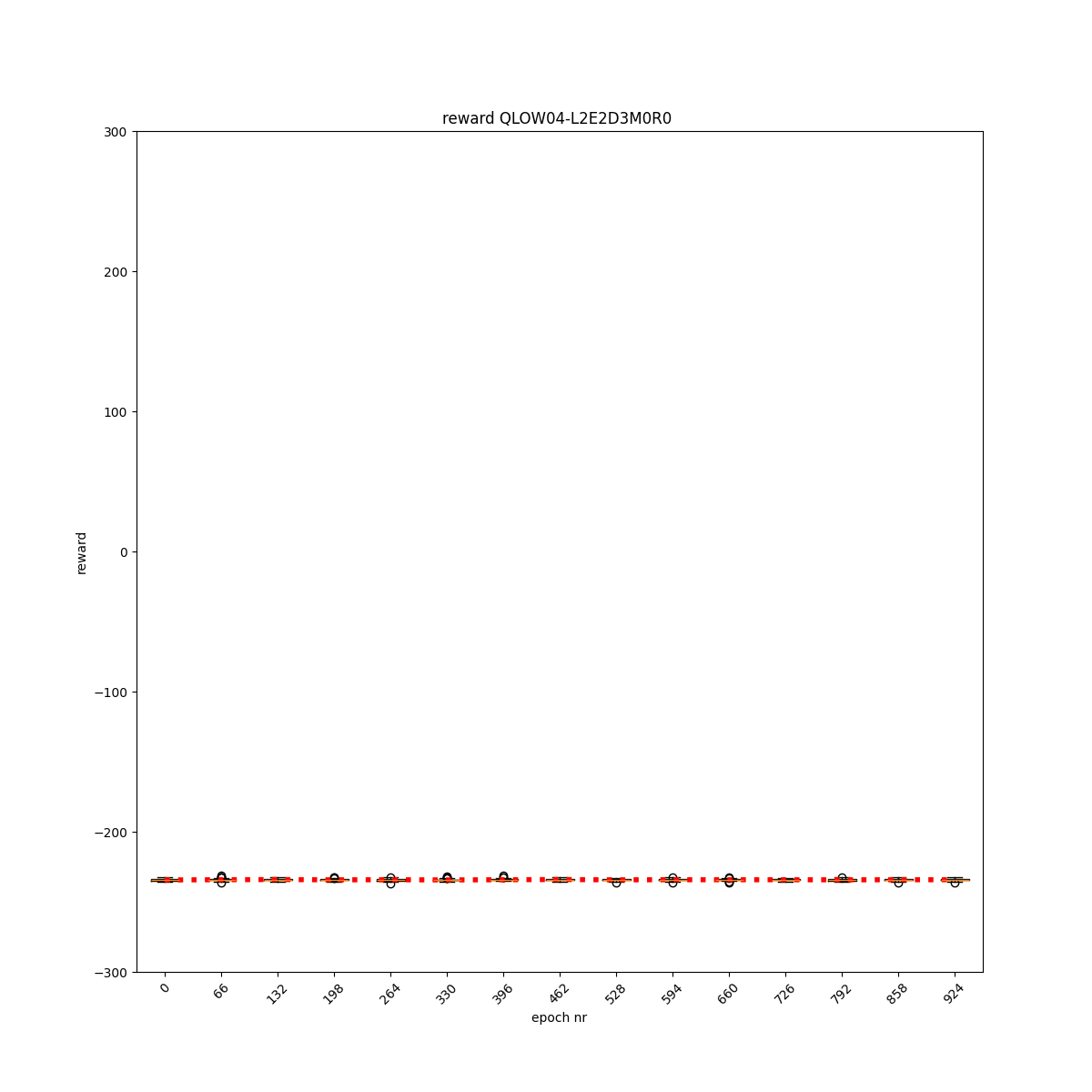

Figure panel L0 E2 D3 M0 R0: two q-values grids, each showing video 0 through video 11.

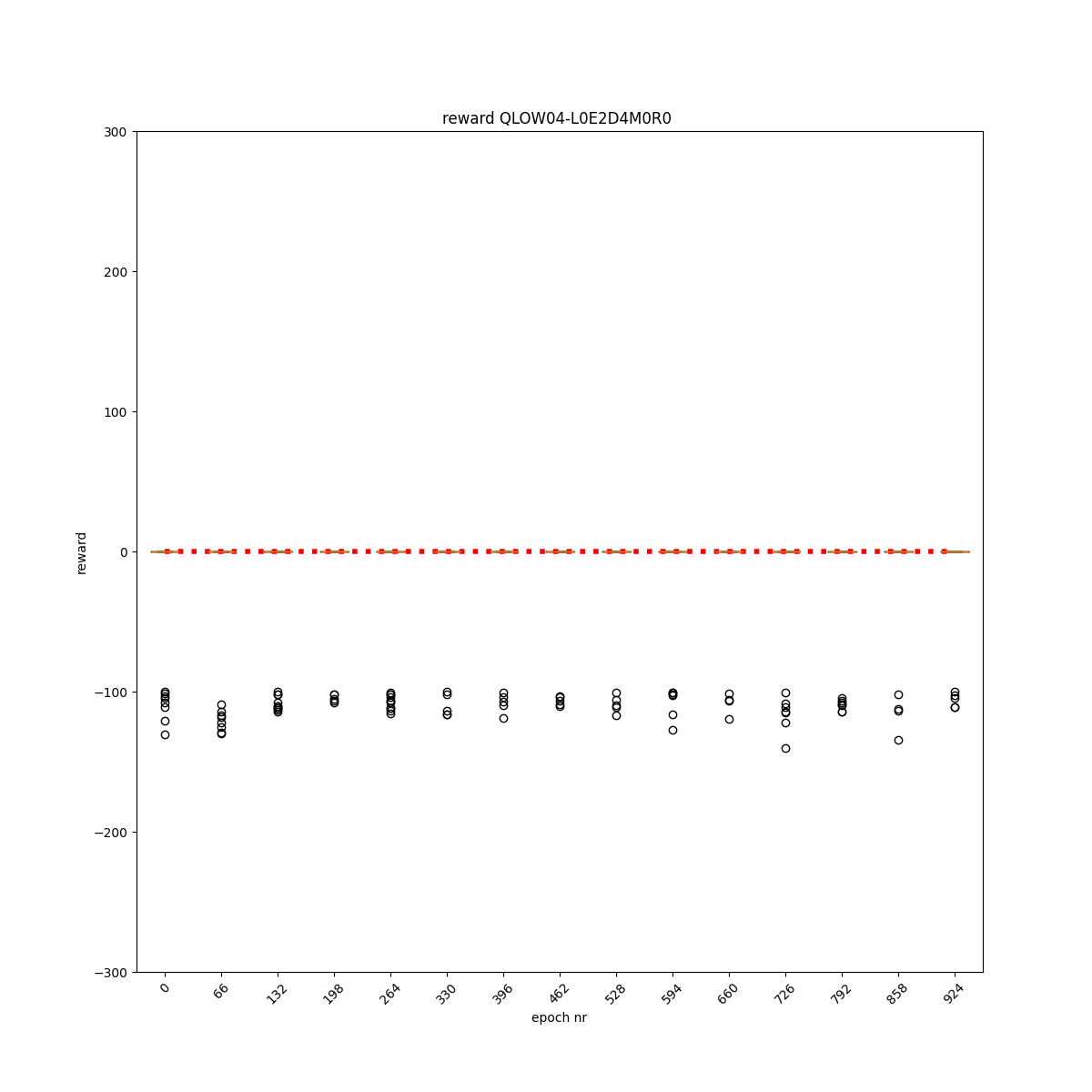

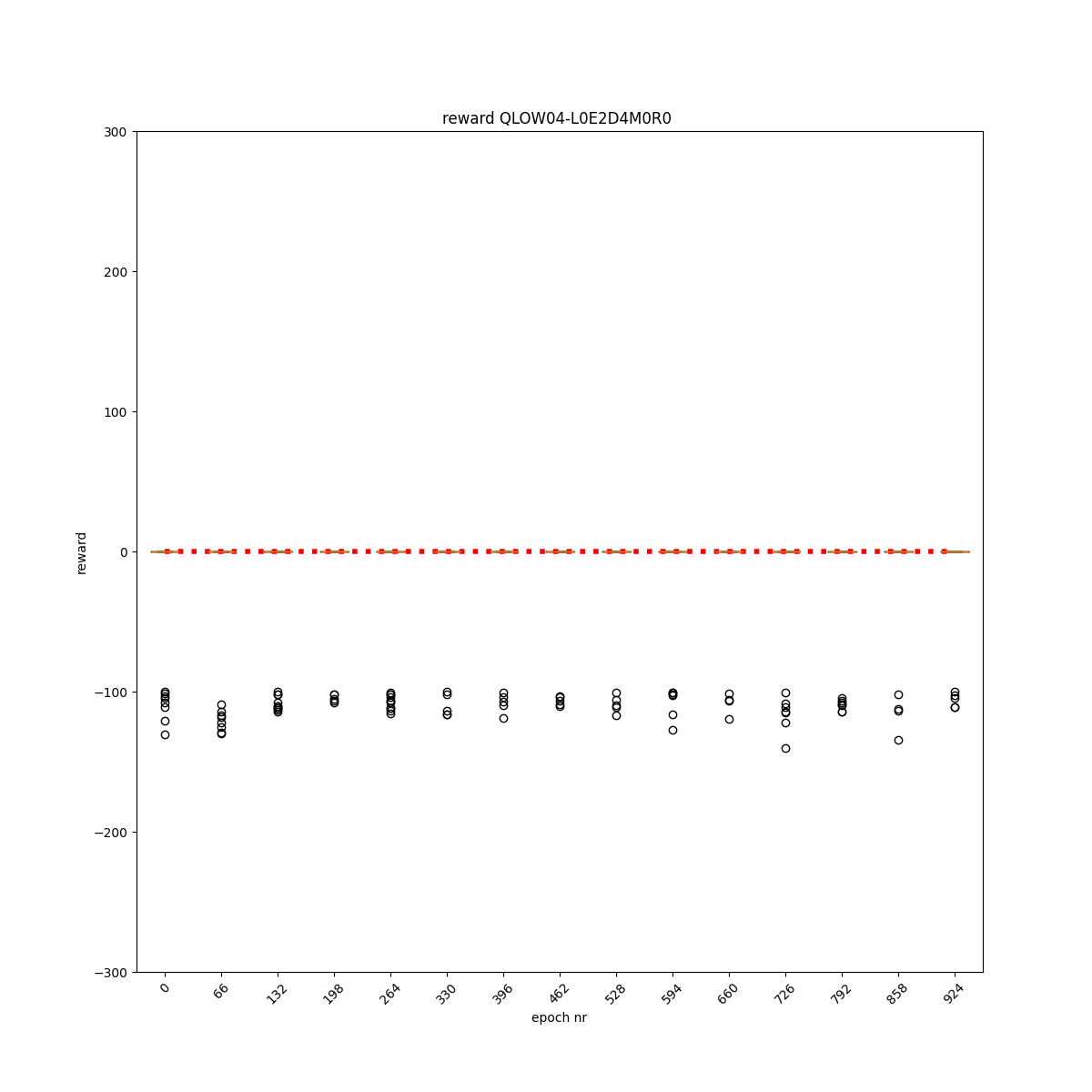

Figure panel L0 E2 D4 M0 R0: two q-values grids, each showing video 0 through video 11.

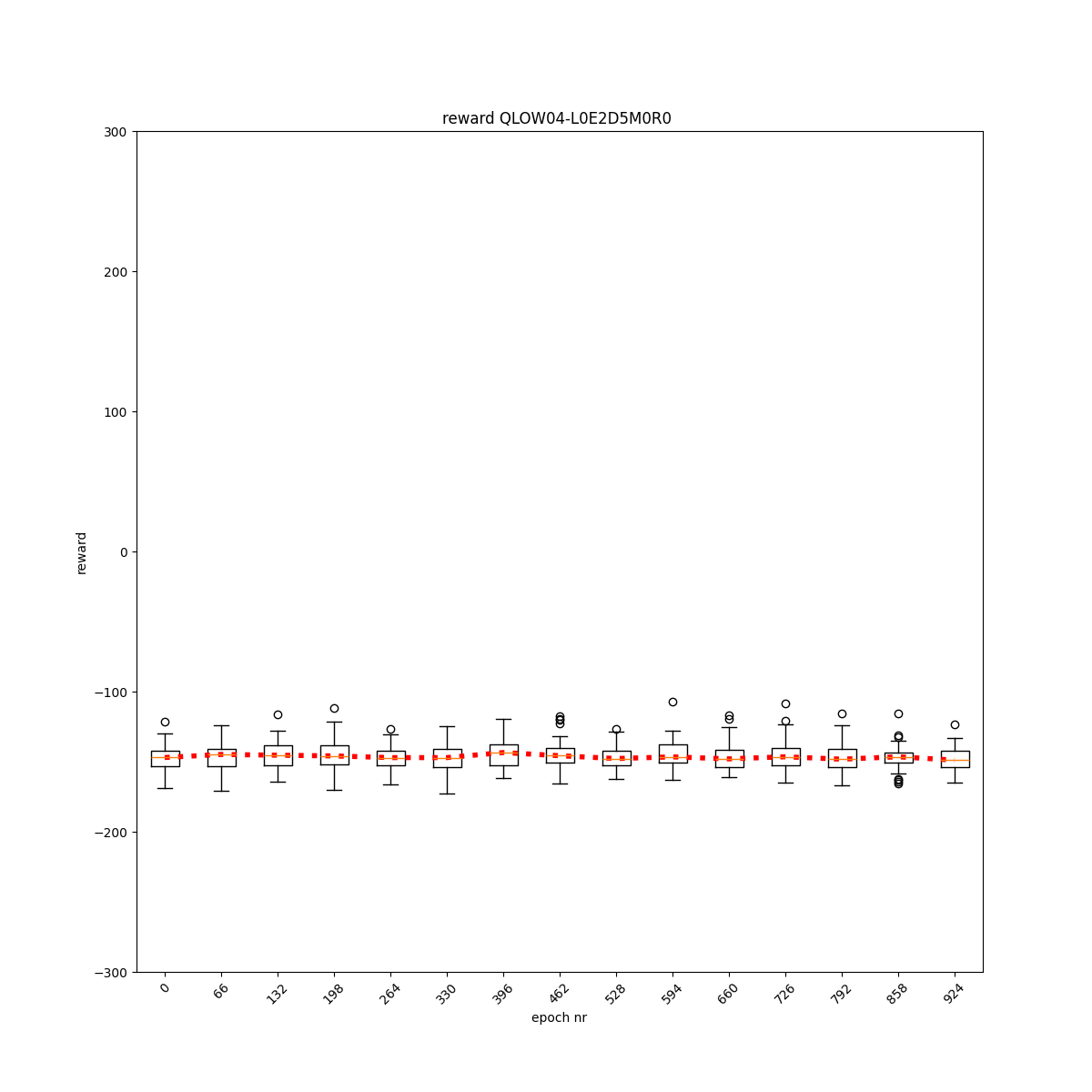

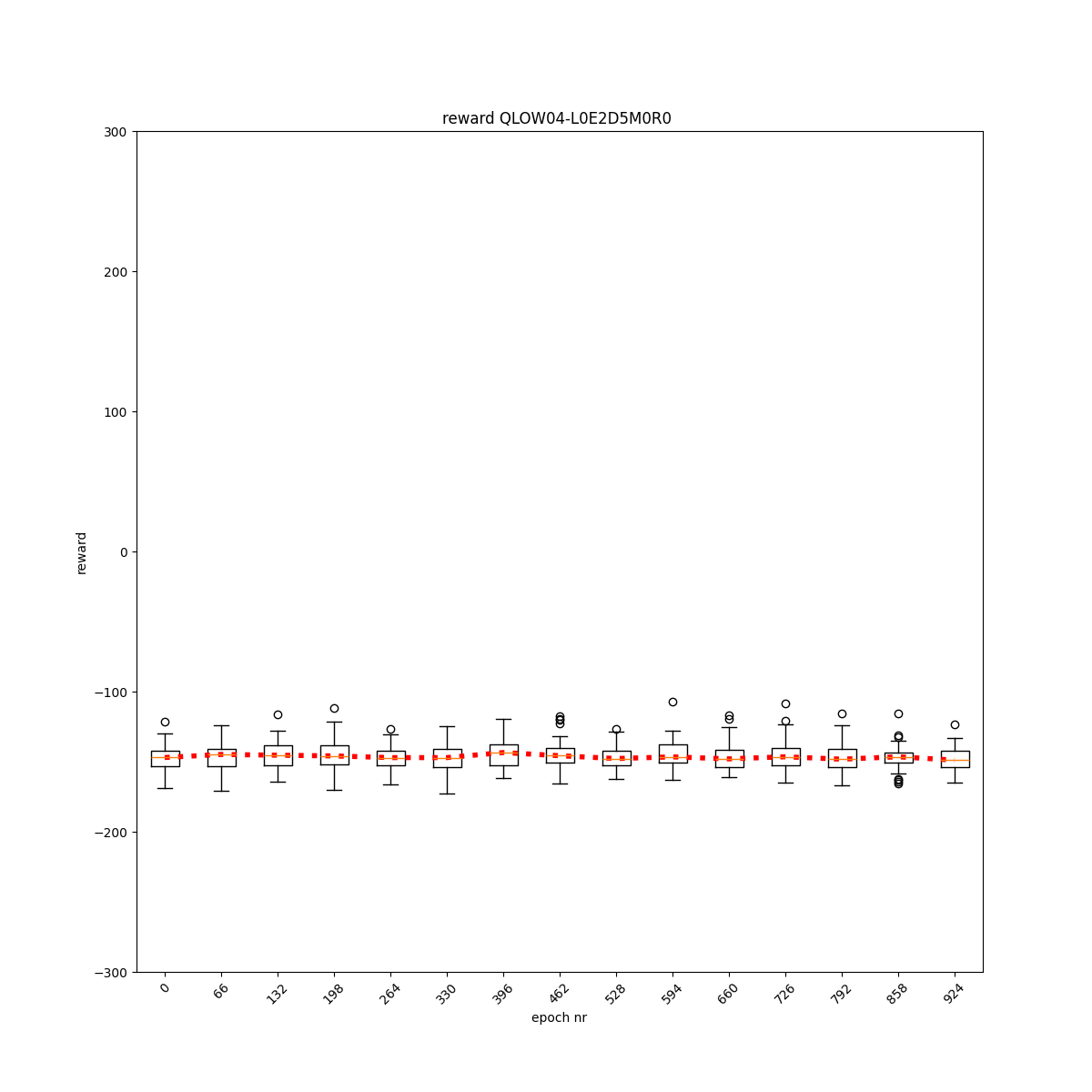

Figure panel L0 E2 D5 M0 R0: two q-values grids, each showing video 0 through video 11.

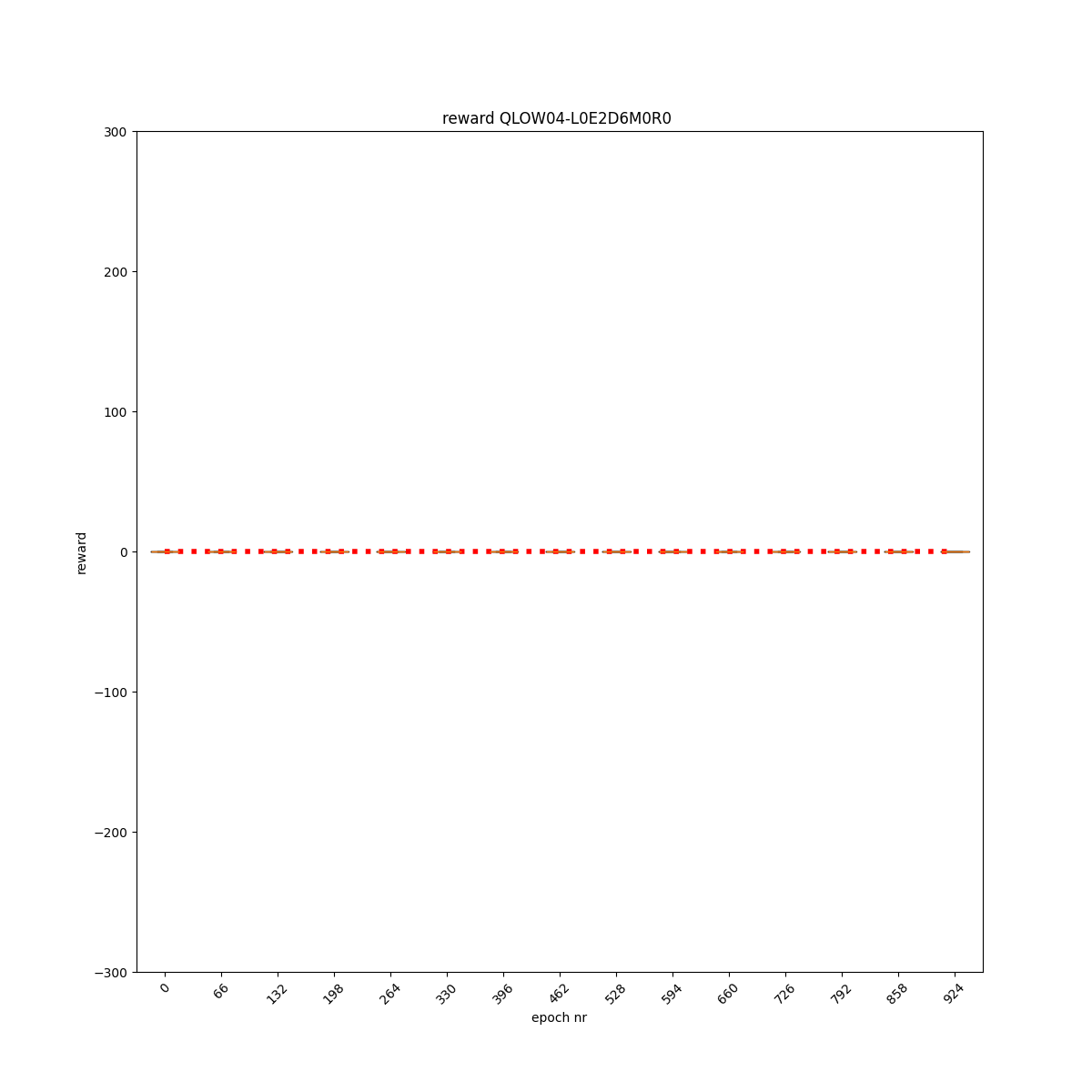

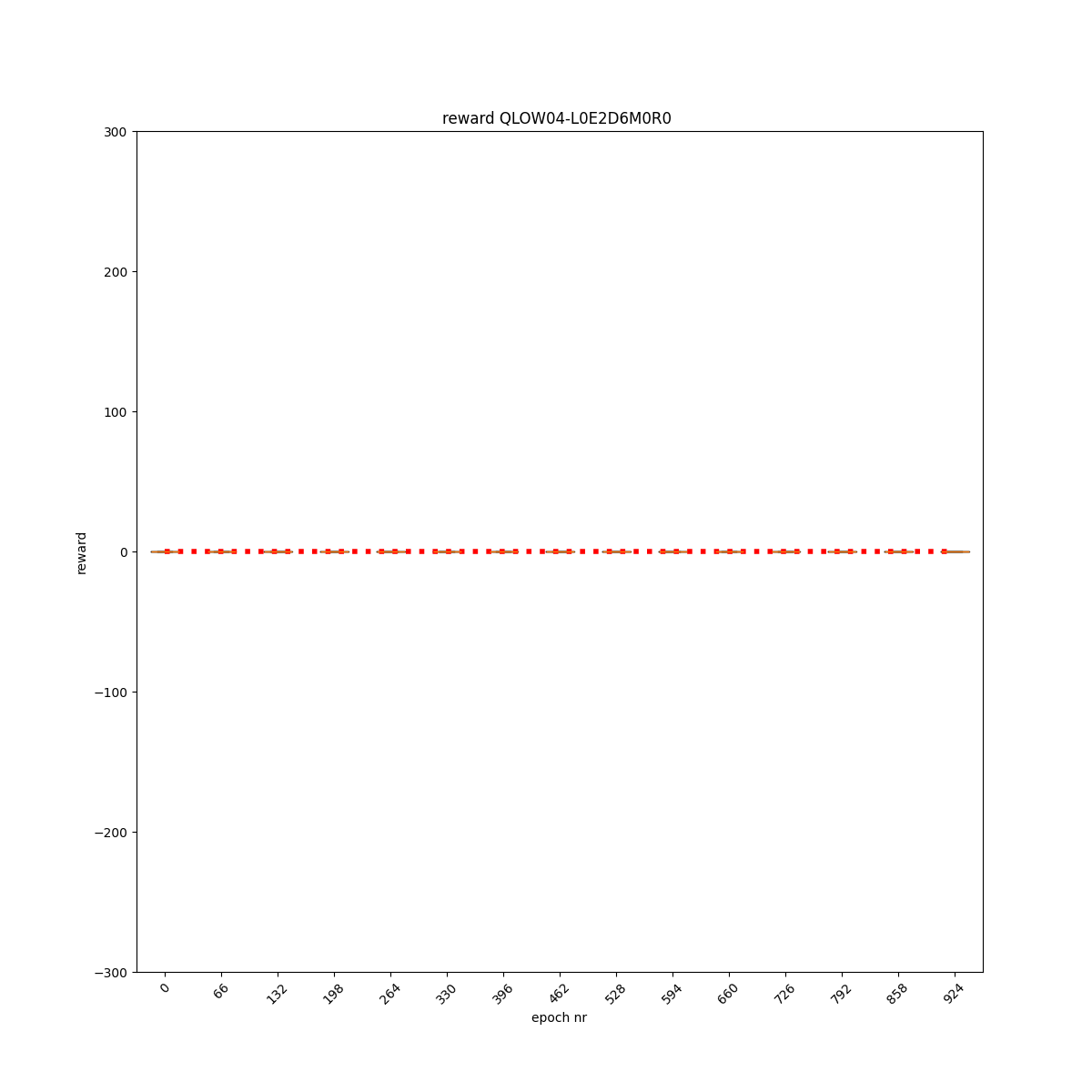

Figure panel L0 E2 D6 M0 R0: two q-values grids, each showing video 0 through video 11.

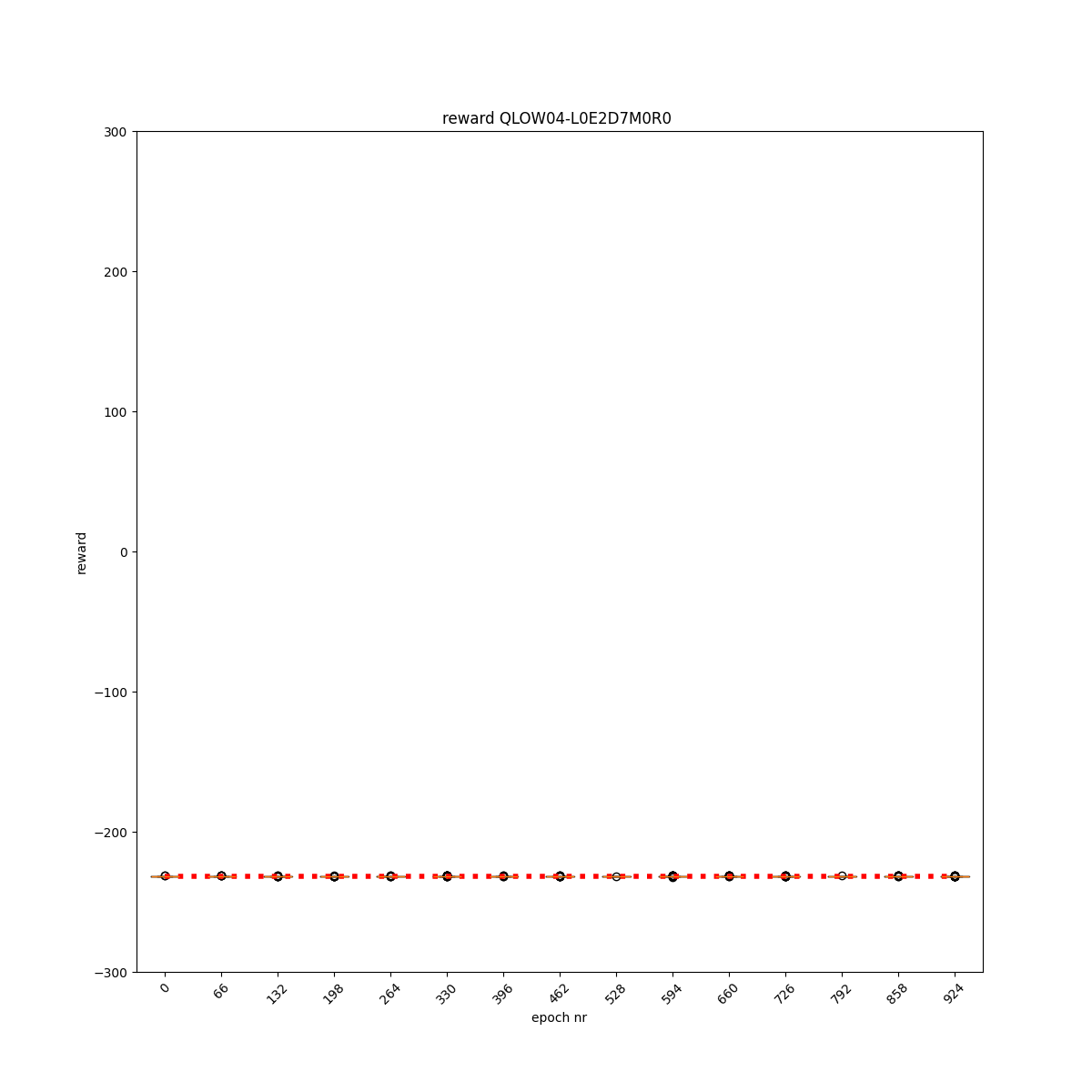

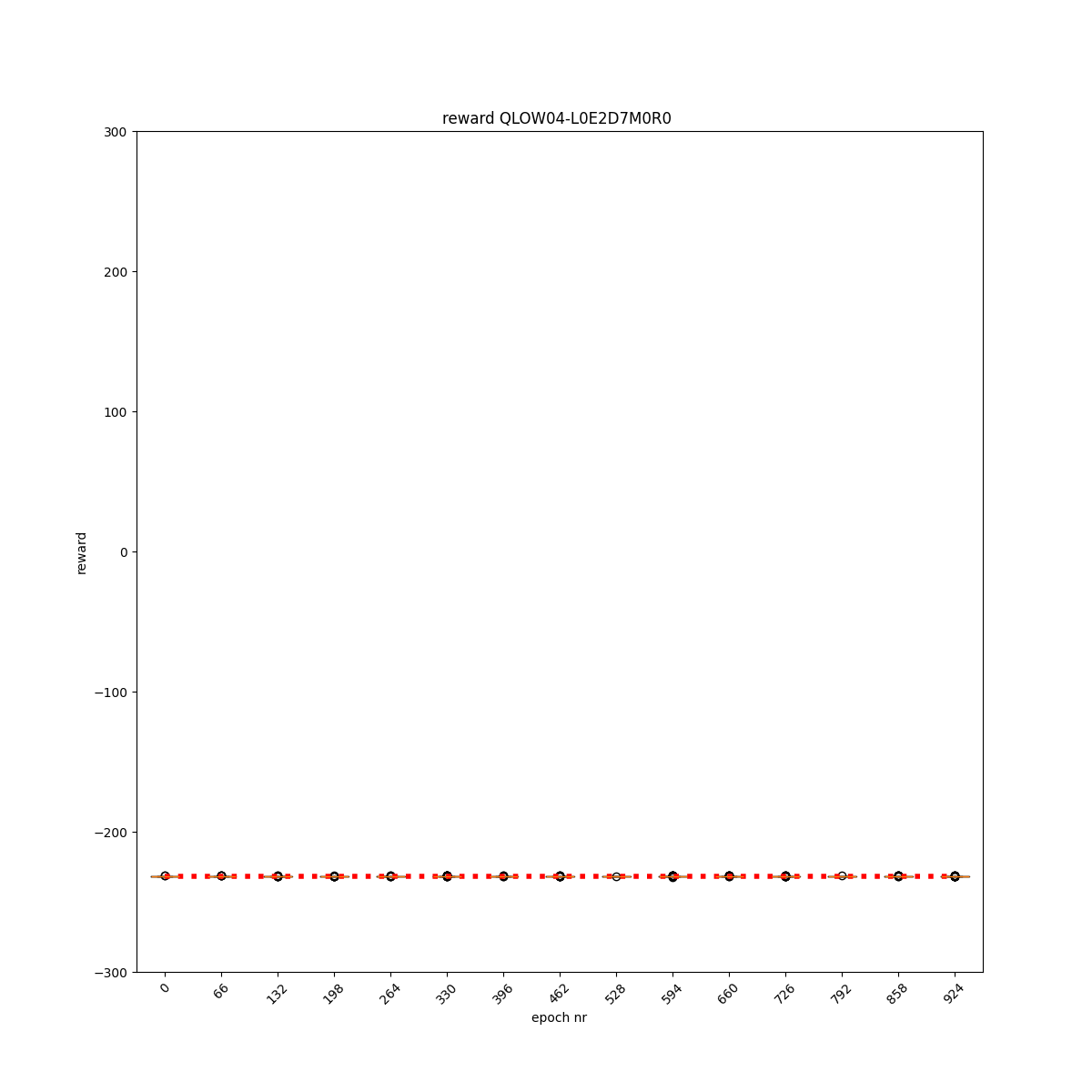

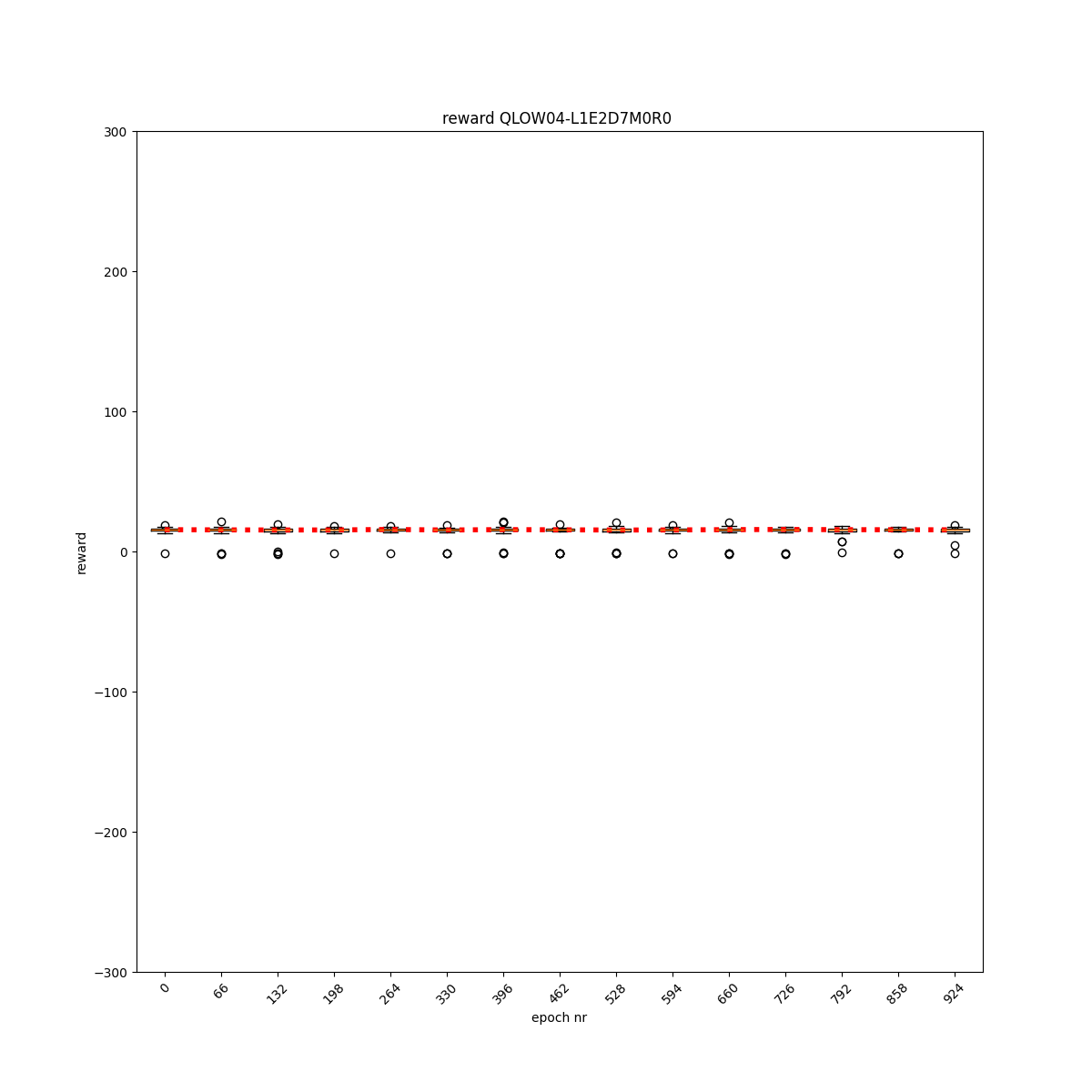

Figure panel L0 E2 D7 M0 R0: two q-values grids, each showing video 0 through video 11.

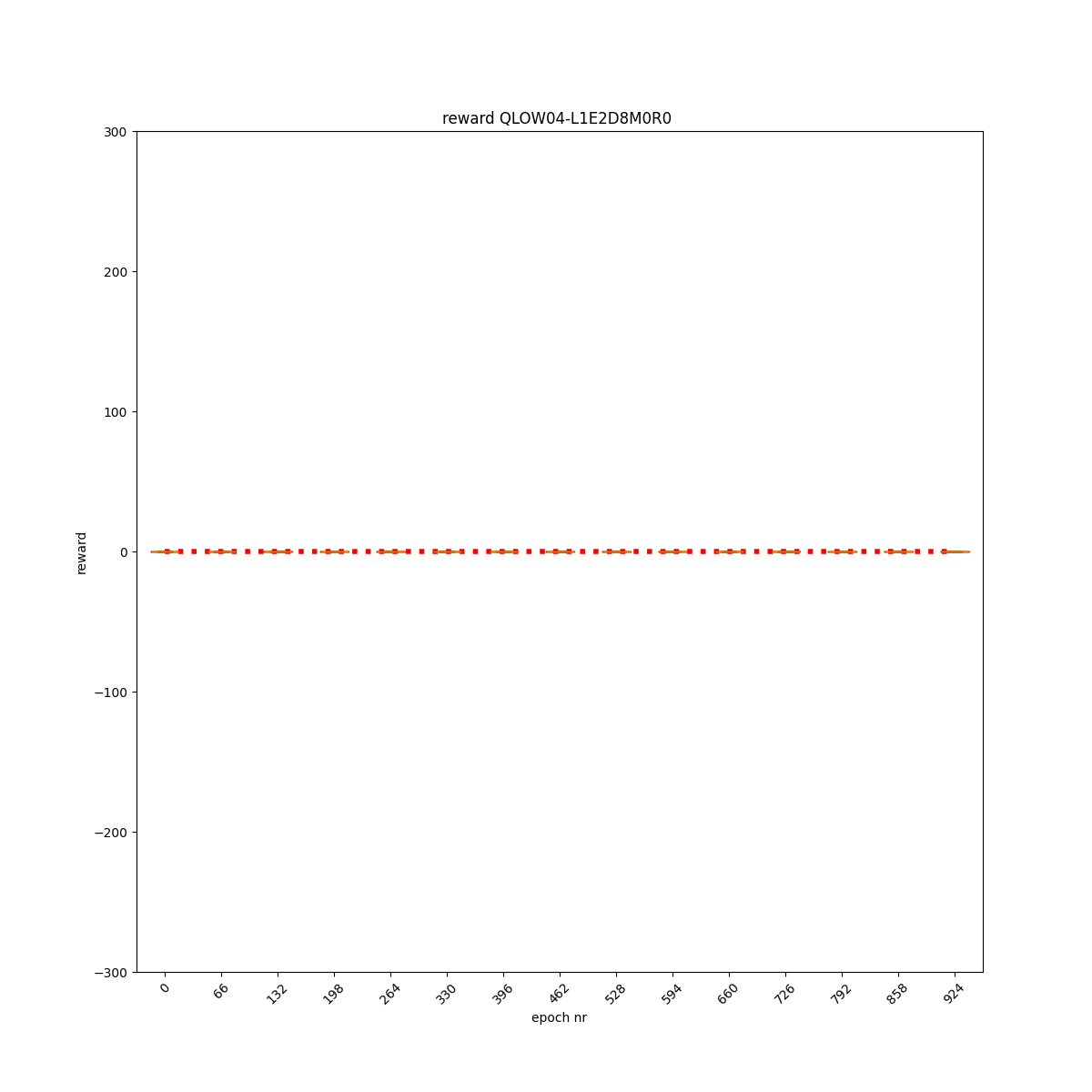

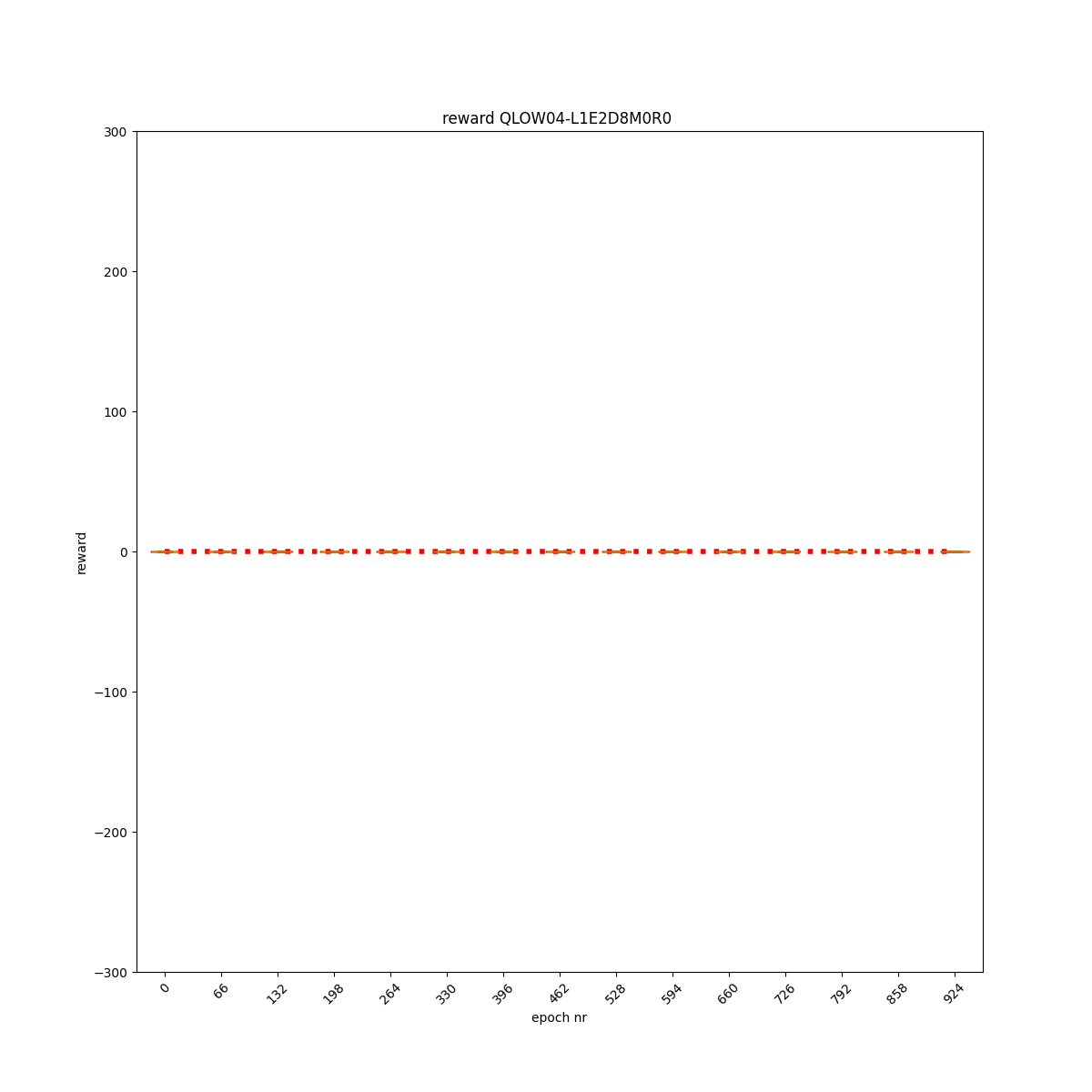

Figure panel L0 E2 D8 M0 R0: two q-values grids, each showing video 0 through video 11.

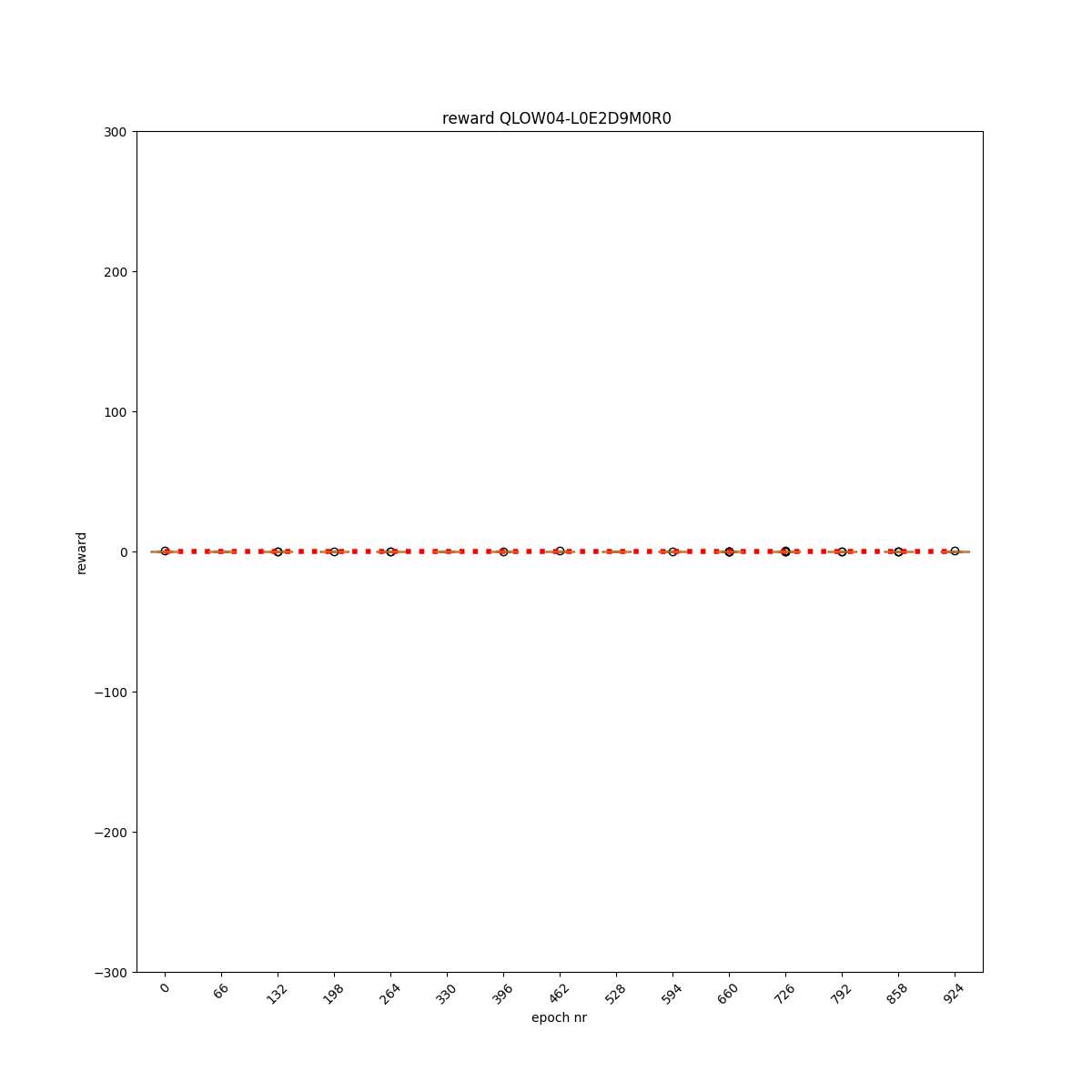

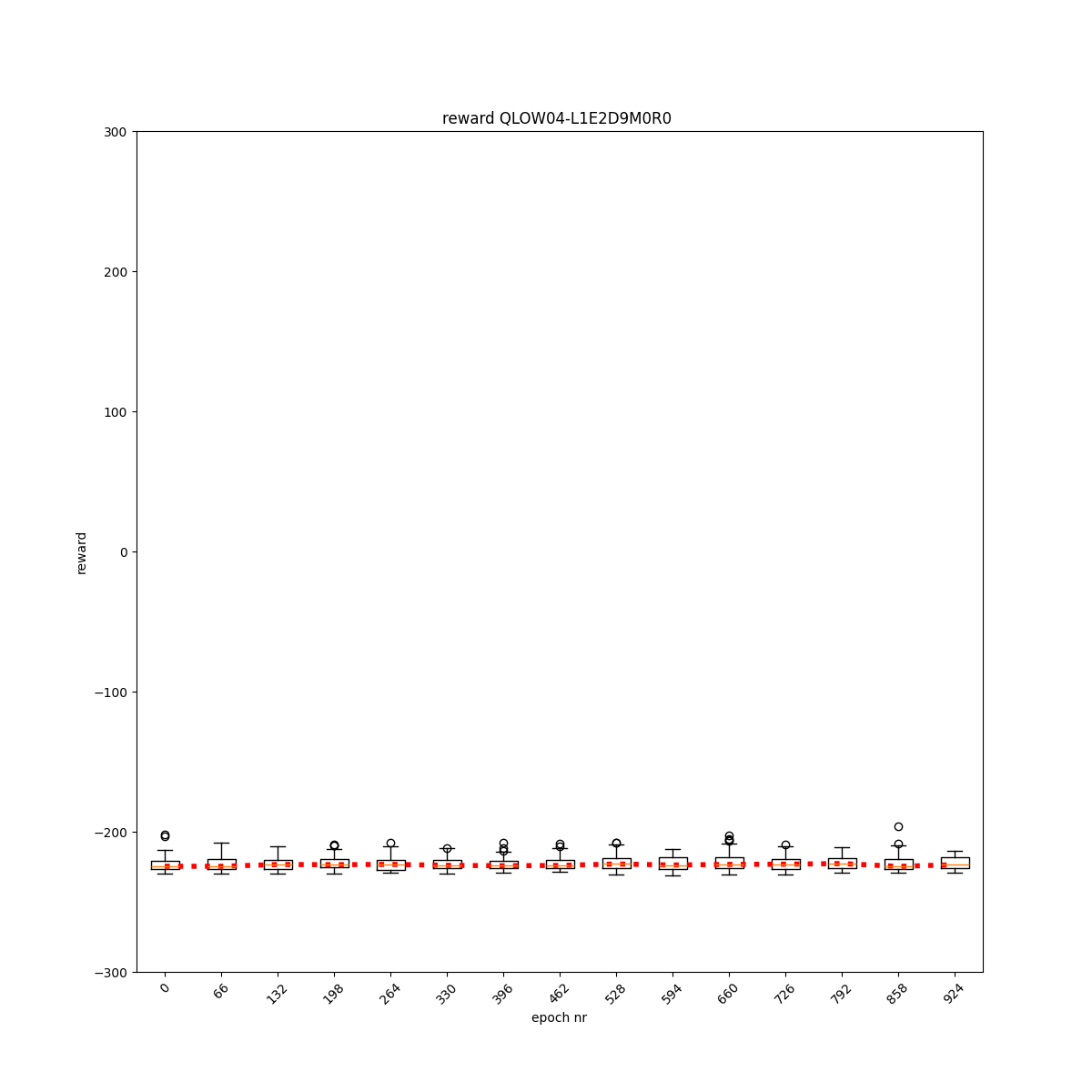

Figure panel L0 E2 D9 M0 R0: two q-values grids, each showing video 0 through video 11.

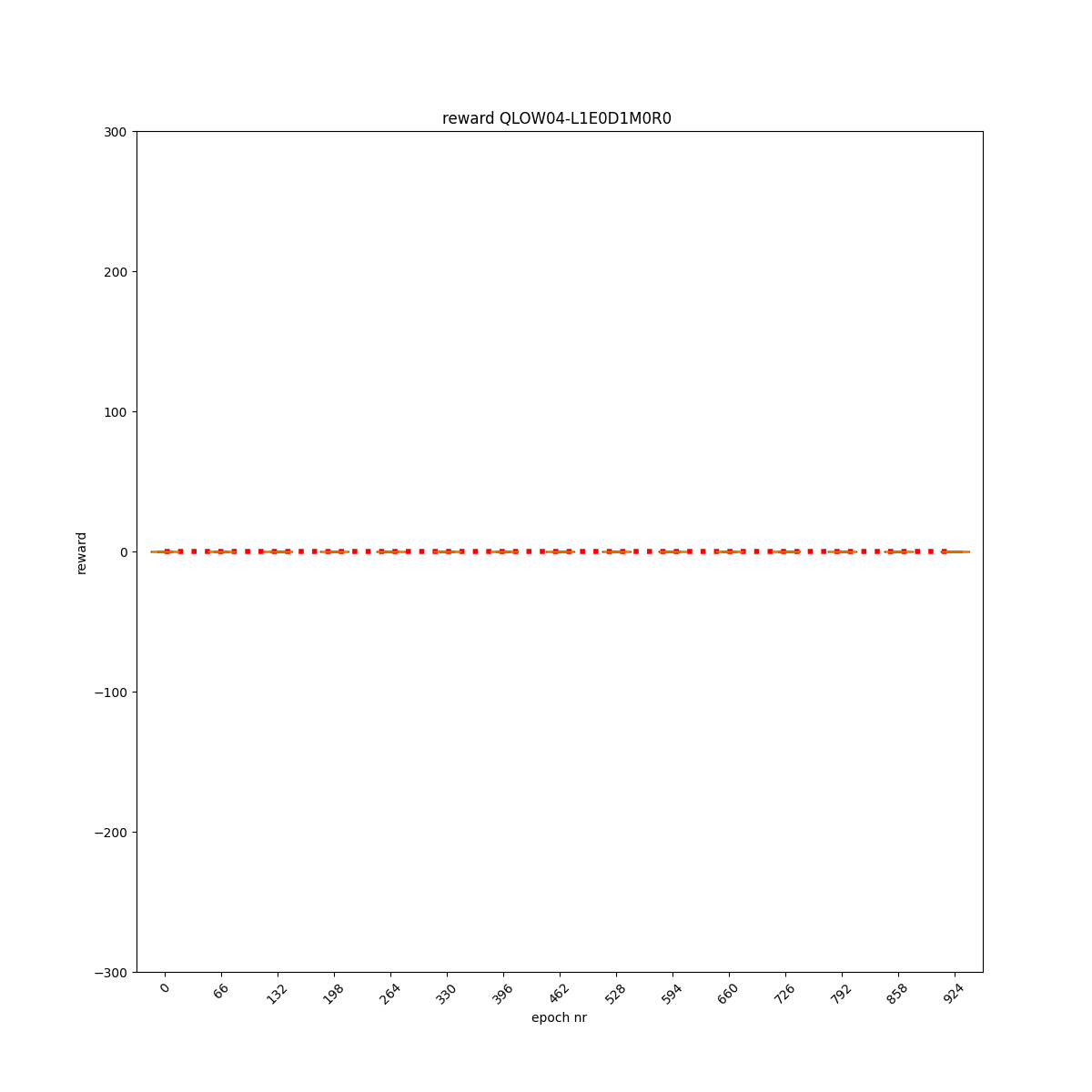

Figure panel L1 E0 D0 M0 R0: two q-values grids, each showing video 0 through video 11.

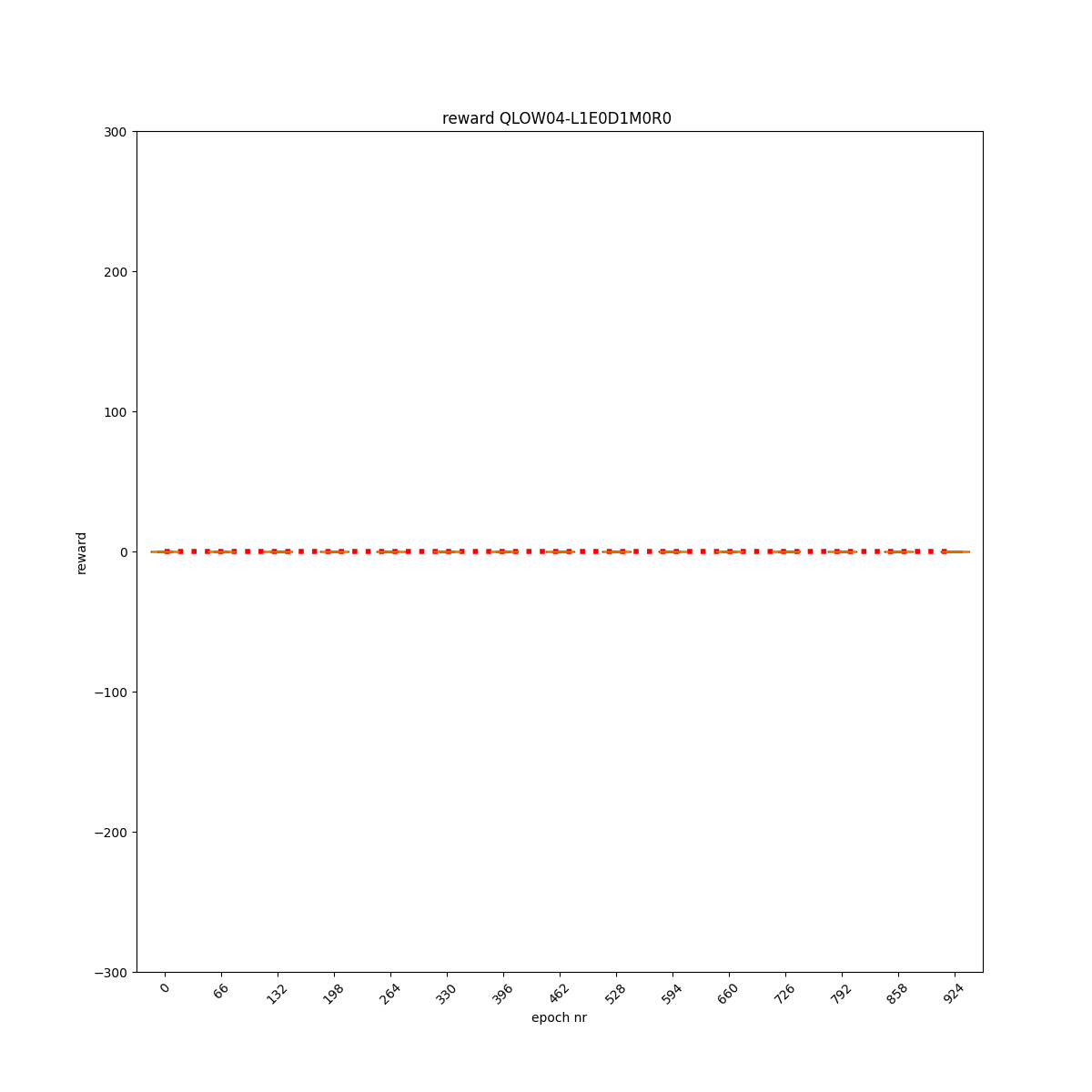

Figure panel L1 E0 D1 M0 R0: two q-values grids, each showing video 0 through video 11.

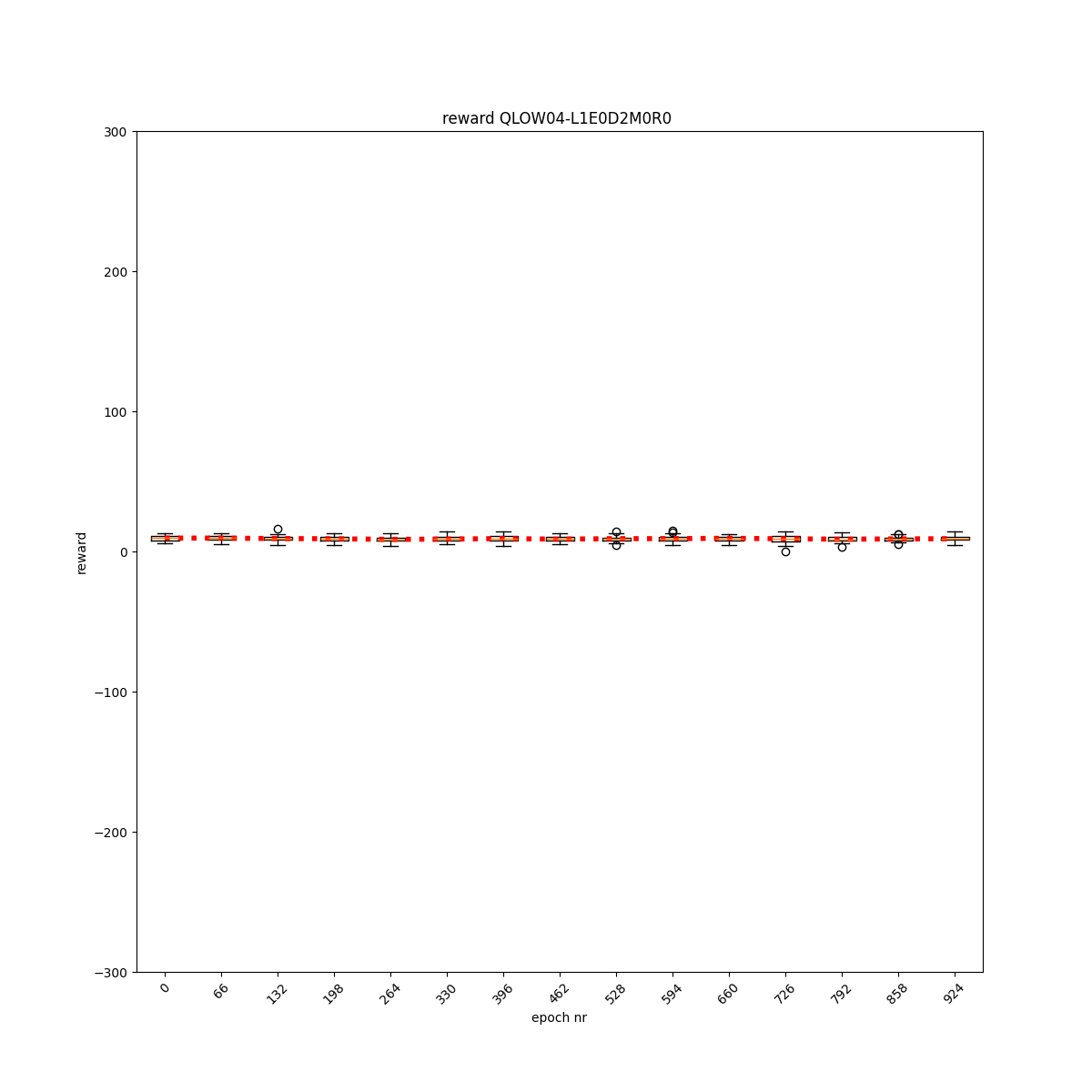

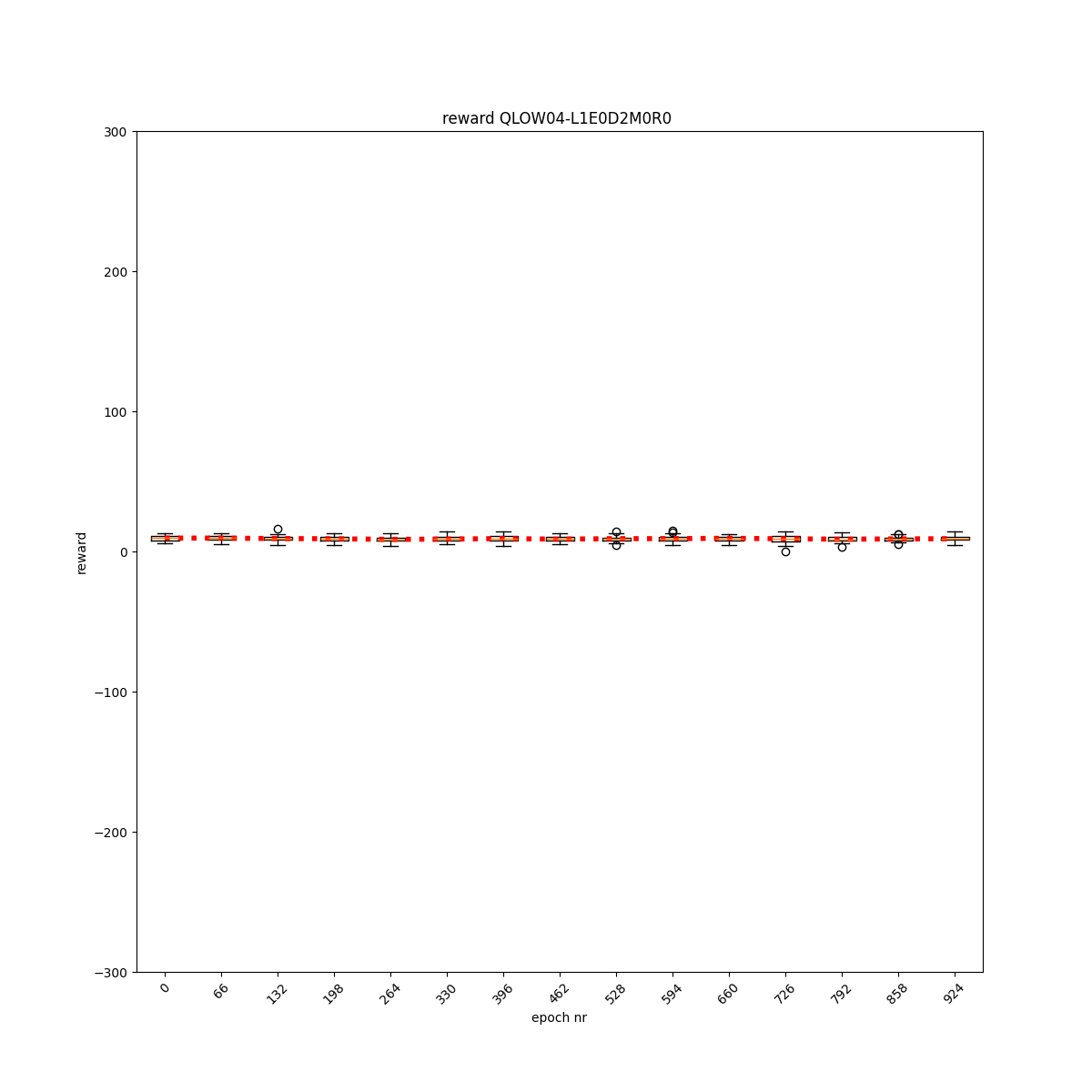

Figure panel L1 E0 D2 M0 R0: two q-values grids, each showing video 0 through video 11.

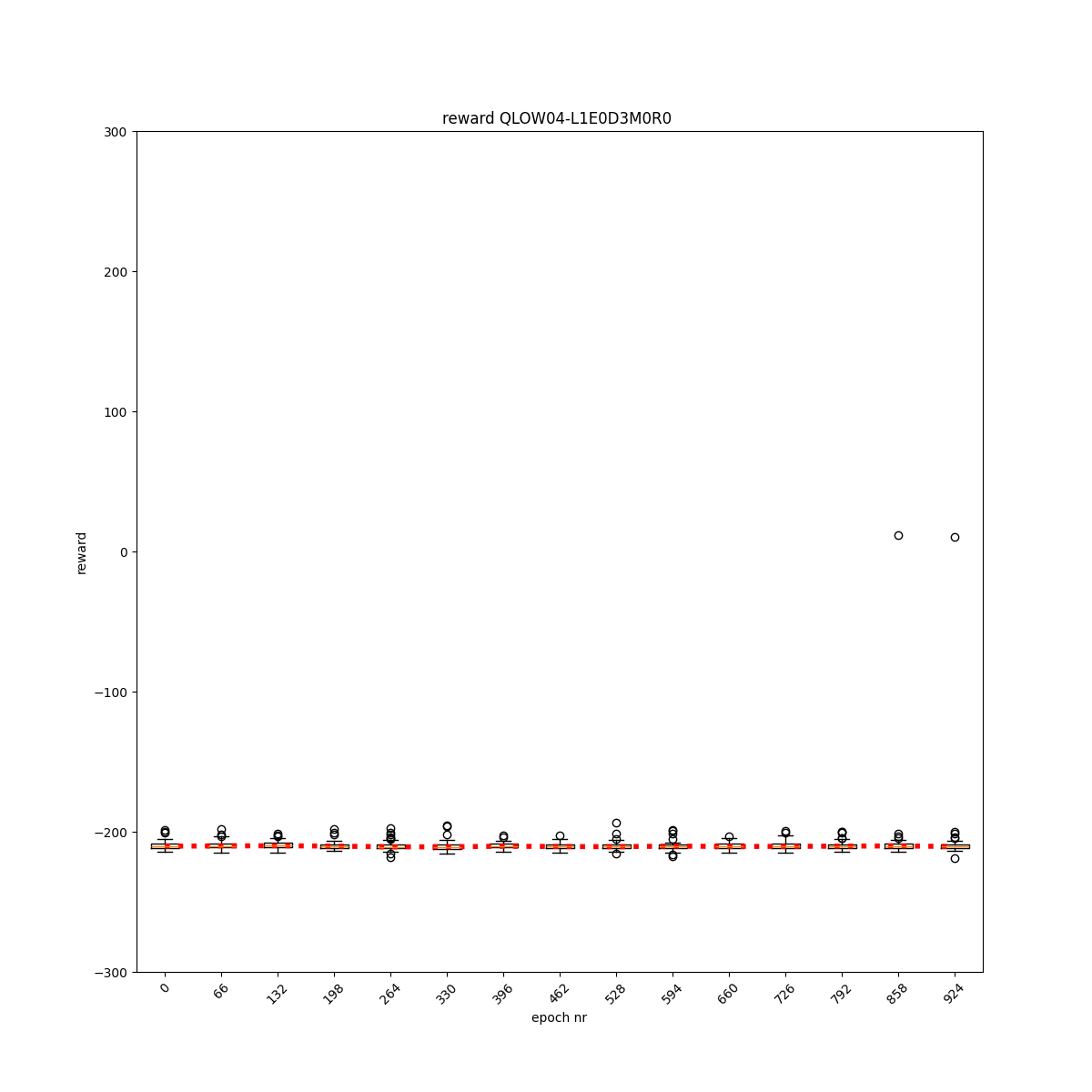

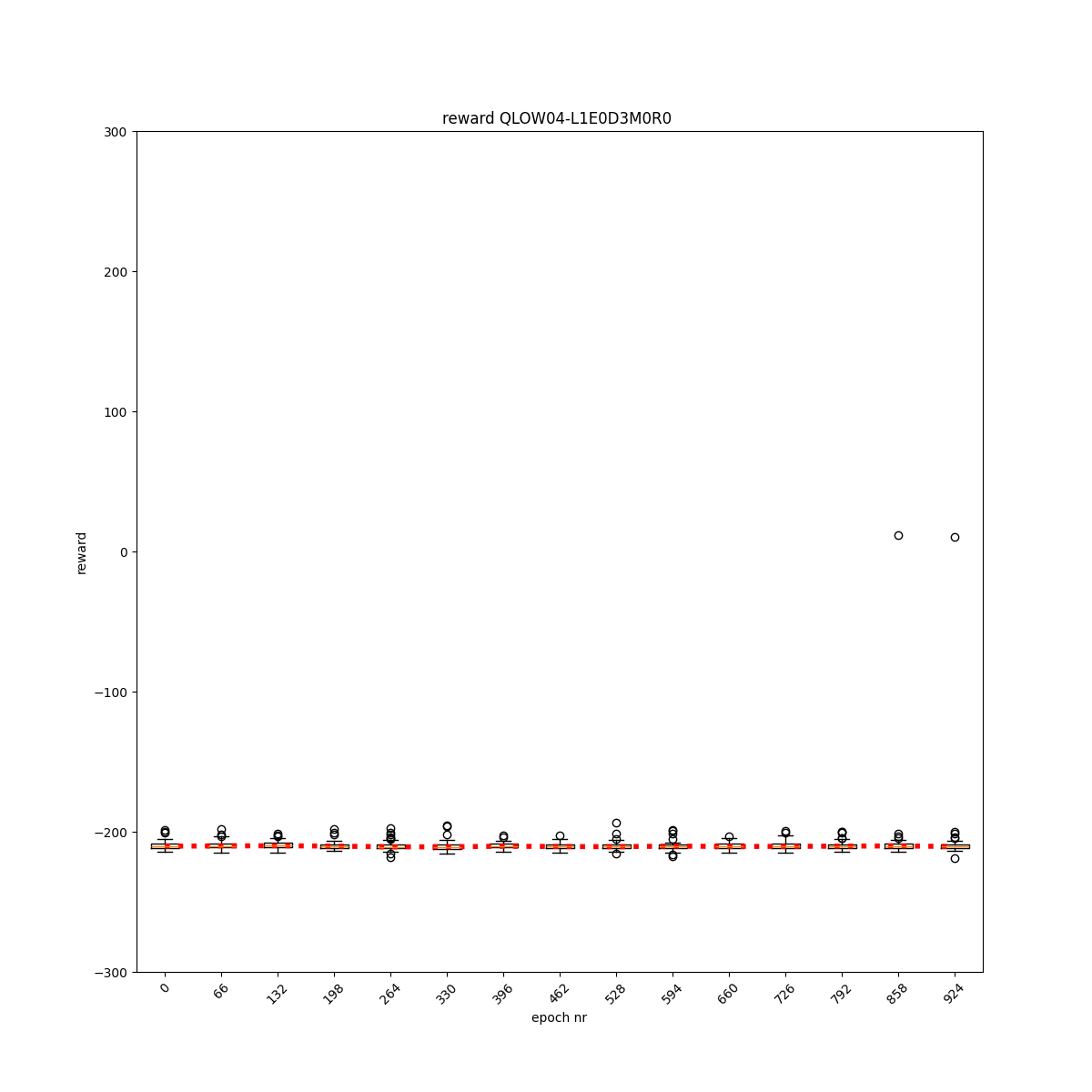

Figure panel L1 E0 D3 M0 R0: two q-values grids, each showing video 0 through video 11.

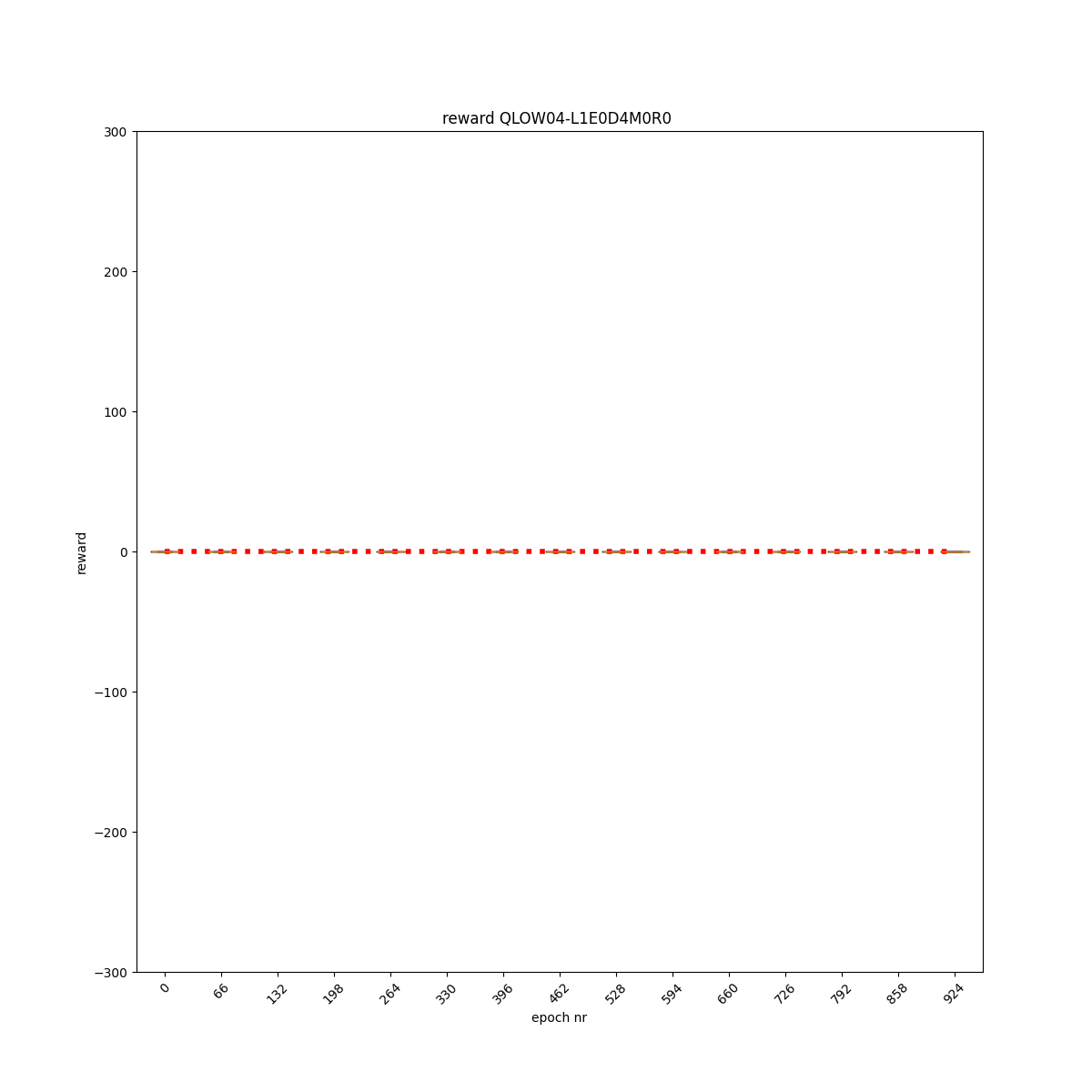

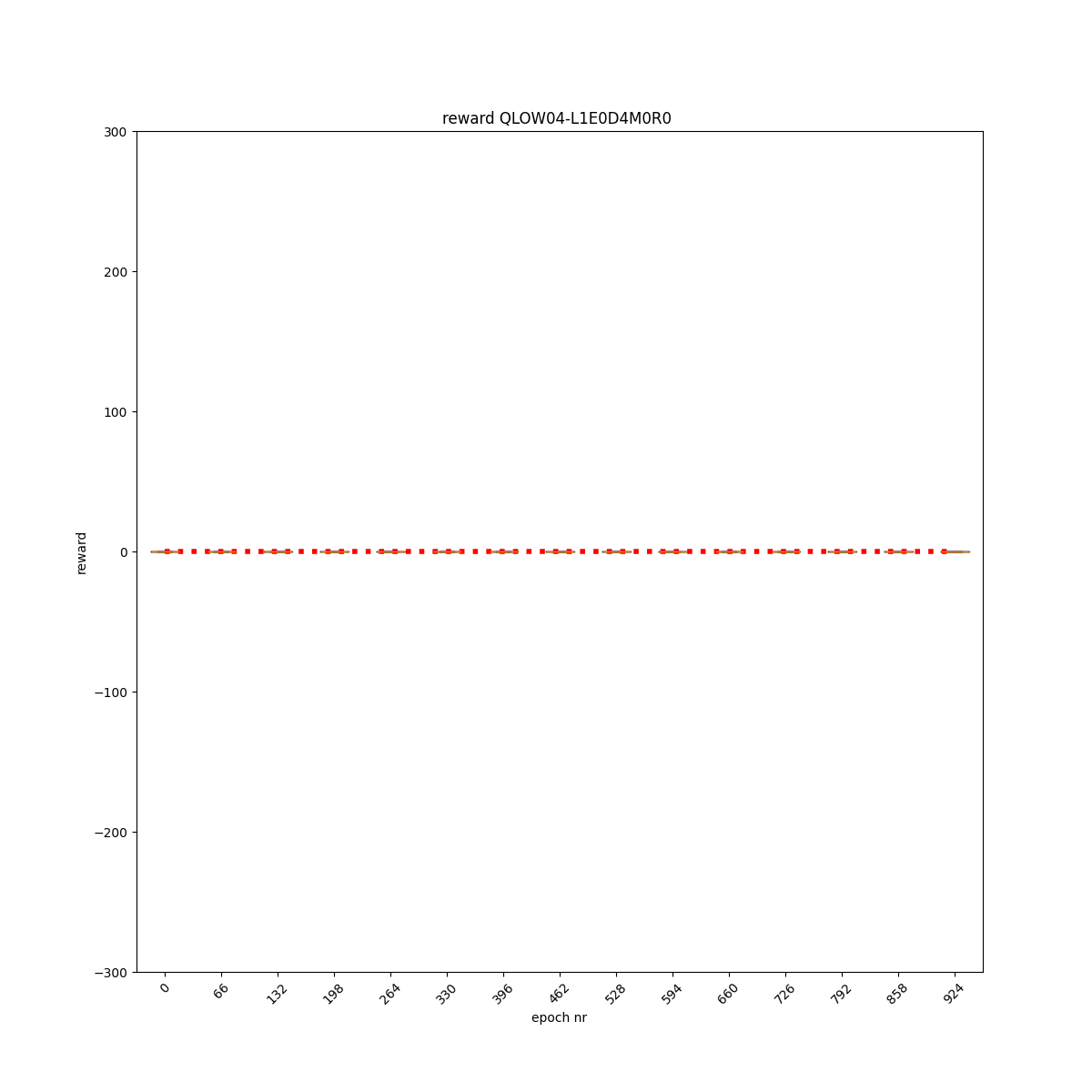

Figure panel L1 E0 D4 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L1 E0 D5 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L1 E0 D6 M0 R0: two q-values grids, each showing video 0 through video 11.

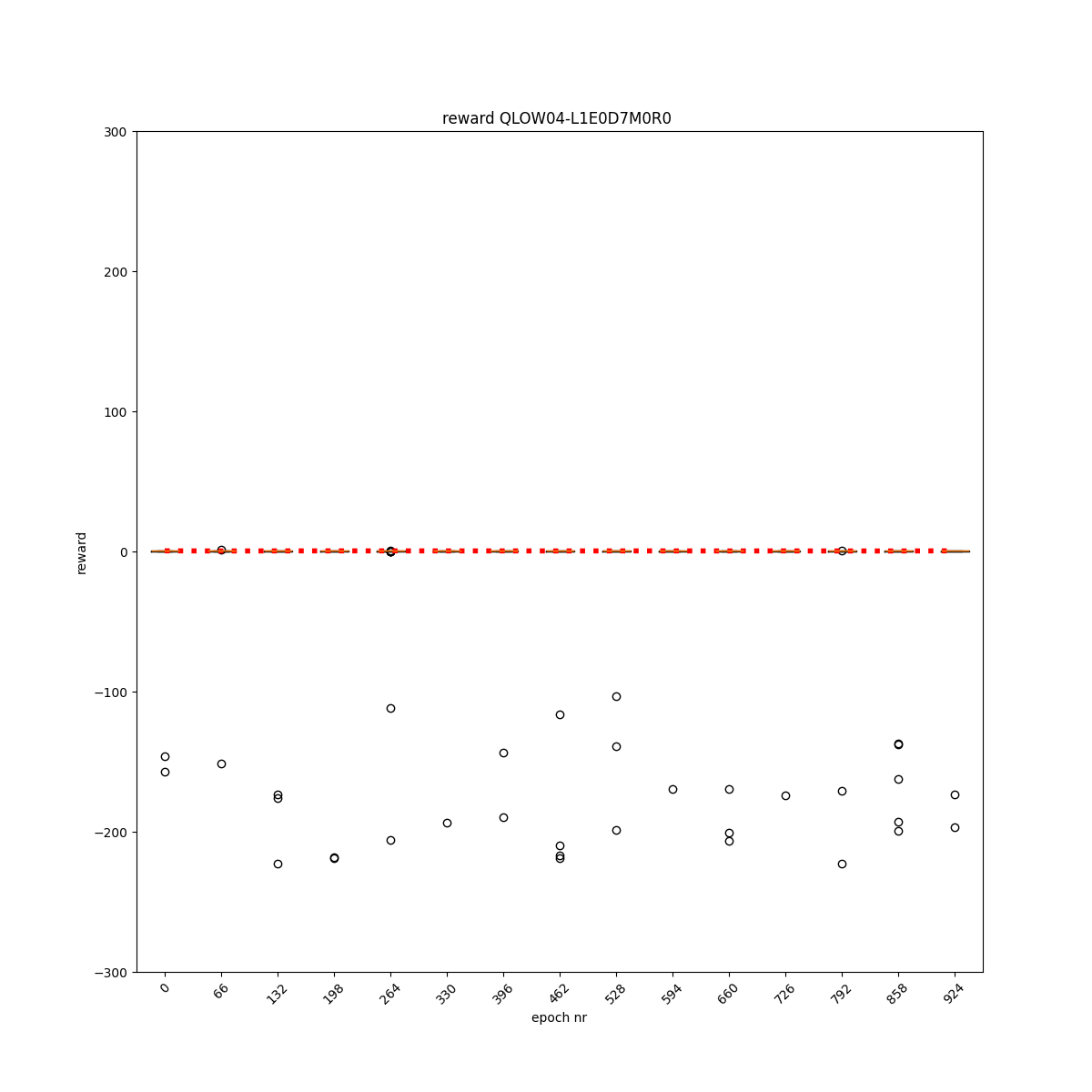

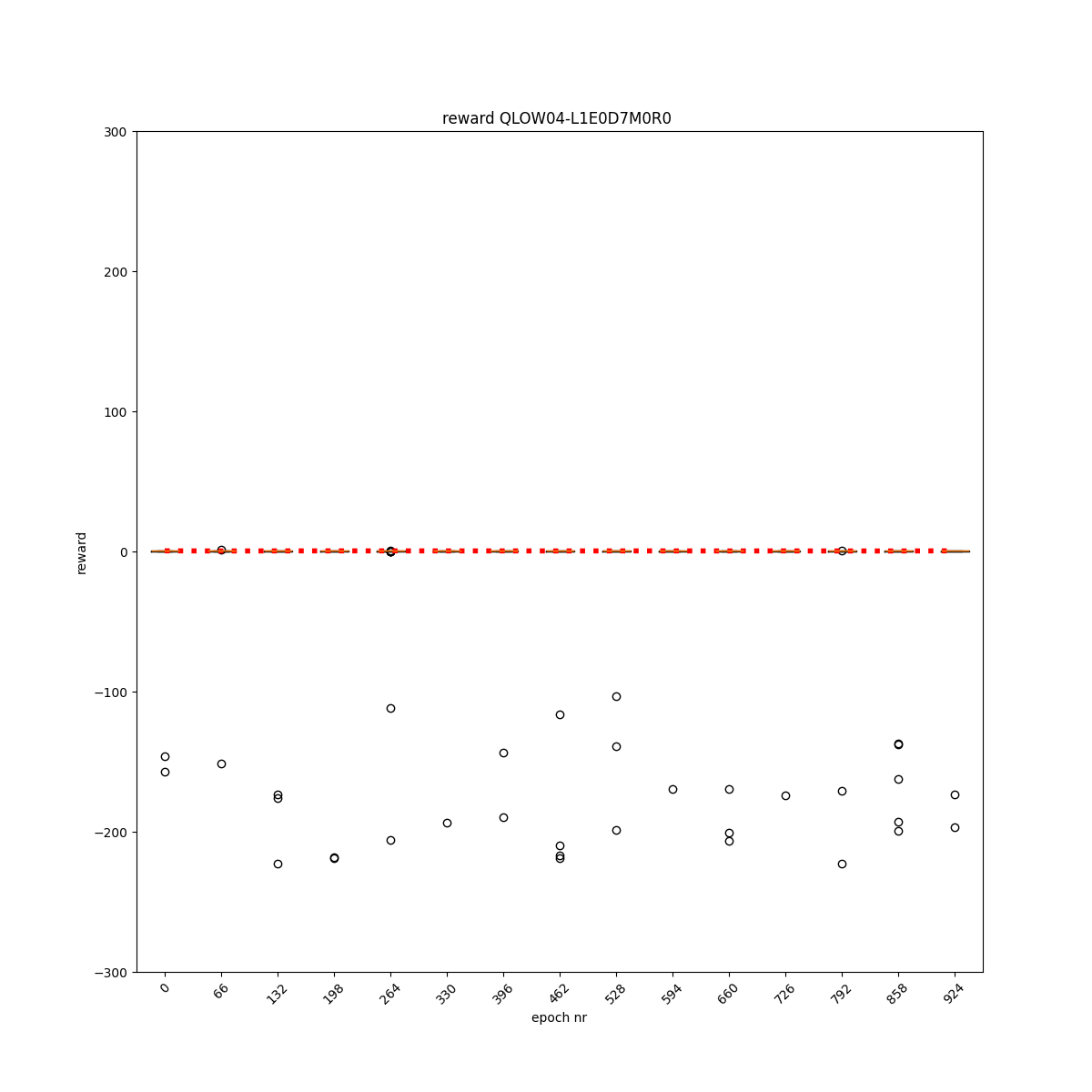

Figure panel L1 E0 D7 M0 R0: two q-values grids, each showing video 0 through video 11.

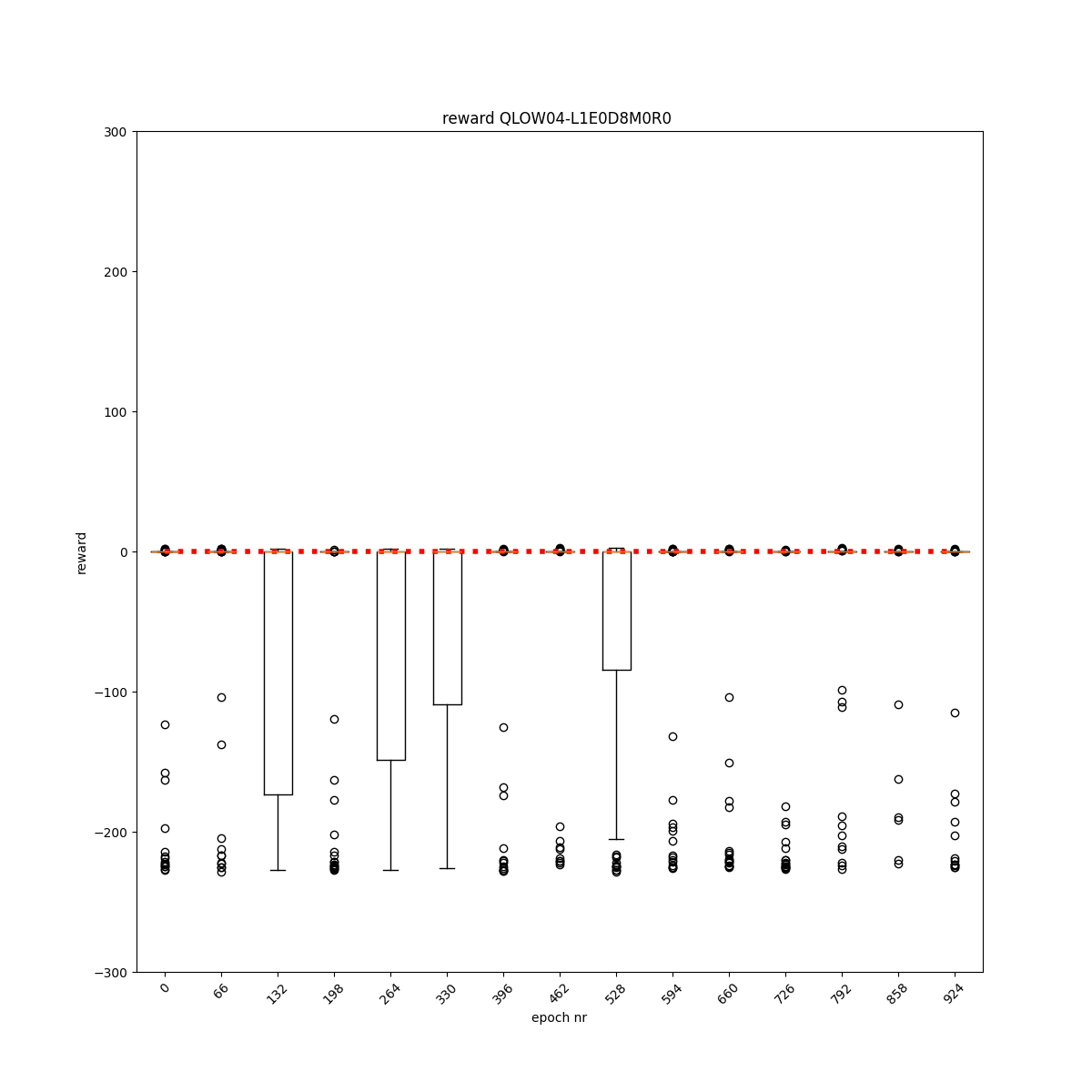

Figure panel L1 E0 D8 M0 R0: two q-values grids, each showing video 0 through video 11.

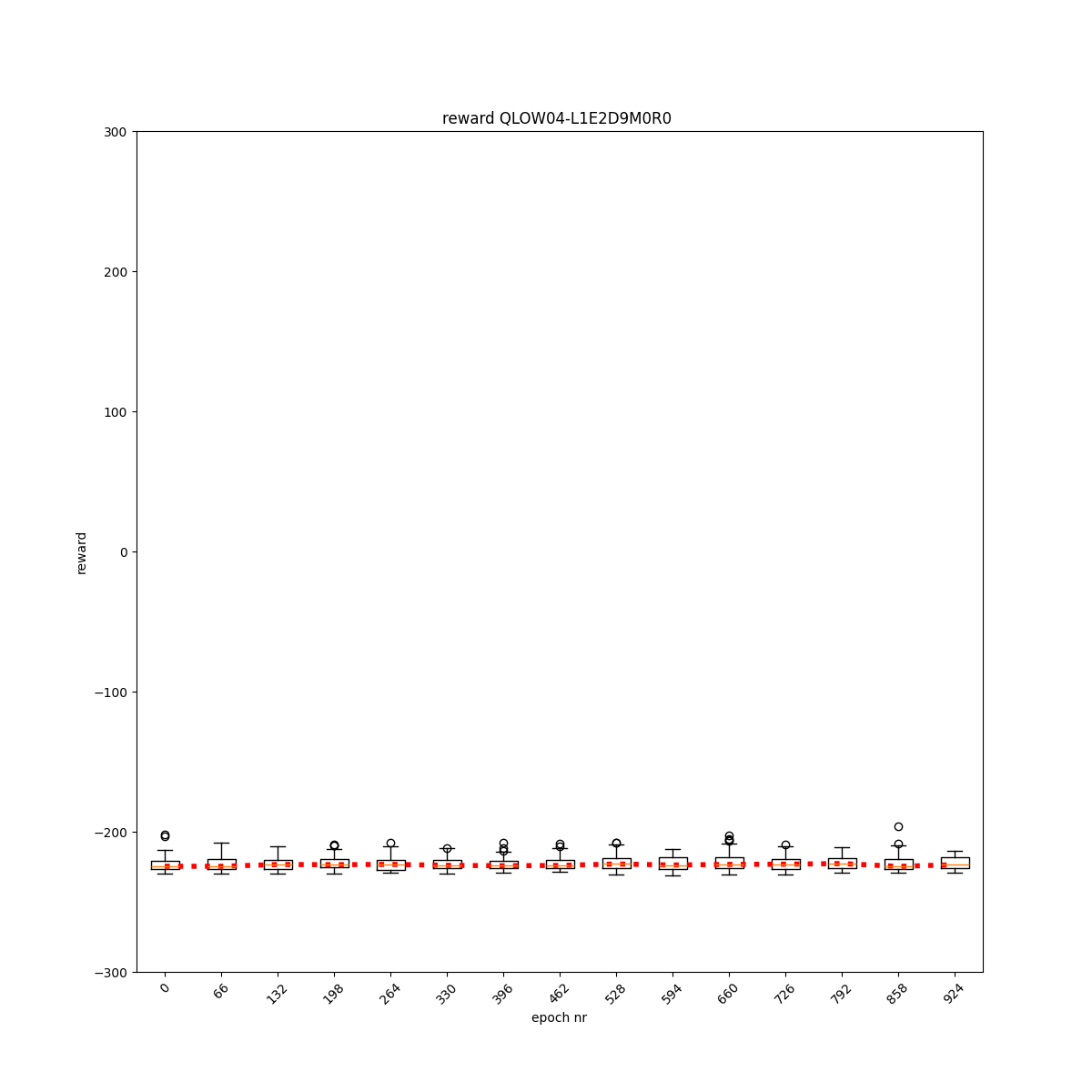

Figure panel L1 E0 D9 M0 R0: two q-values grids, each showing video 0 through video 11.

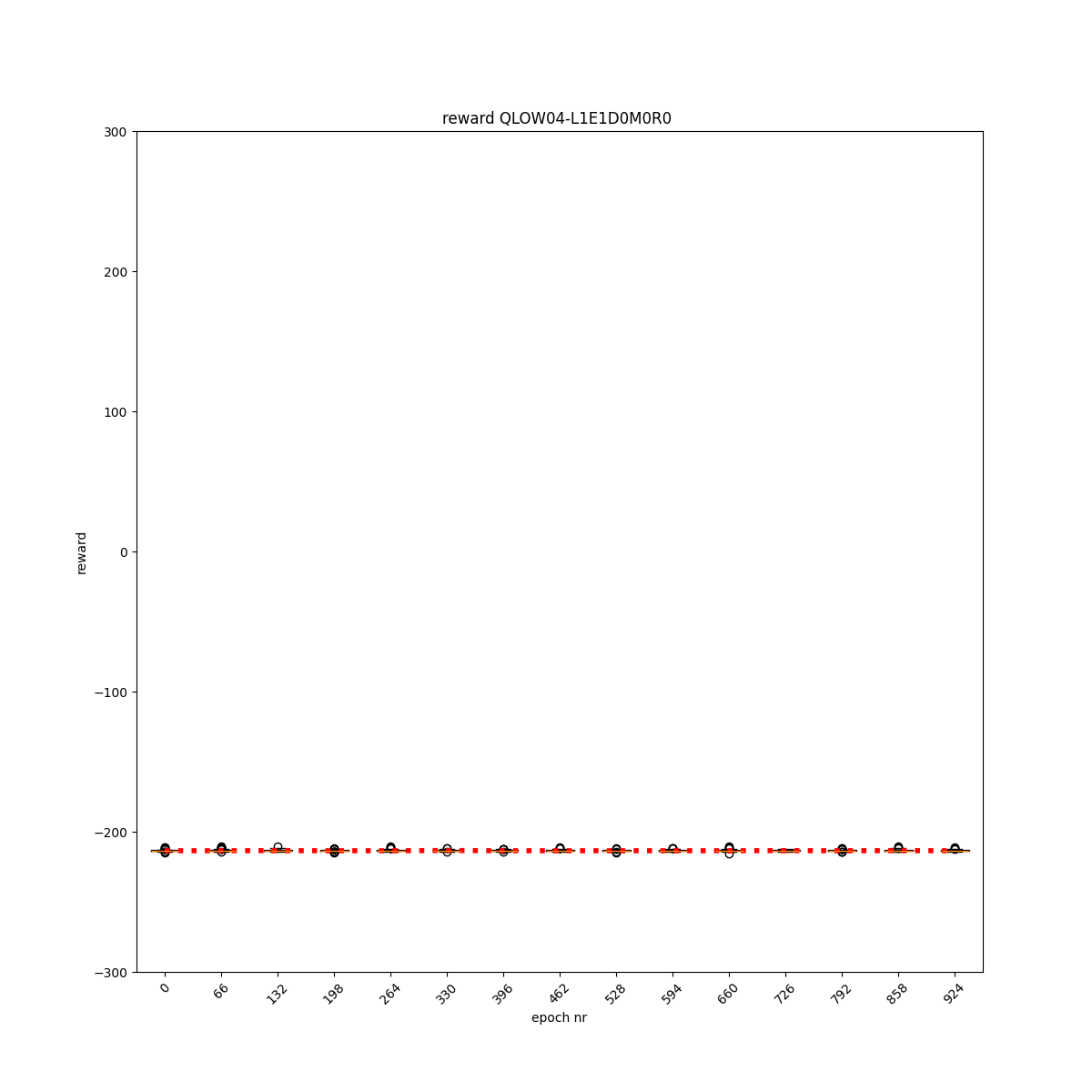

Figure panel L1 E1 D0 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L1 E1 D1 M0 R0: two q-values grids, each showing video 0 through video 11.

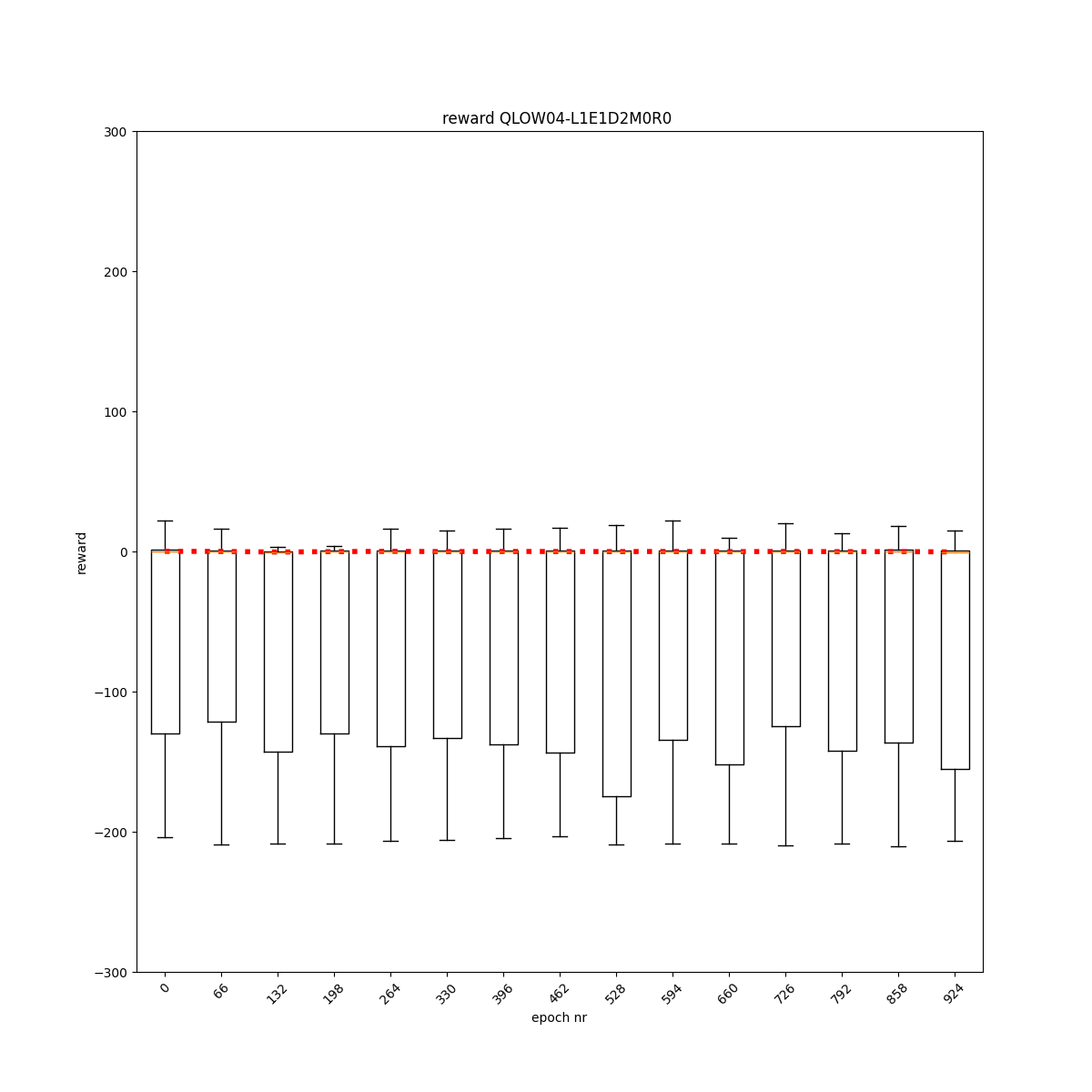

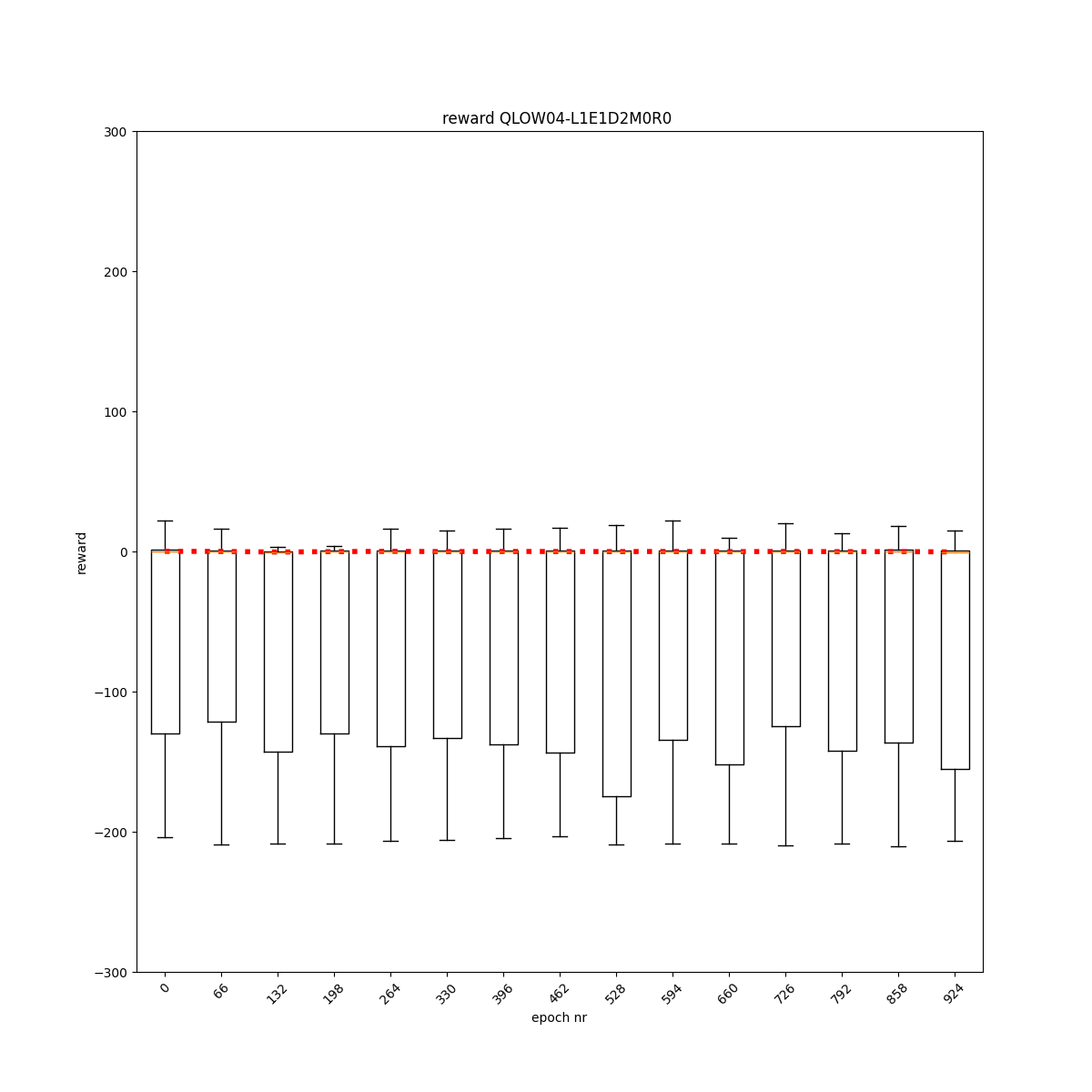

Figure panel L1 E1 D2 M0 R0: two q-values grids, each showing video 0 through video 11.

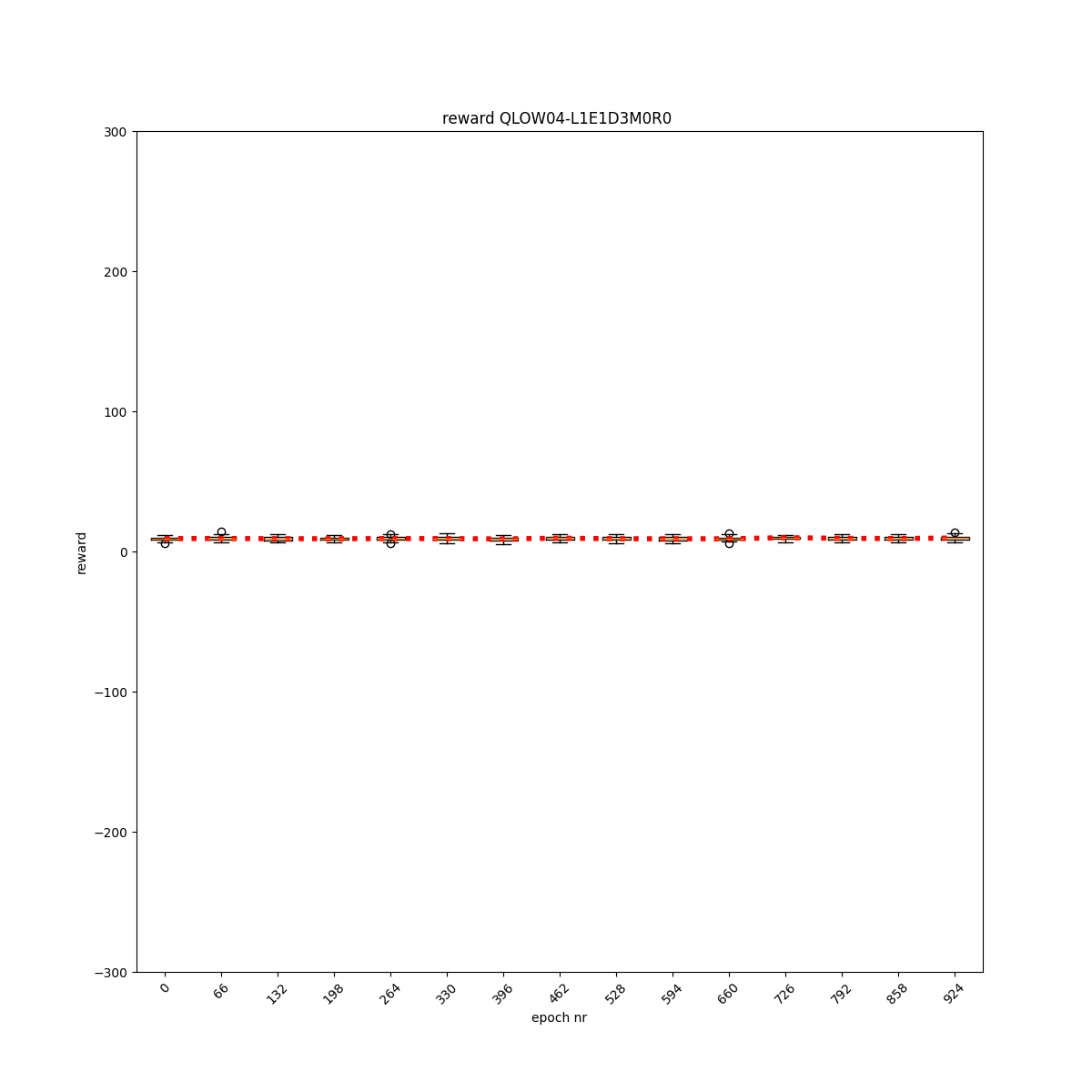

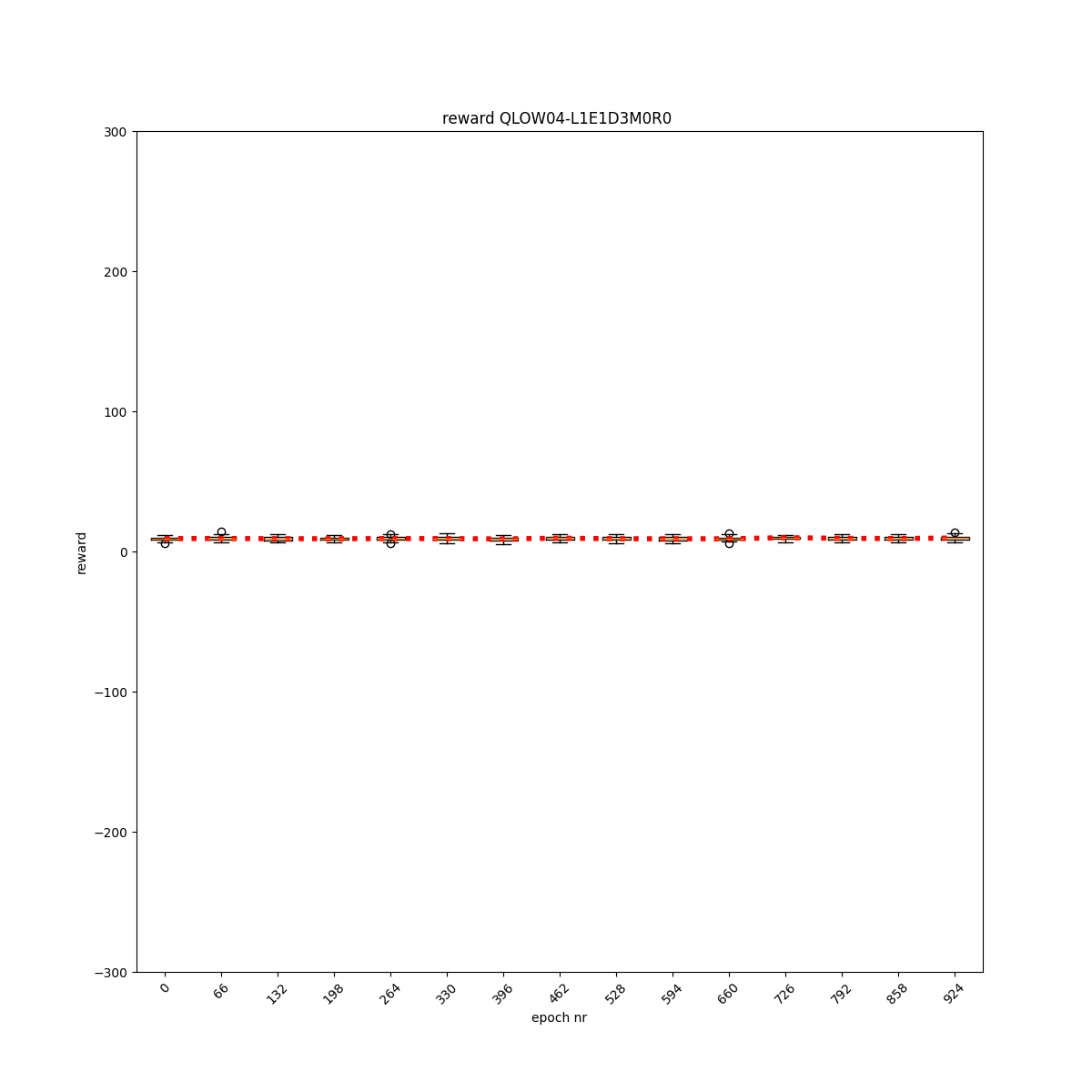

Figure panel L1 E1 D3 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L1 E1 D4 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L1 E1 D5 M0 R0: two q-values grids, each showing video 0 through video 11.

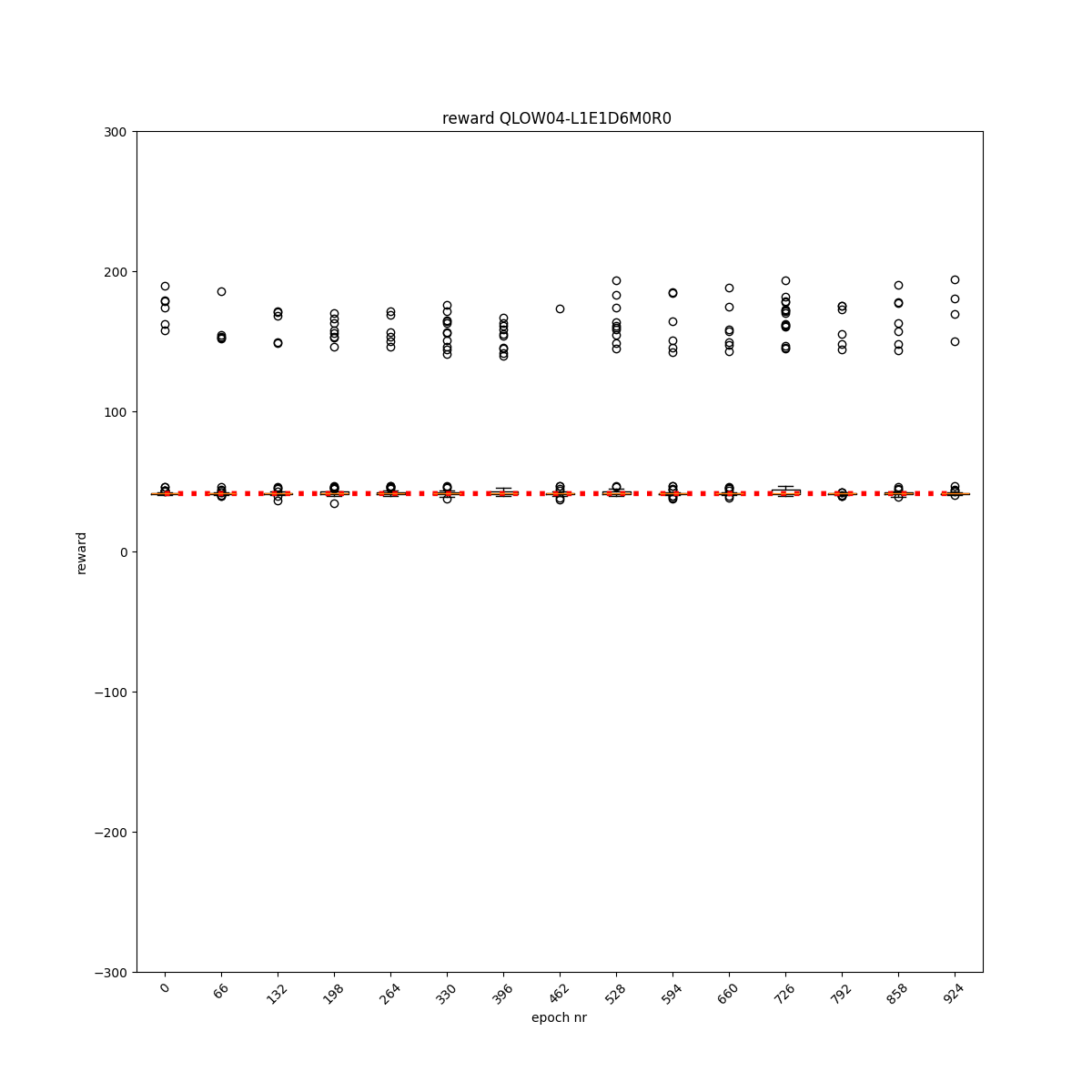

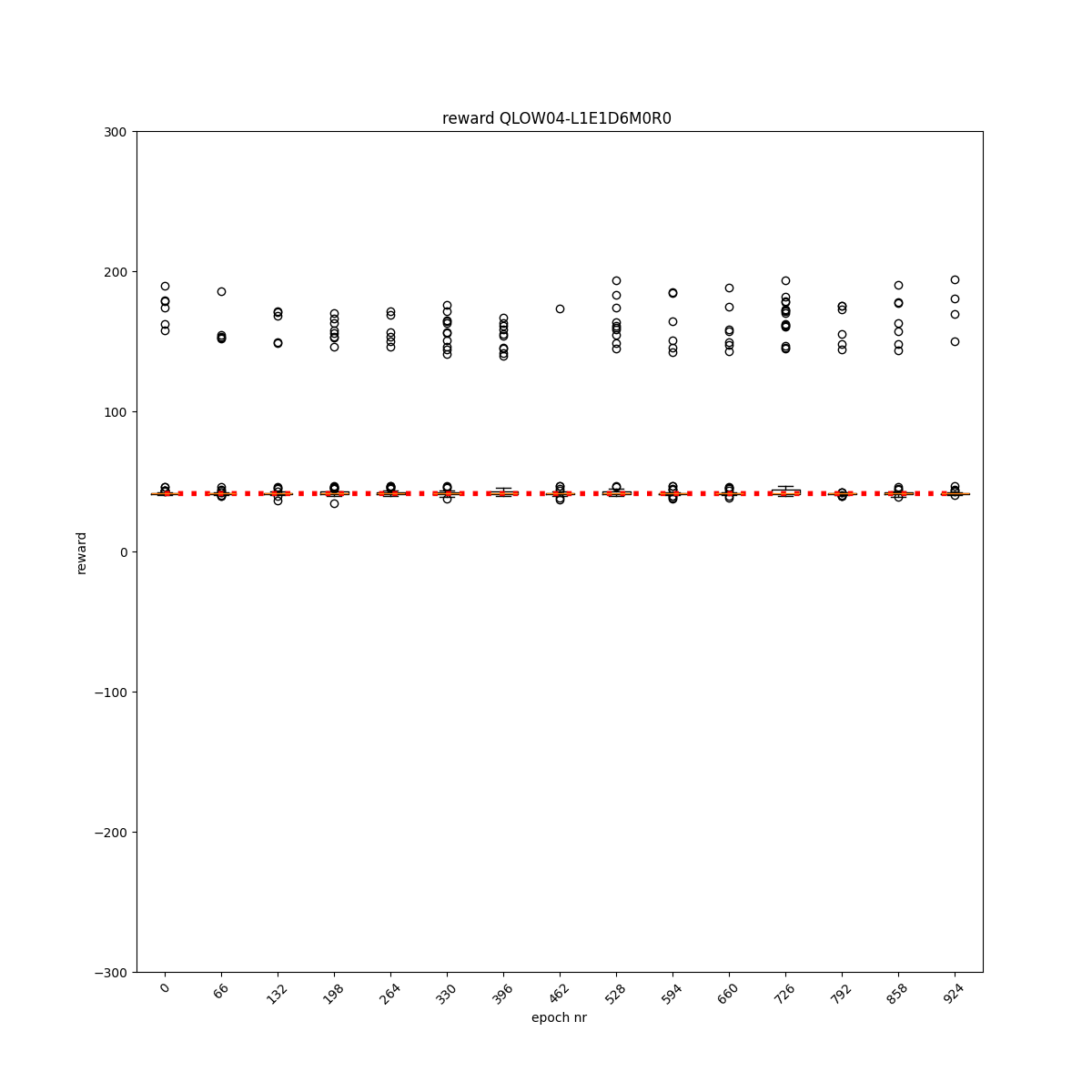

Figure panel L1 E1 D6 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L1 E1 D7 M0 R0: two q-values grids, each showing video 0 through video 11.

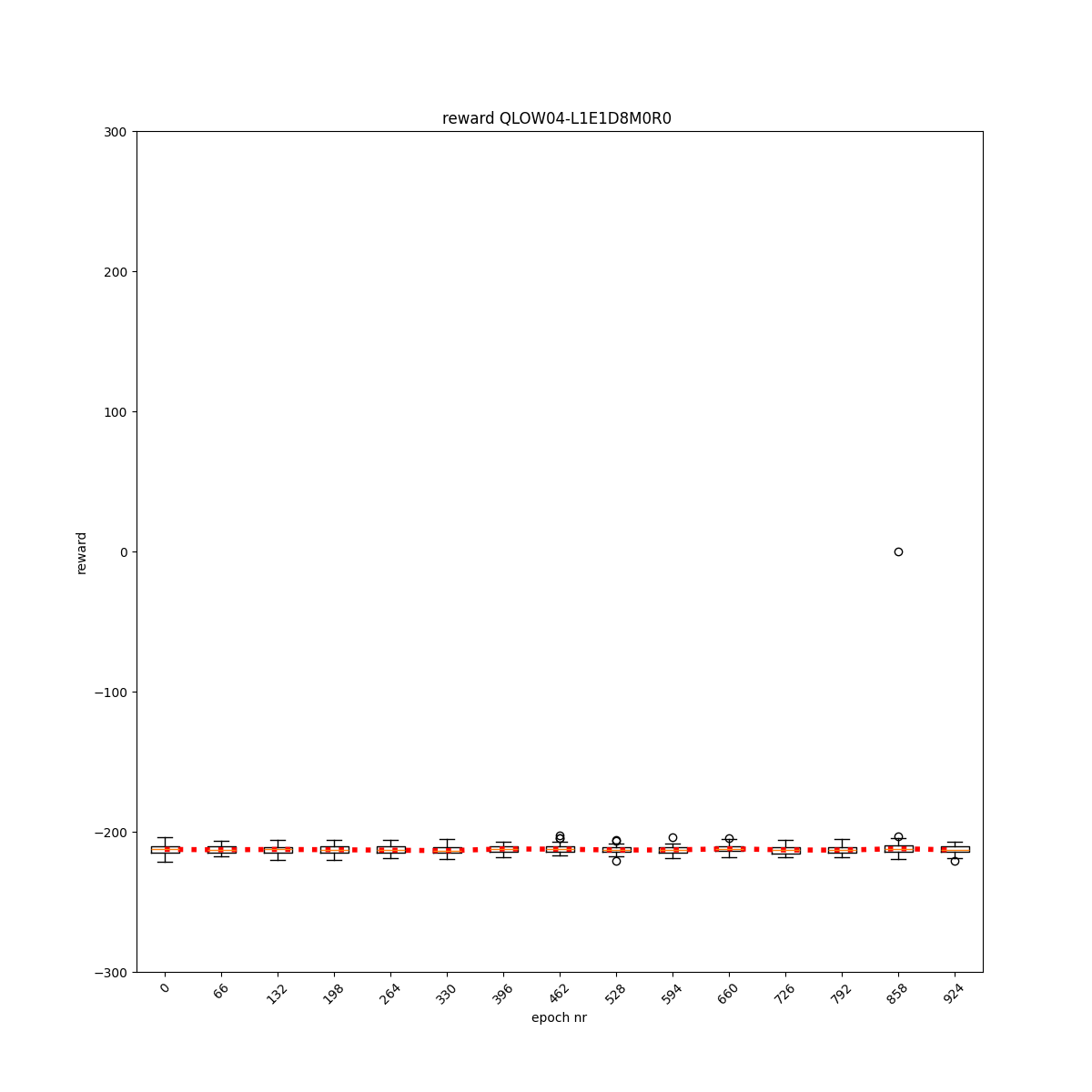

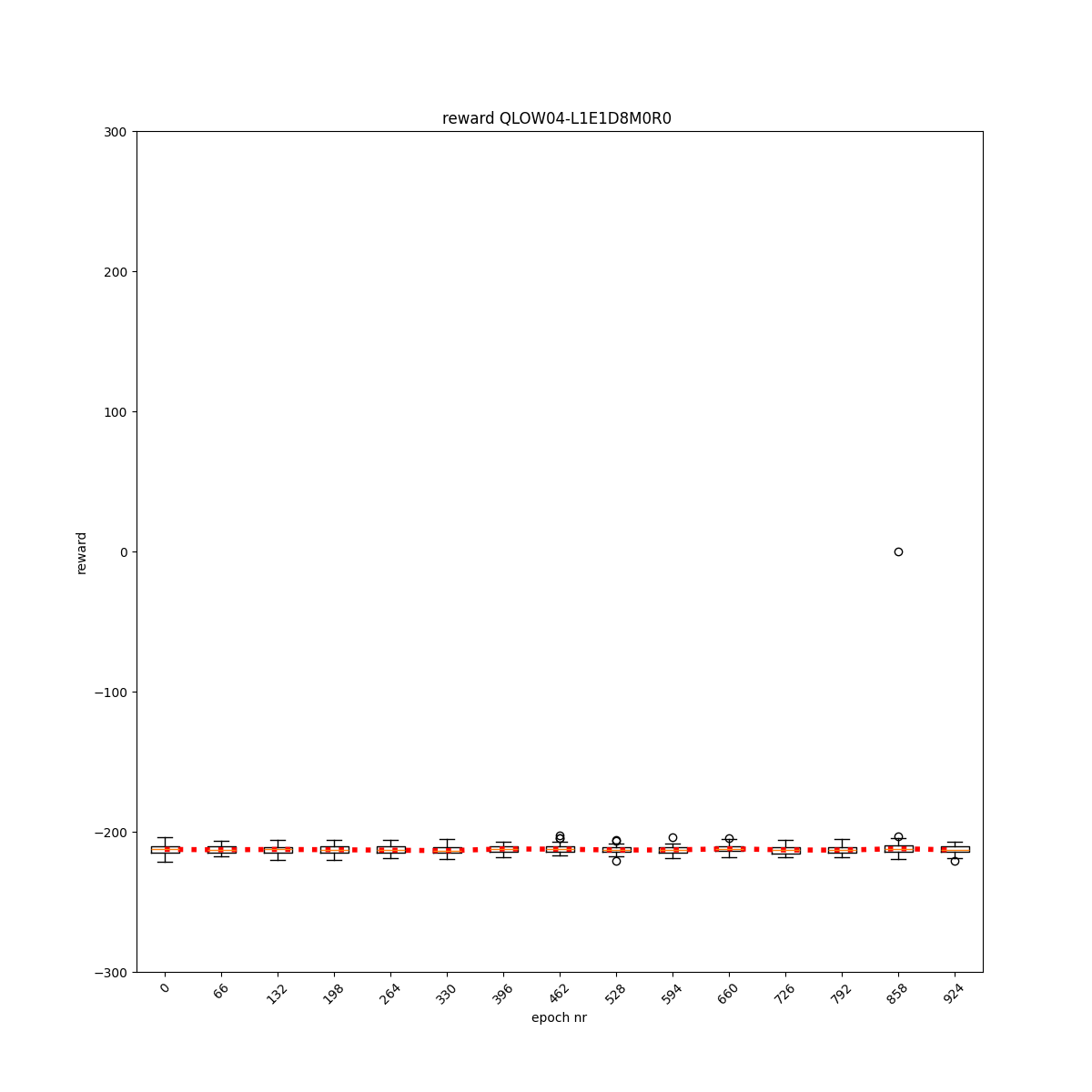

Figure panel L1 E1 D8 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L1 E1 D9 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L1 E2 D0 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L1 E2 D1 M0 R0: two q-values grids, each showing video 0 through video 11.

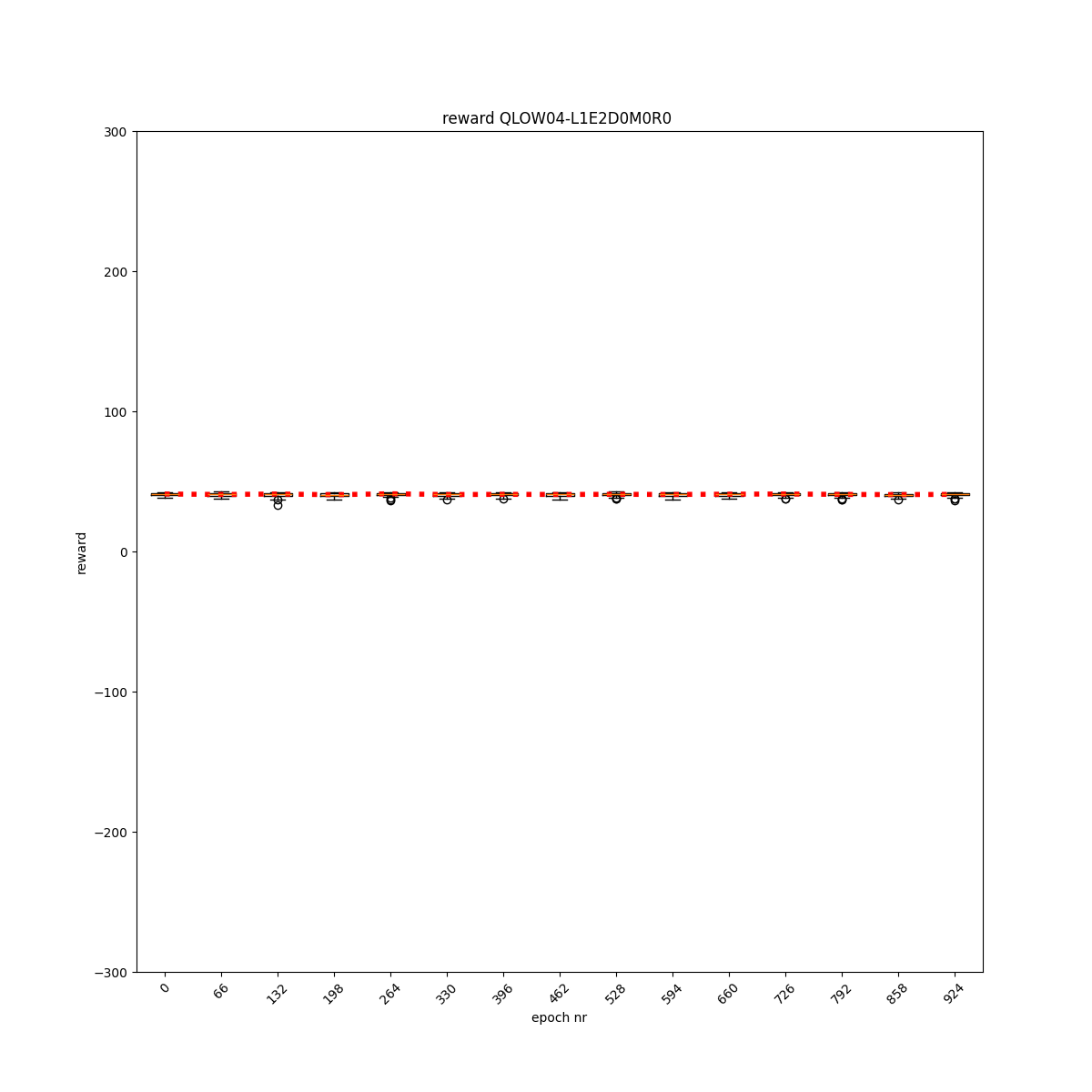

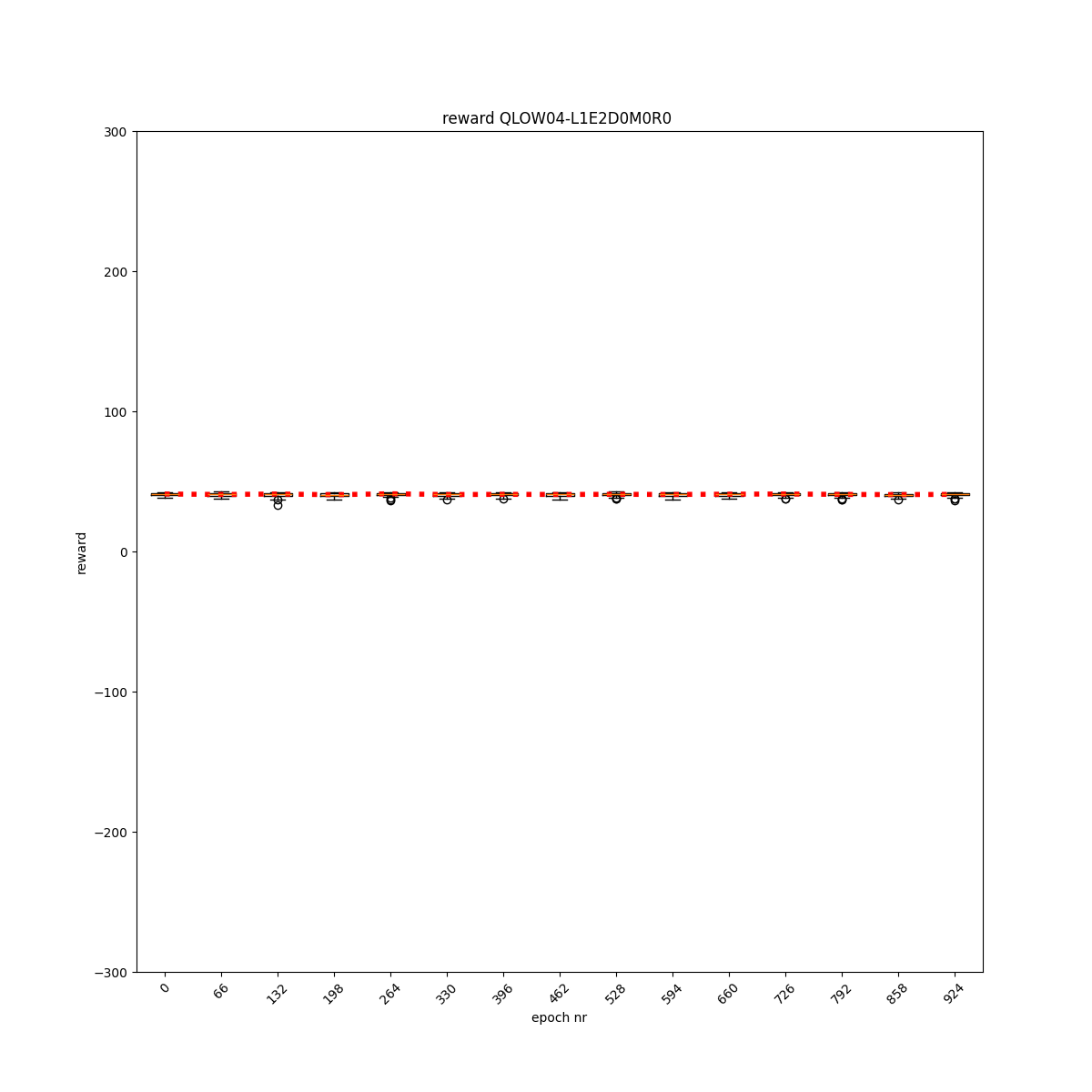

Figure panel L1 E2 D2 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L1 E2 D3 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L1 E2 D4 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L1 E2 D5 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L1 E2 D6 M0 R0: two q-values grids, each showing video 0 through video 11.

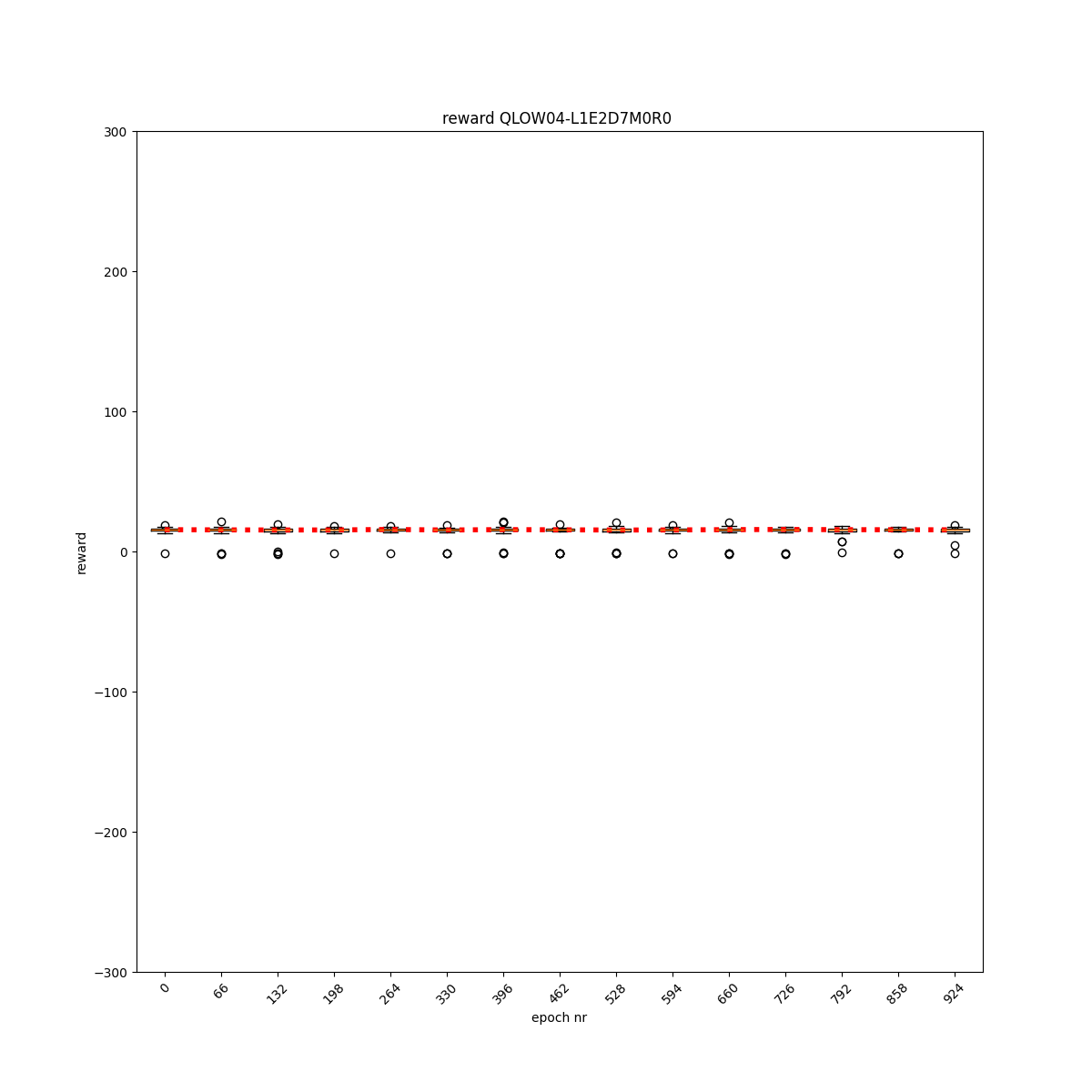

Figure panel L1 E2 D7 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L1 E2 D8 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L1 E2 D9 M0 R0: two q-values grids, each showing video 0 through video 11.

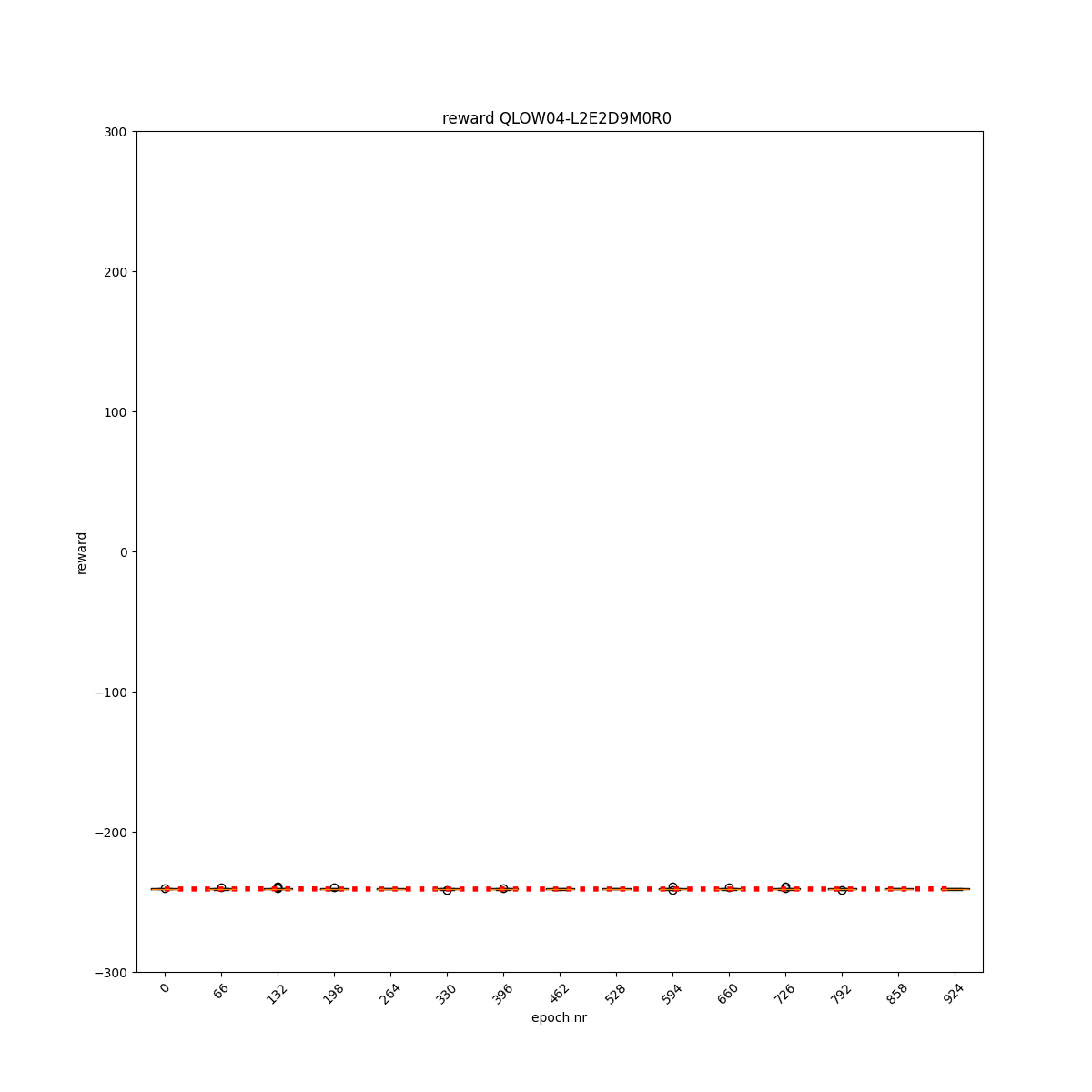

Figure panel L2 E0 D0 M0 R0: two q-values grids, each showing video 0 through video 11.

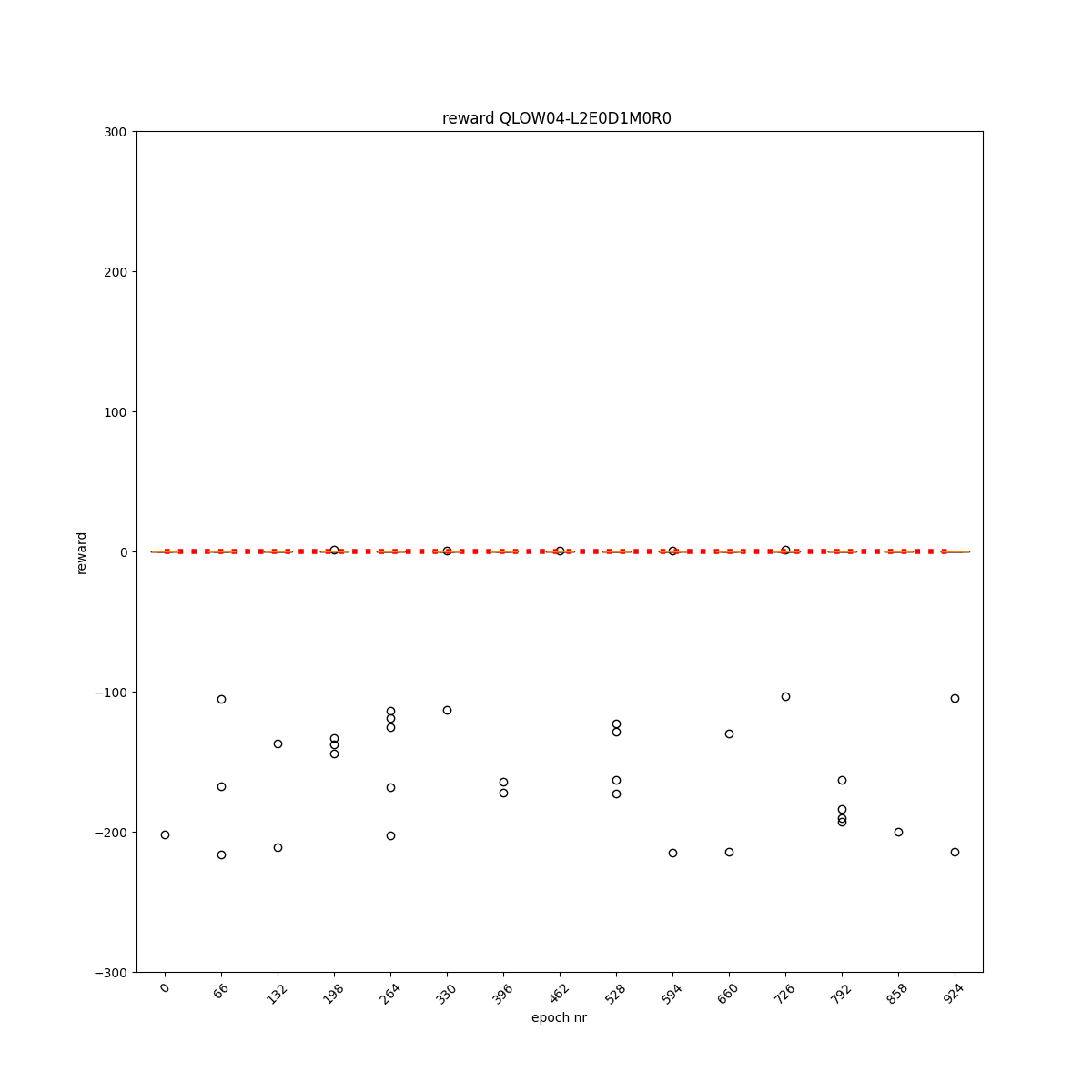

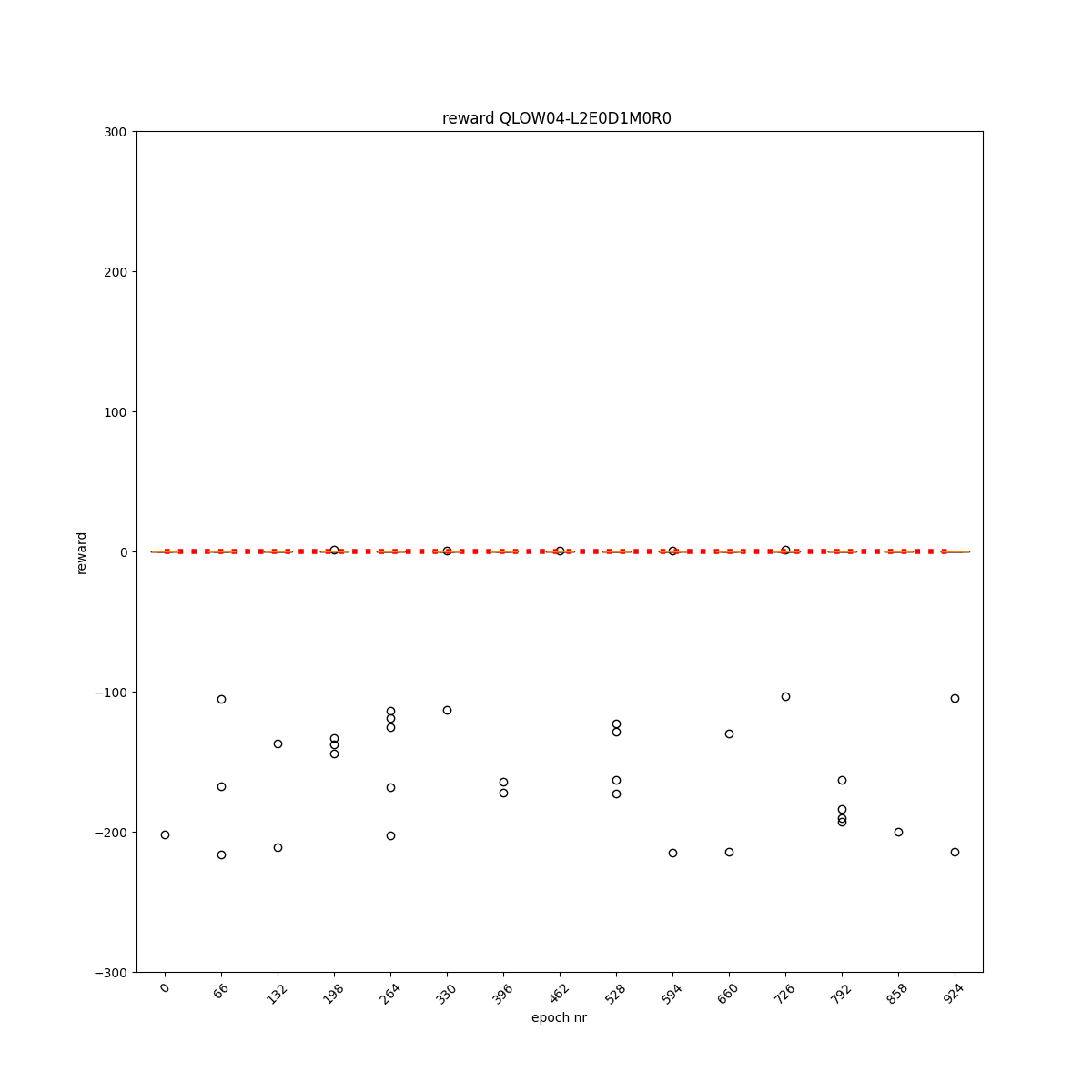

Figure panel L2 E0 D1 M0 R0: two q-values grids, each showing video 0 through video 11.

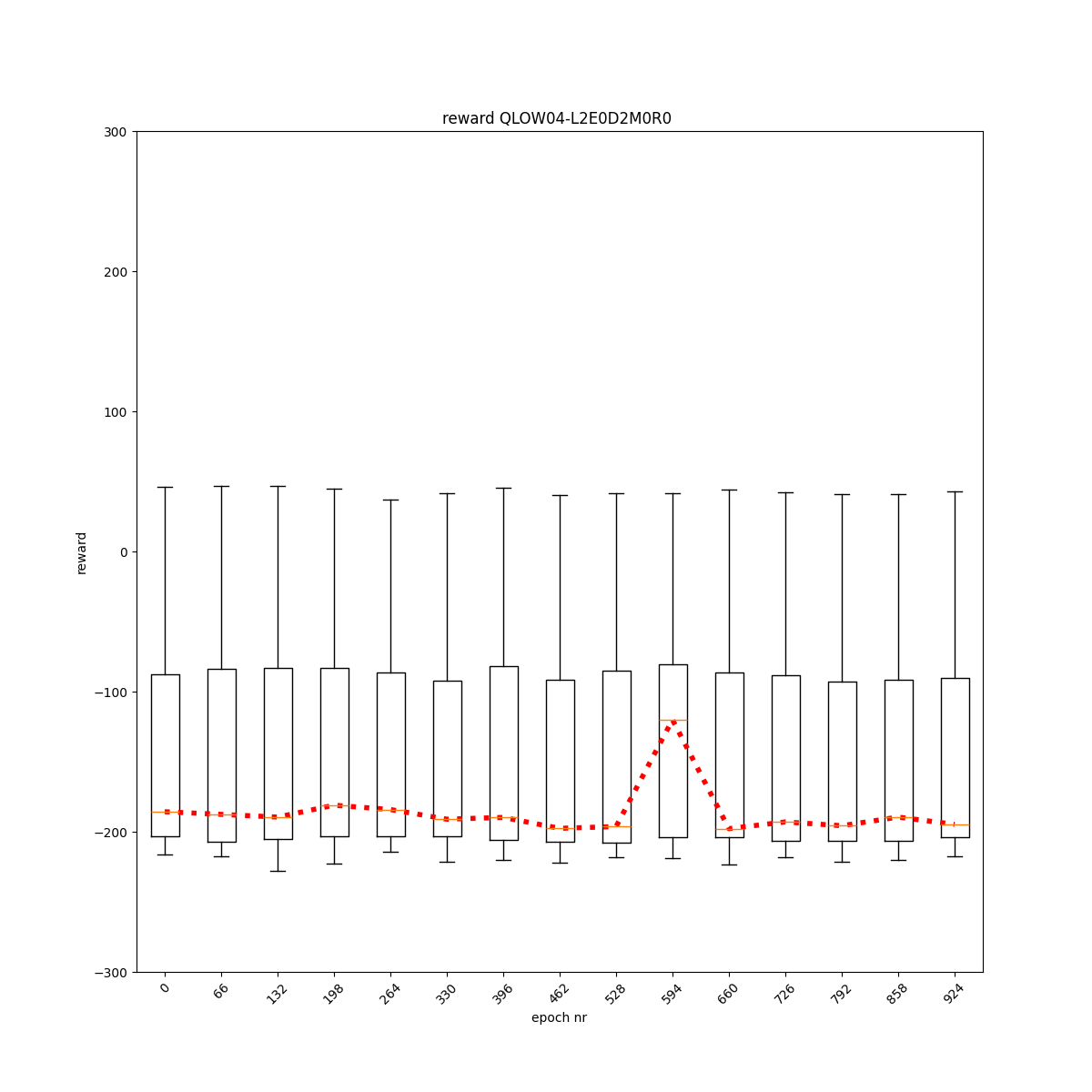

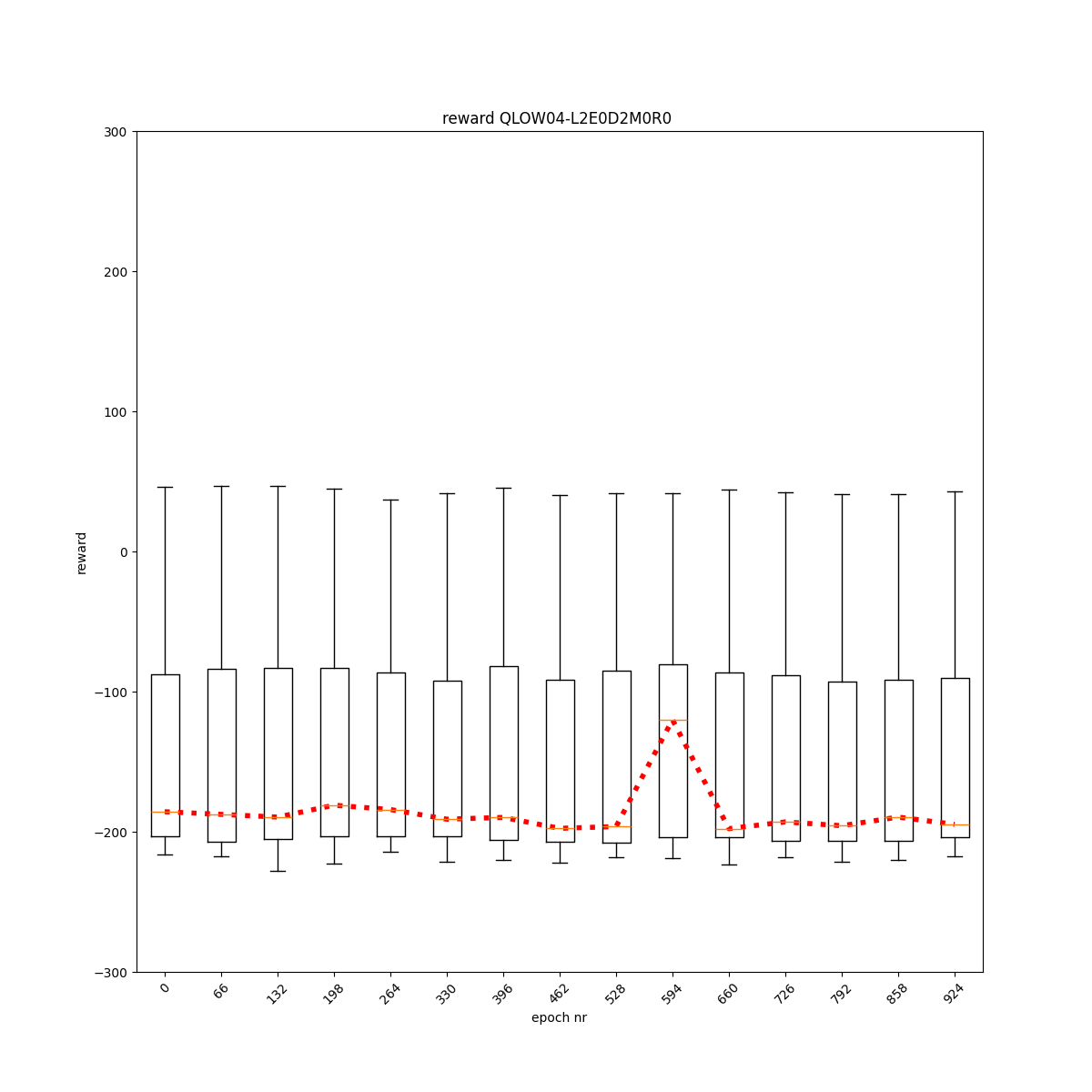

Figure panel L2 E0 D2 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L2 E0 D3 M0 R0: two q-values grids, each showing video 0 through video 11.

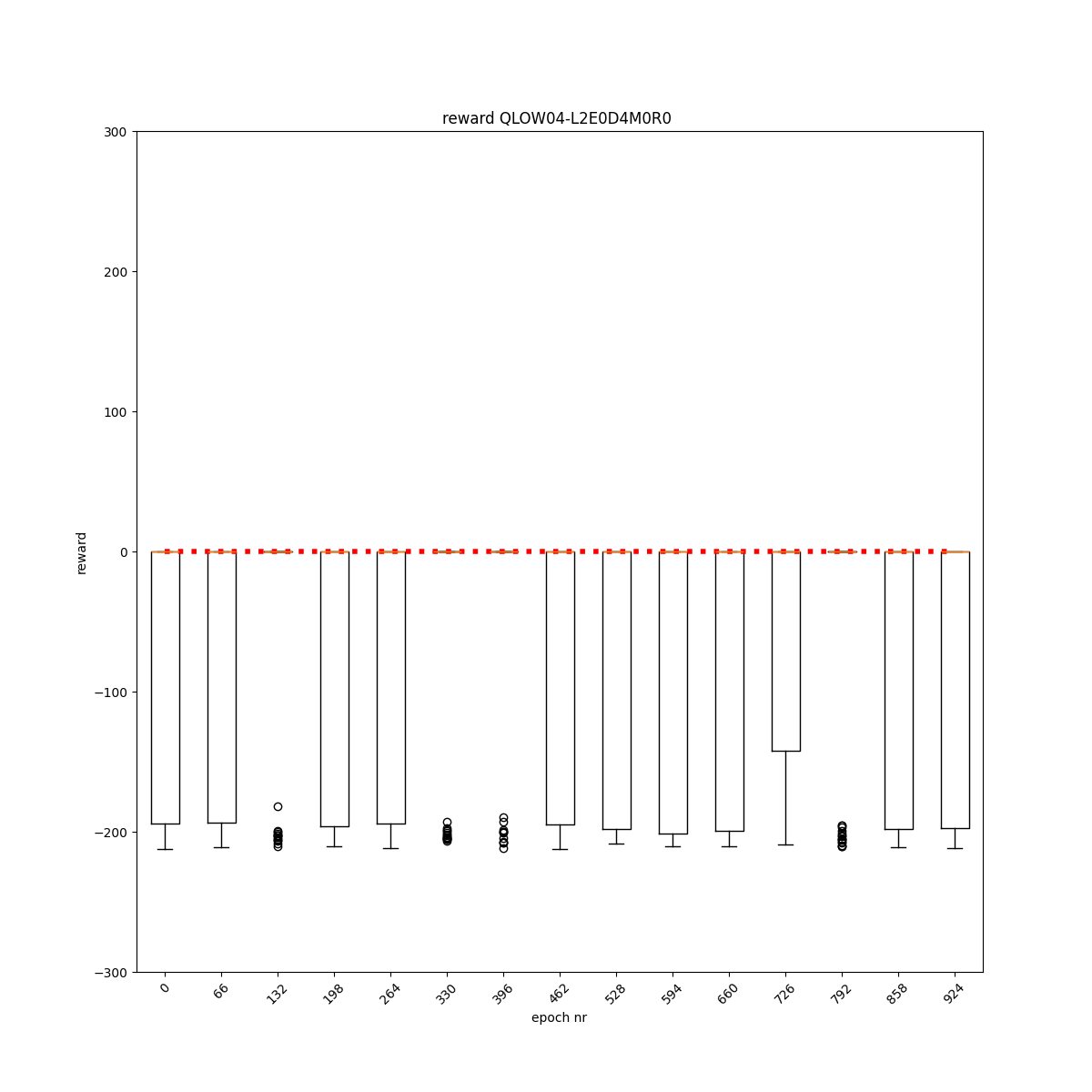

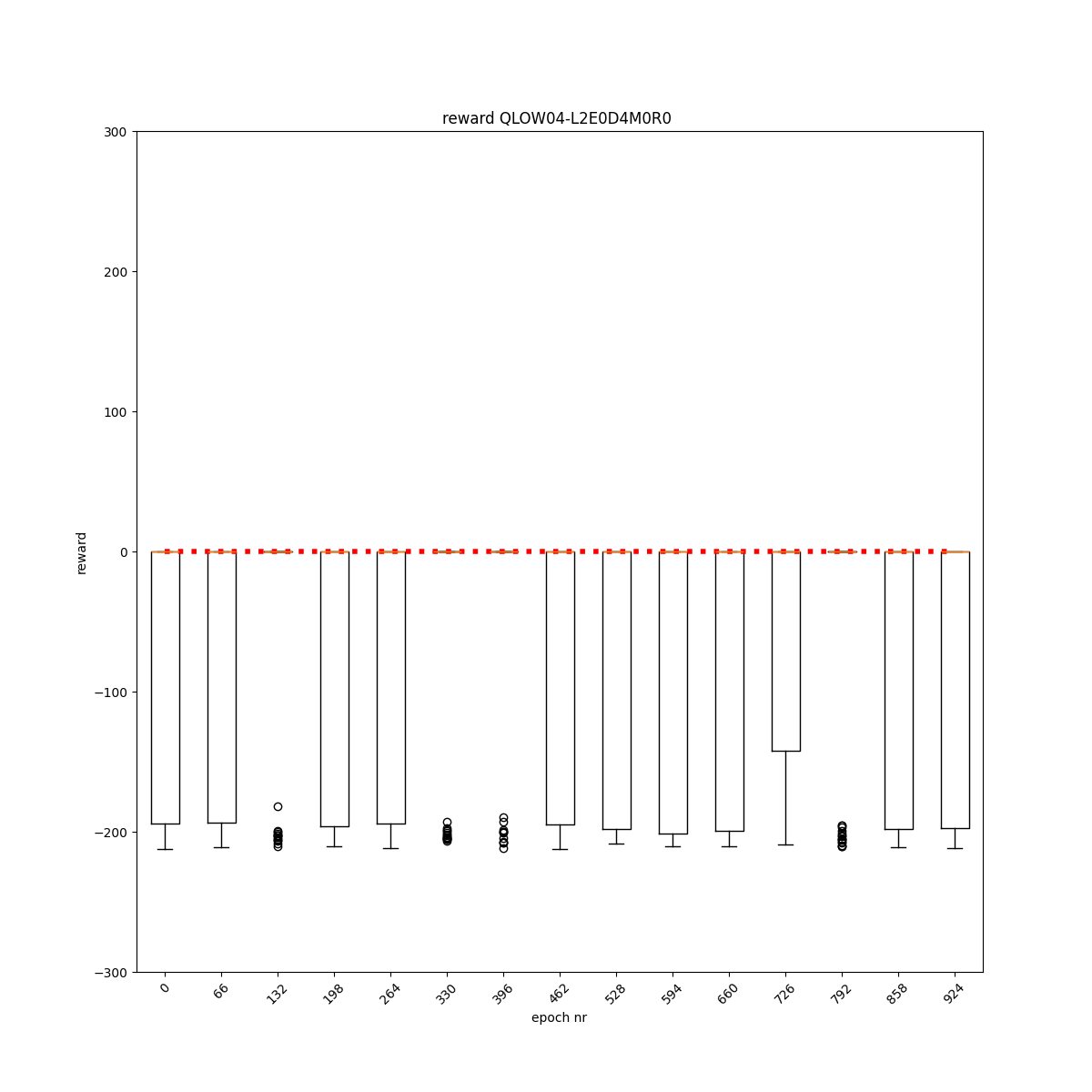

Figure panel L2 E0 D4 M0 R0: two q-values grids, each showing video 0 through video 11.

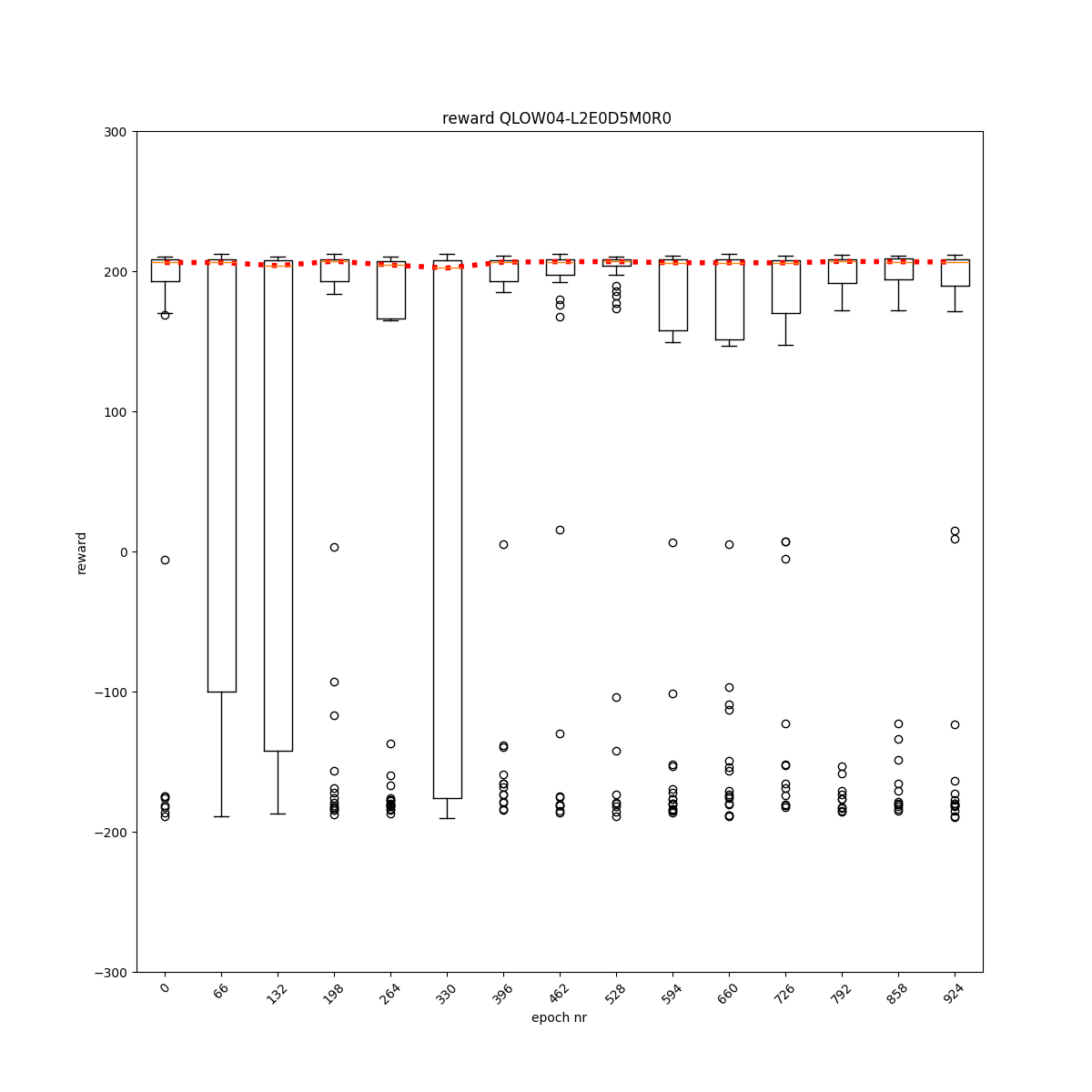

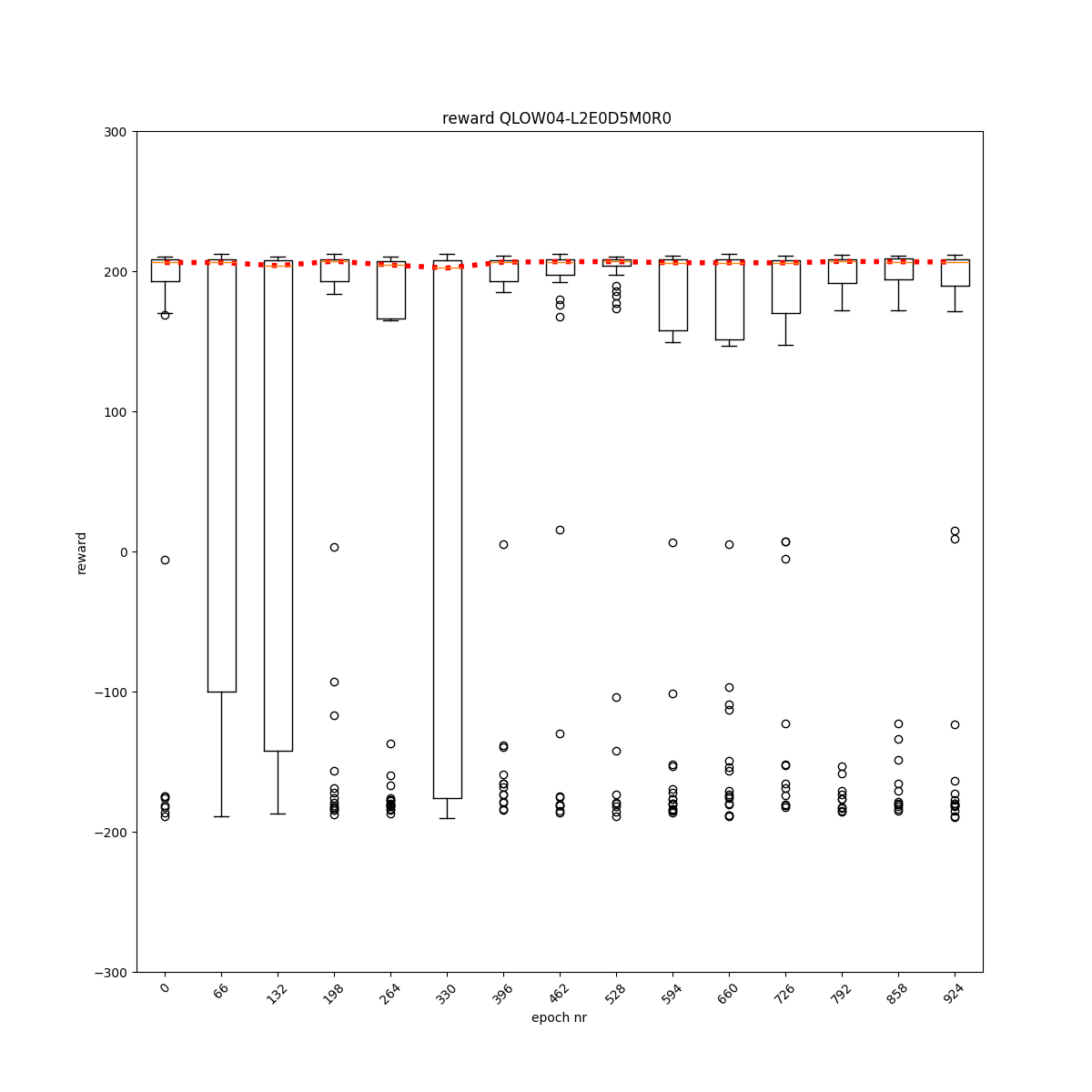

Figure panel L2 E0 D5 M0 R0: two q-values grids, each showing video 0 through video 11.

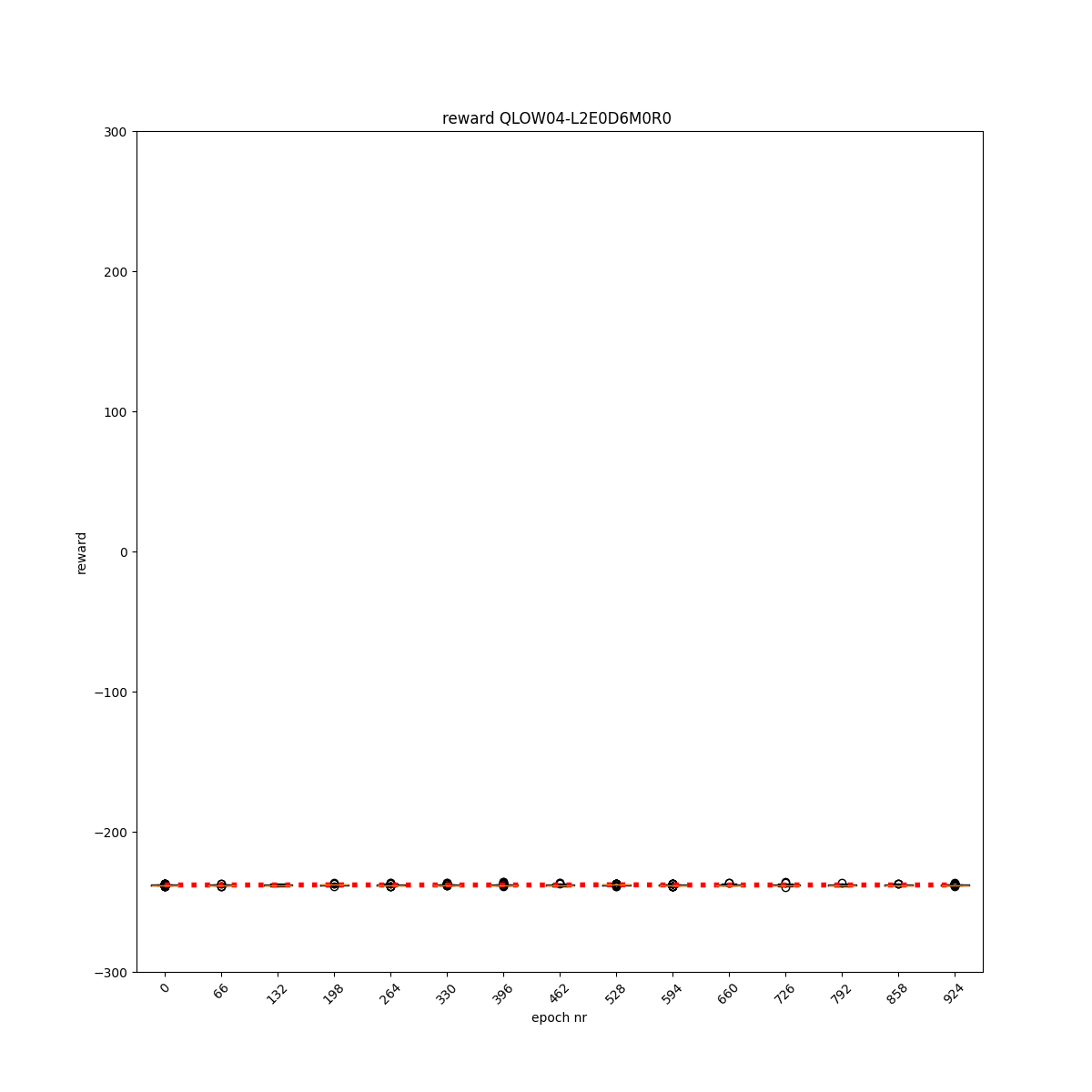

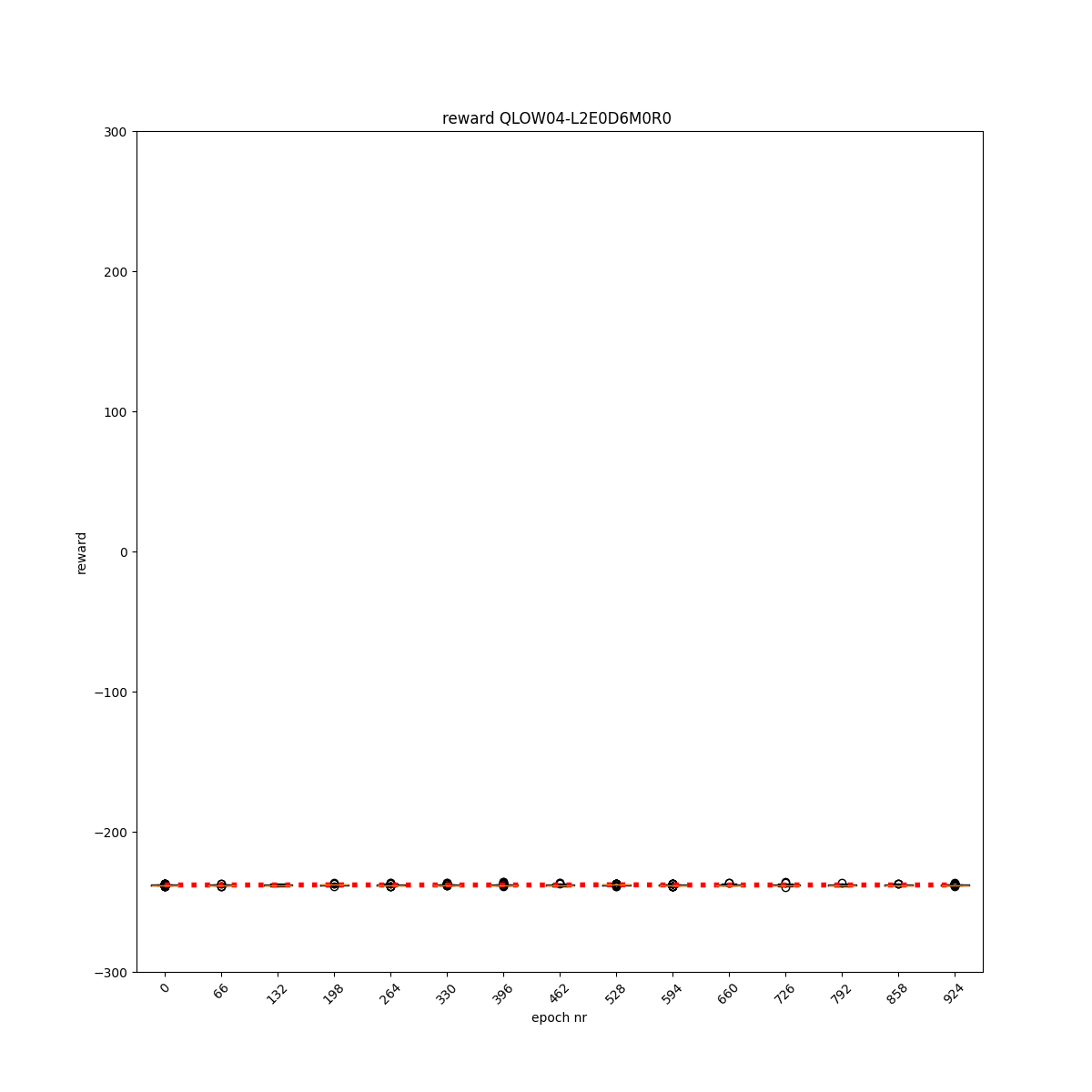

Figure panel L2 E0 D6 M0 R0: two q-values grids, each showing video 0 through video 11.

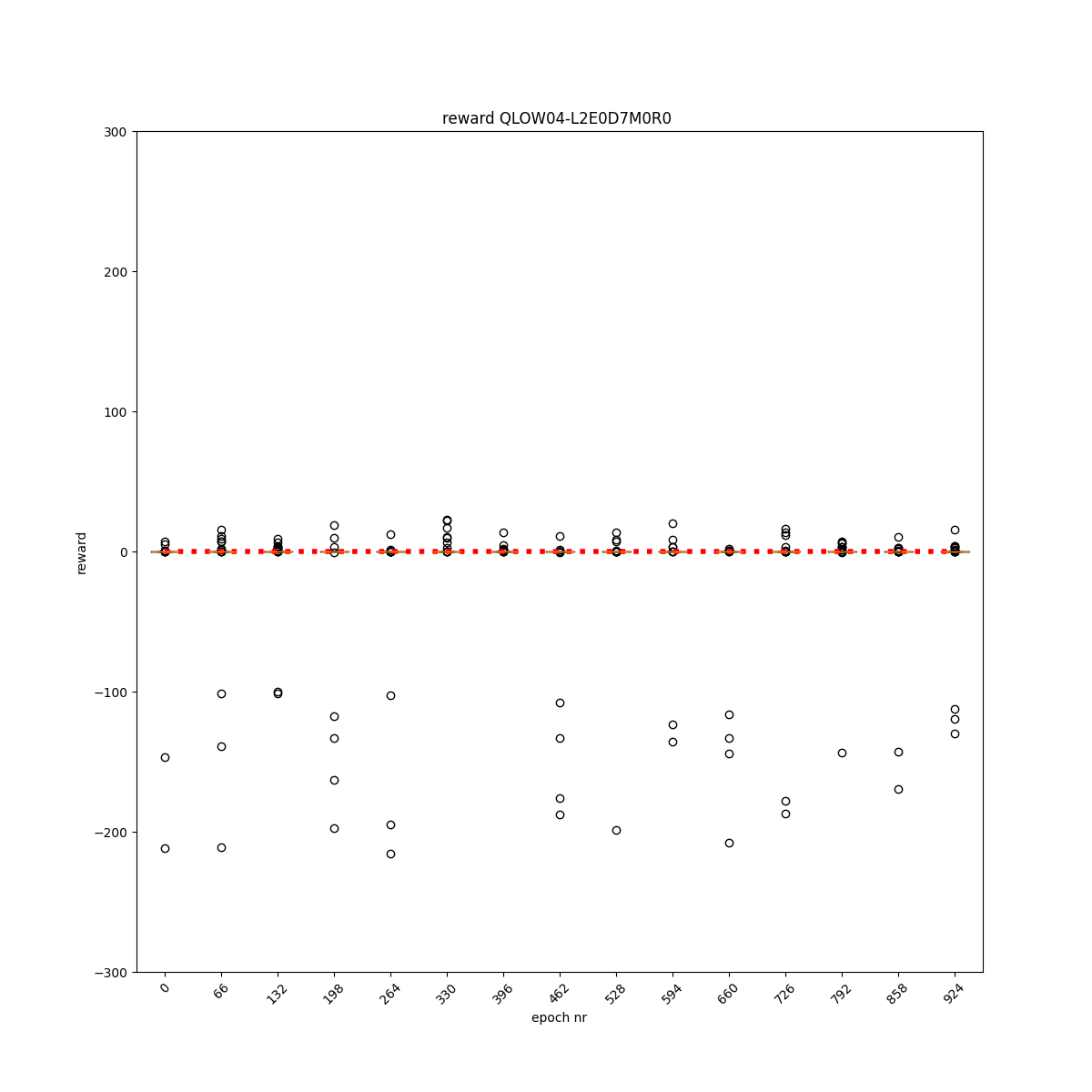

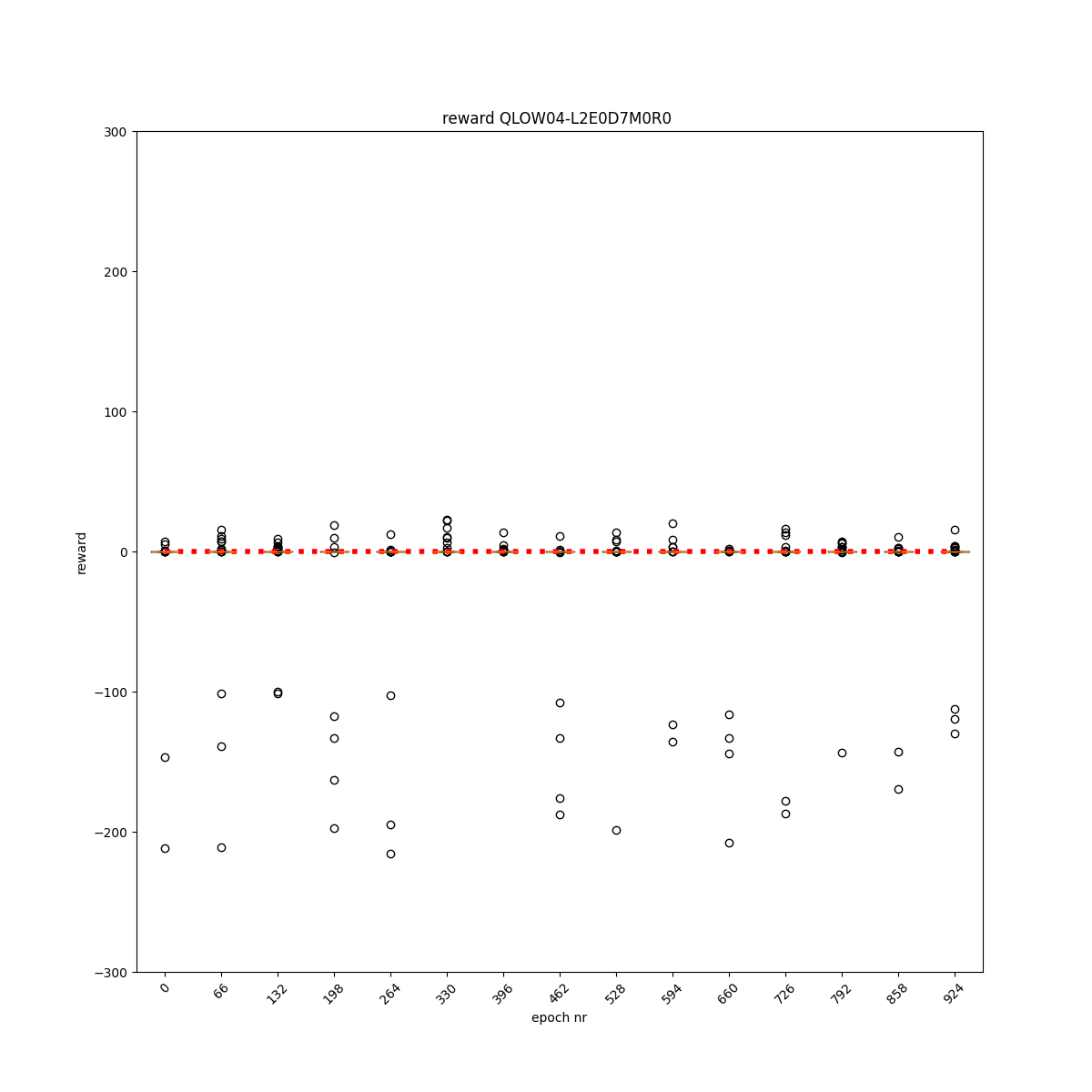

Figure panel L2 E0 D7 M0 R0: two q-values grids, each showing video 0 through video 11.

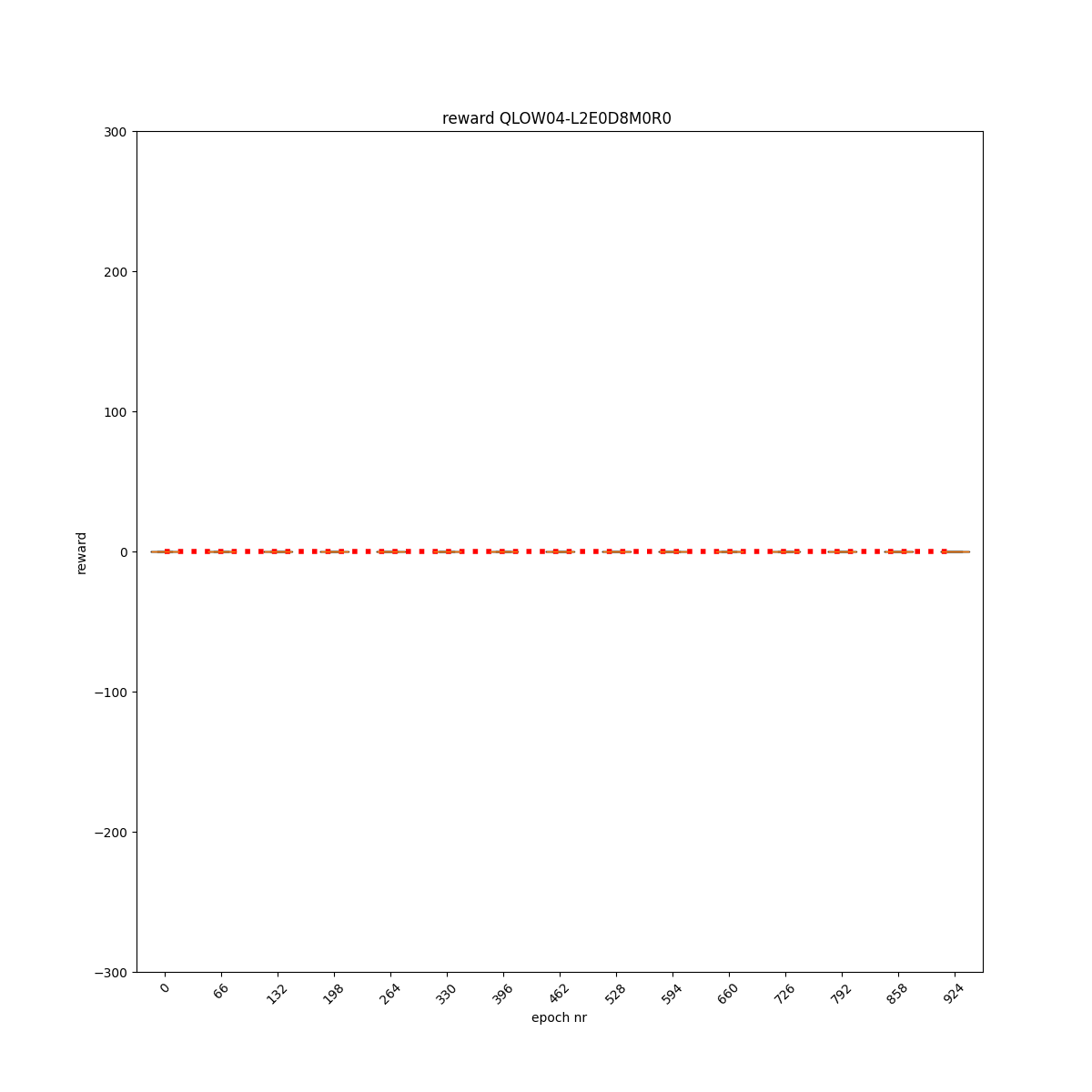

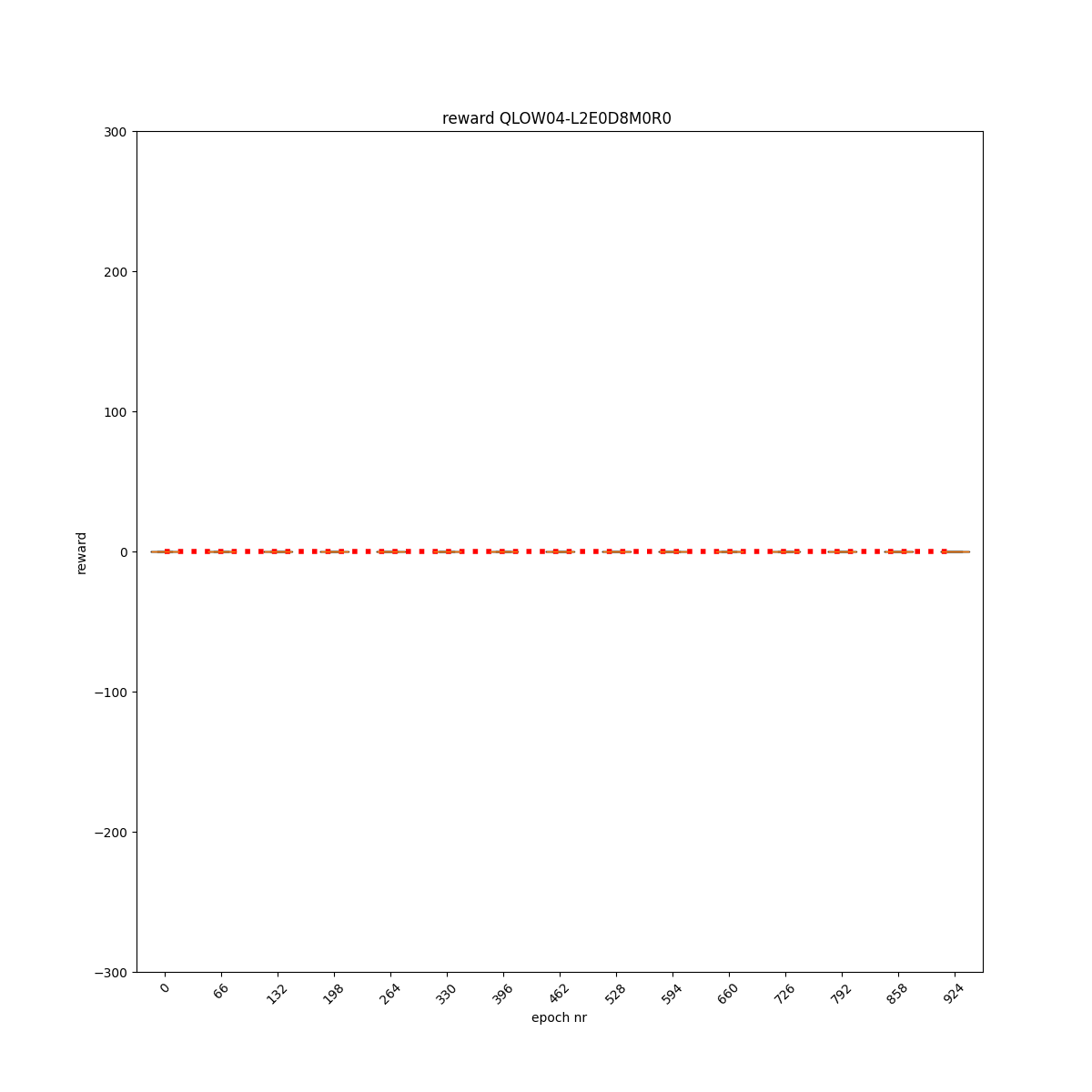

Figure panel L2 E0 D8 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L2 E0 D9 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L2 E1 D0 M0 R0: two q-values grids, each showing video 0 through video 11.

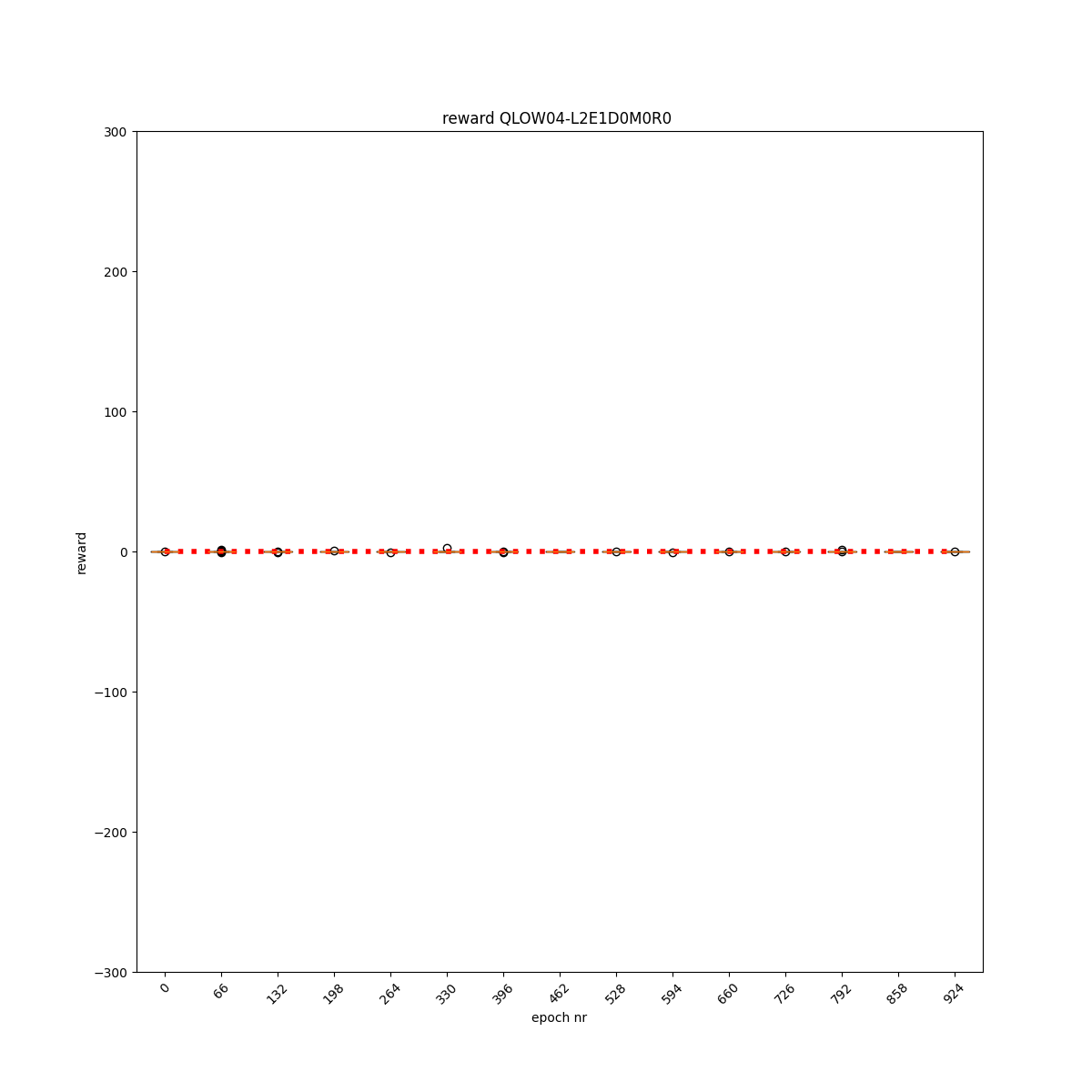

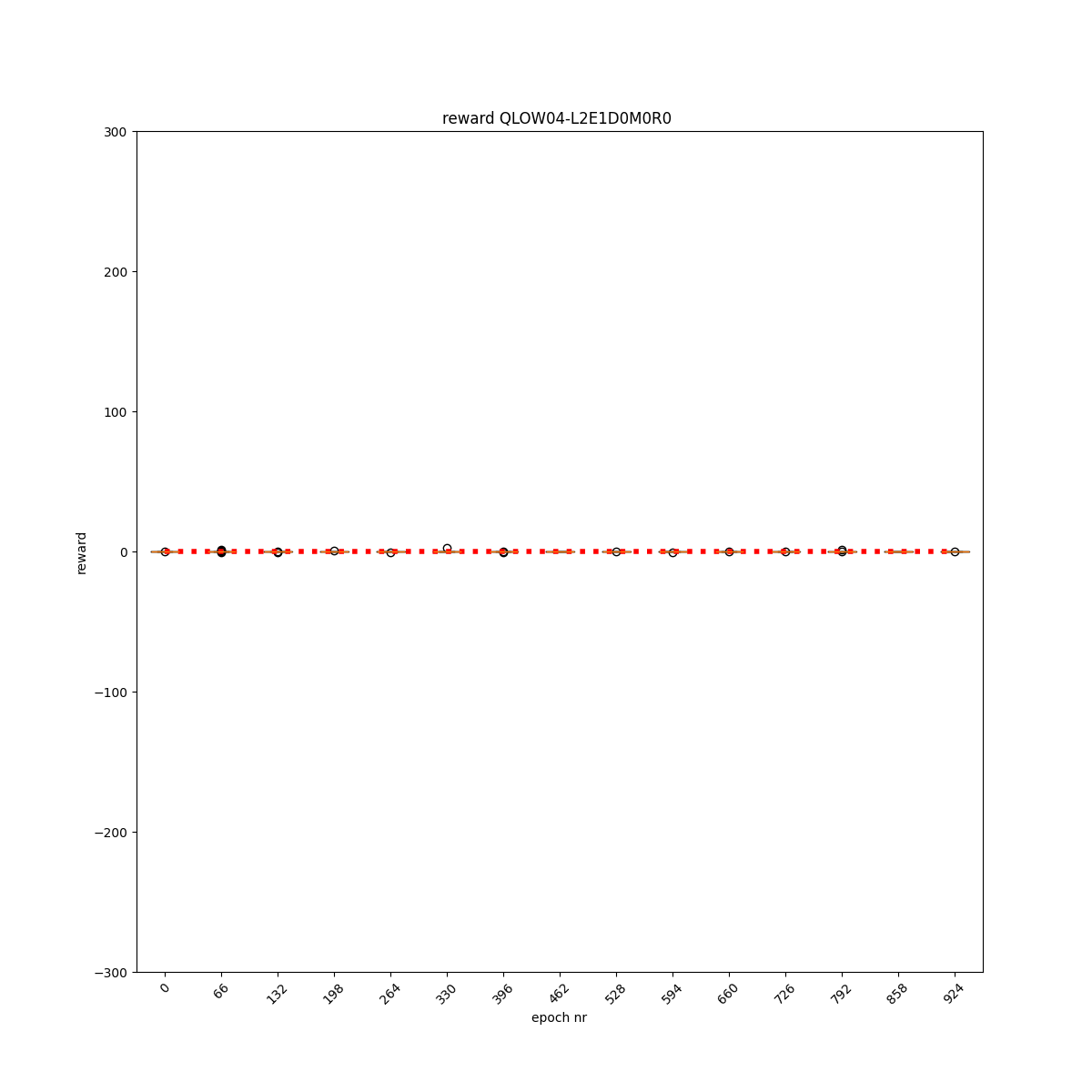

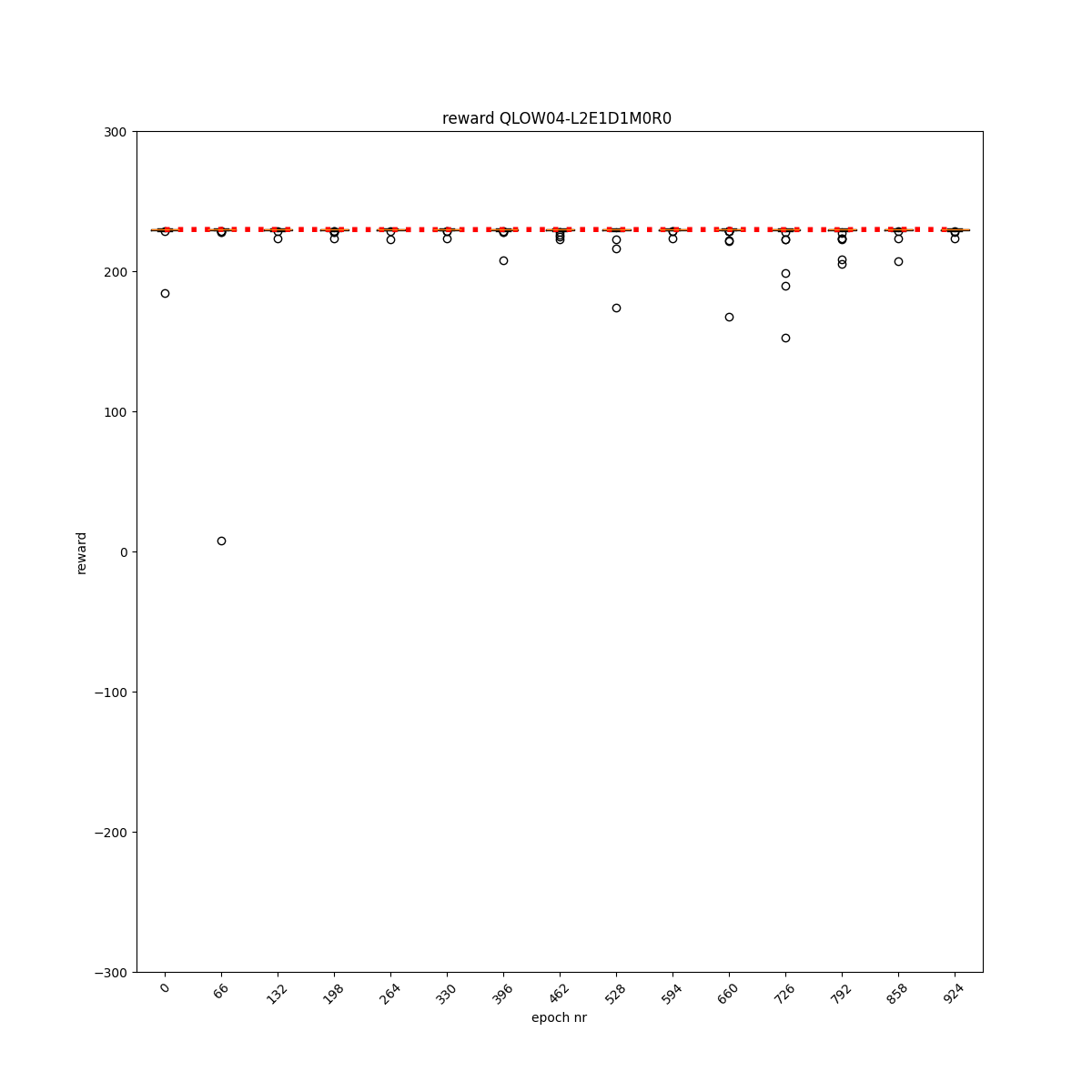

Figure panel L2 E1 D1 M0 R0: two q-values grids, each showing video 0 through video 11.

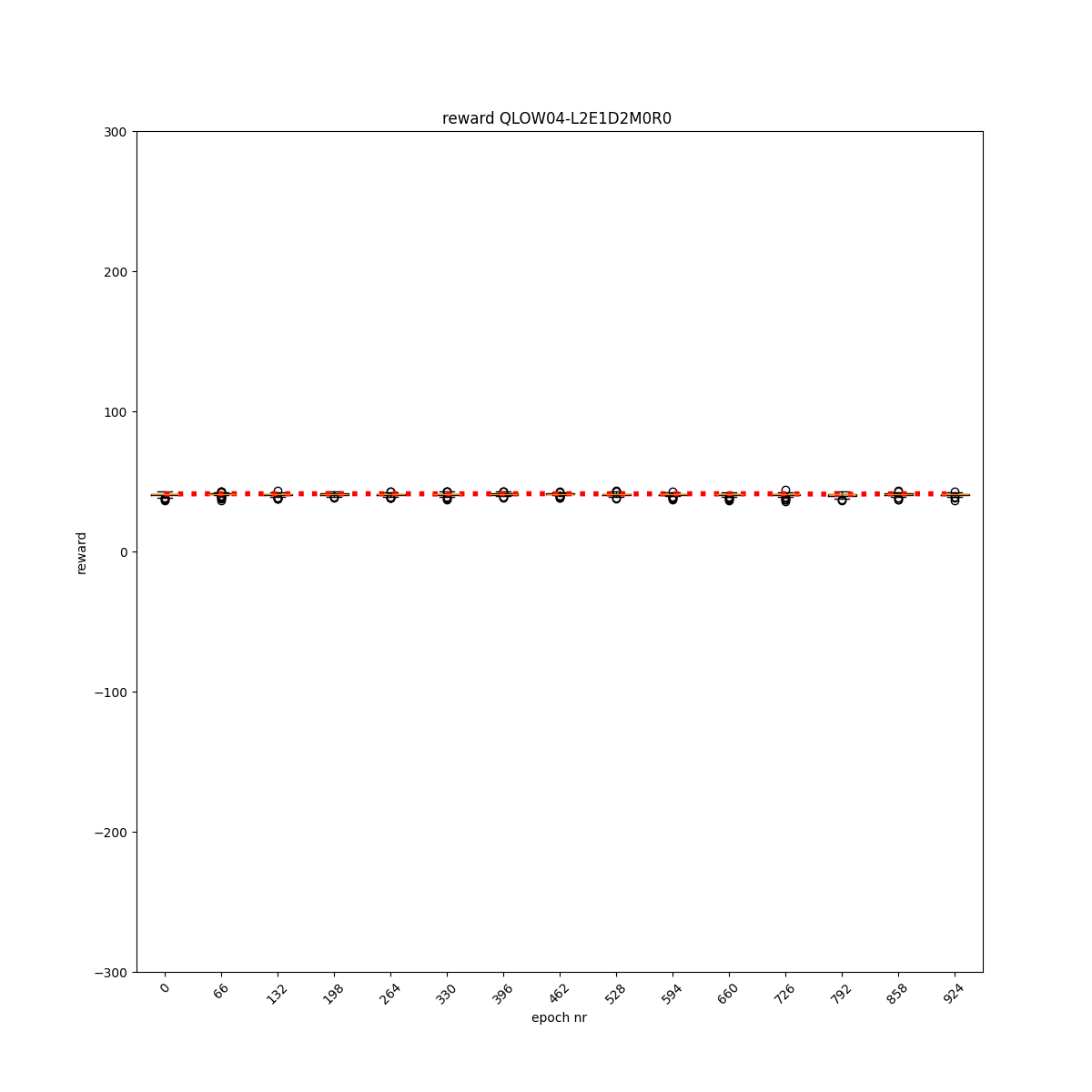

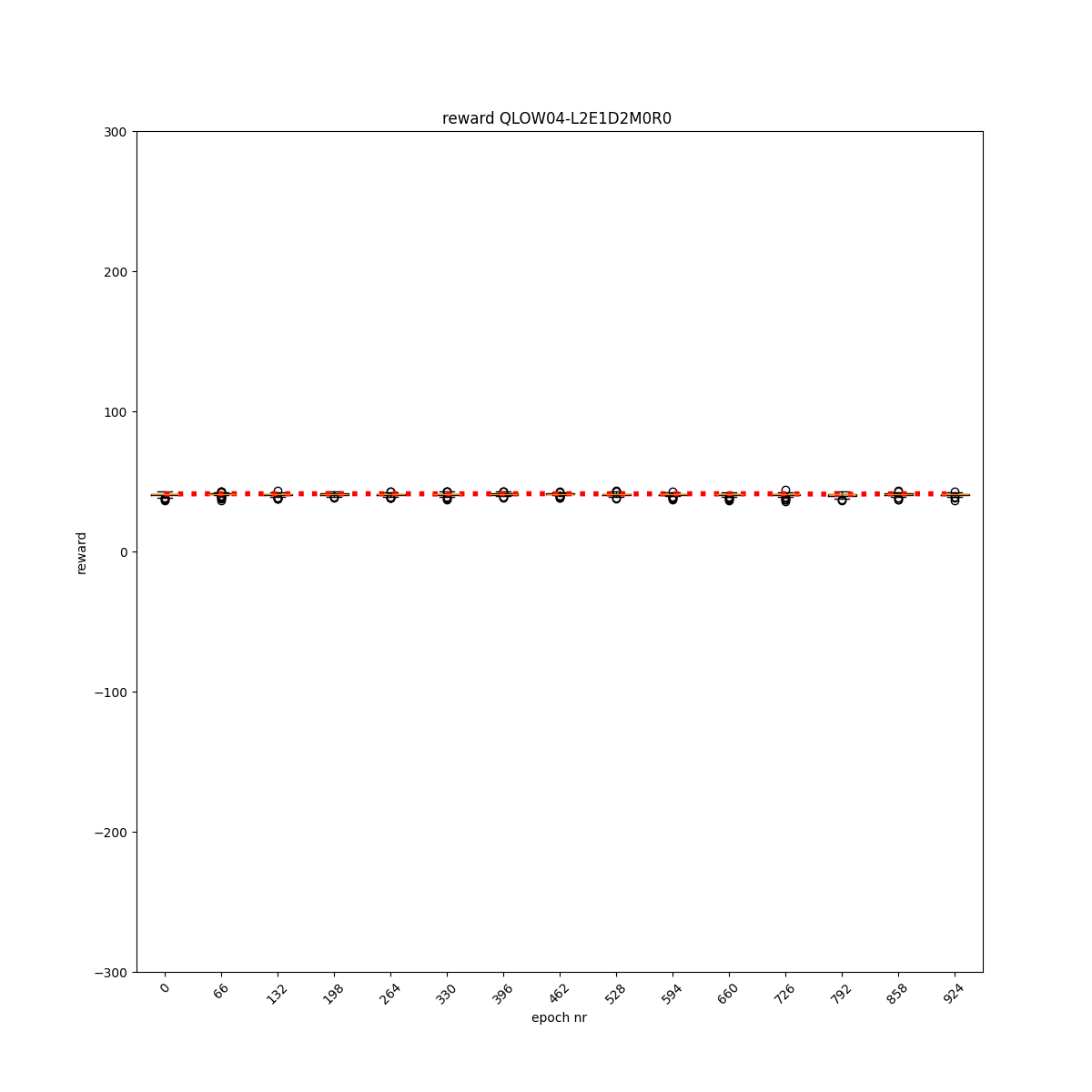

Figure panel L2 E1 D2 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L2 E1 D3 M0 R0: two q-values grids, each showing video 0 through video 11.

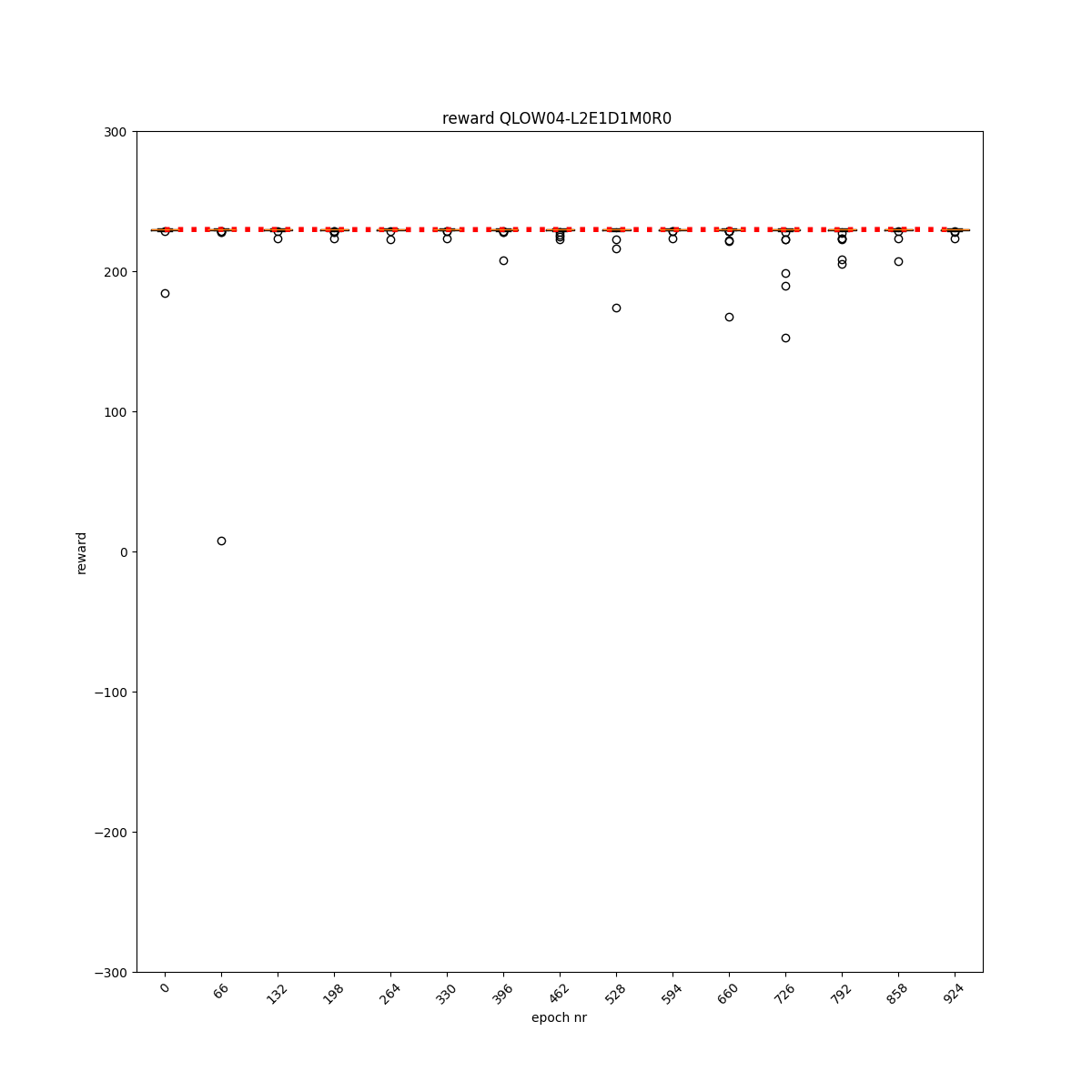

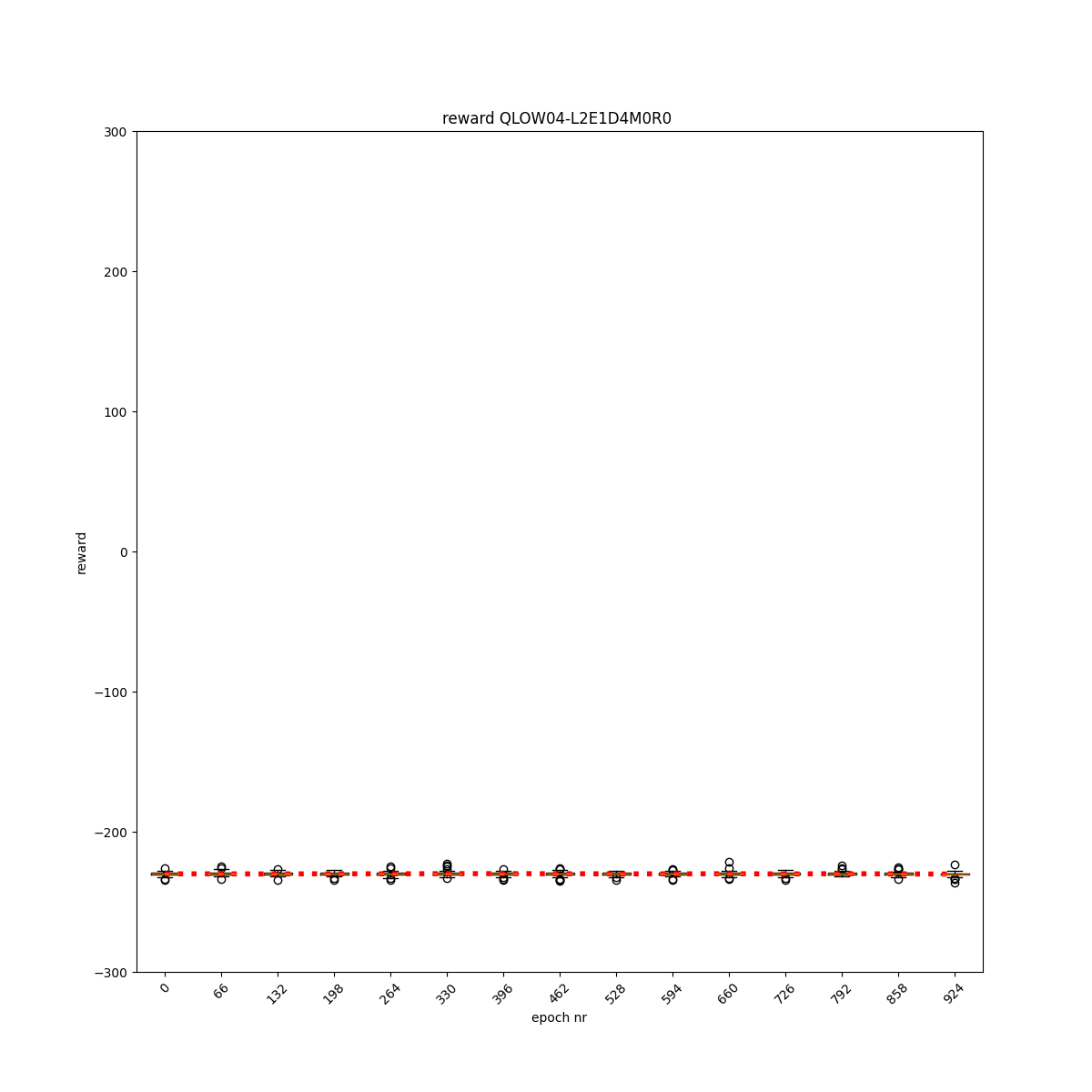

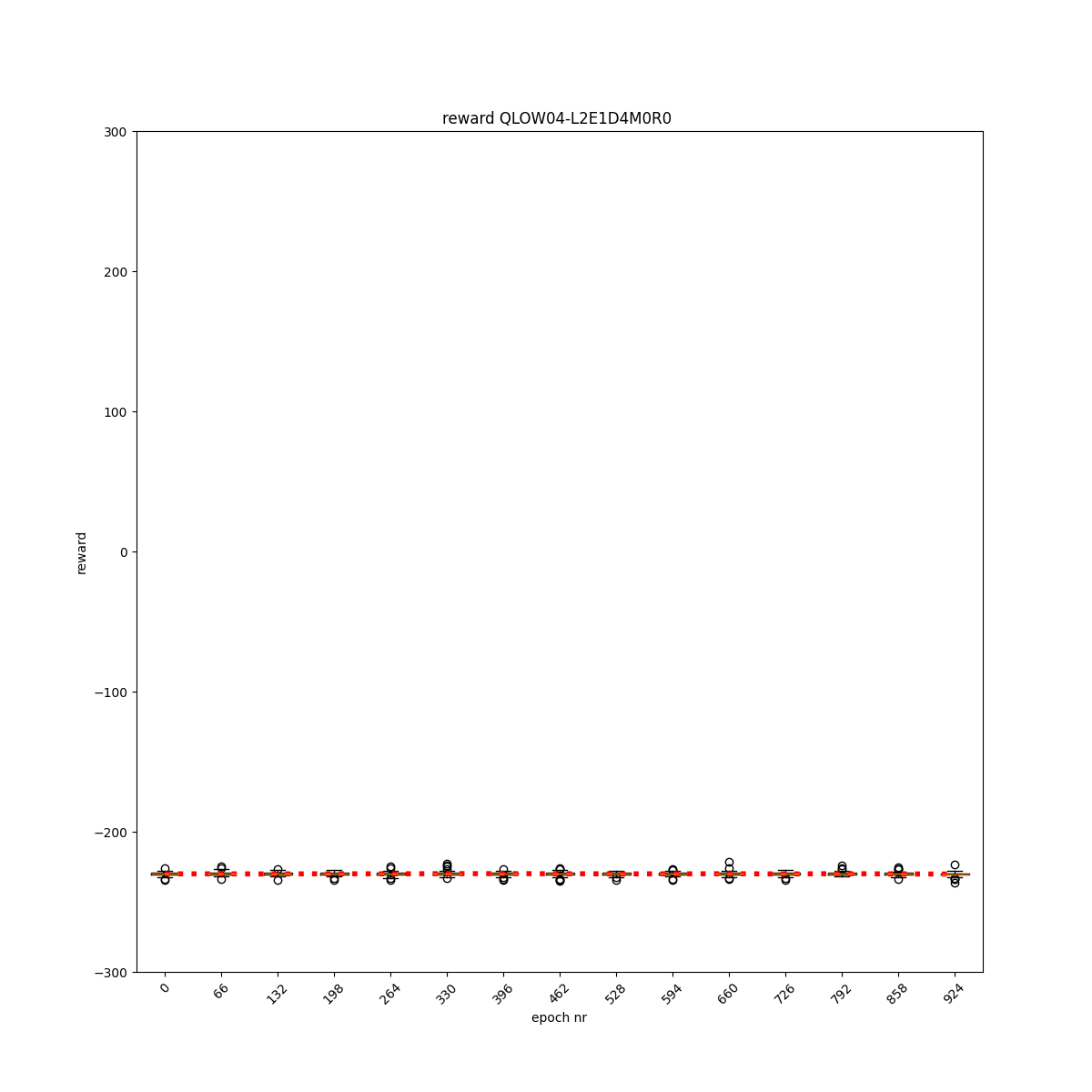

Figure panel L2 E1 D4 M0 R0: two q-values grids, each showing video 0 through video 11.

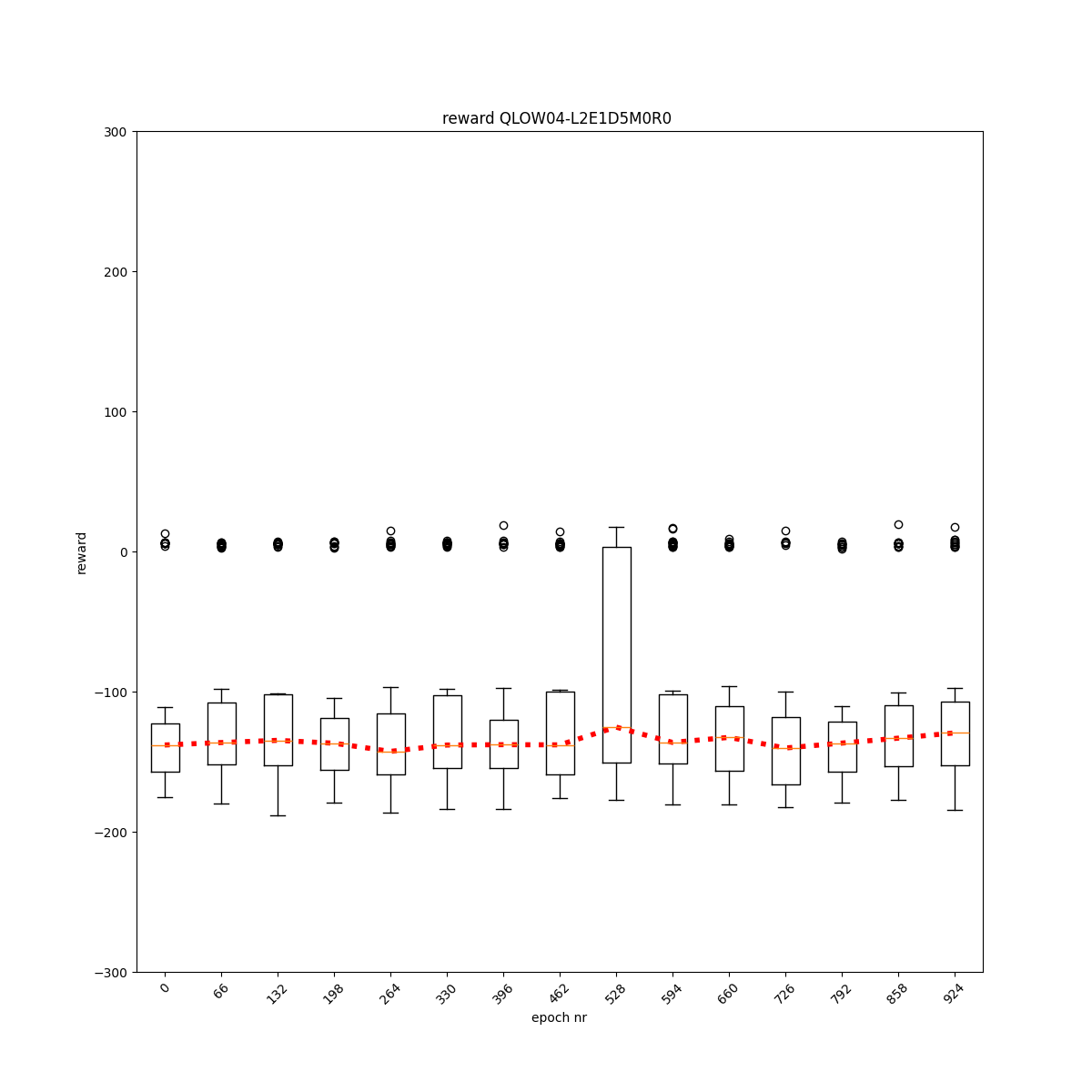

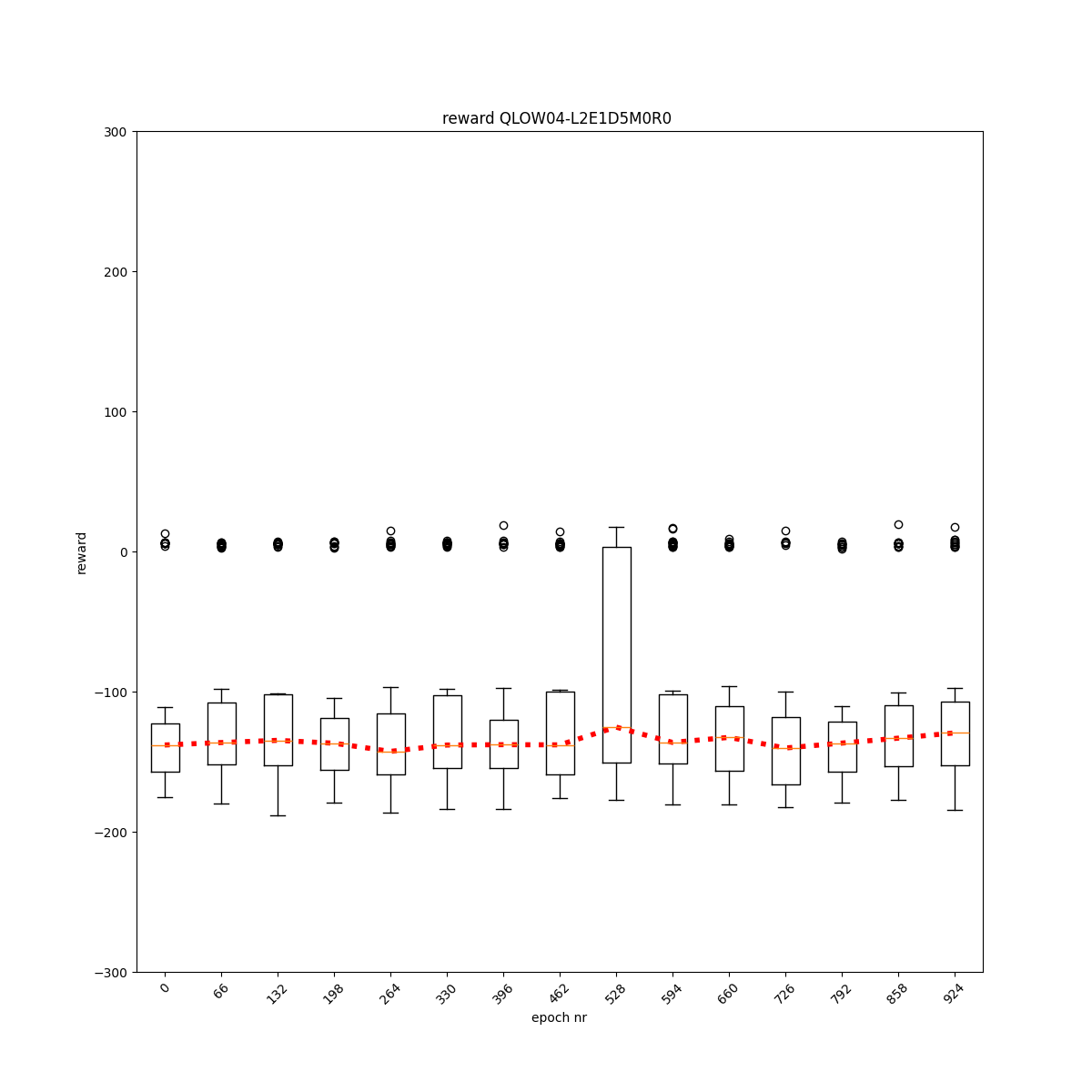

Figure panel L2 E1 D5 M0 R0: two q-values grids, each showing video 0 through video 11.

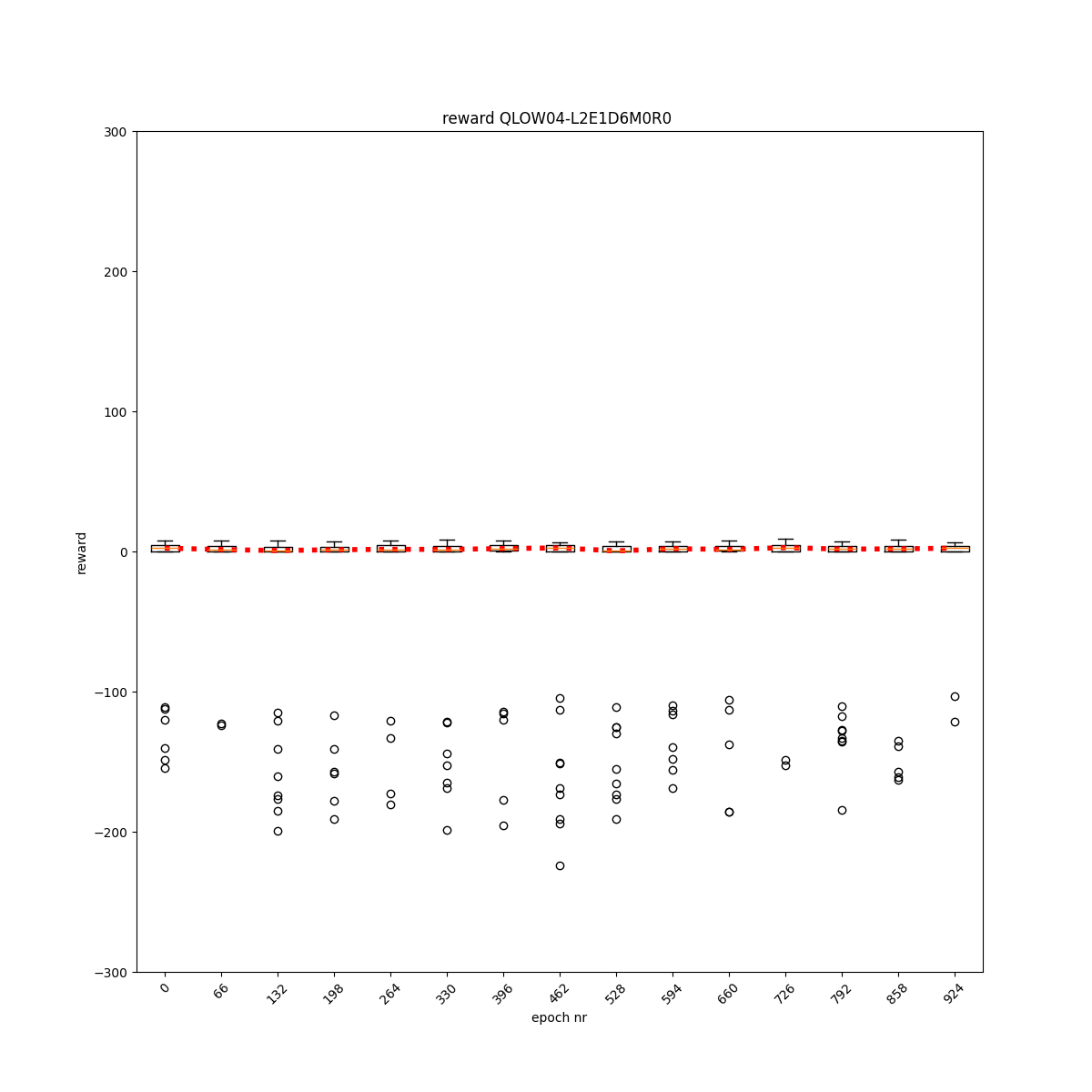

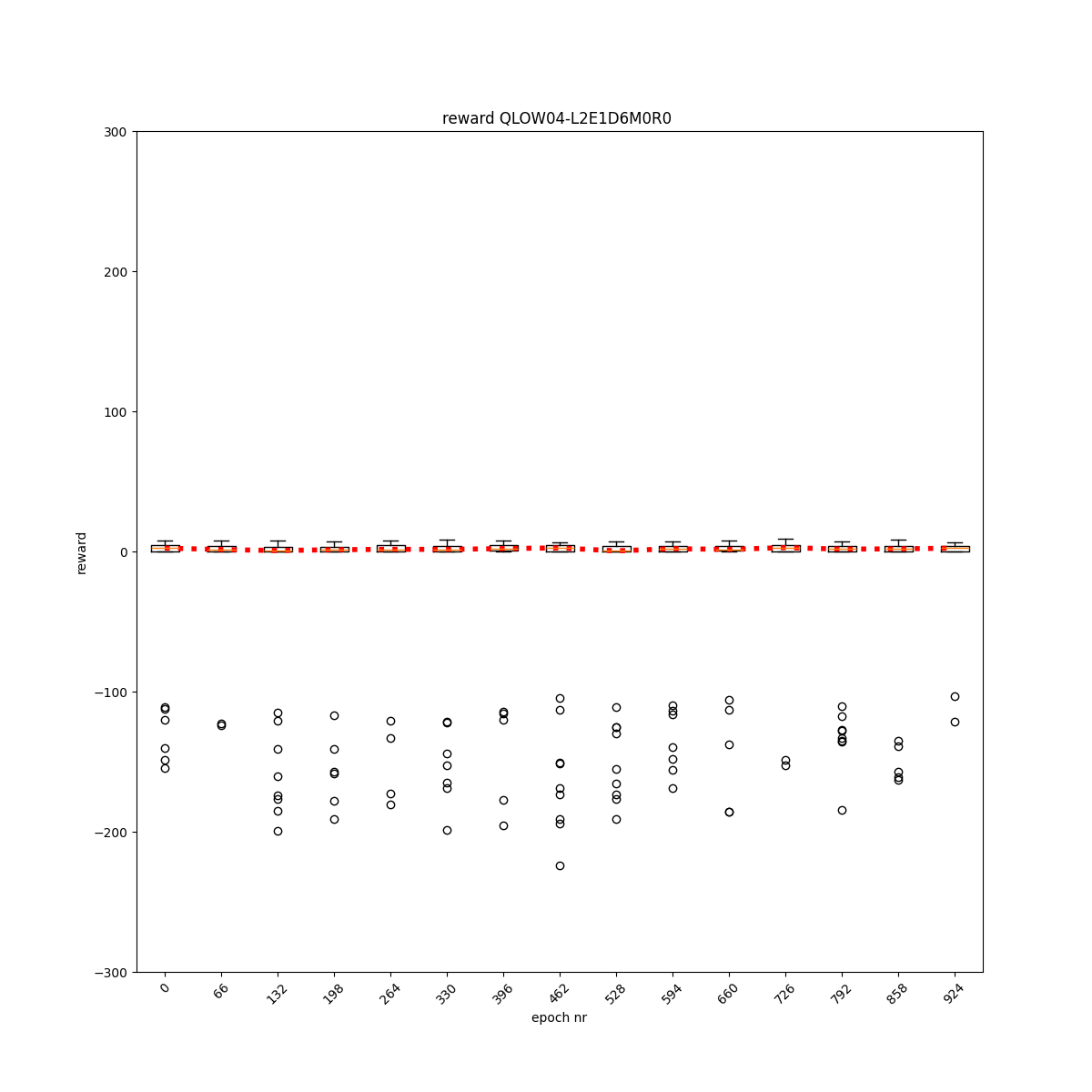

Figure panel L2 E1 D6 M0 R0: two q-values grids, each showing video 0 through video 11.

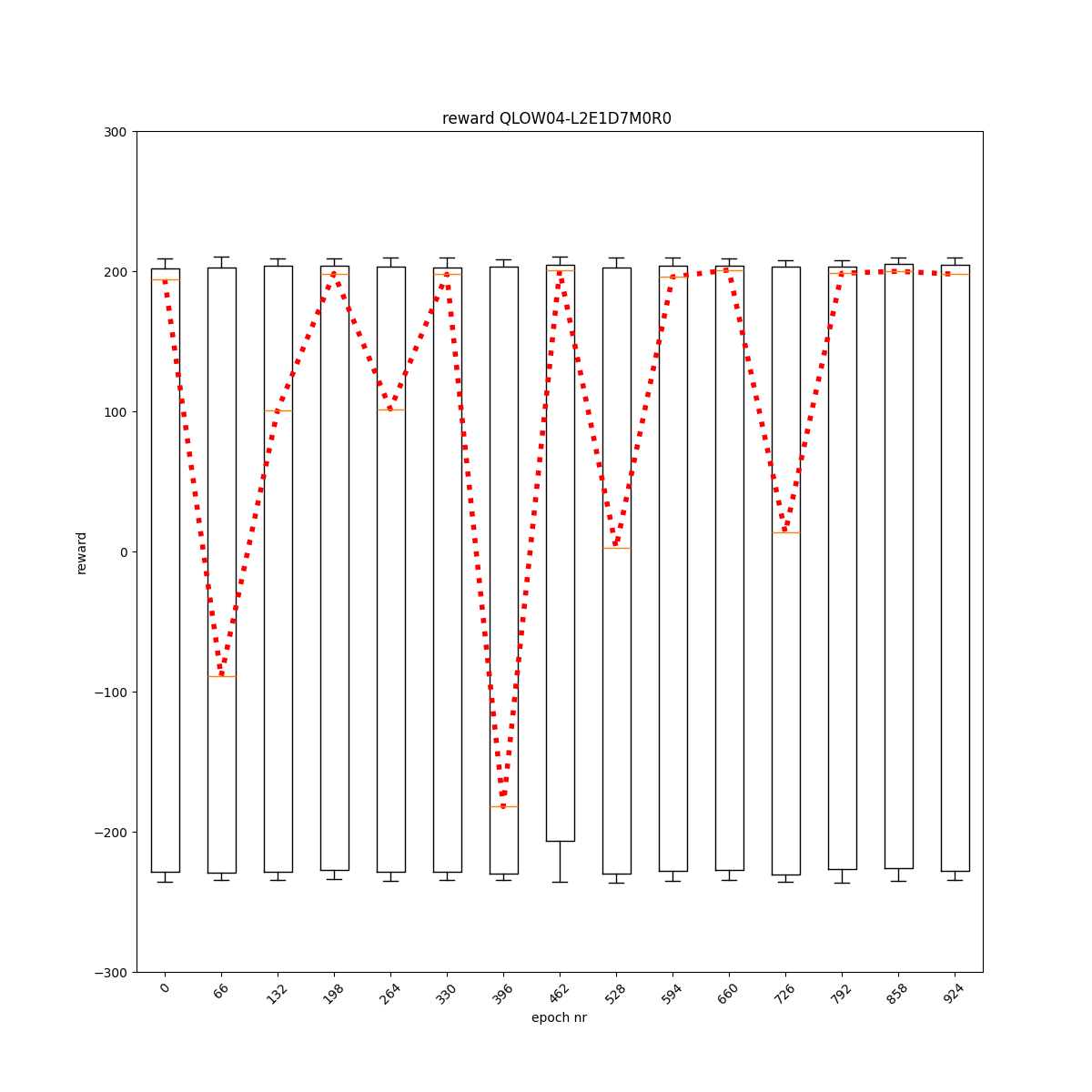

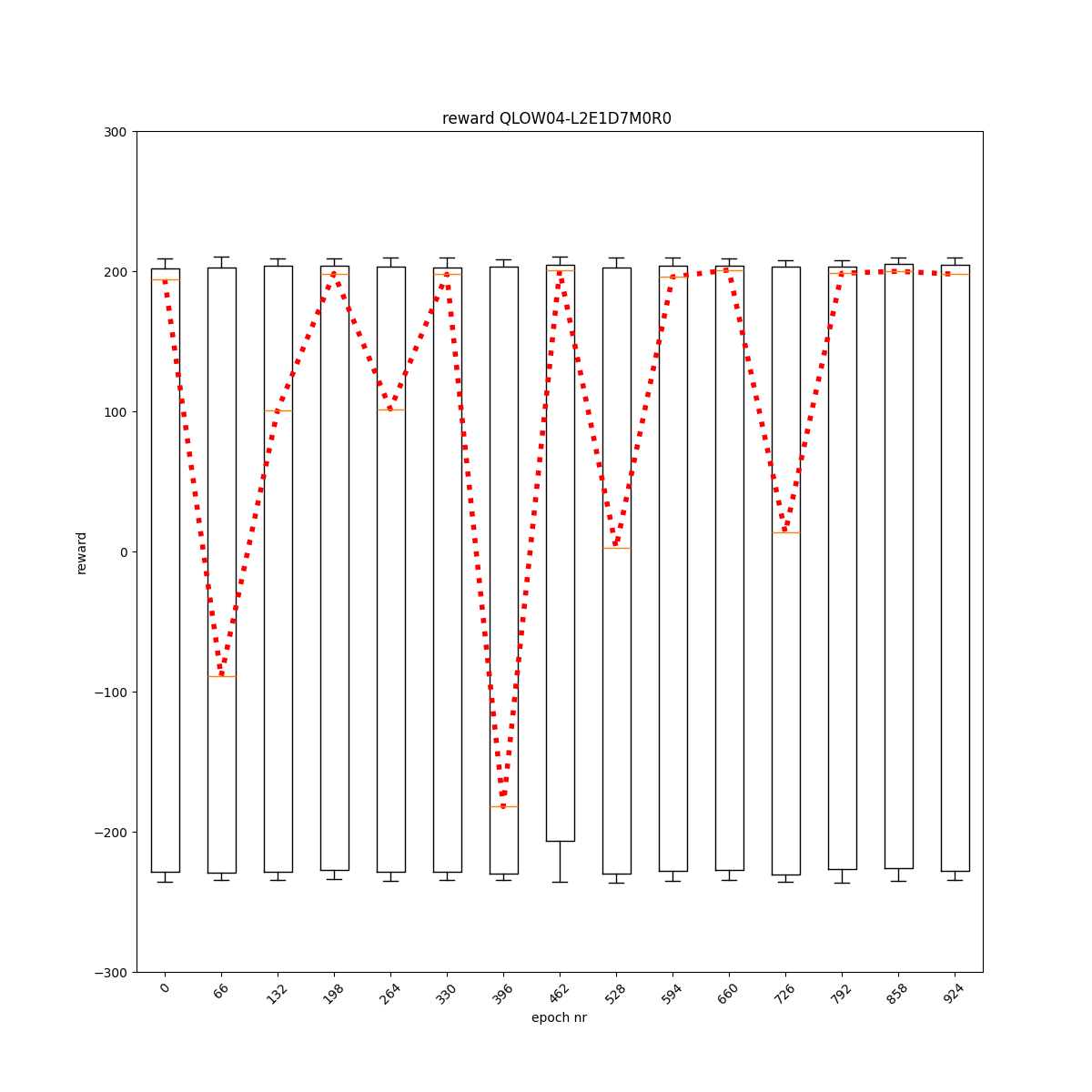

Figure panel L2 E1 D7 M0 R0: two q-values grids, each showing video 0 through video 11.

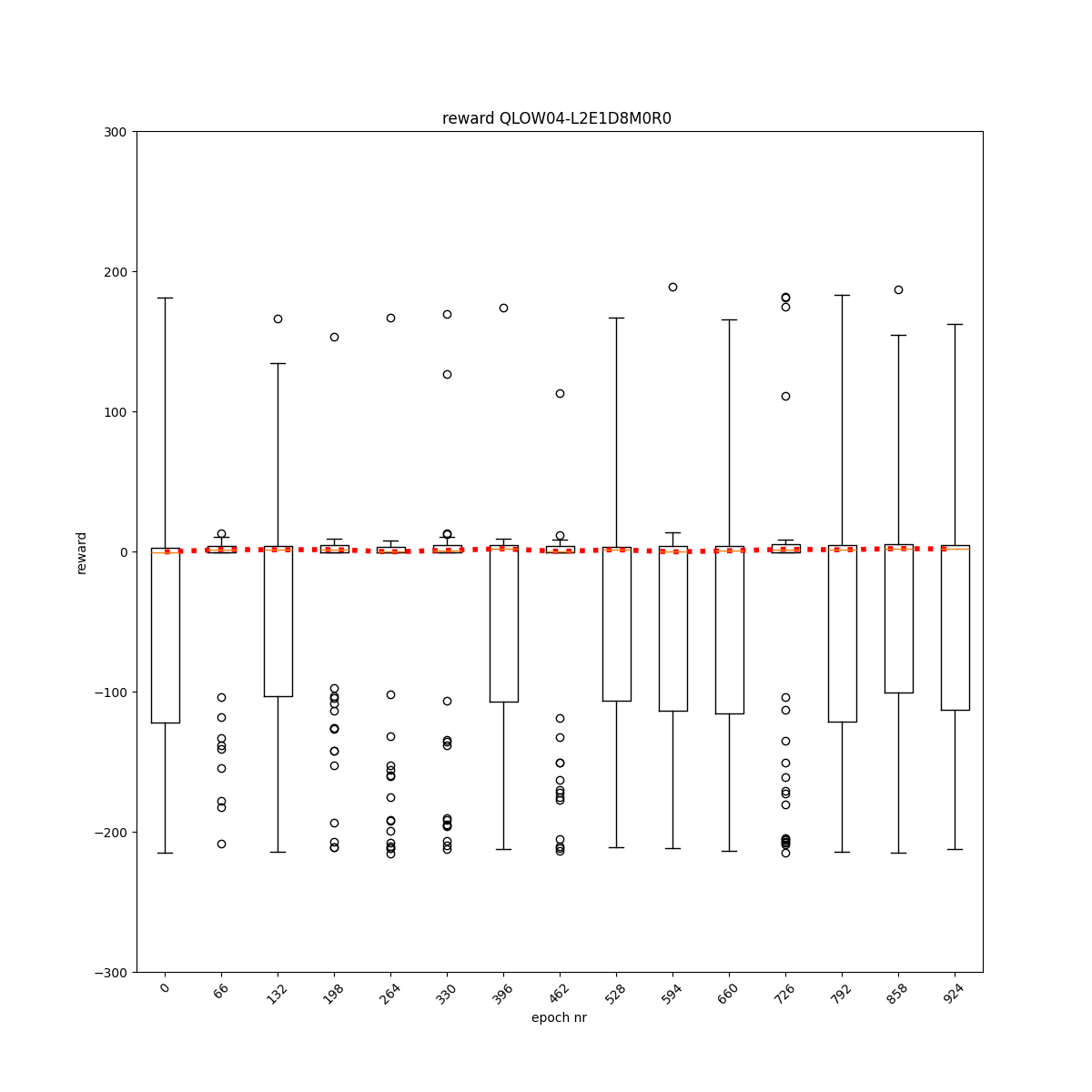

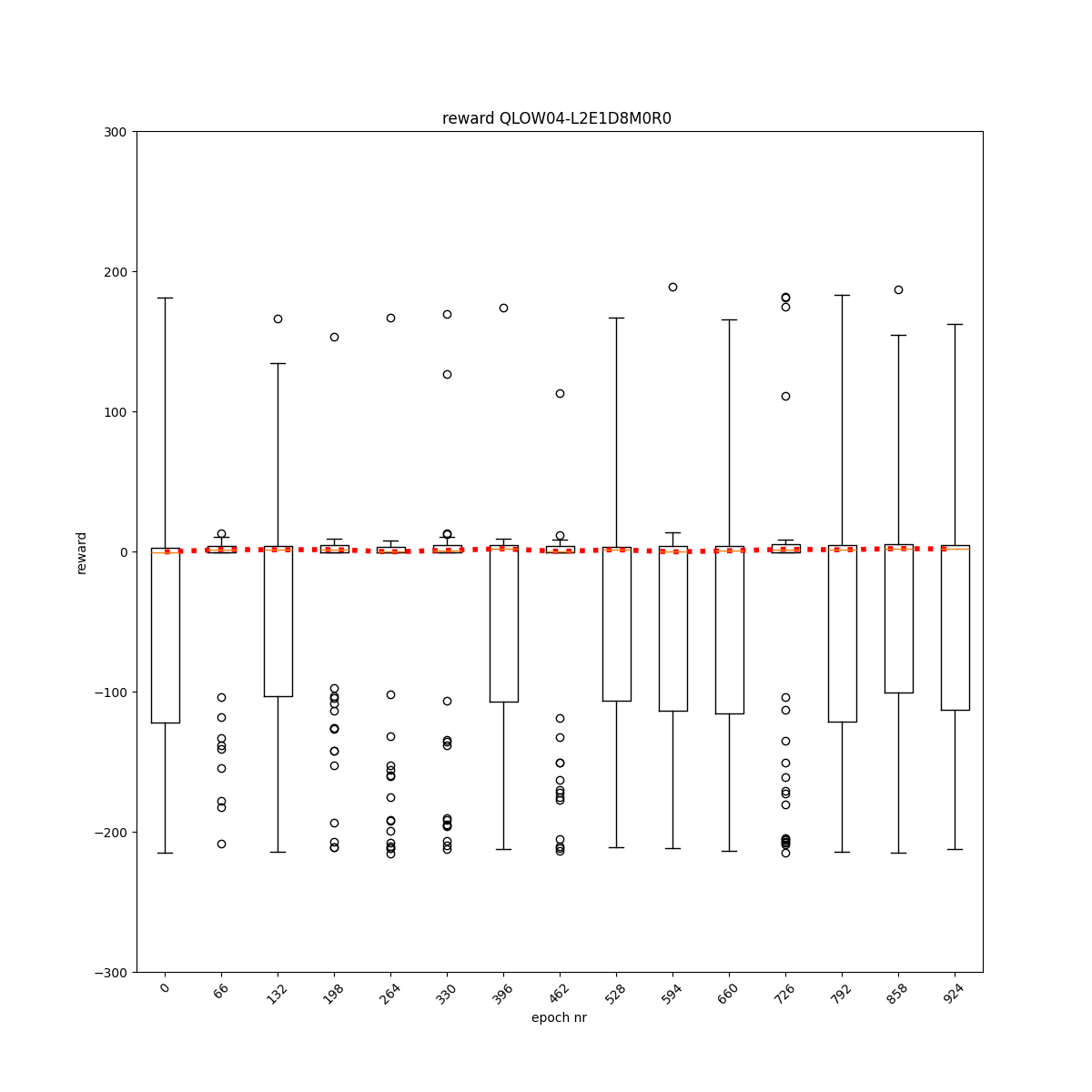

Figure panel L2 E1 D8 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L2 E1 D9 M0 R0: two q-values grids, each showing video 0 through video 11.

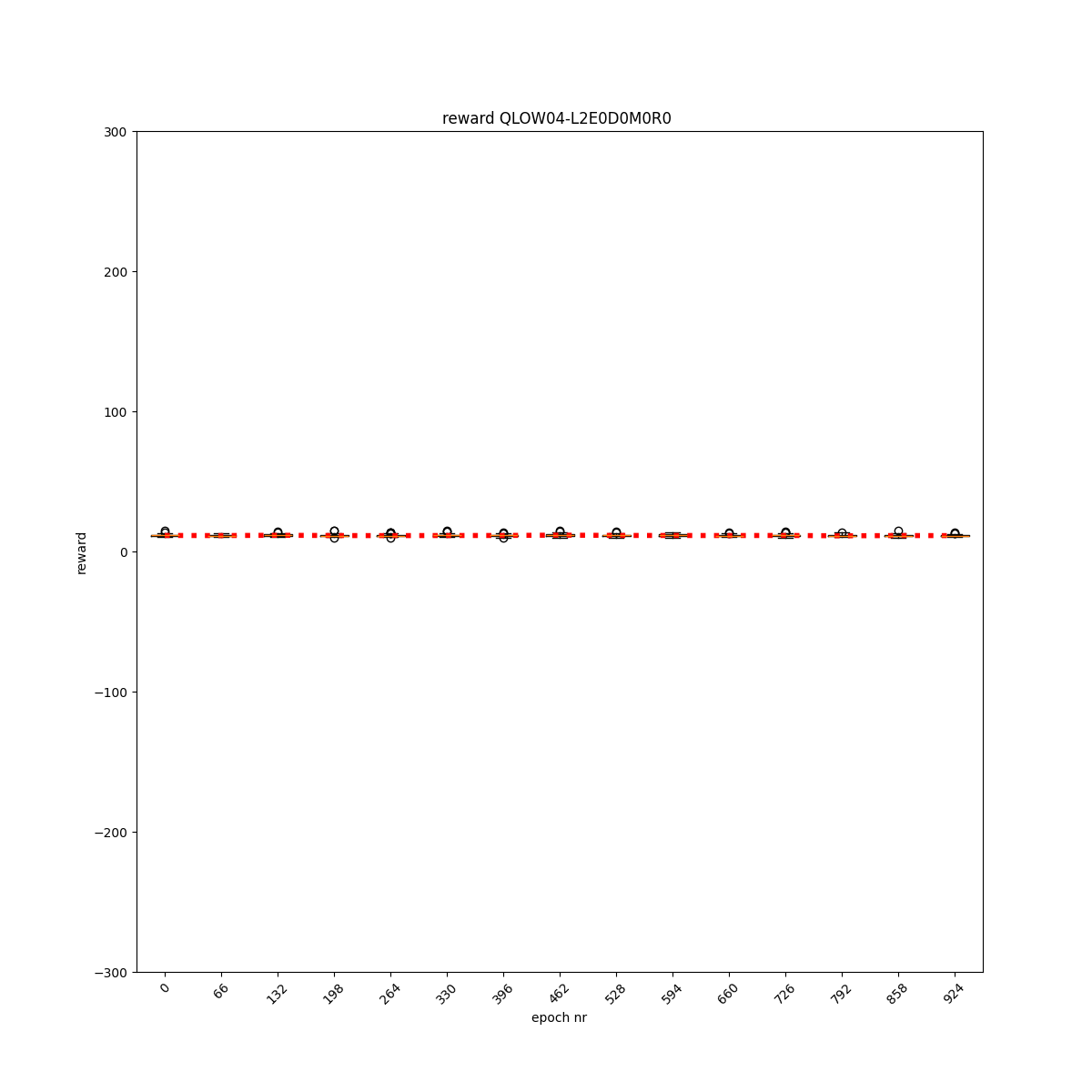

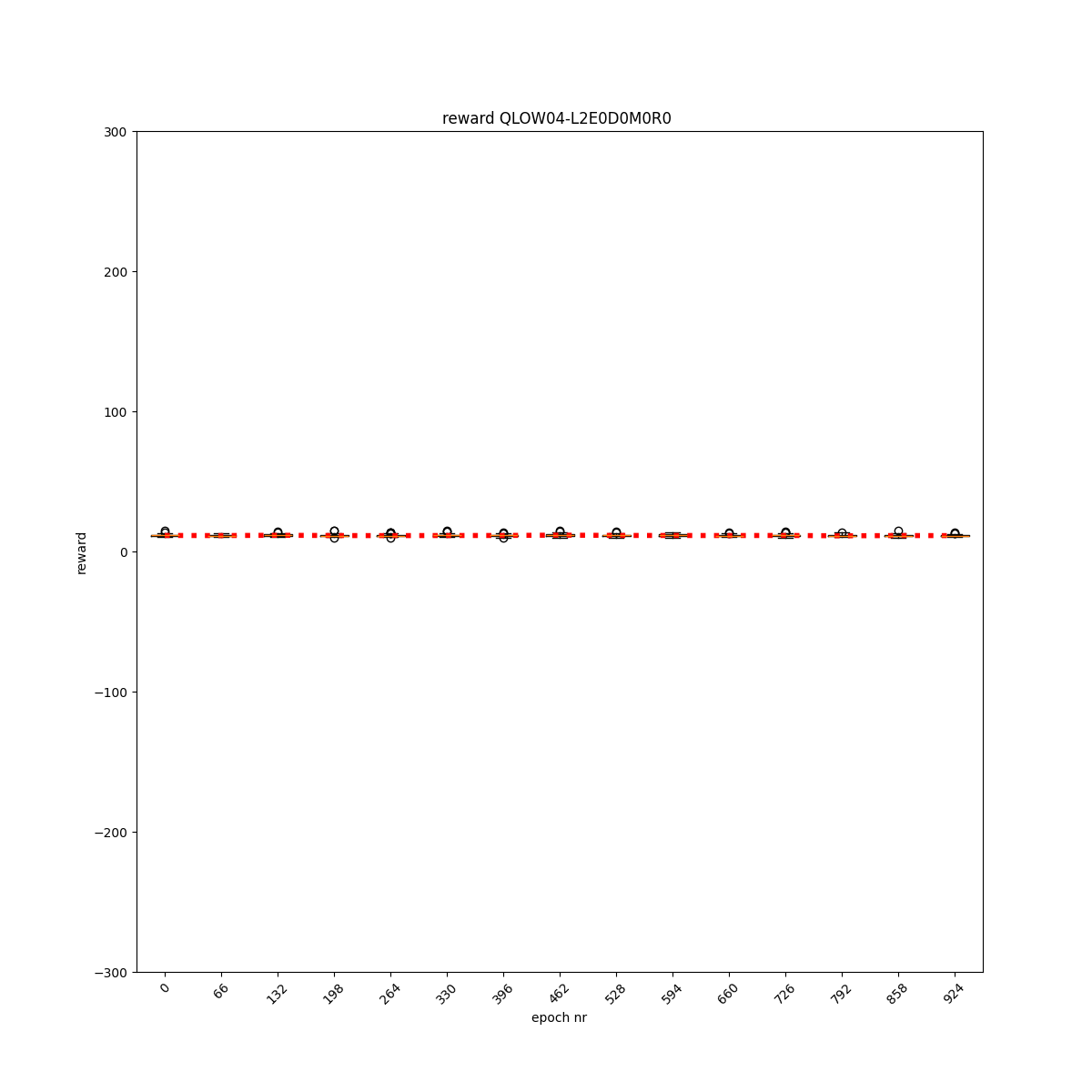

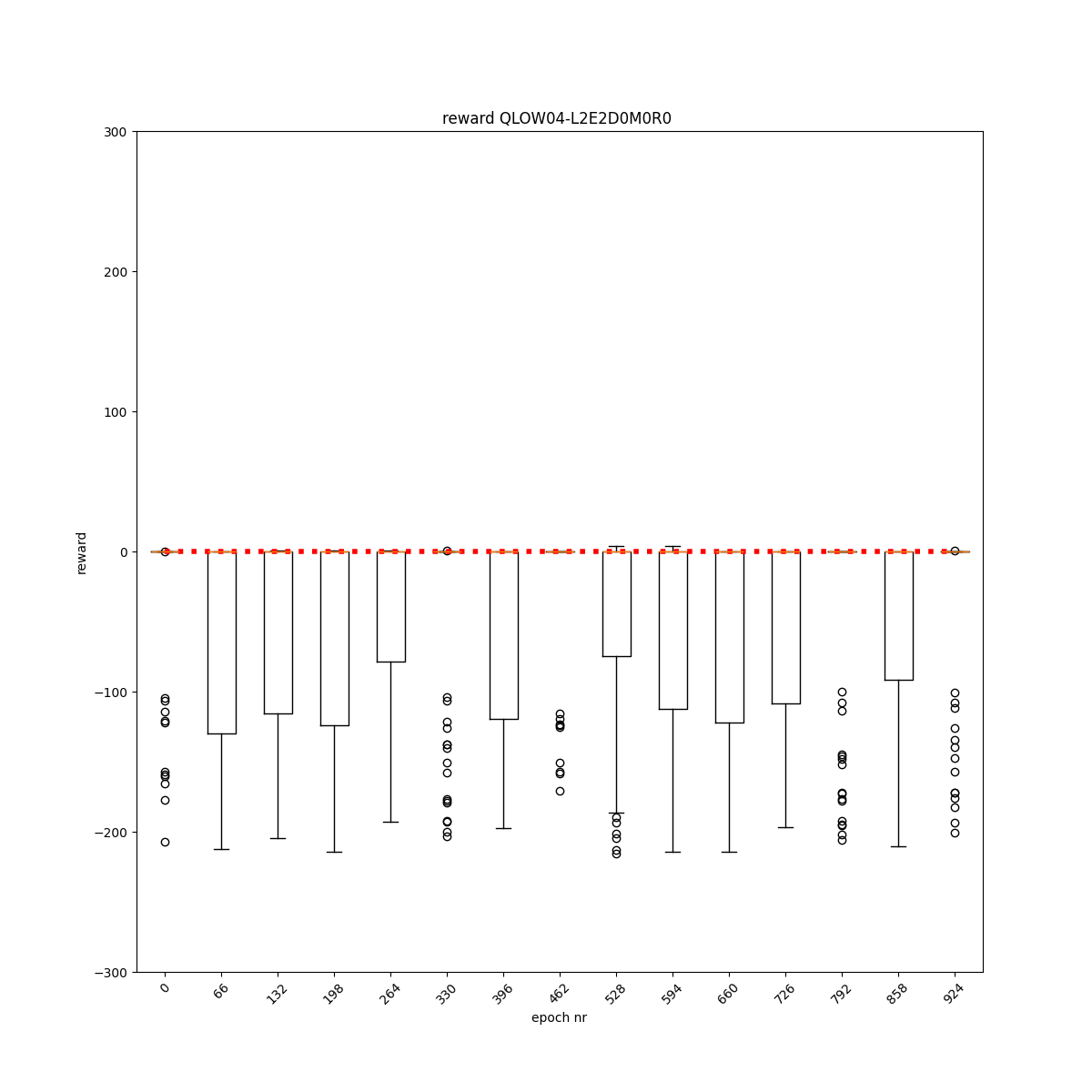

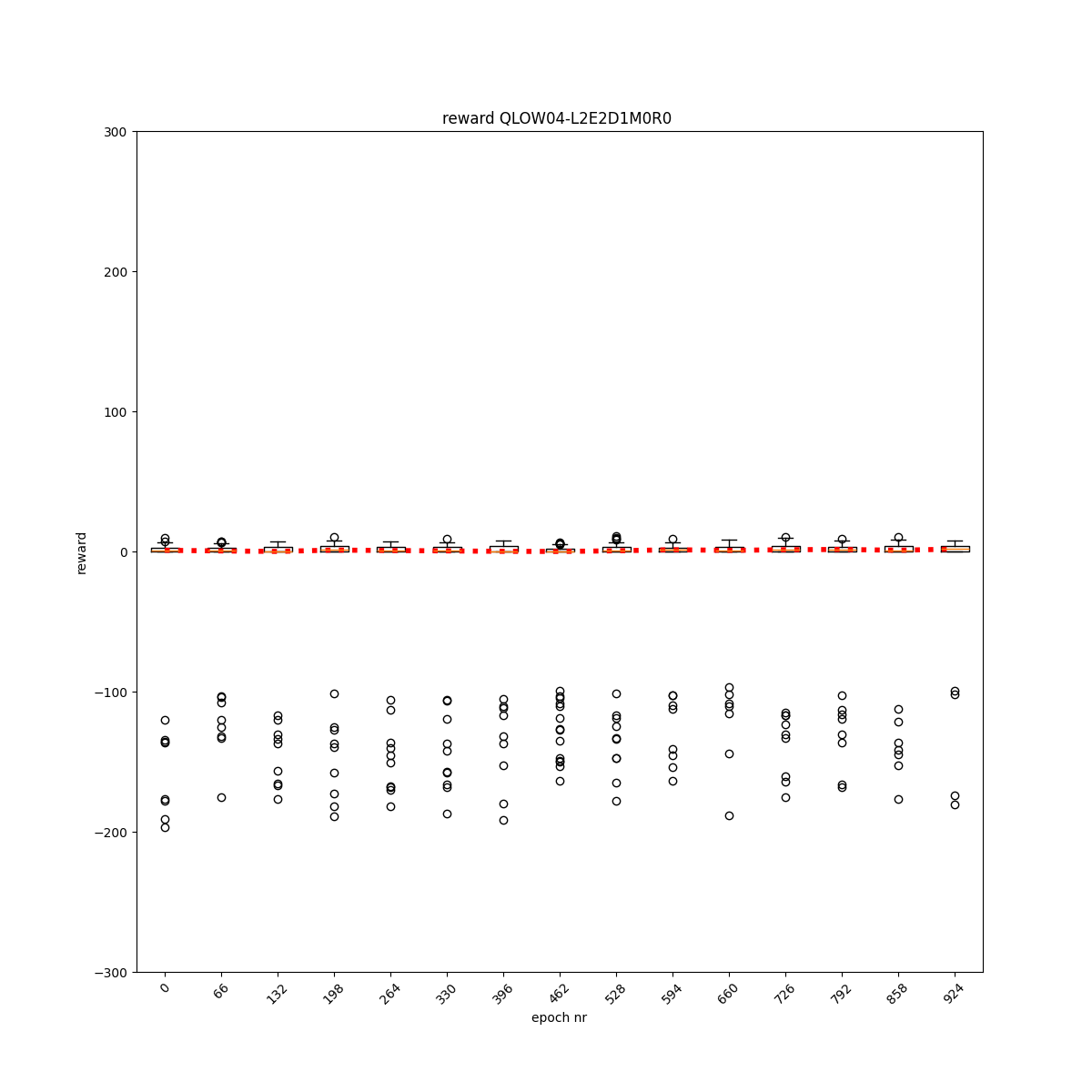

Figure panel L2 E2 D0 M0 R0: two q-values grids, each showing video 0 through video 11.

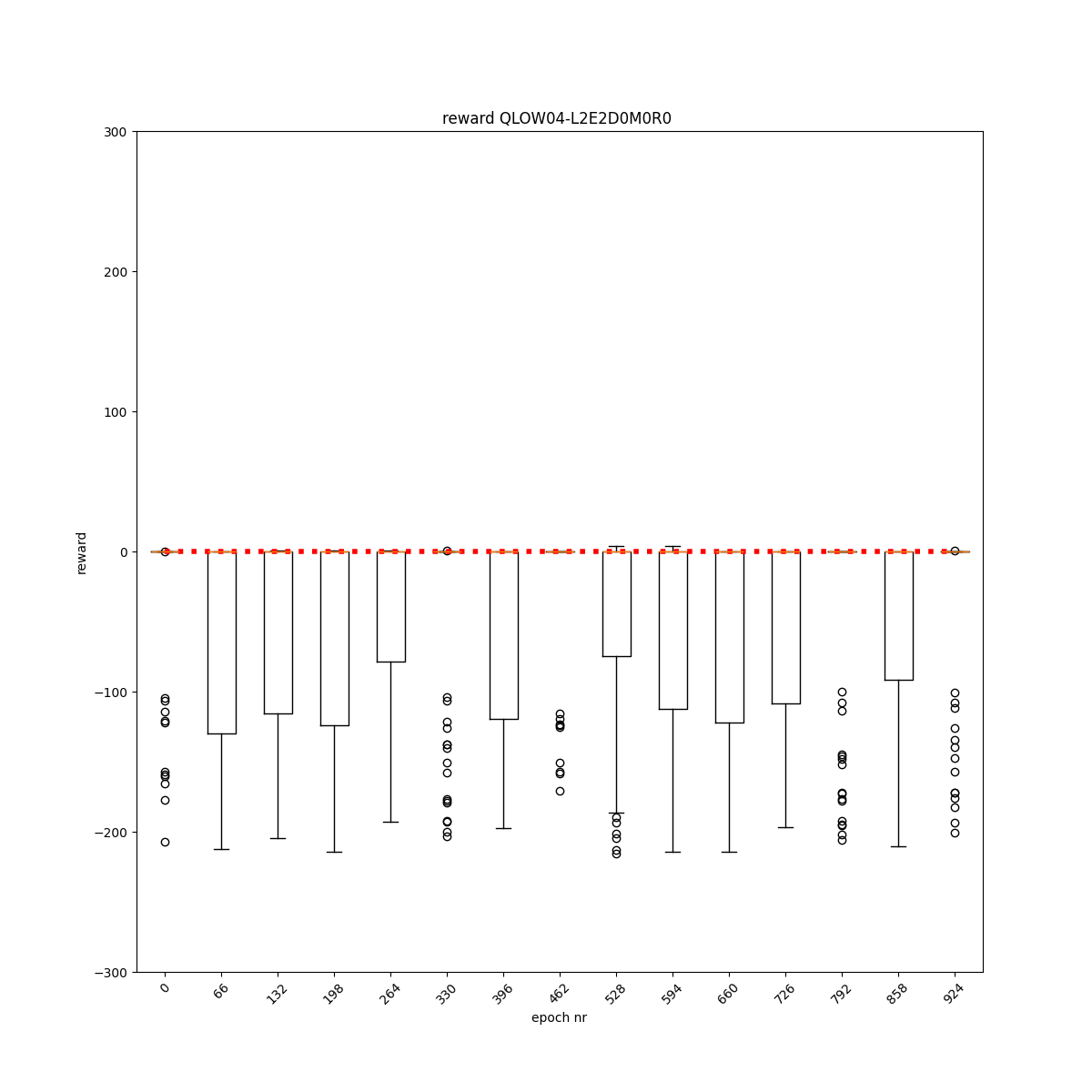

Figure panel L2 E2 D1 M0 R0: two q-values grids, each showing video 0 through video 11.

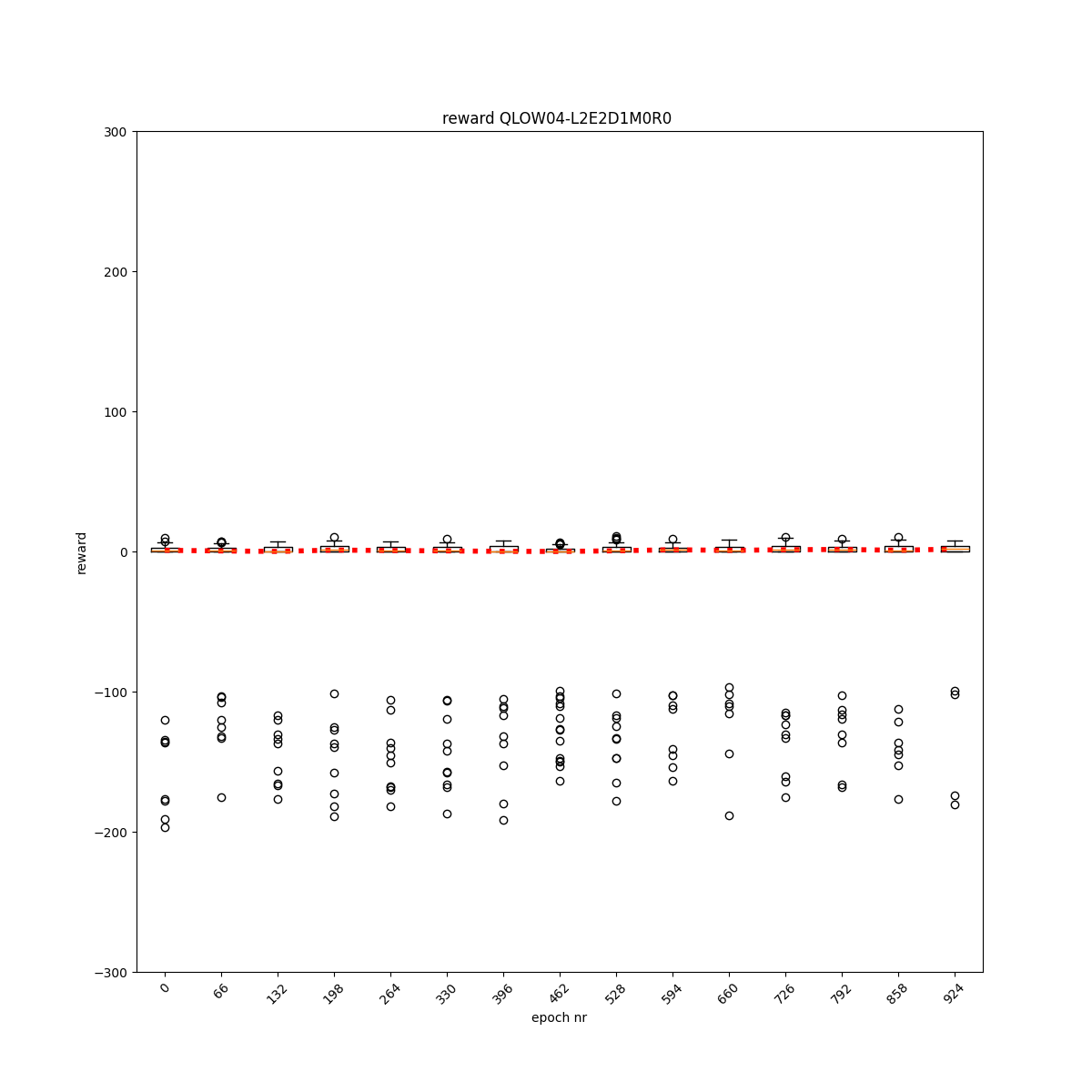

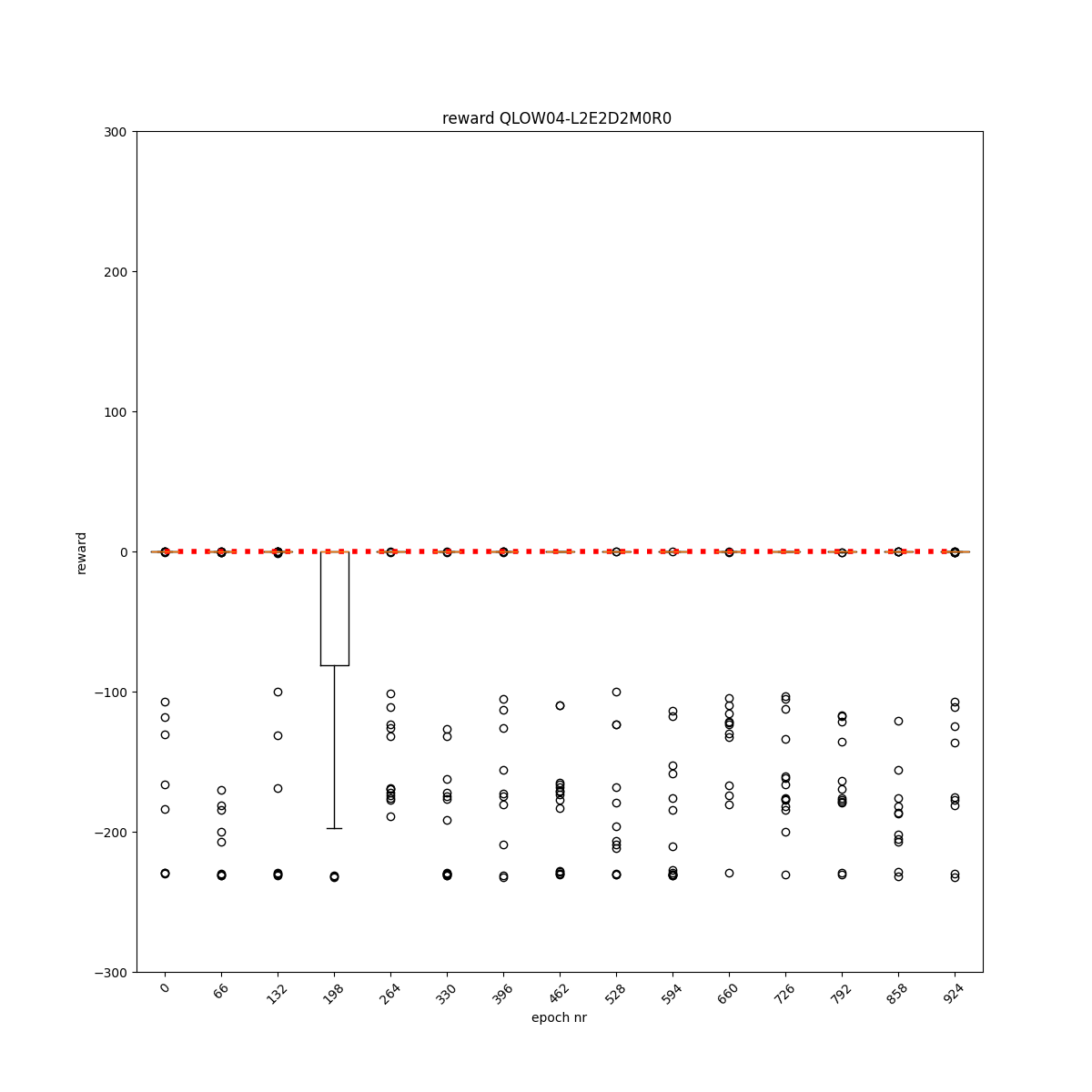

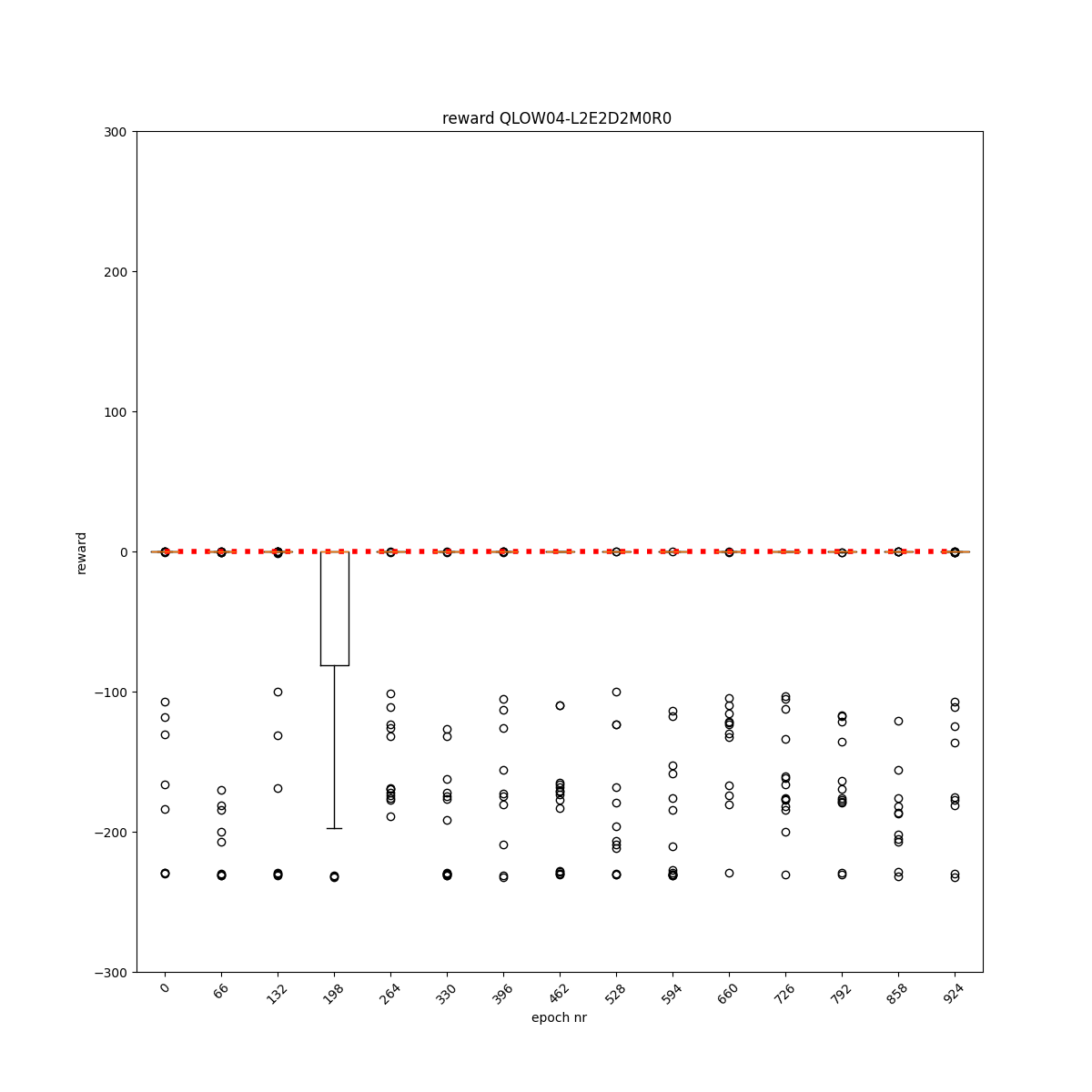

Figure panel L2 E2 D2 M0 R0: two q-values grids, each showing video 0 through video 11.

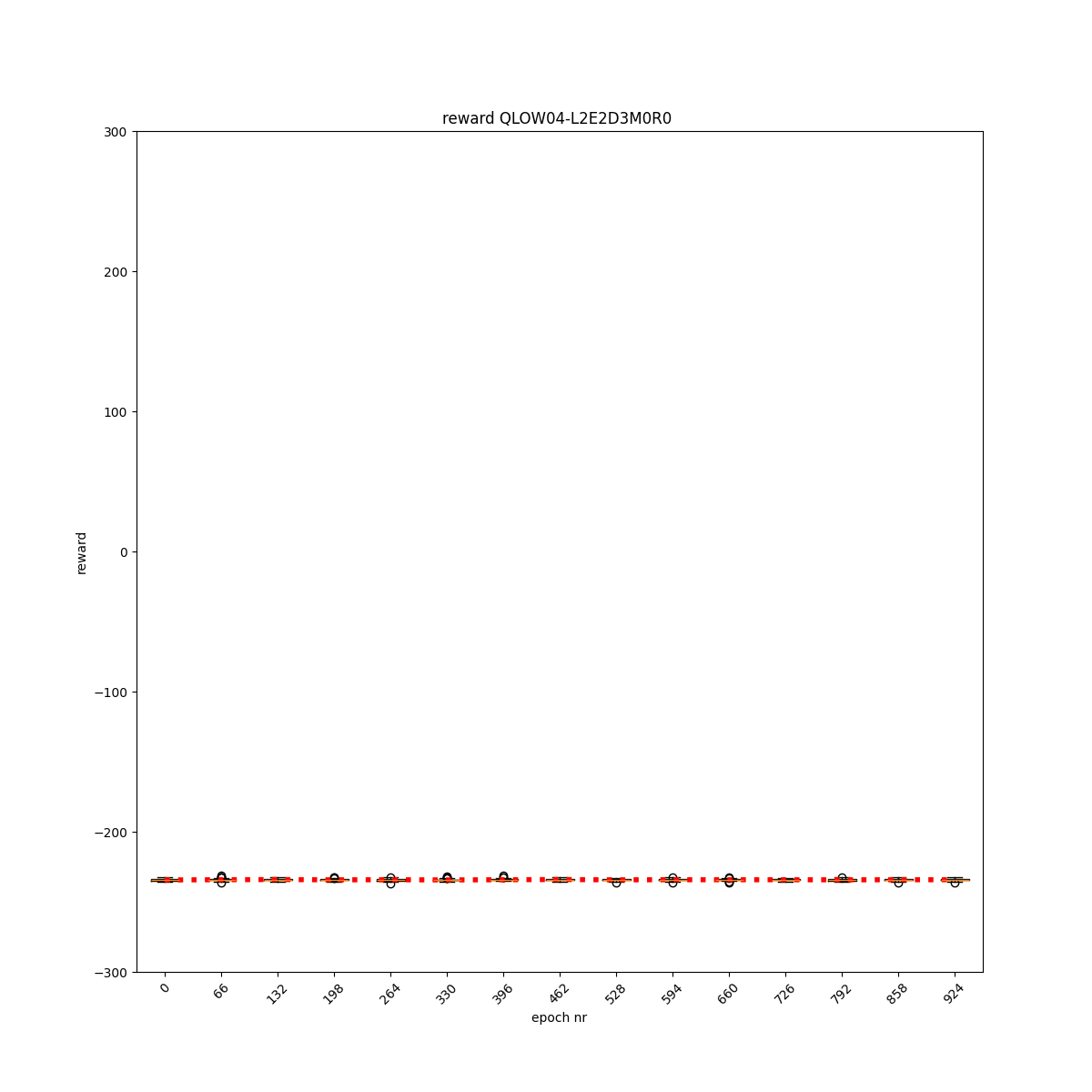

Figure panel L2 E2 D3 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L2 E2 D4 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L2 E2 D5 M0 R0: two q-values grids, each showing video 0 through video 11.

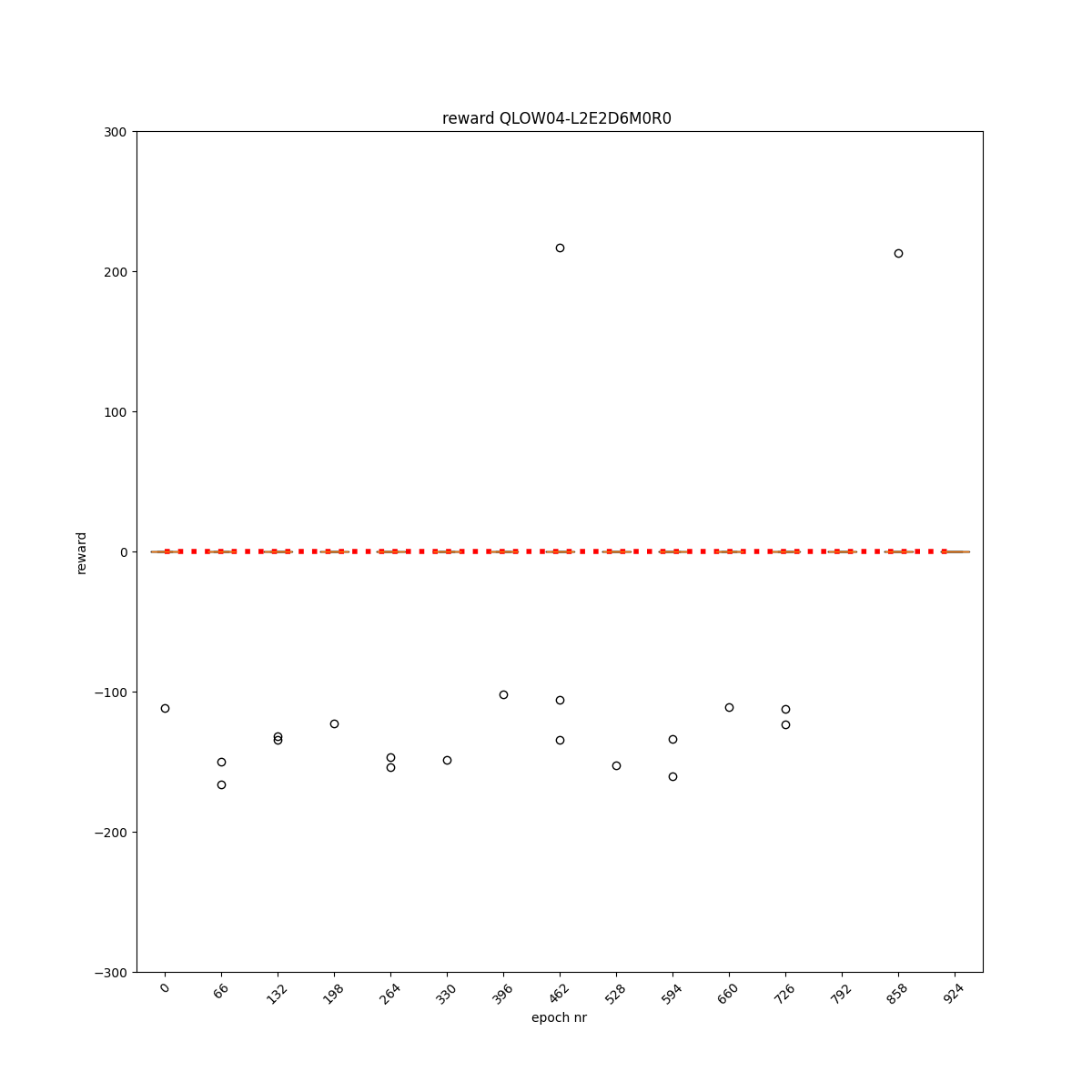

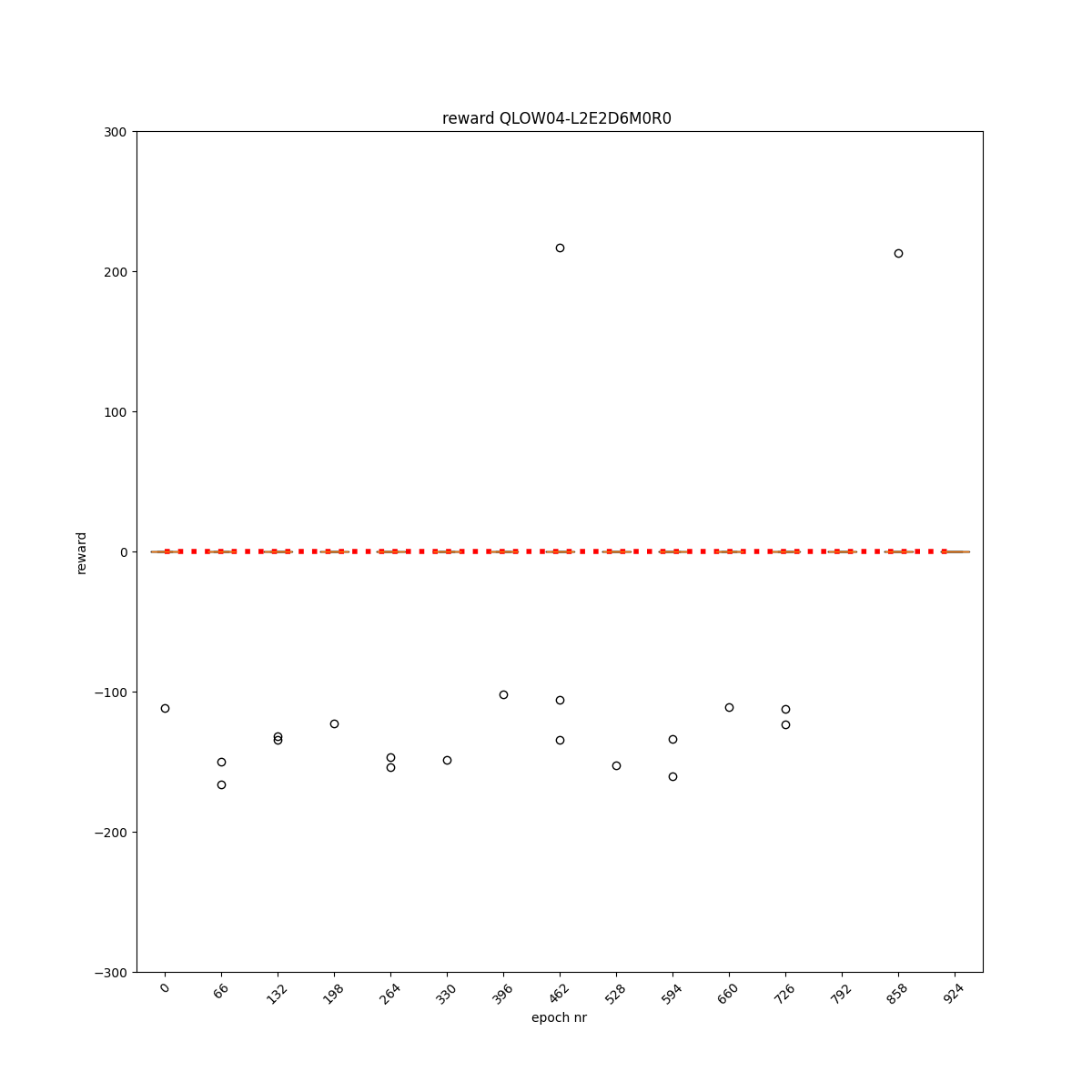

Figure panel L2 E2 D6 M0 R0: two q-values grids, each showing video 0 through video 11.

Figure panel L2 E2 D7 M0 R0: two q-values grids, each showing video 0 through video 11.