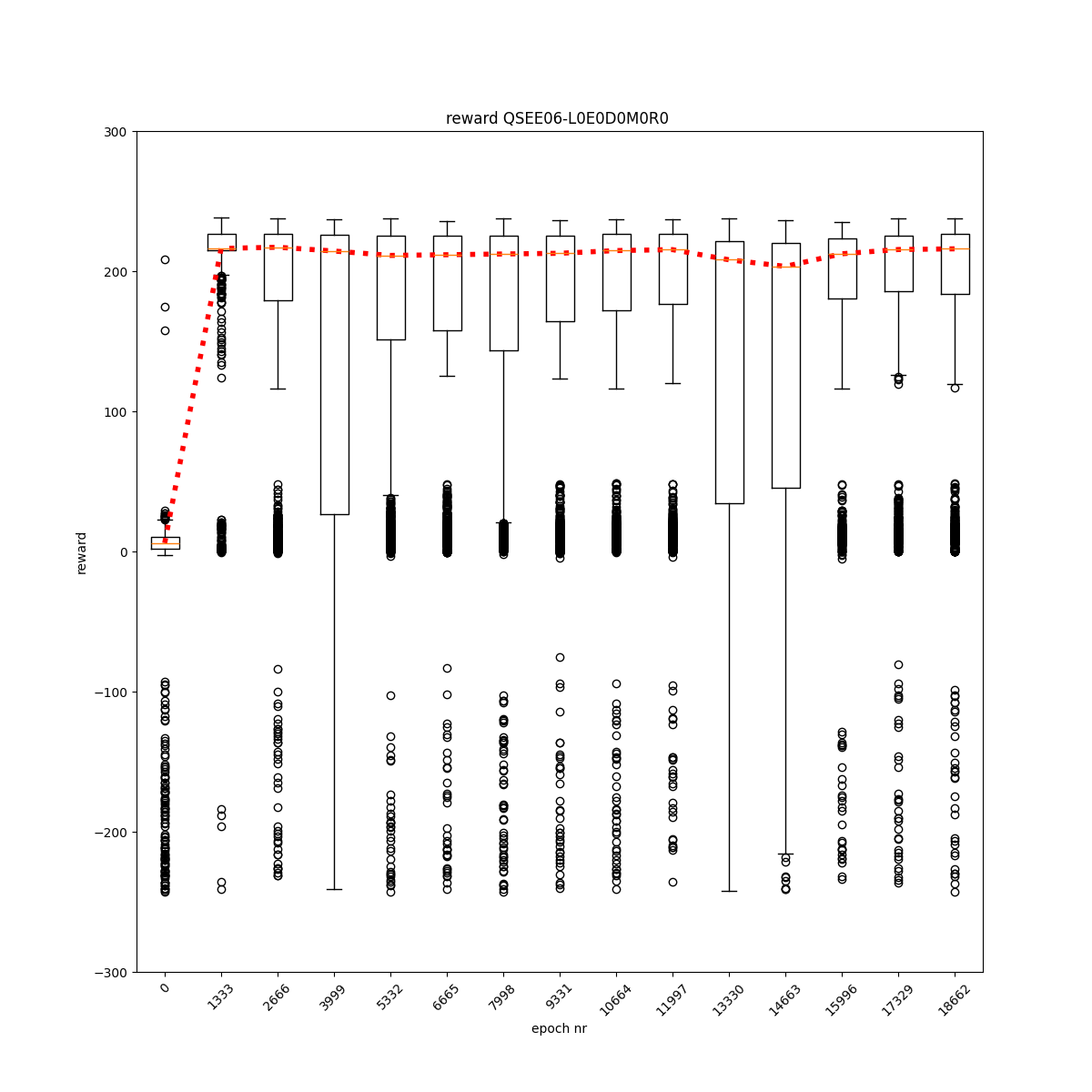

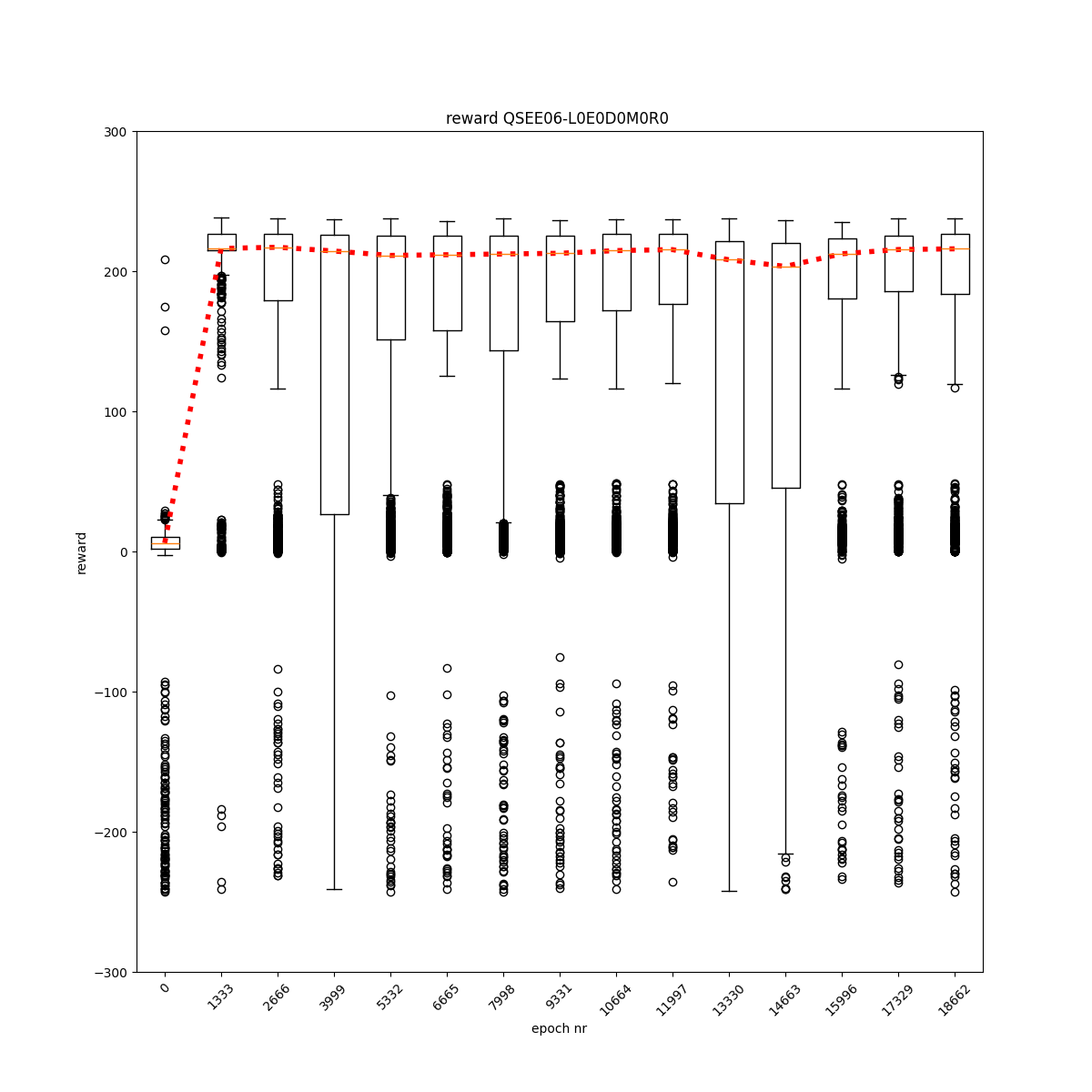

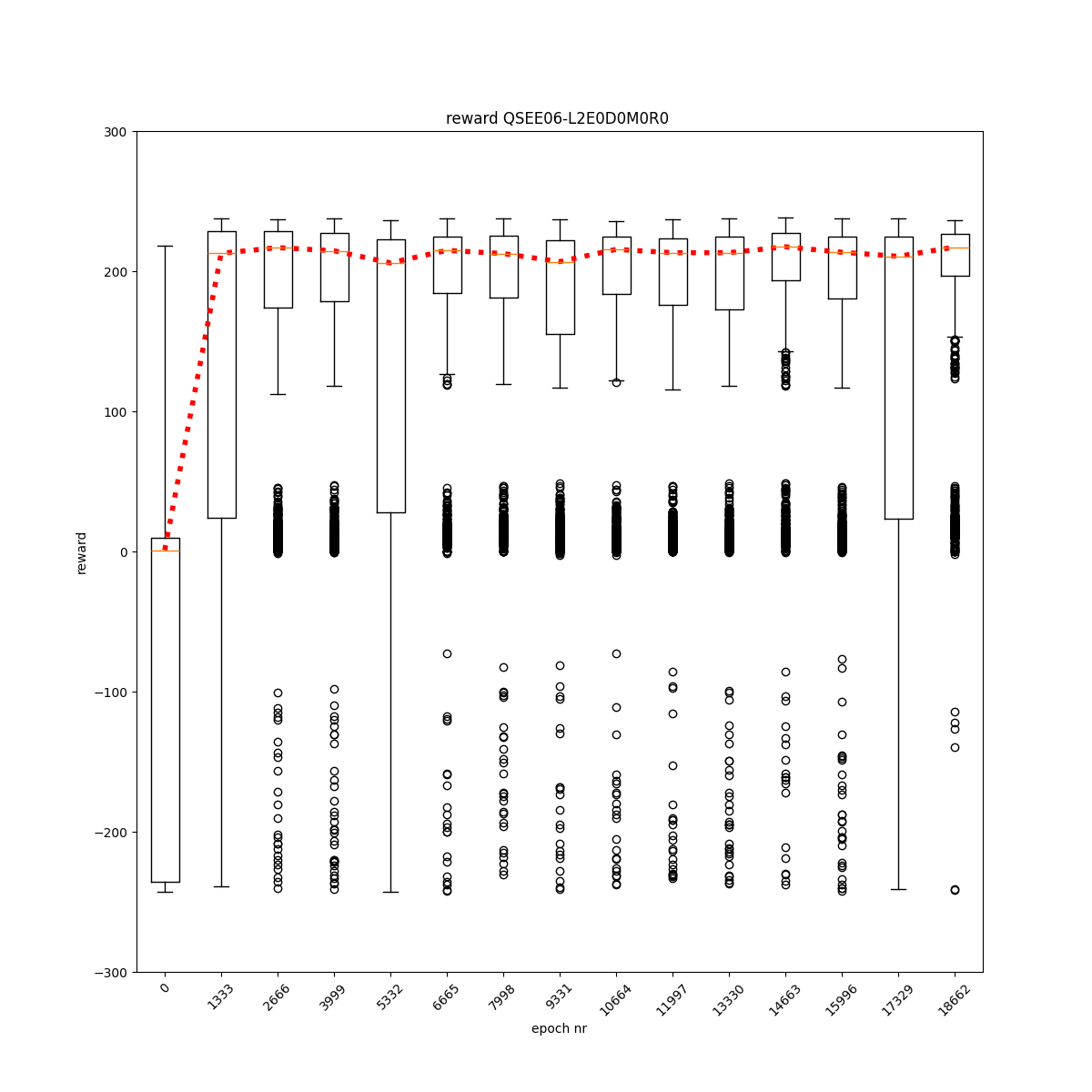

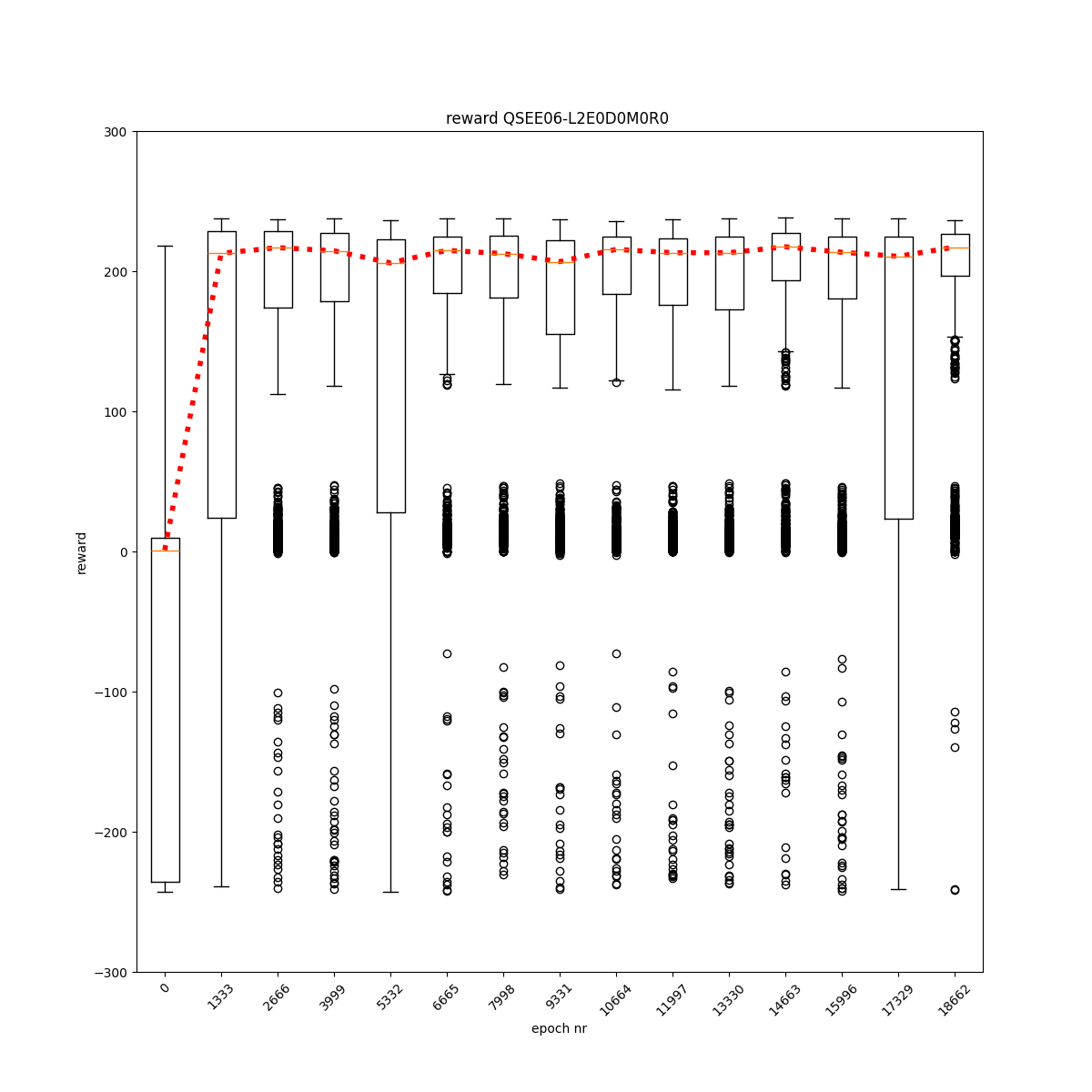

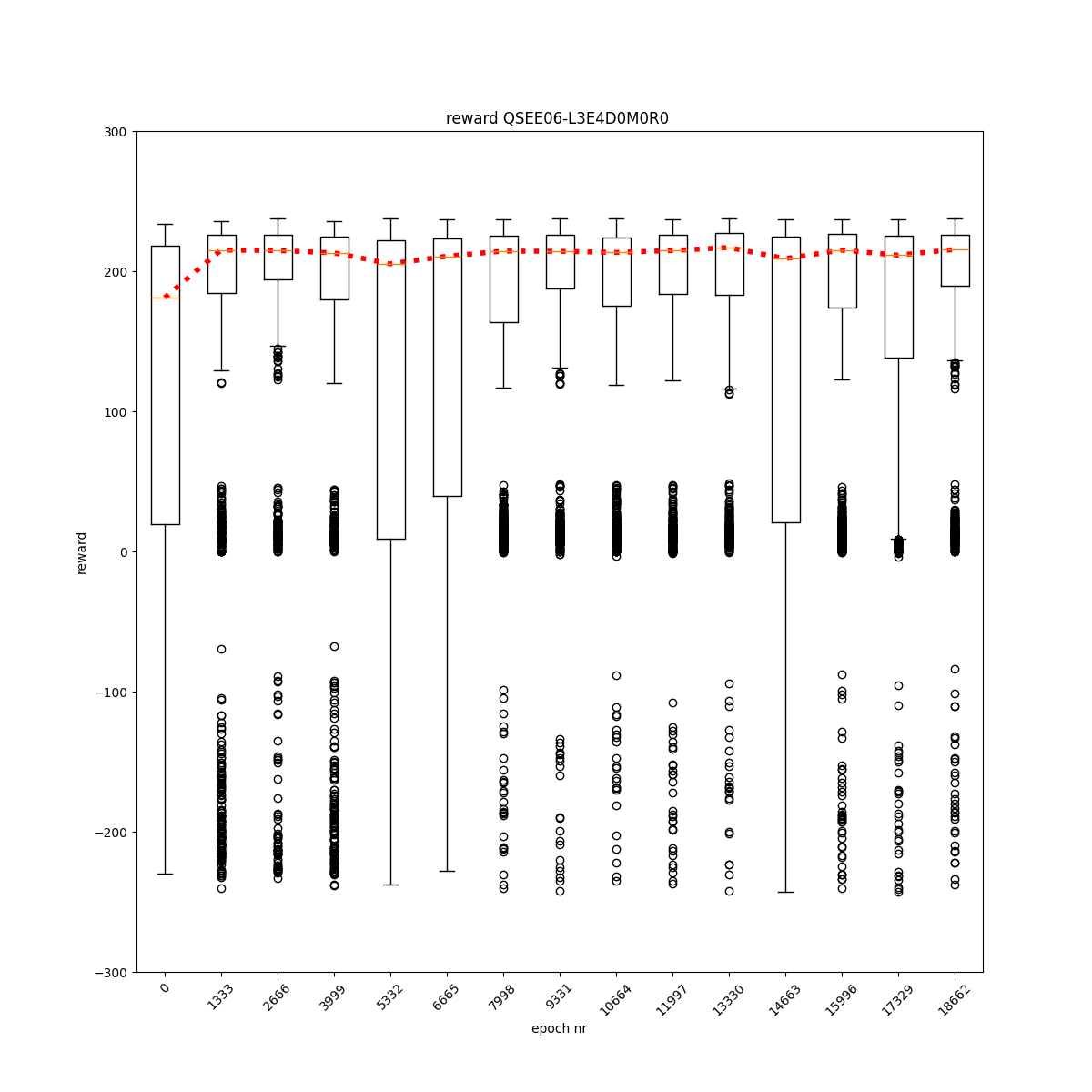

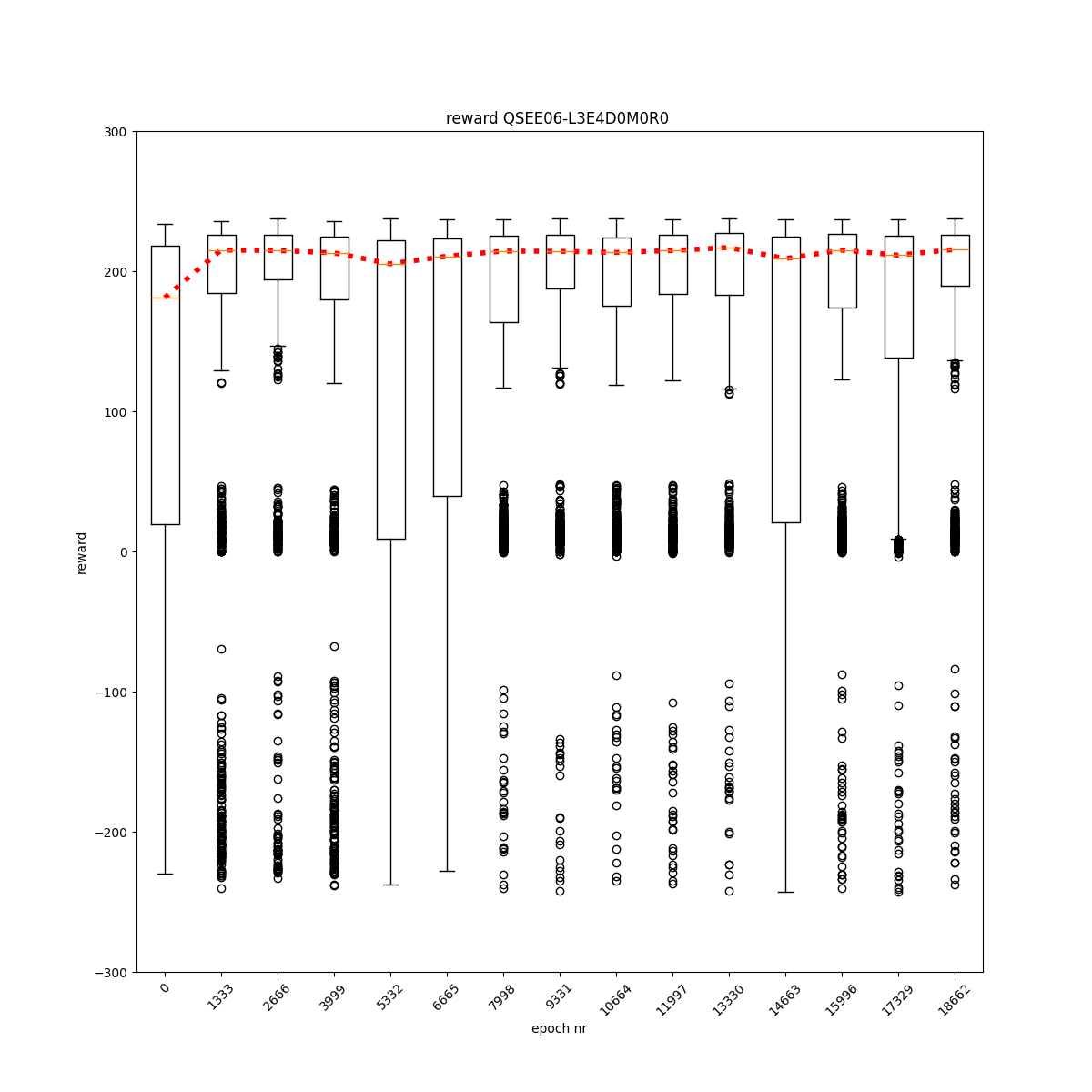

L0 E0 D0 M0 R0

q-values: videos 0-11 (two panels)

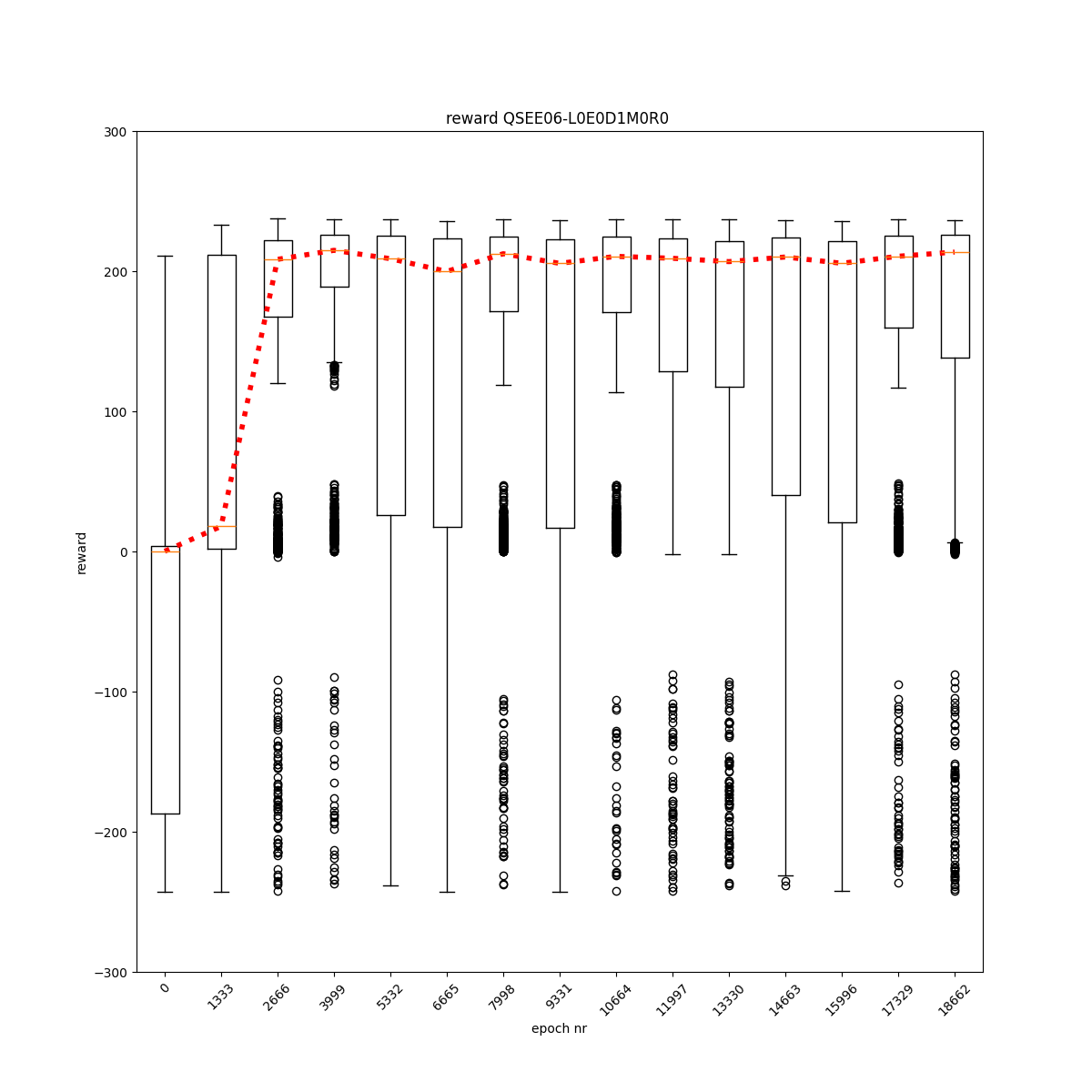

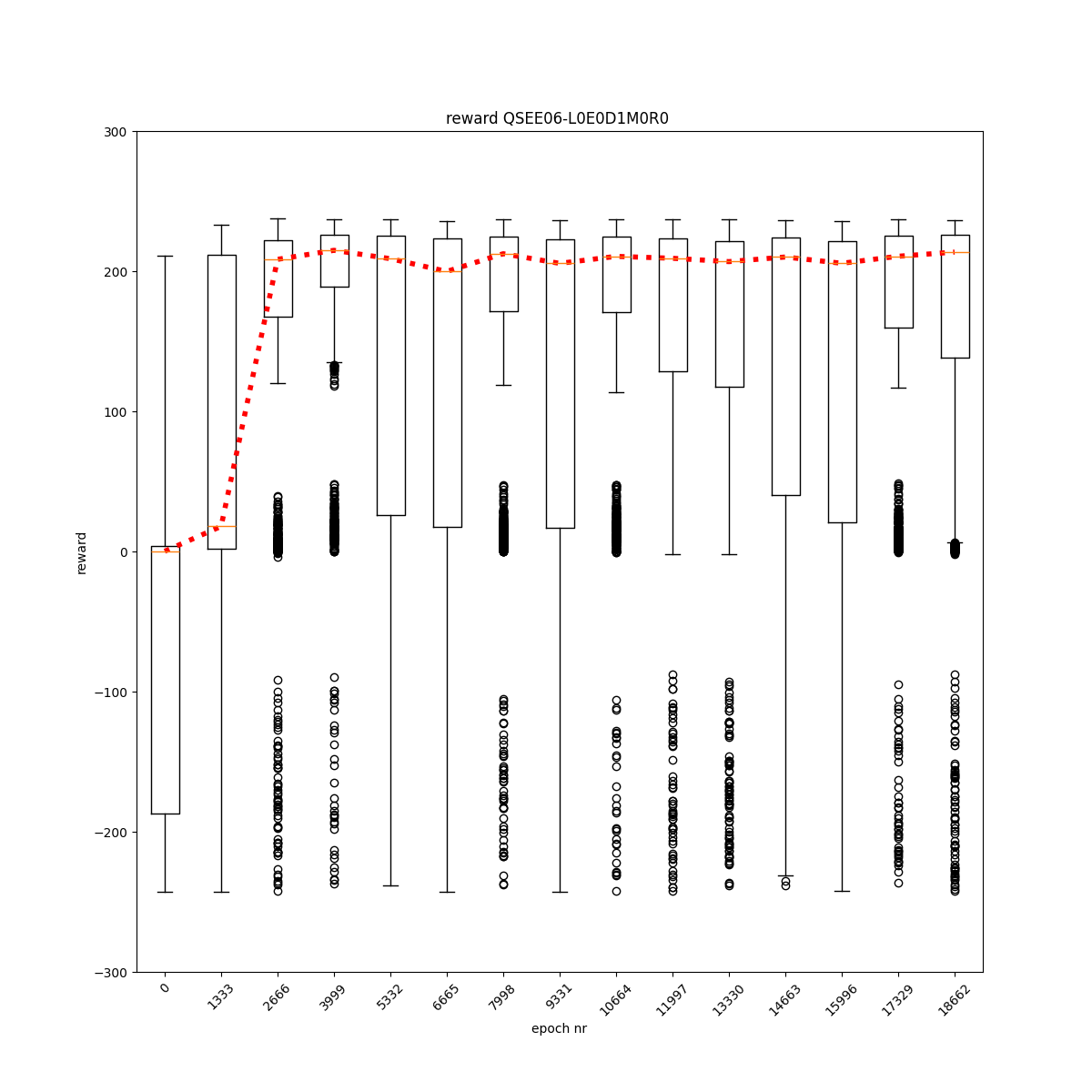

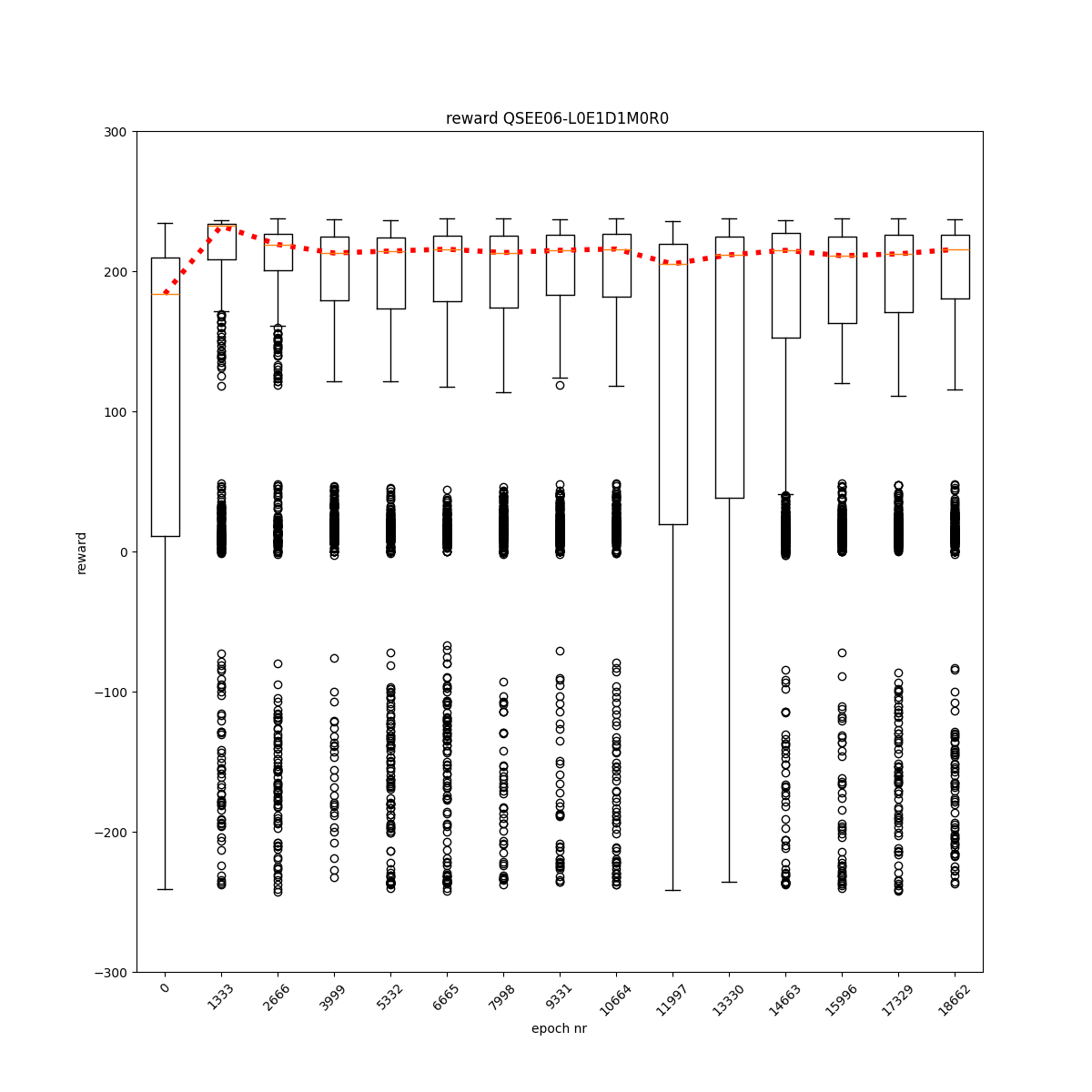

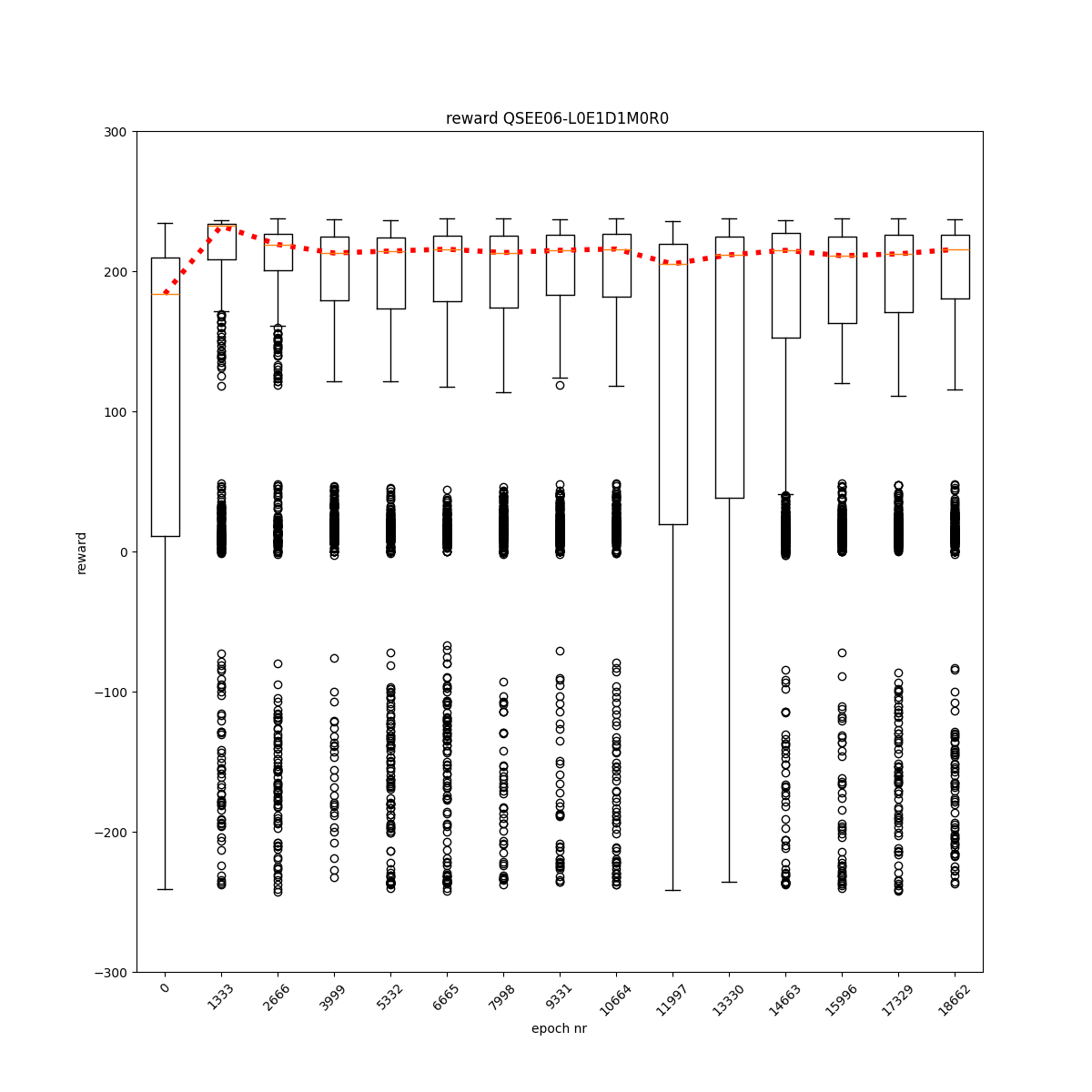

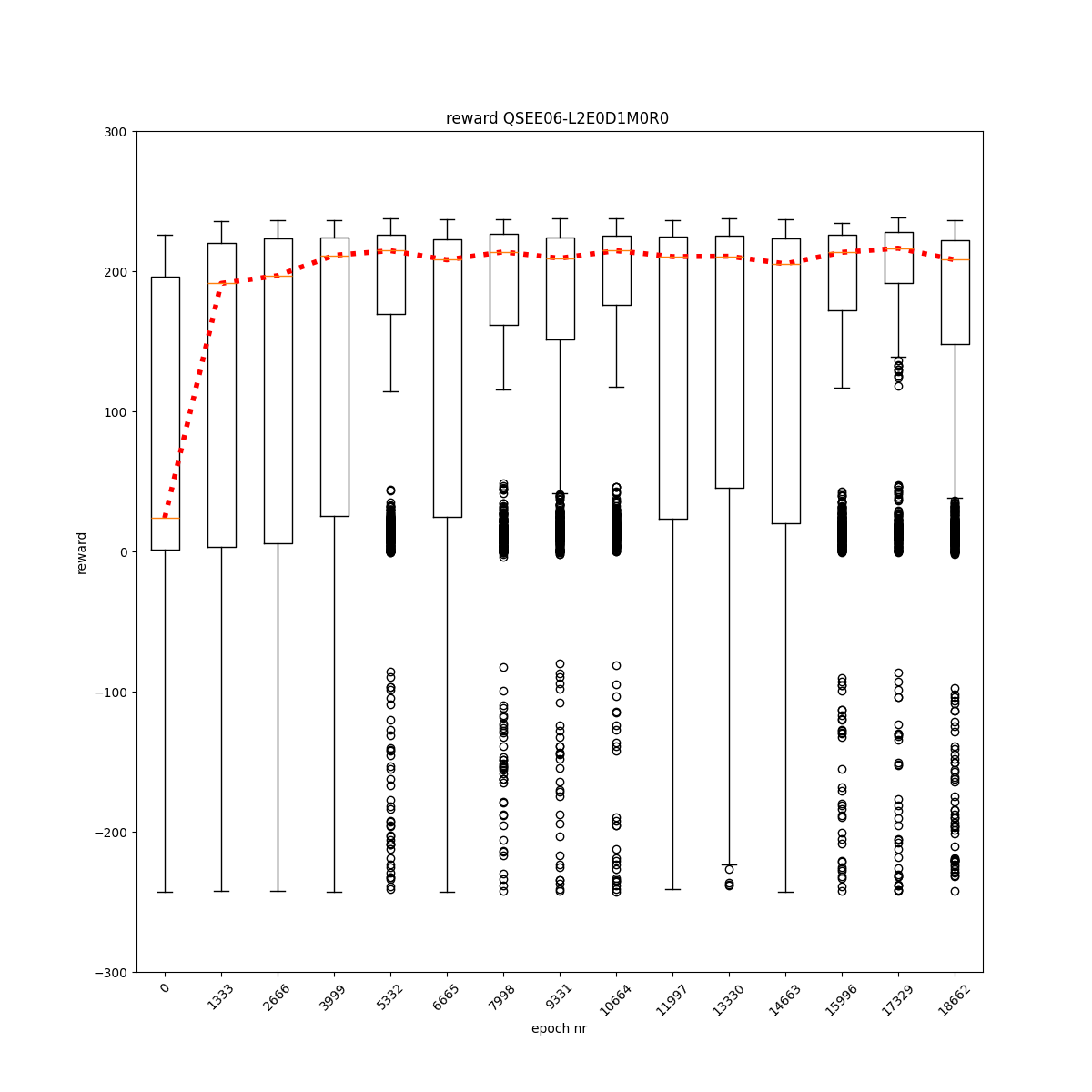

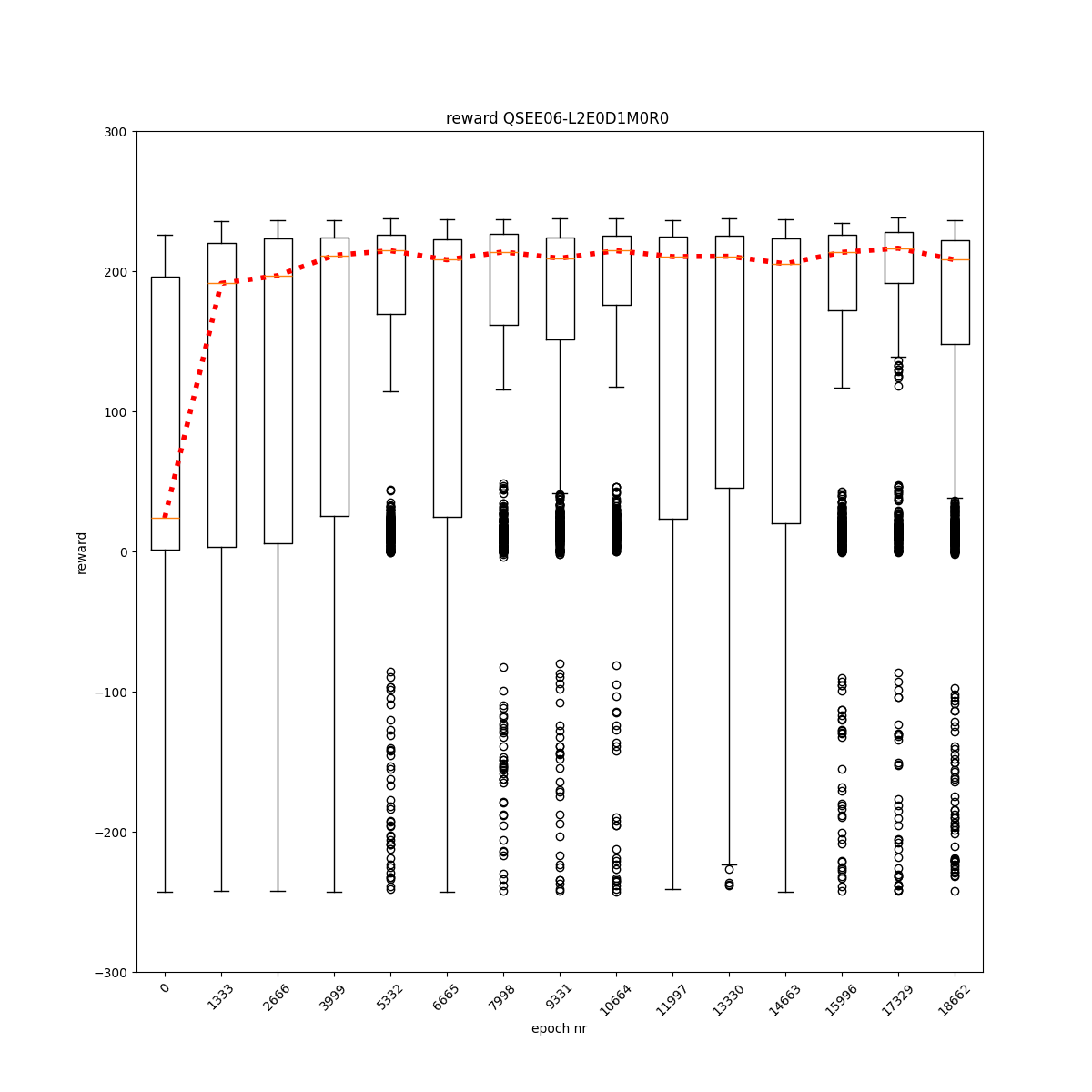

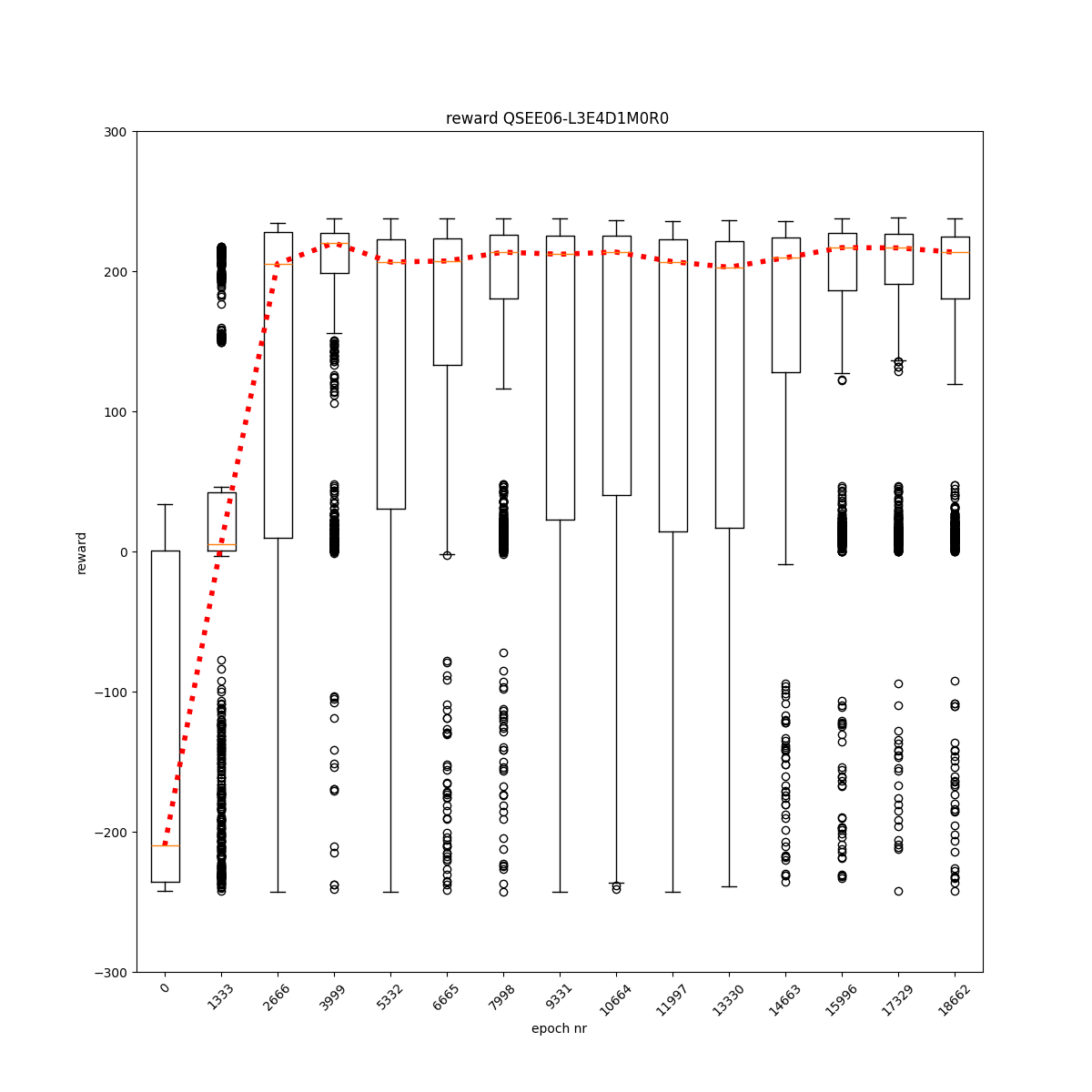

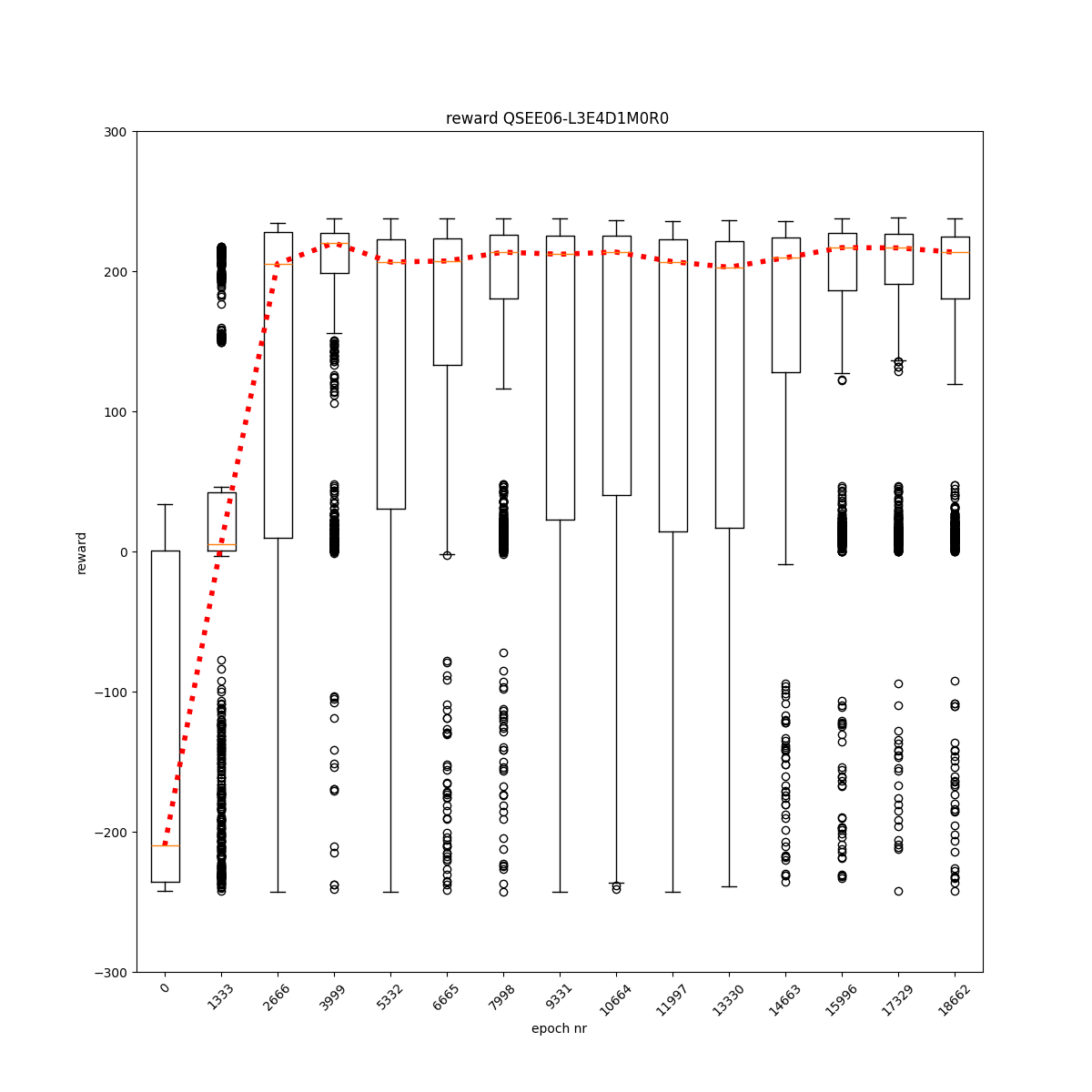

L0 E0 D1 M0 R0

q-values: videos 0-11 (two panels)

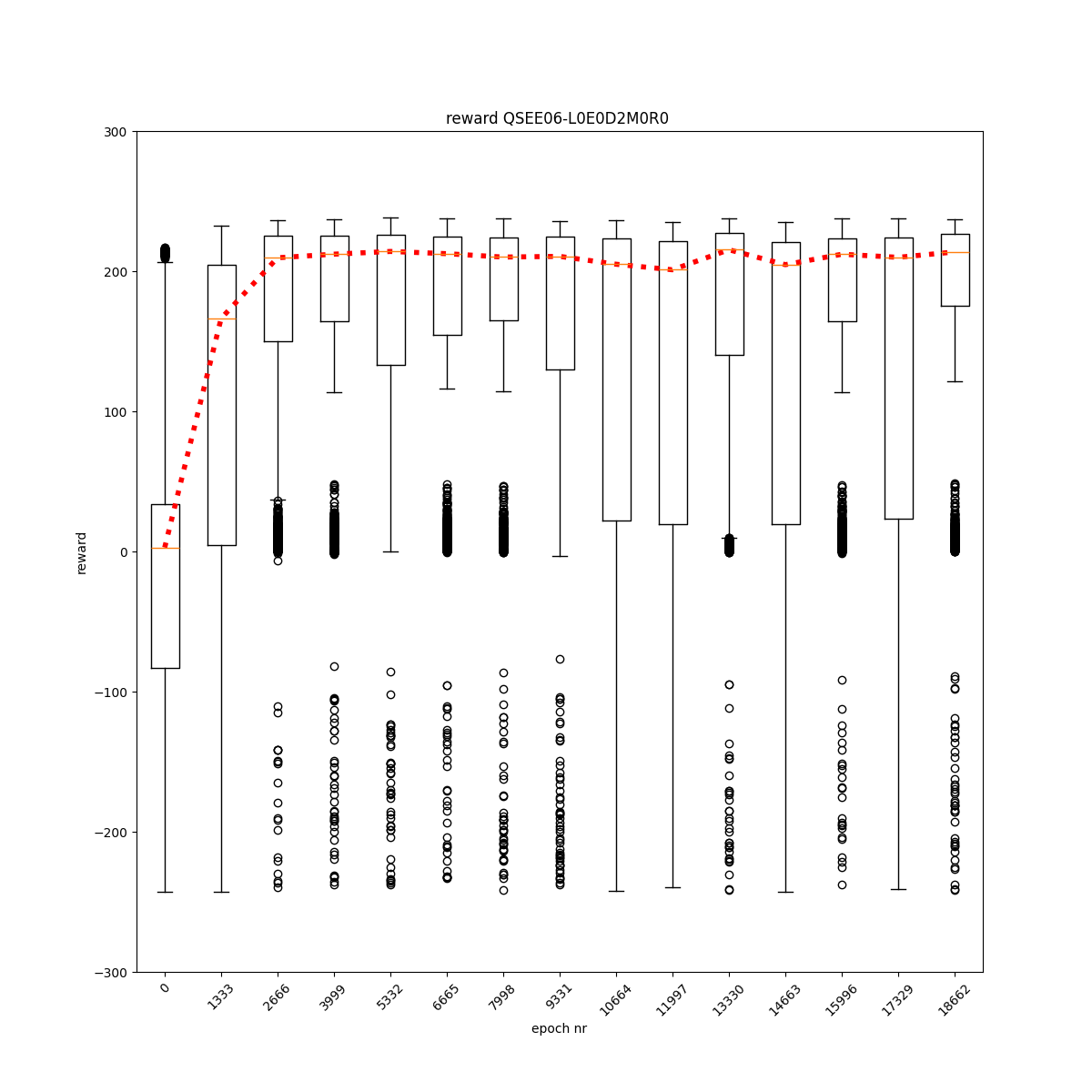

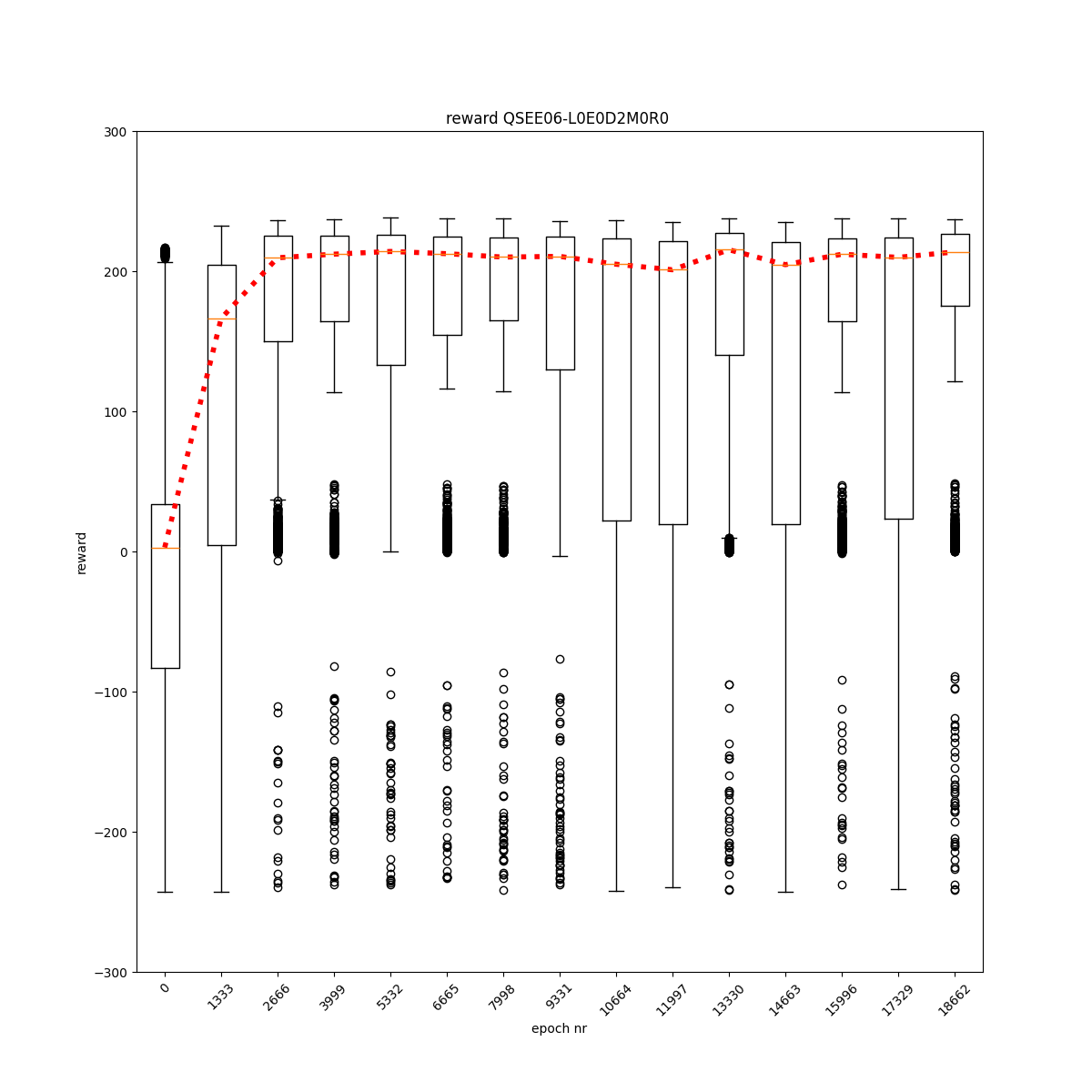

L0 E0 D2 M0 R0

q-values: videos 0-11 (two panels)

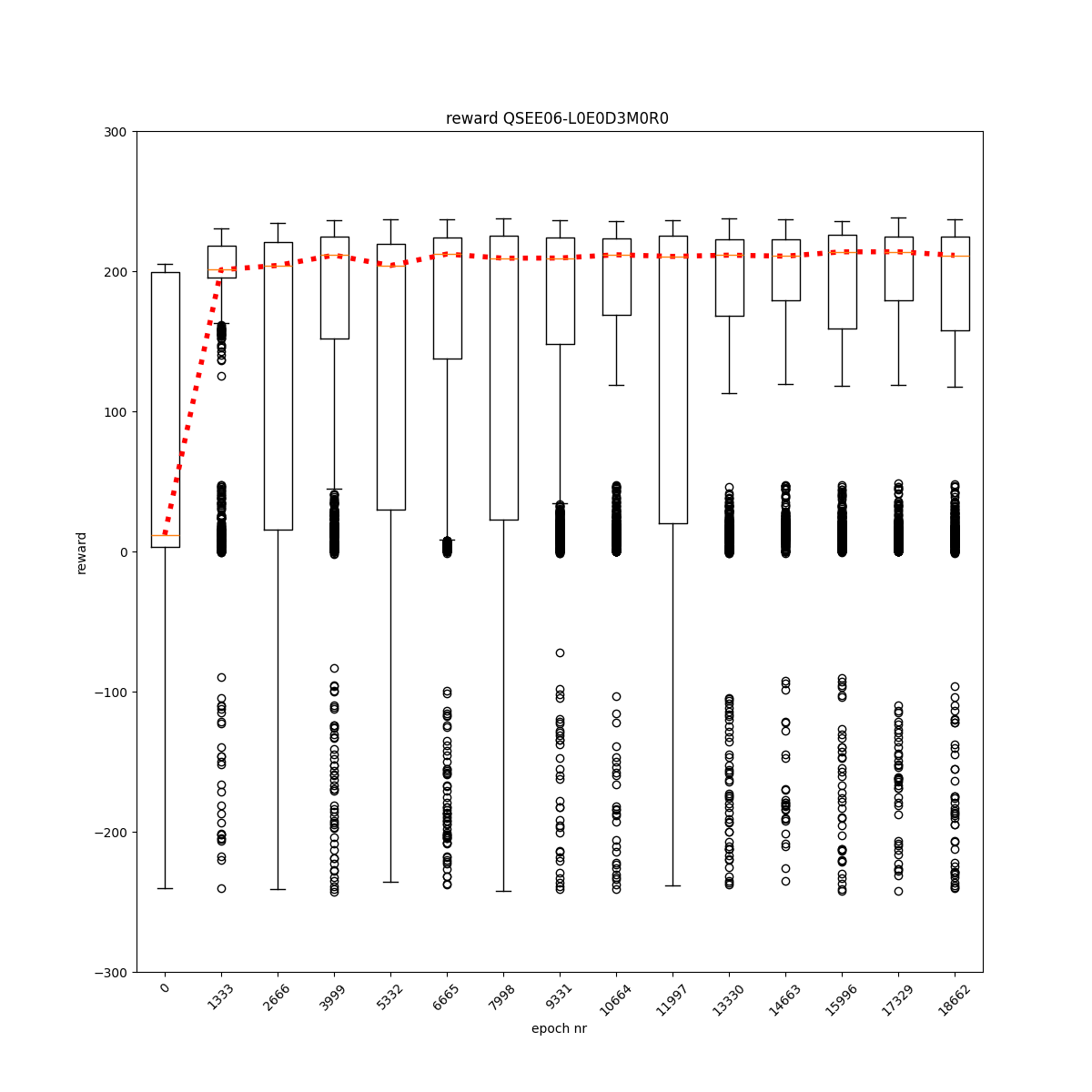

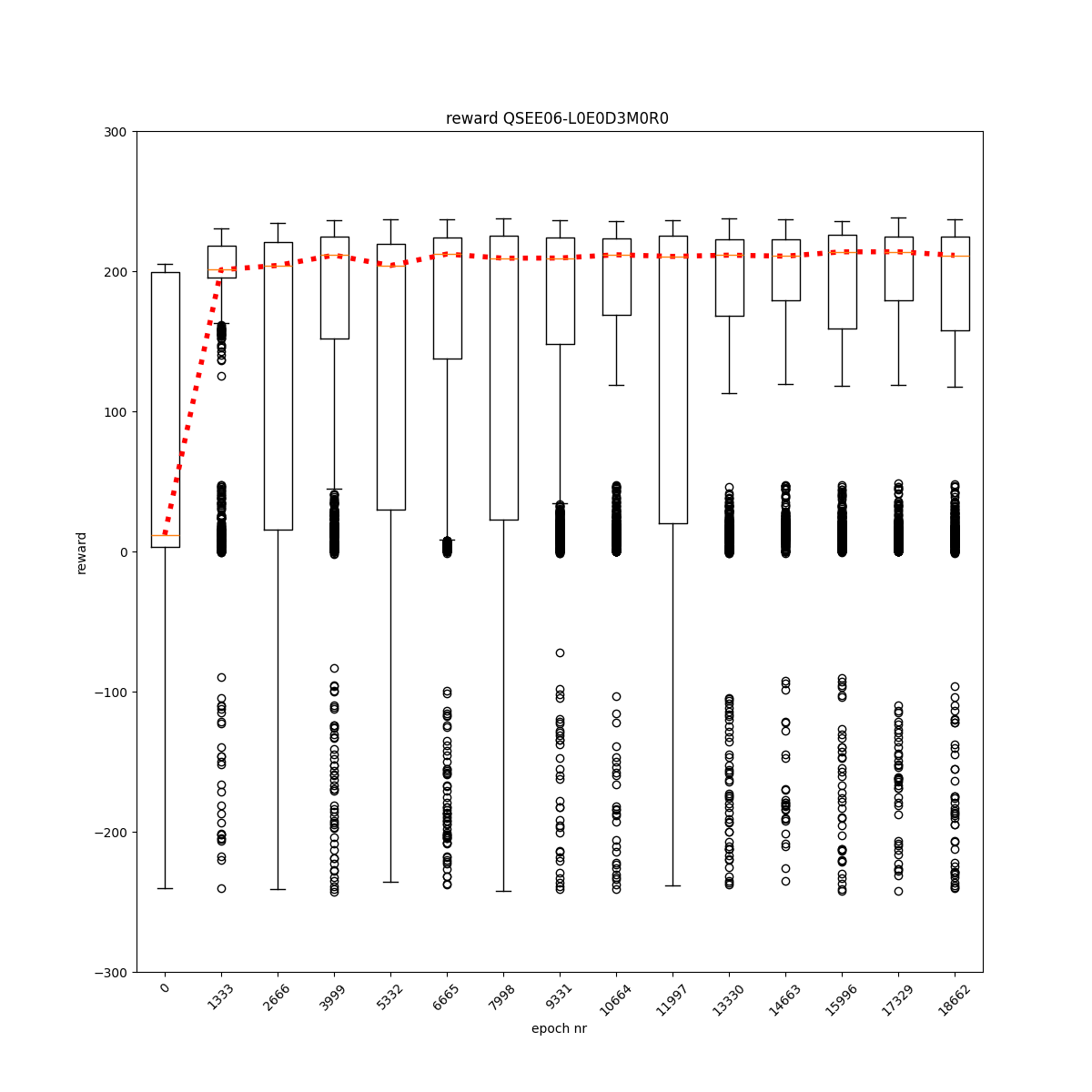

L0 E0 D3 M0 R0

q-values: videos 0-11 (two panels)

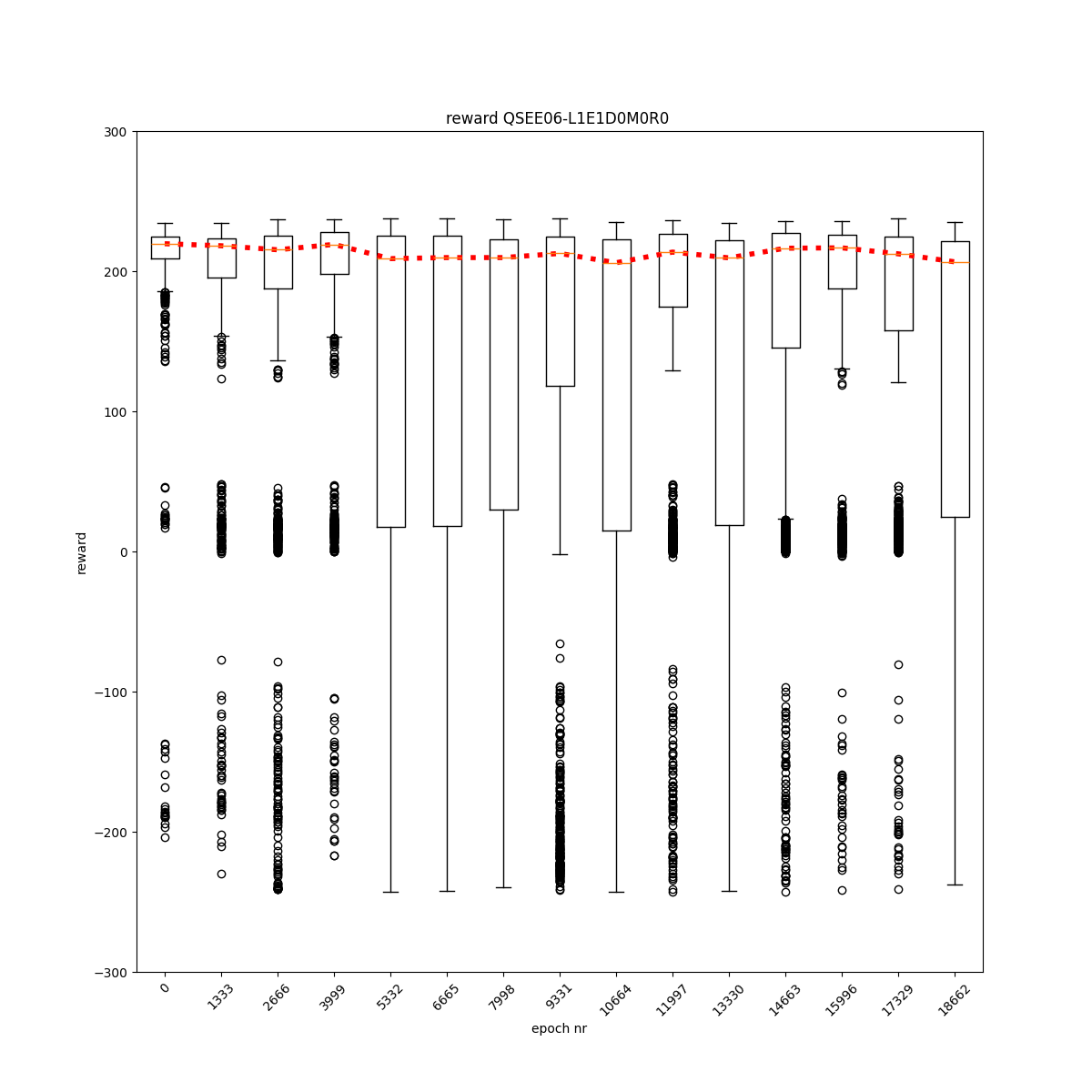

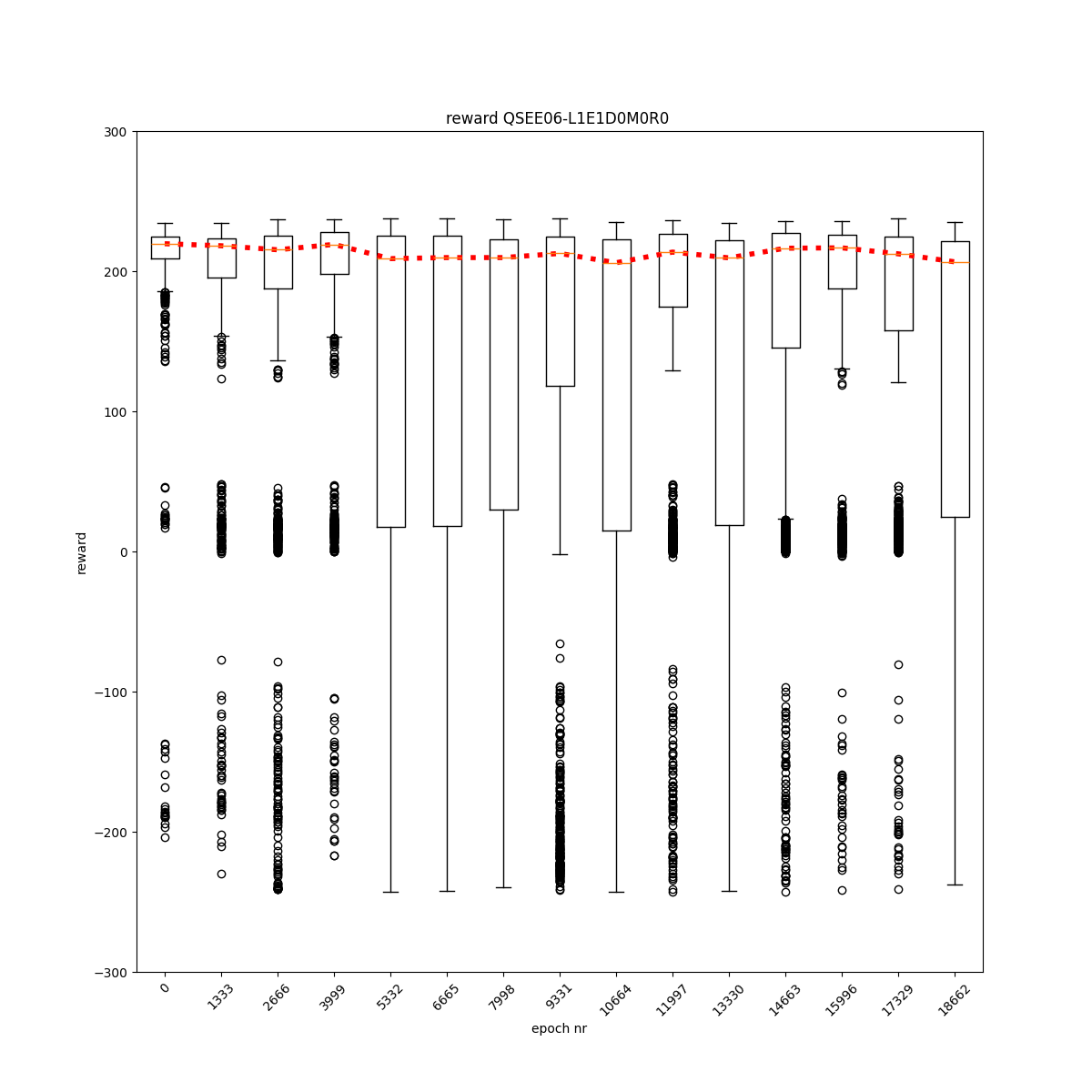

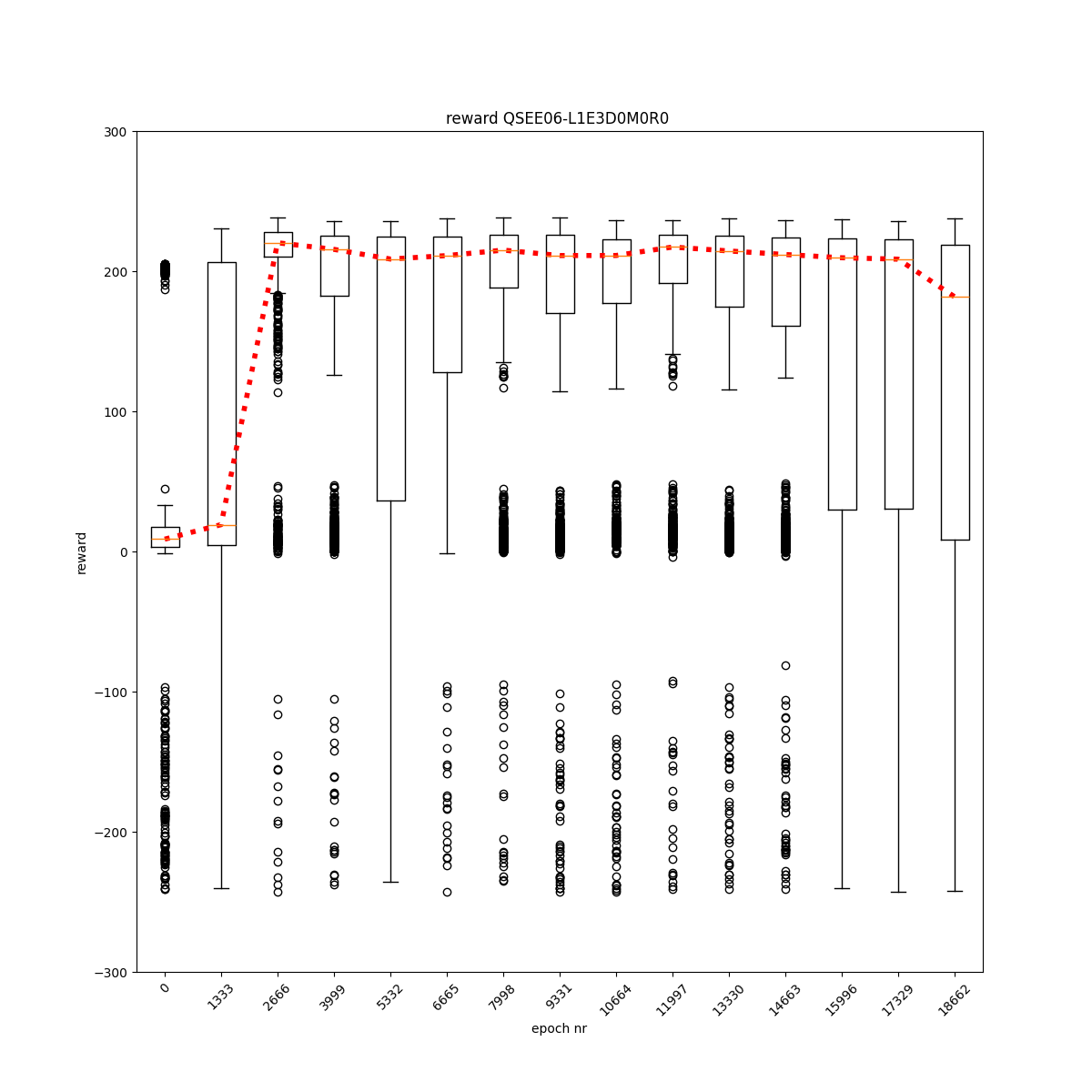

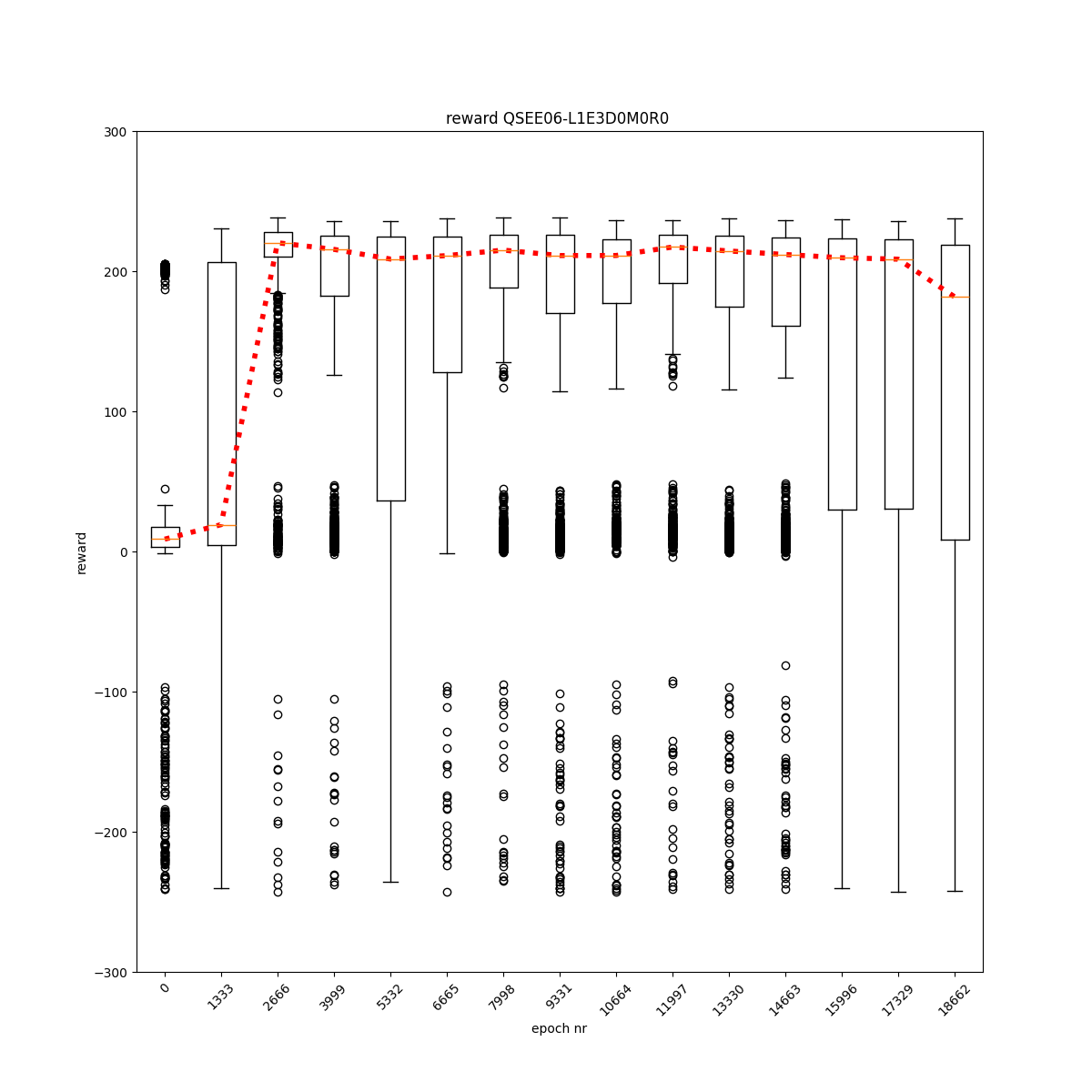

L0 E1 D0 M0 R0

q-values: videos 0-11 (two panels)

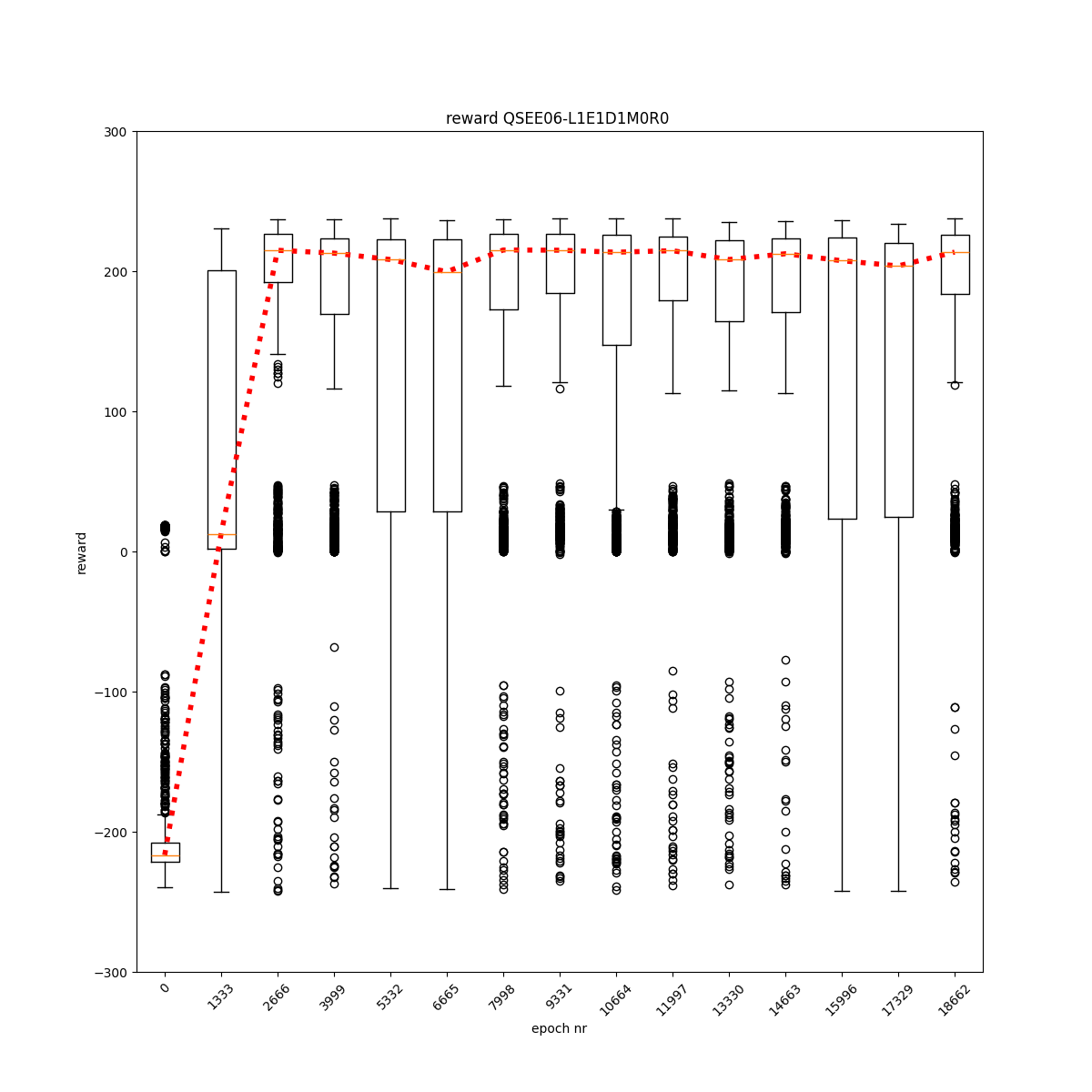

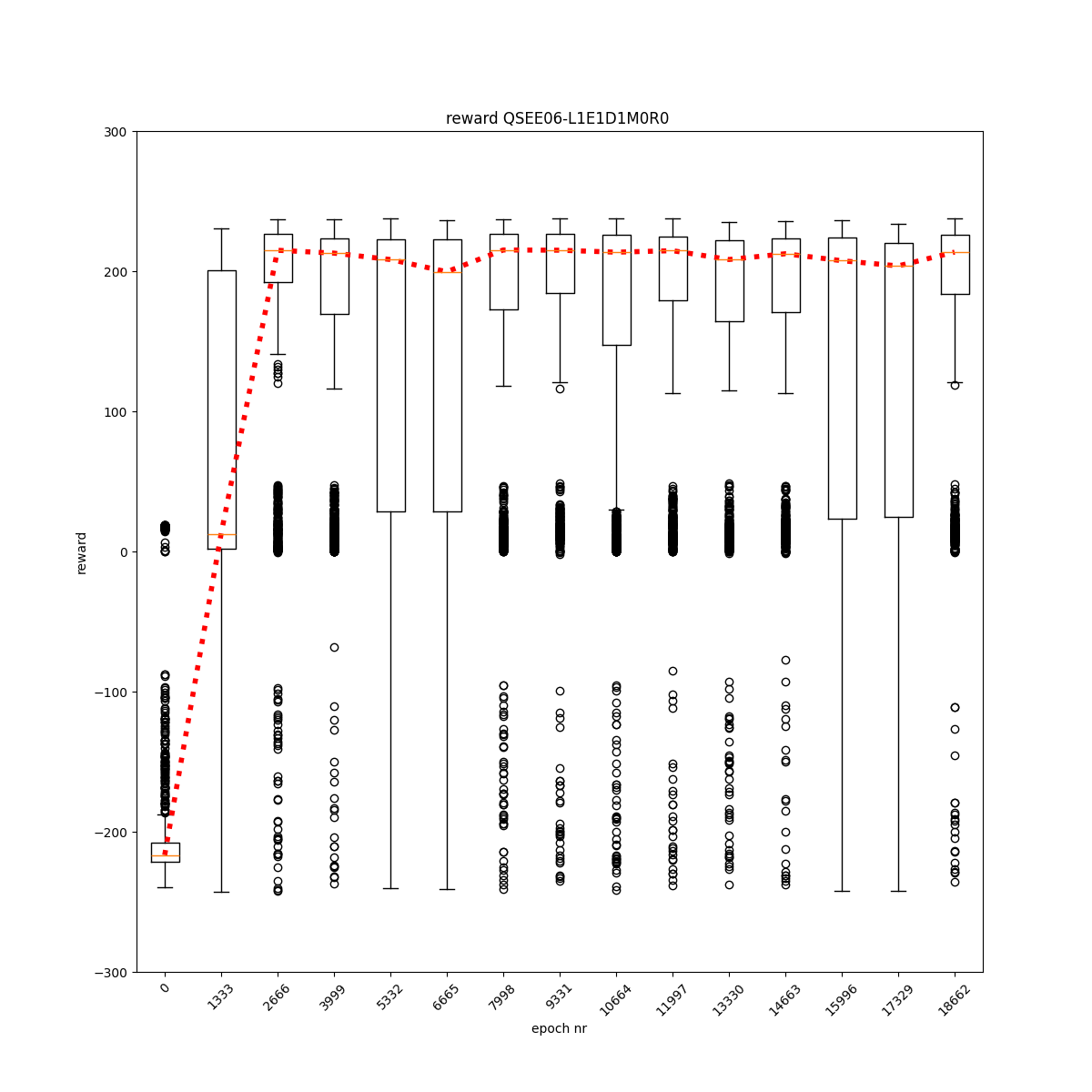

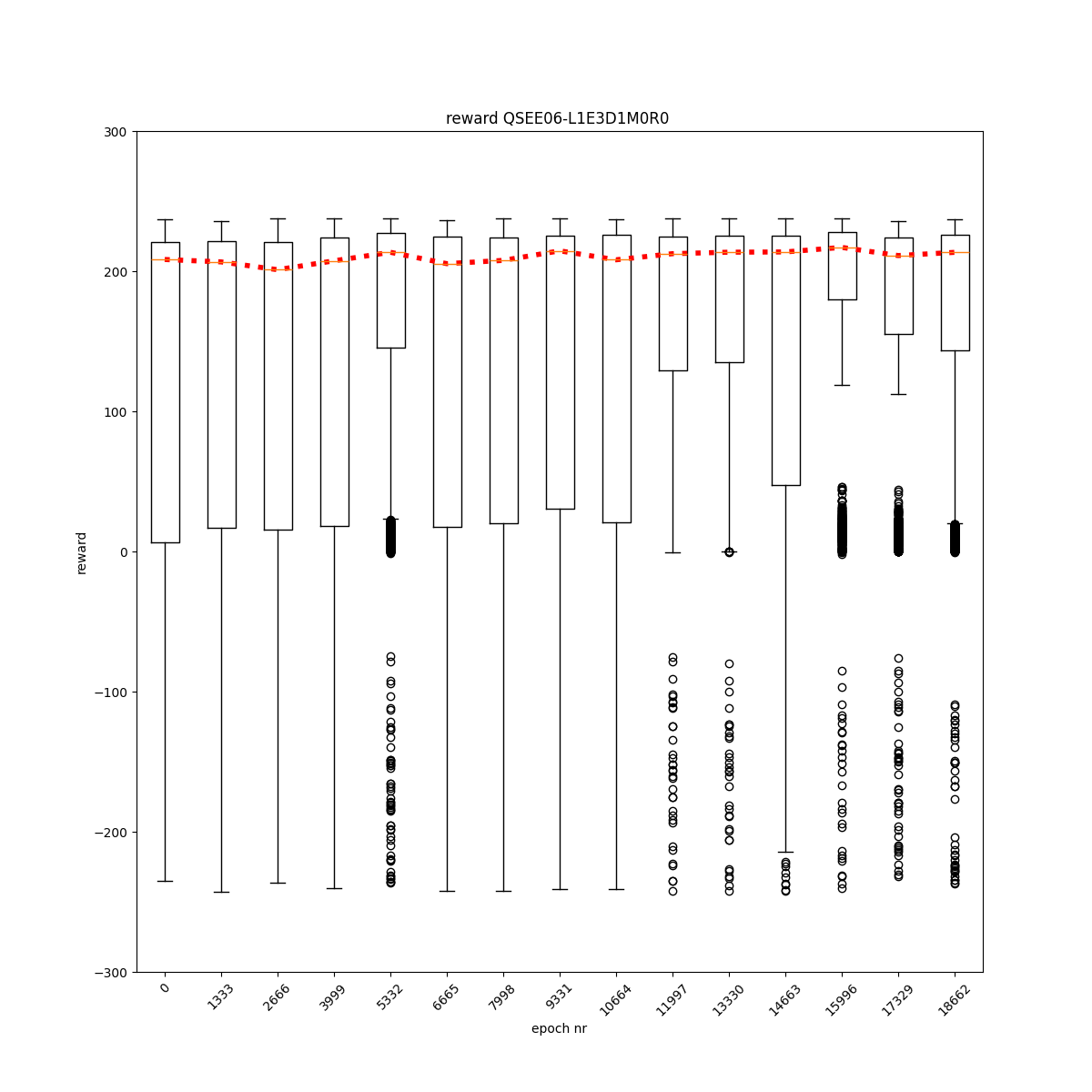

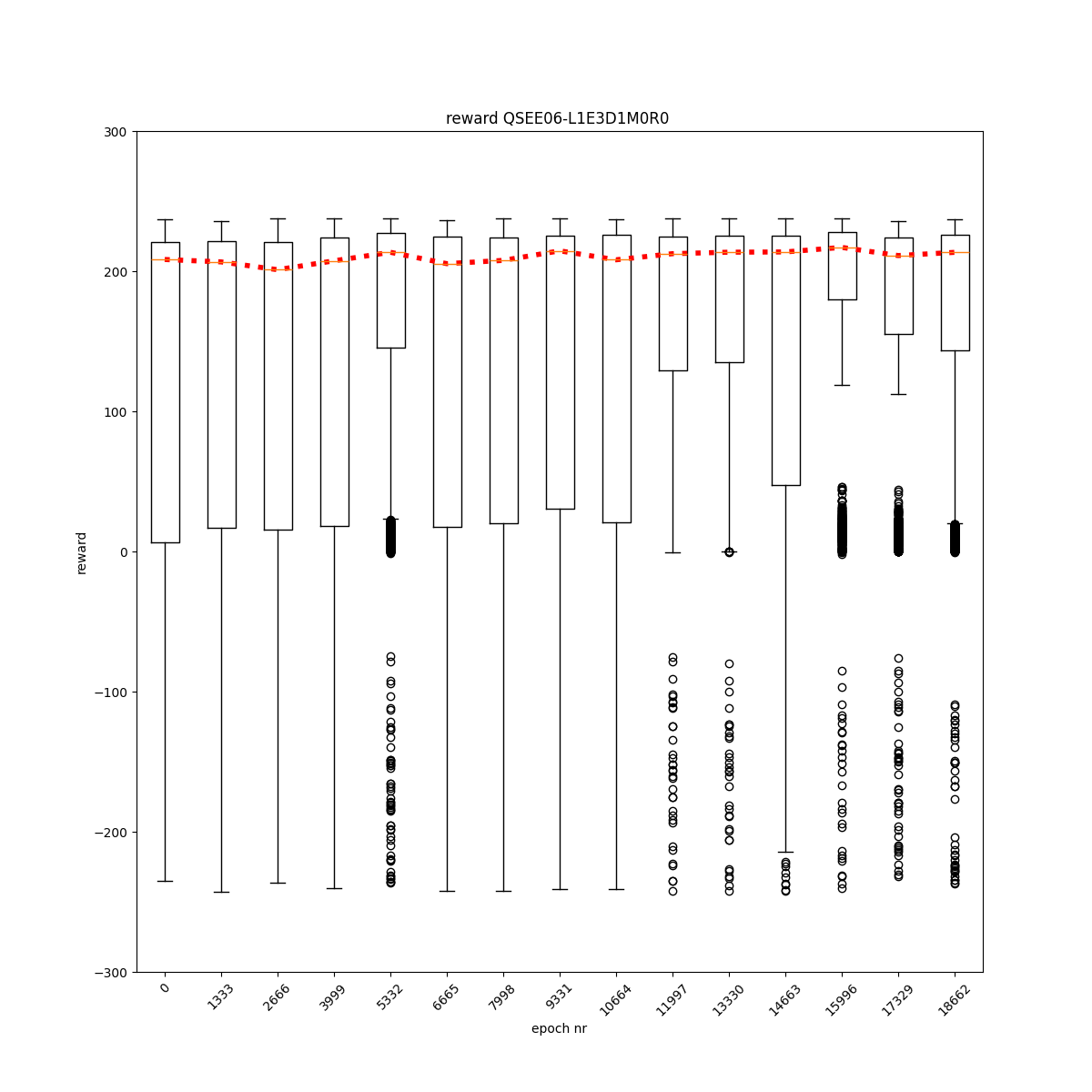

L0 E1 D1 M0 R0

q-values: videos 0-11 (two panels)

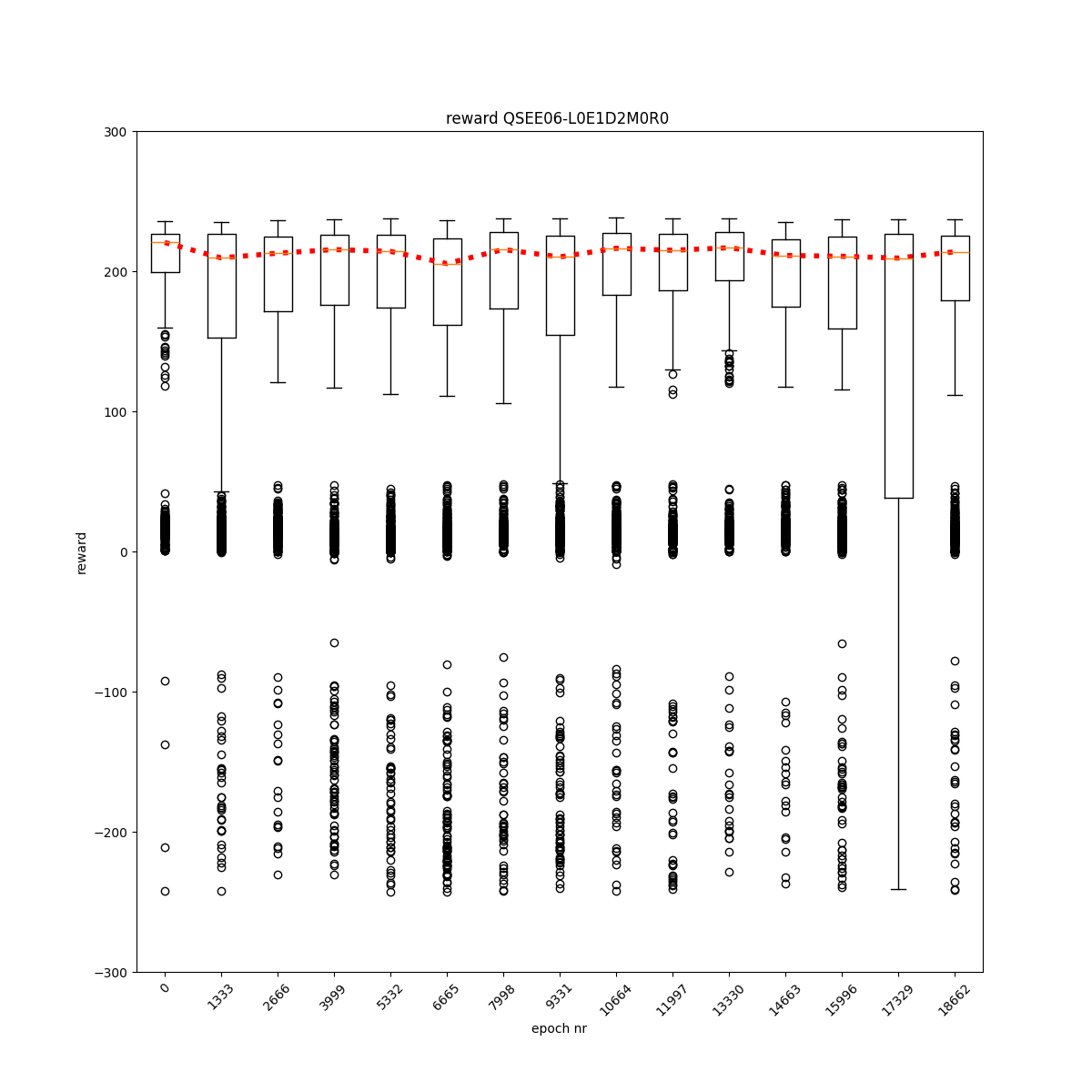

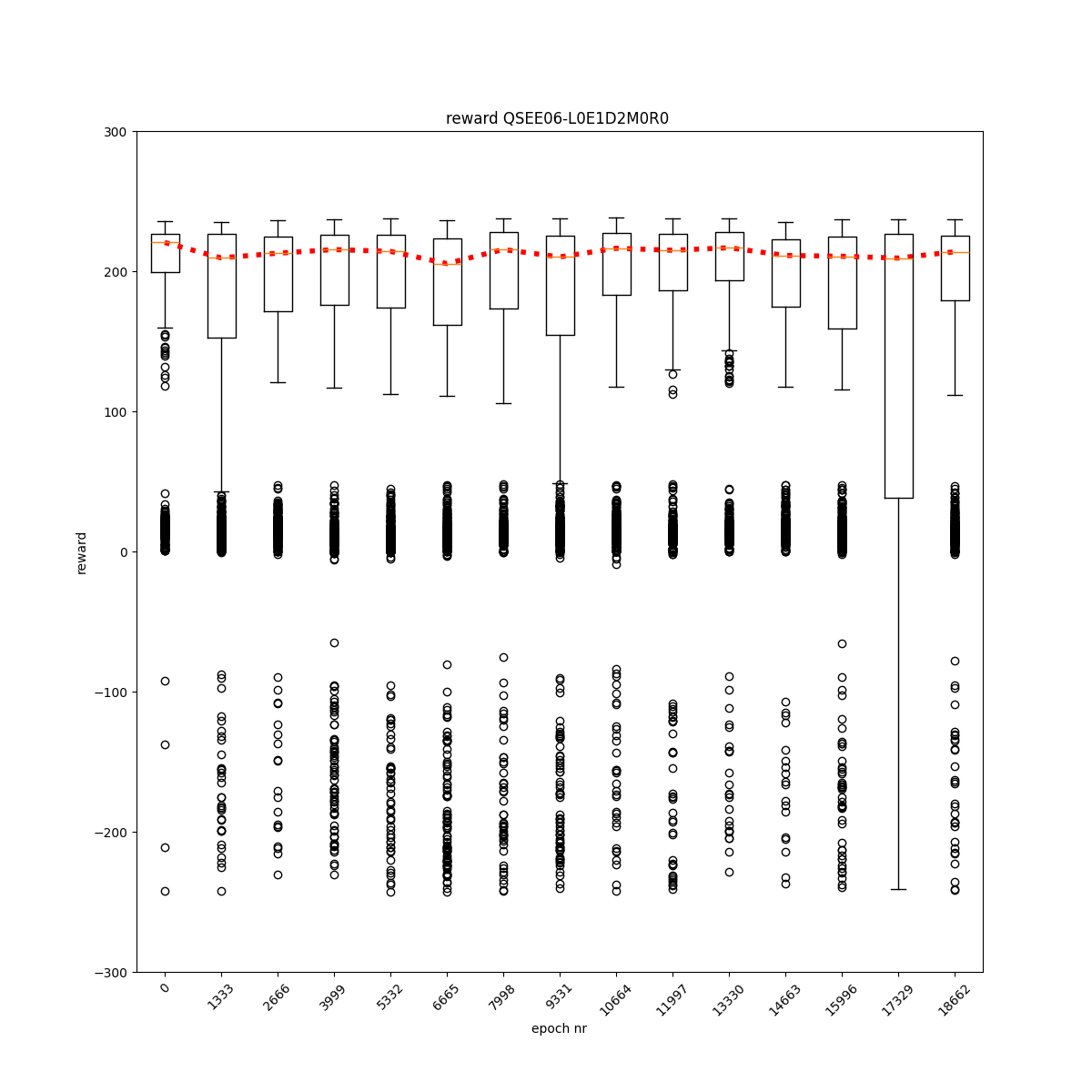

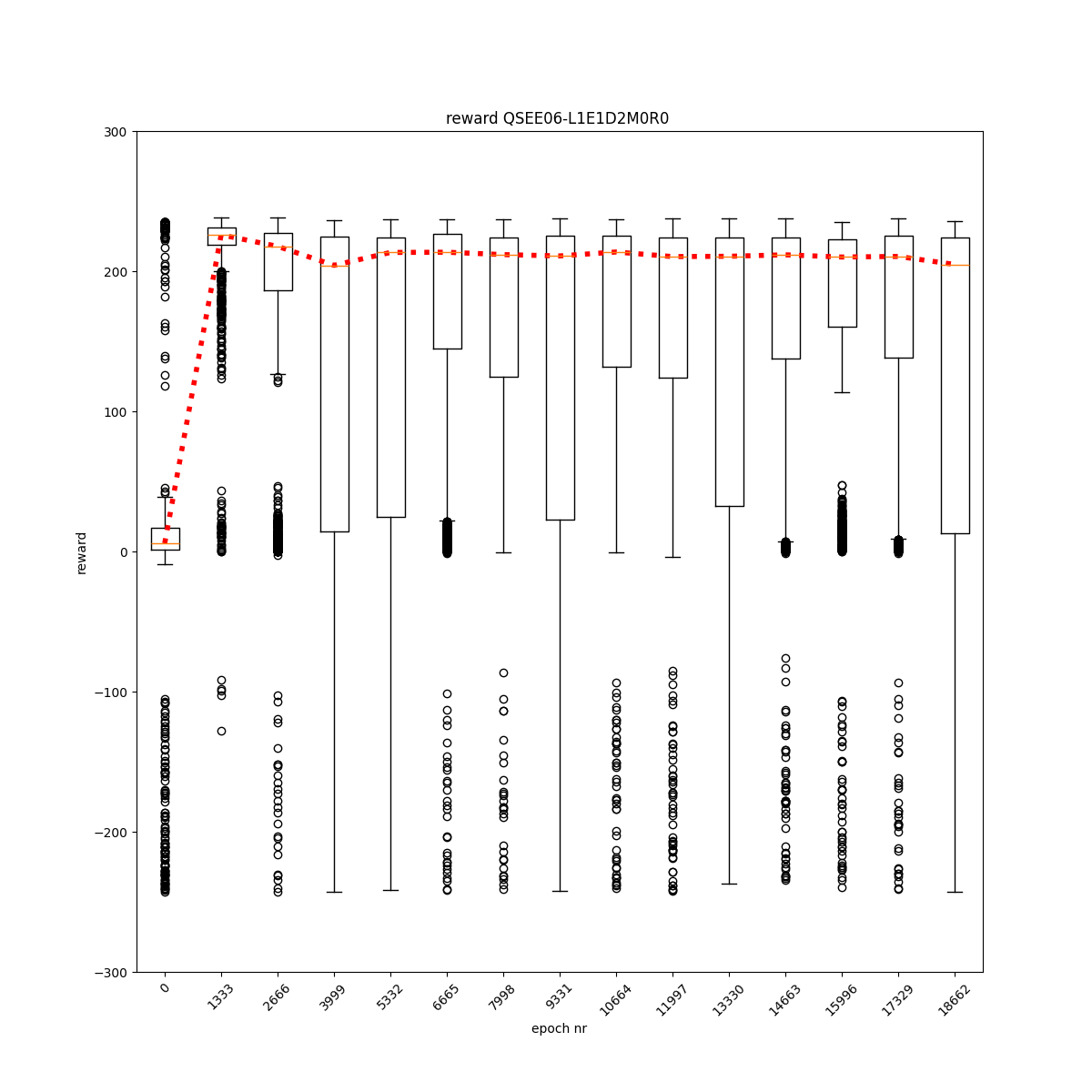

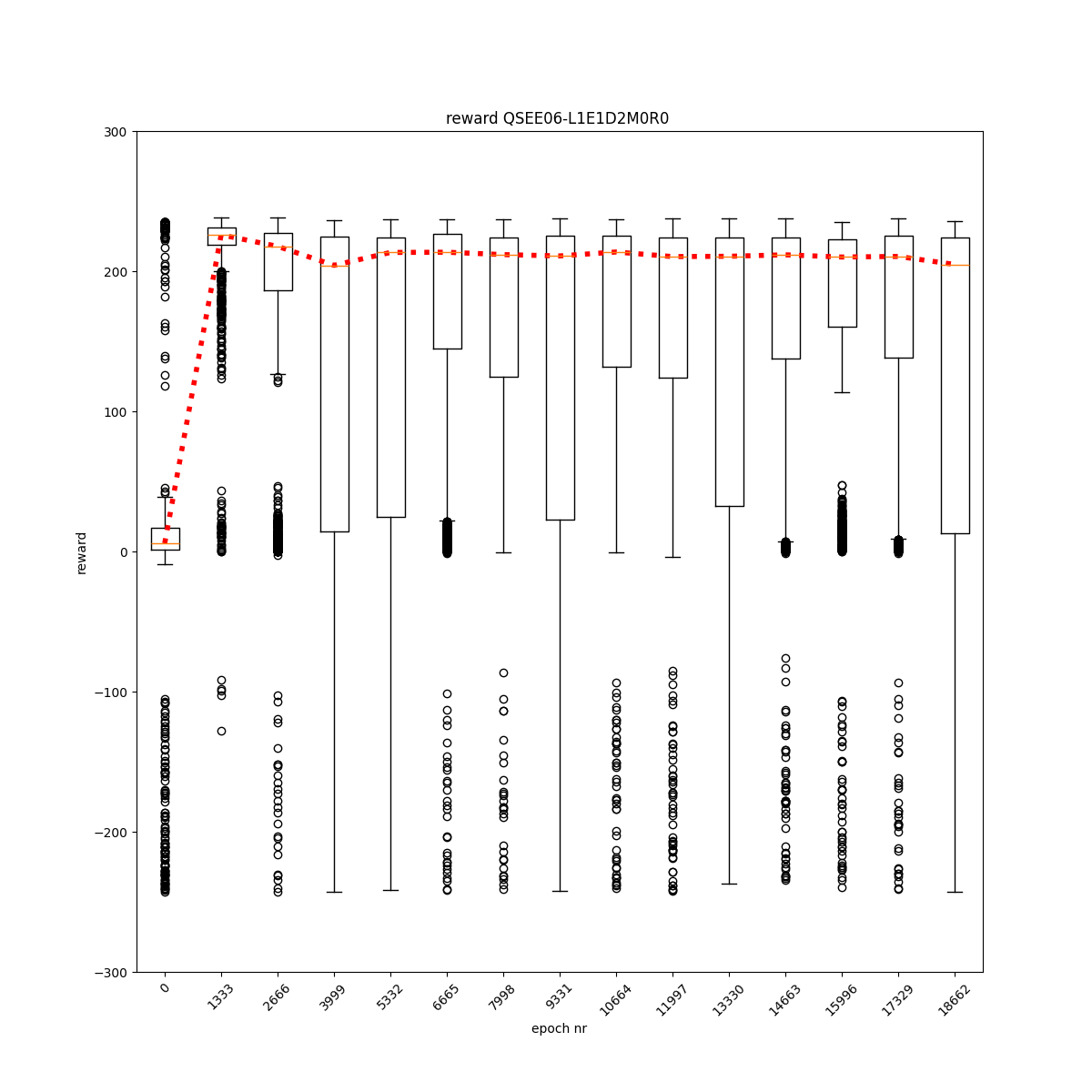

L0 E1 D2 M0 R0

q-values: videos 0-11 (two panels)

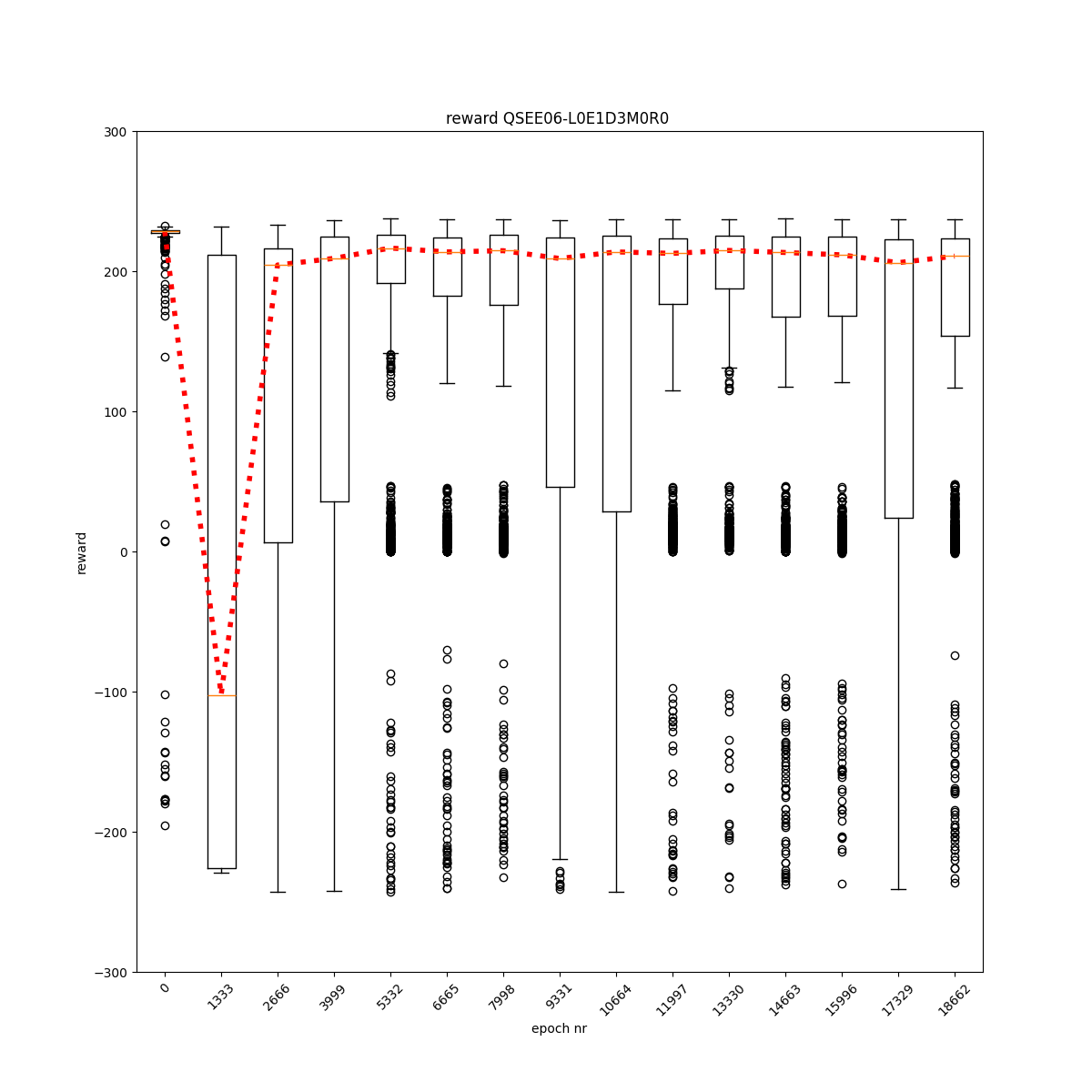

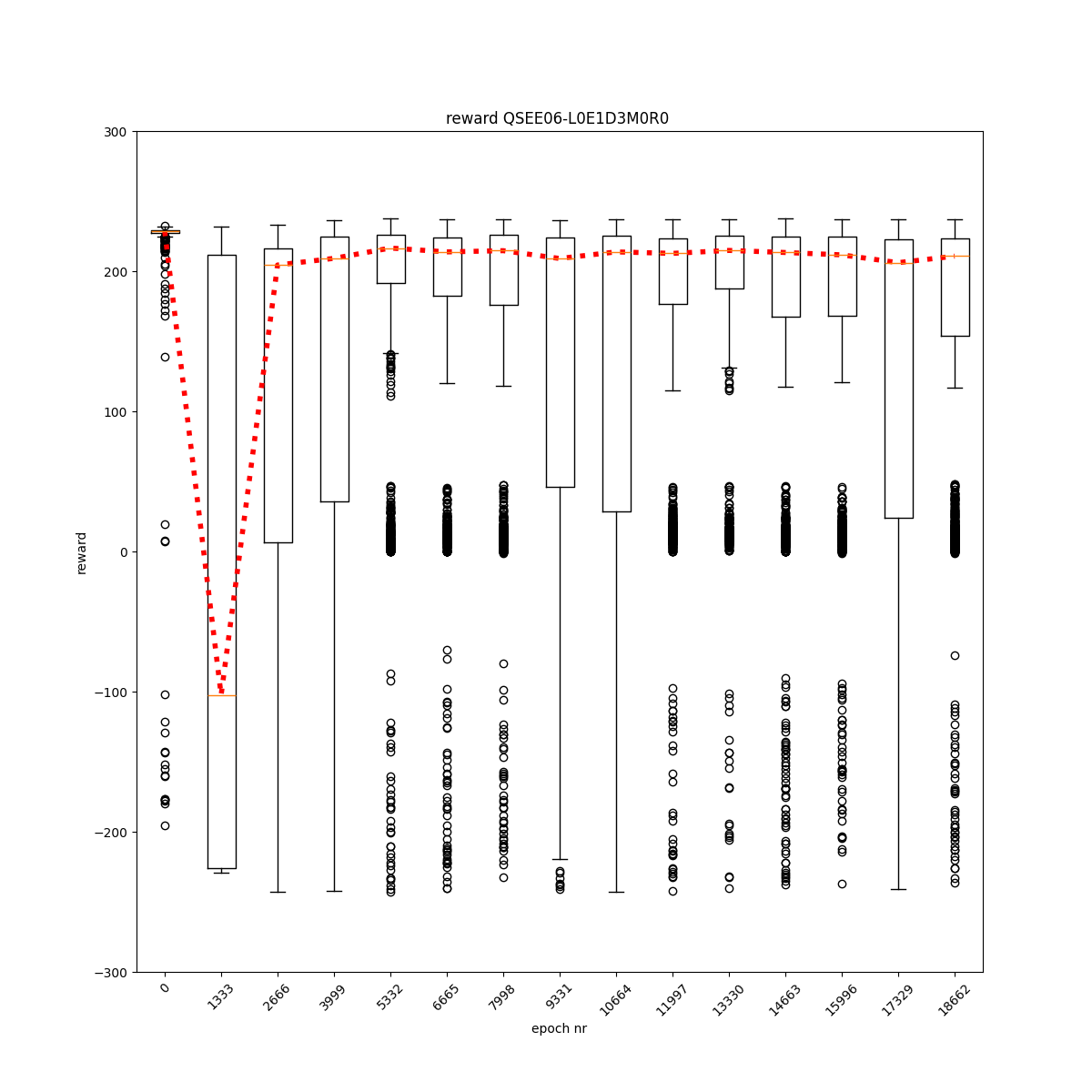

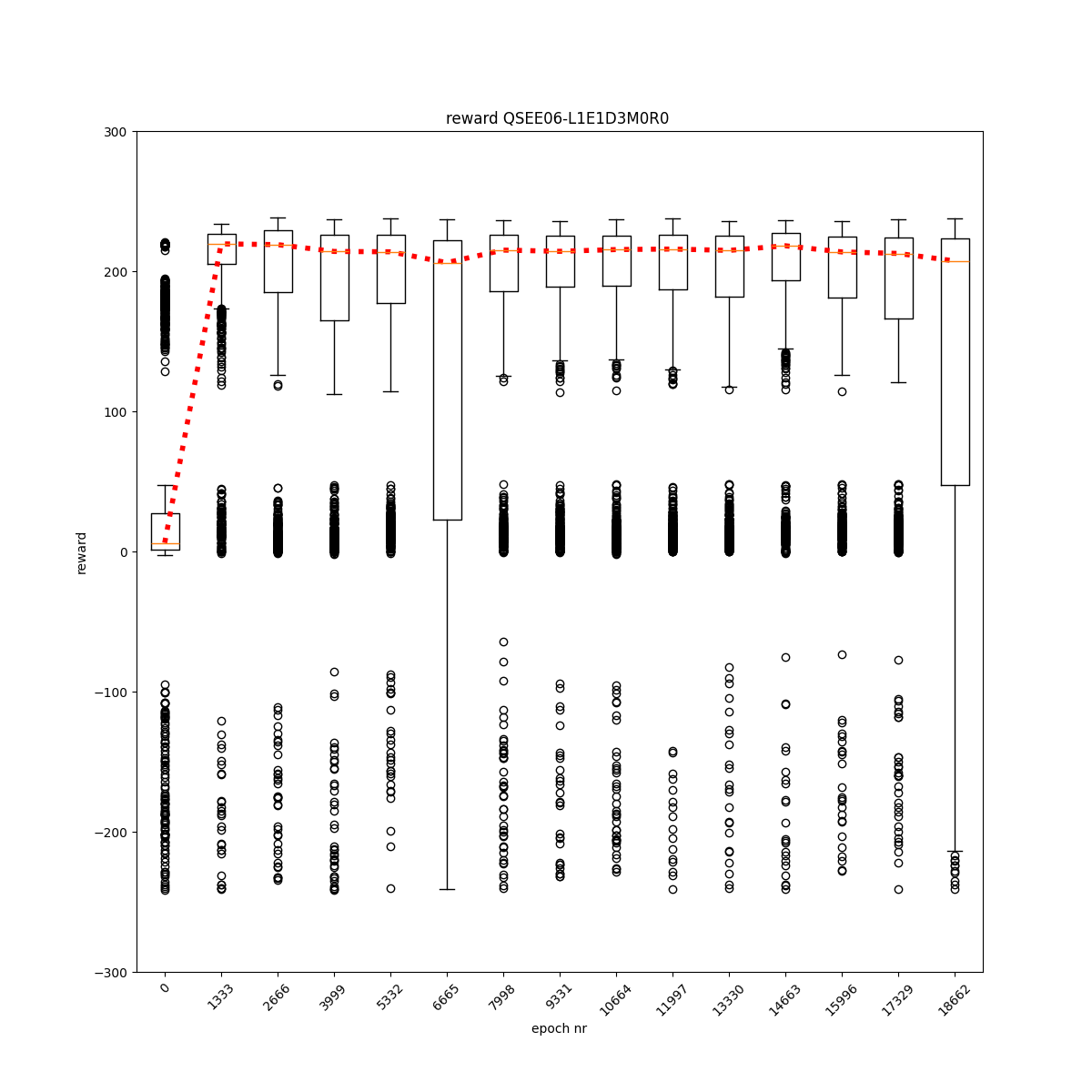

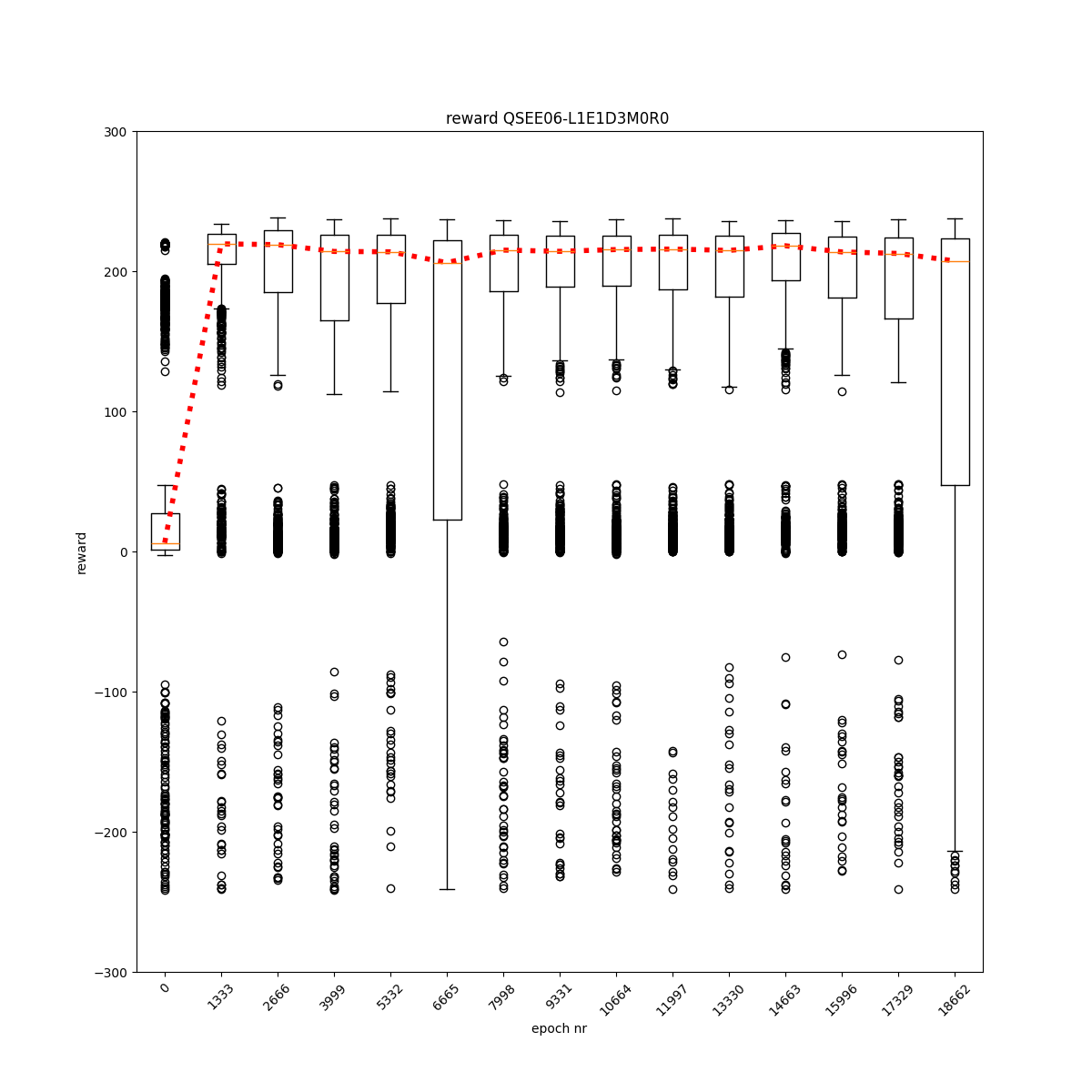

L0 E1 D3 M0 R0

q-values: videos 0-11 (two panels)

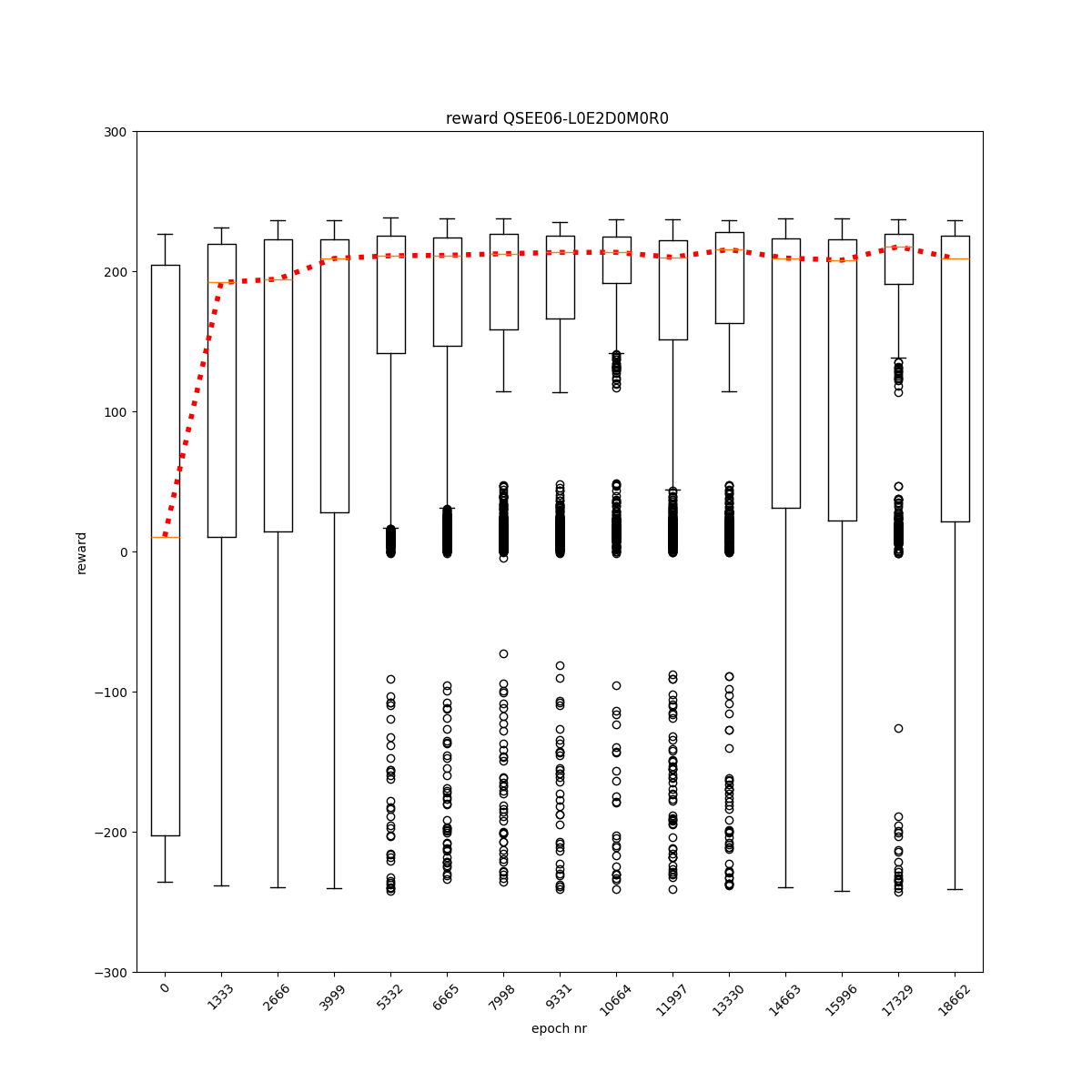

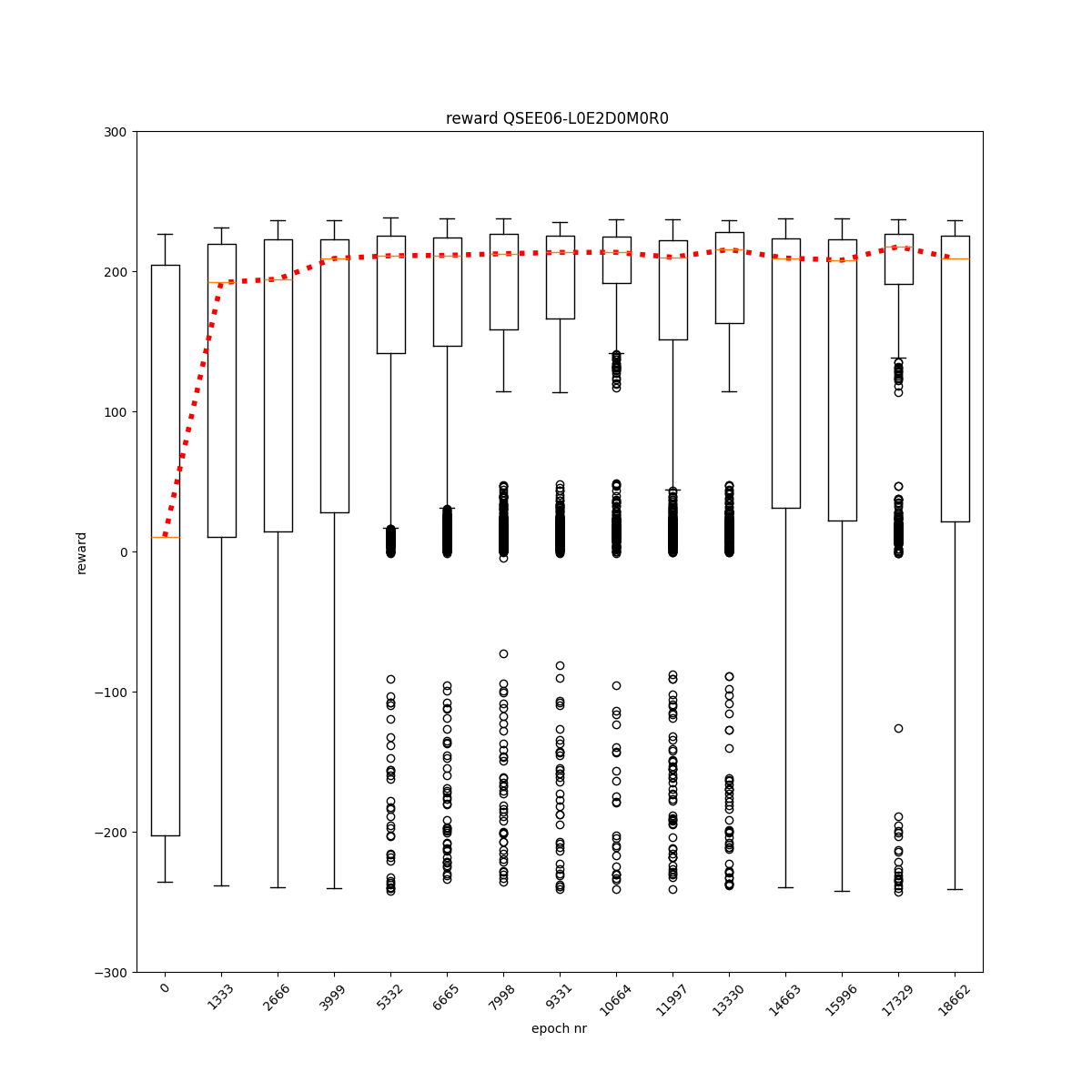

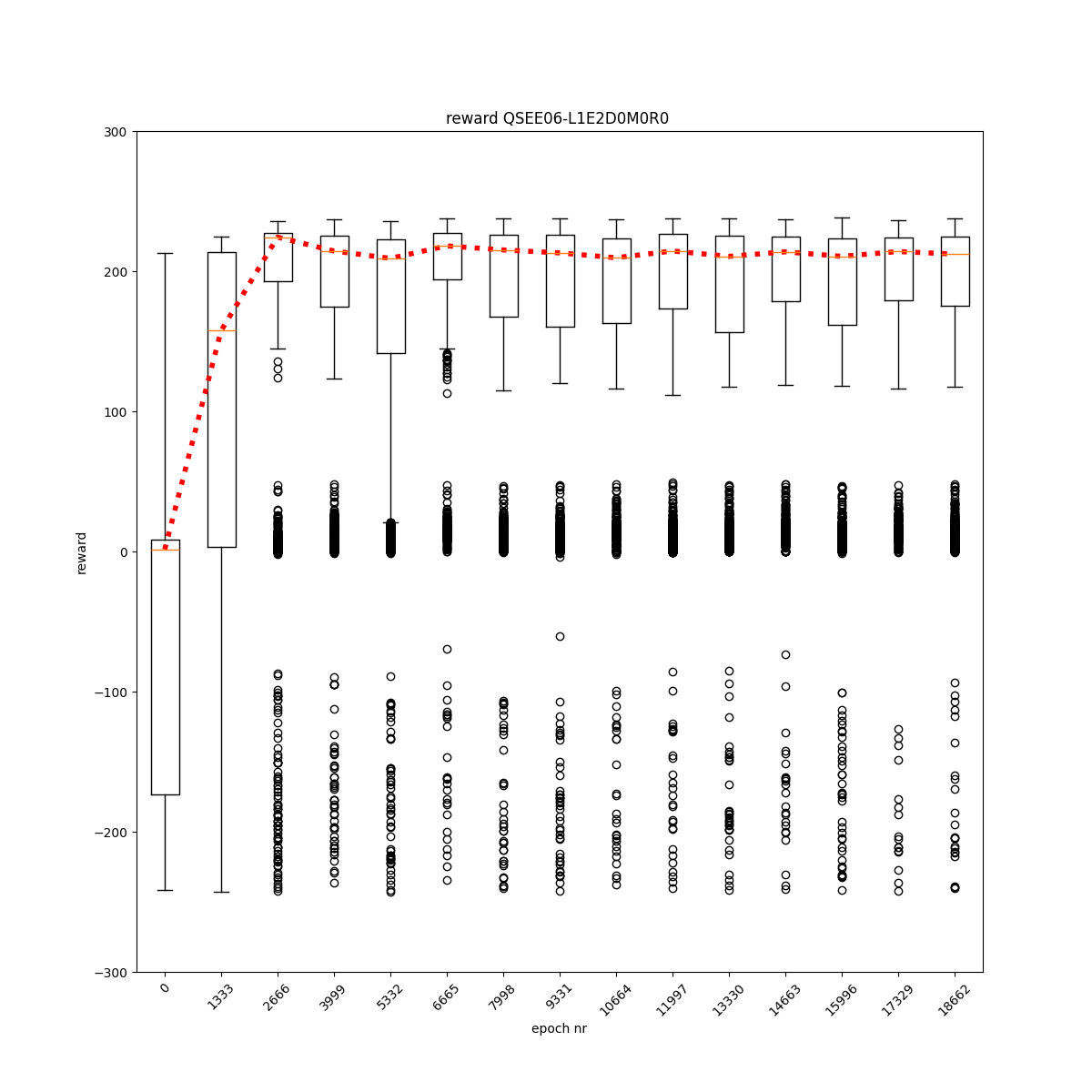

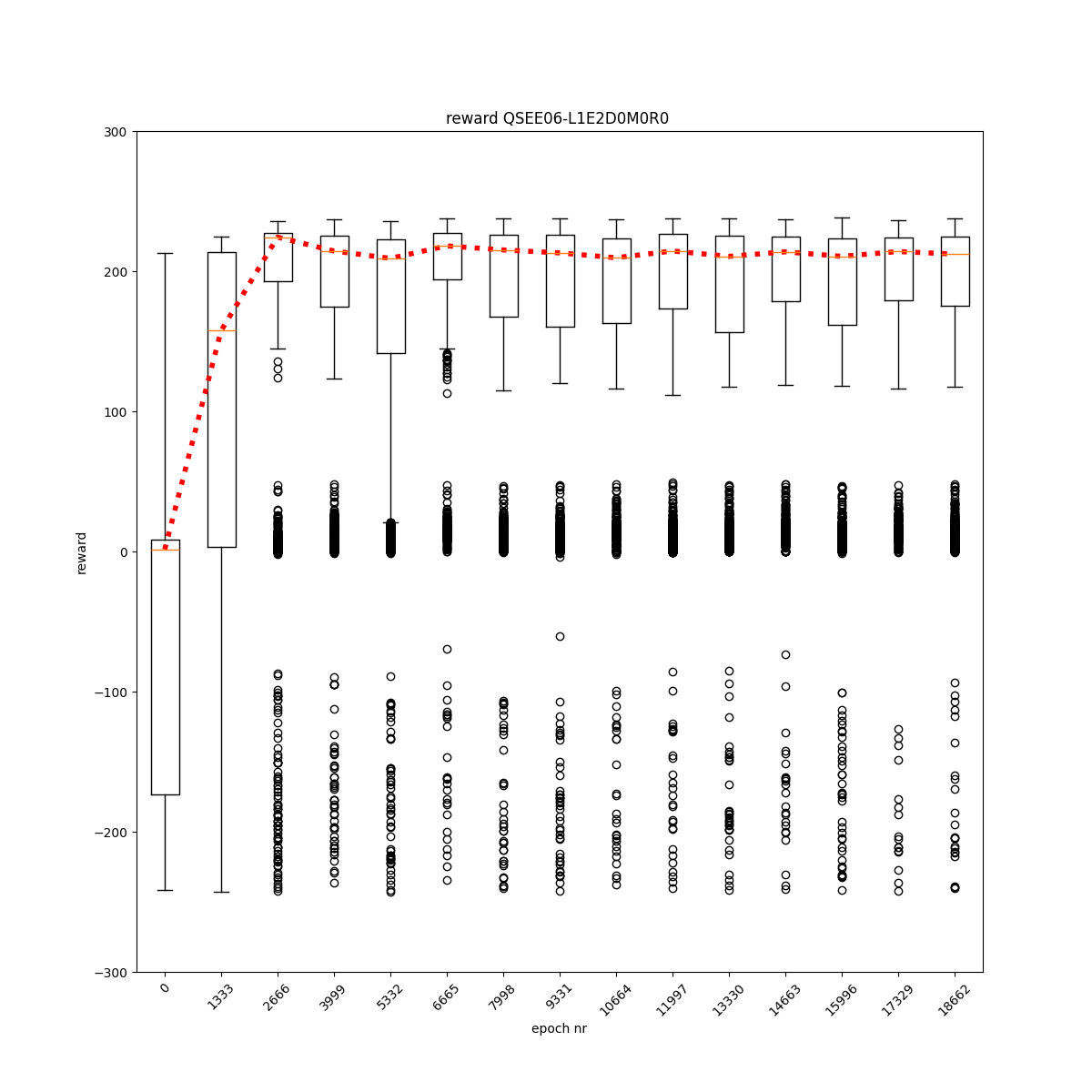

L0 E2 D0 M0 R0

q-values: videos 0-11 (two panels)

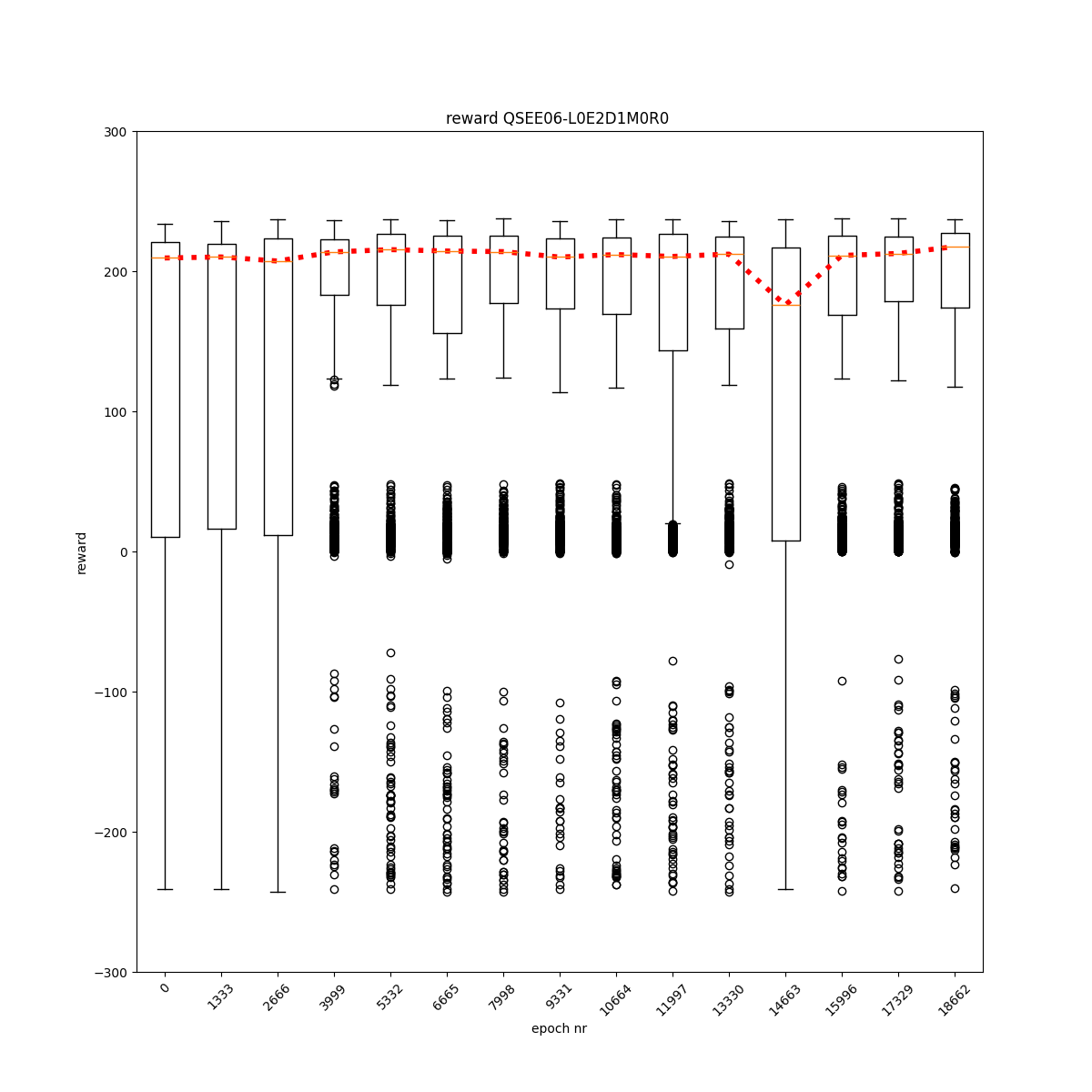

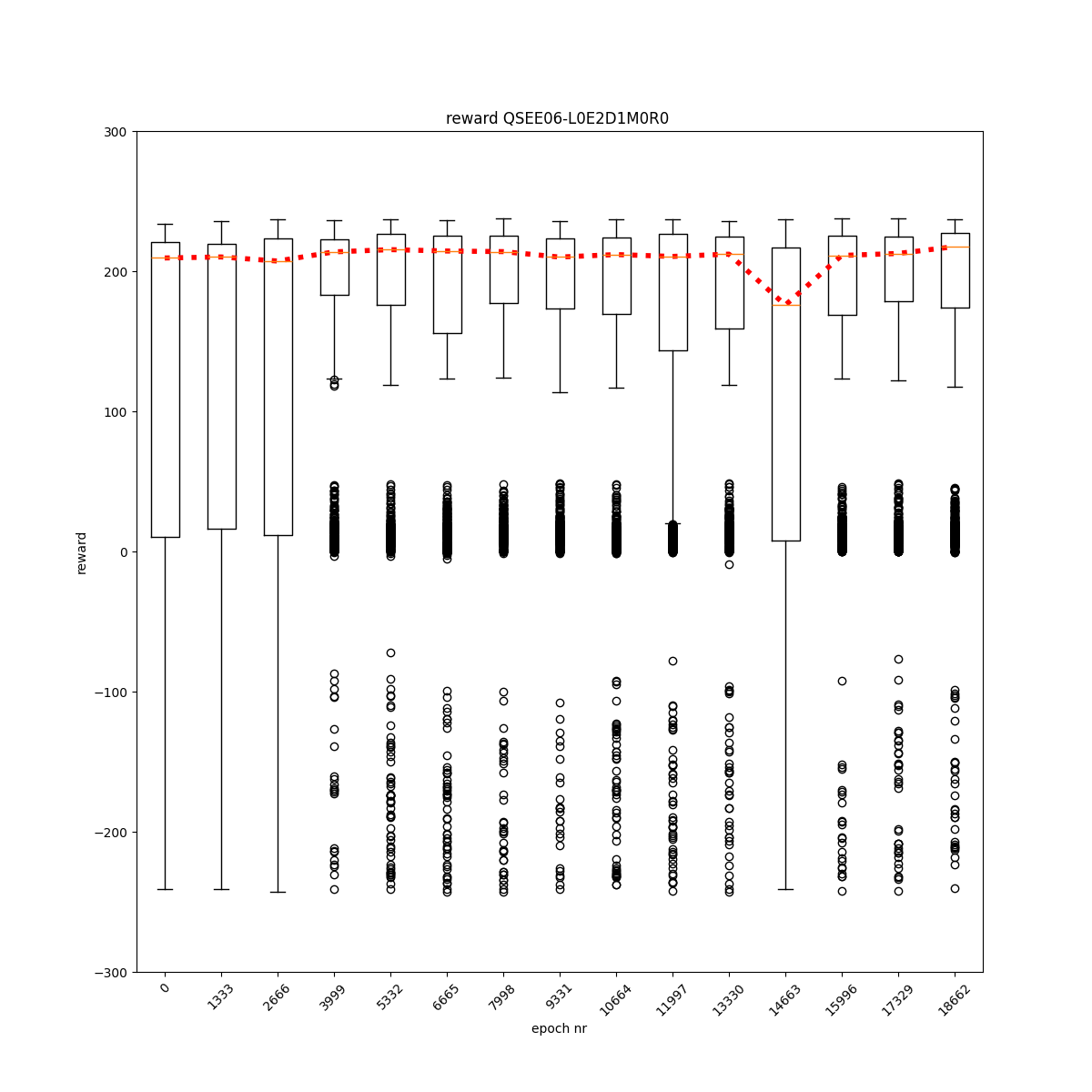

L0 E2 D1 M0 R0

q-values: videos 0-11 (two panels)

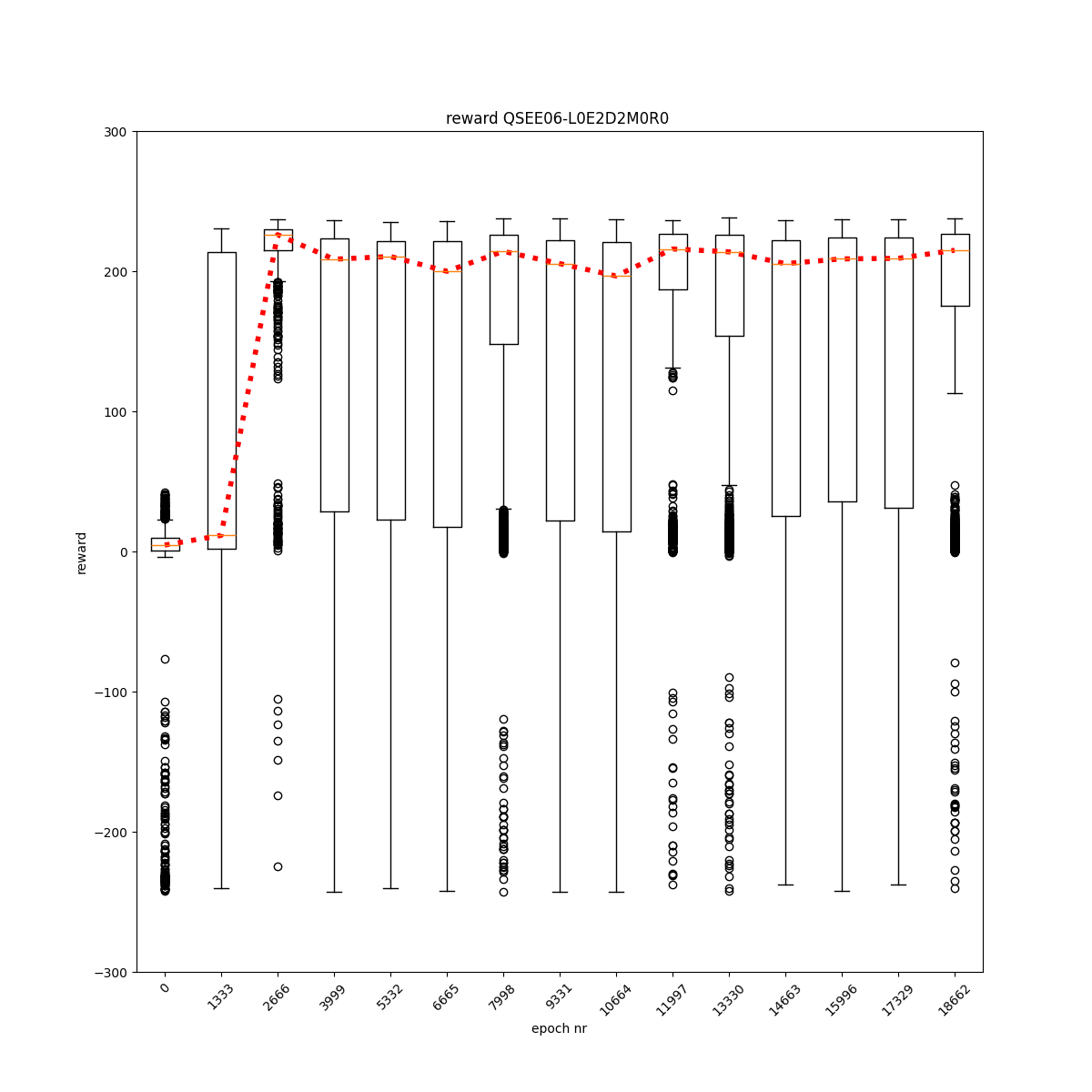

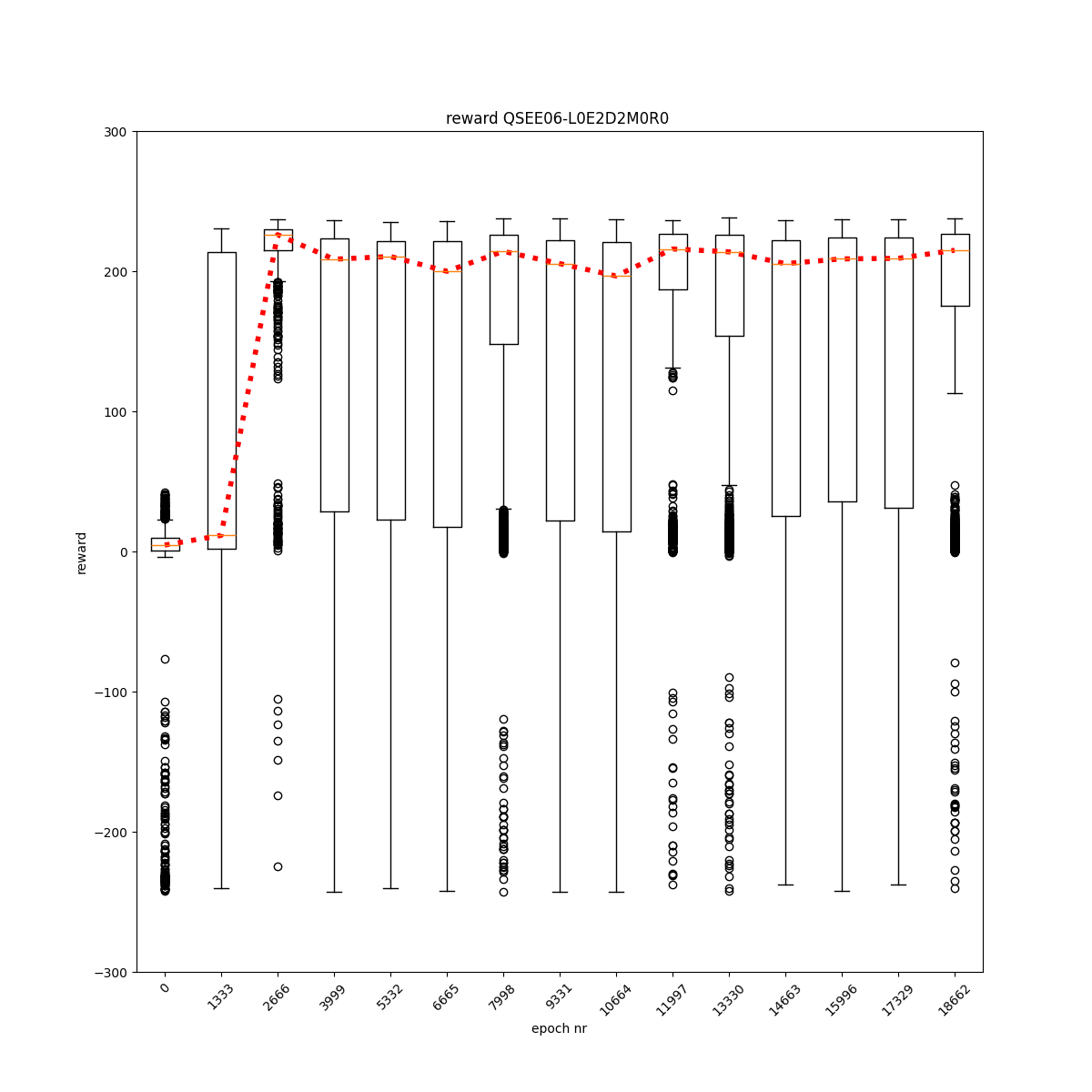

L0 E2 D2 M0 R0

q-values: videos 0-11 (two panels)

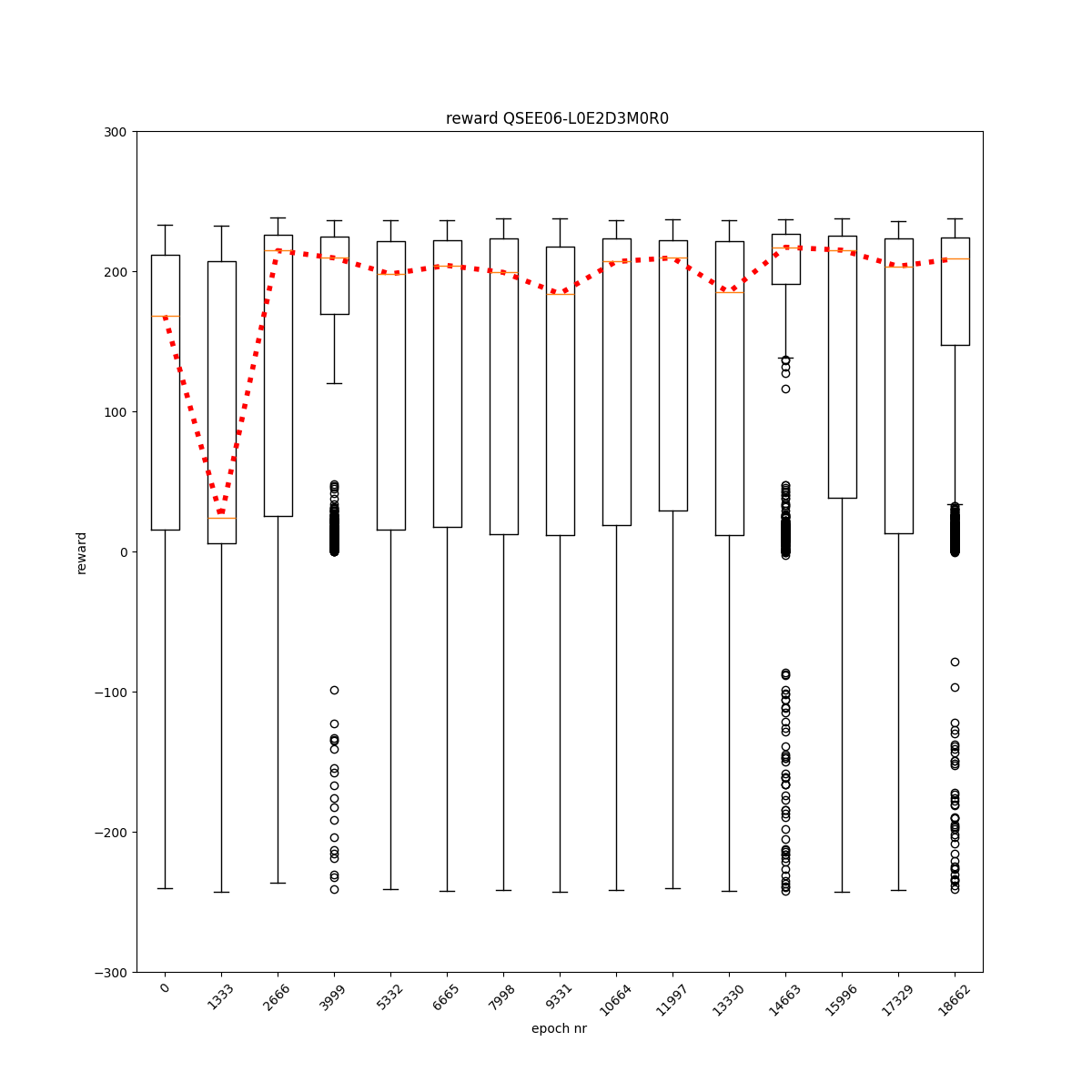

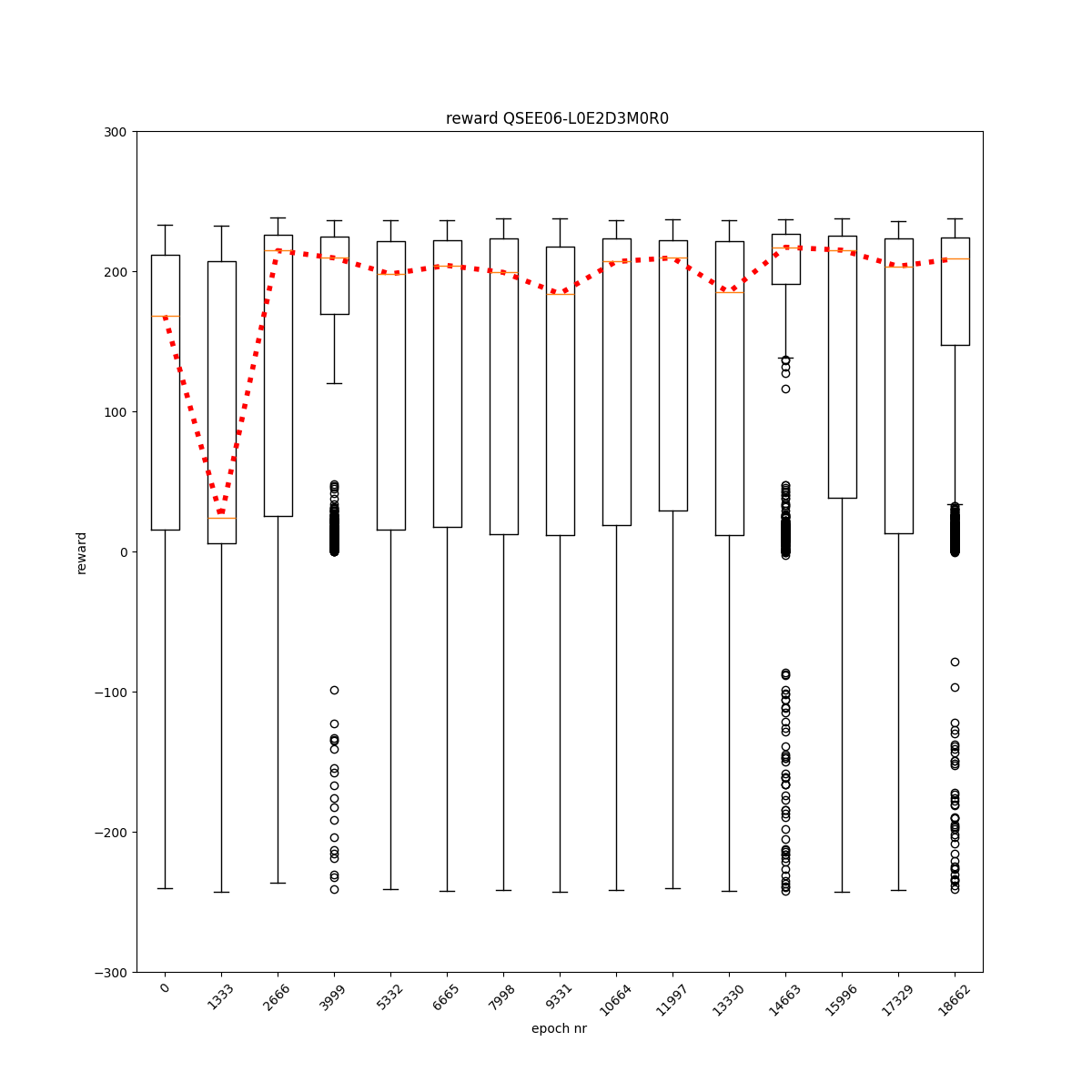

L0 E2 D3 M0 R0

q-values: videos 0-11 (two panels)

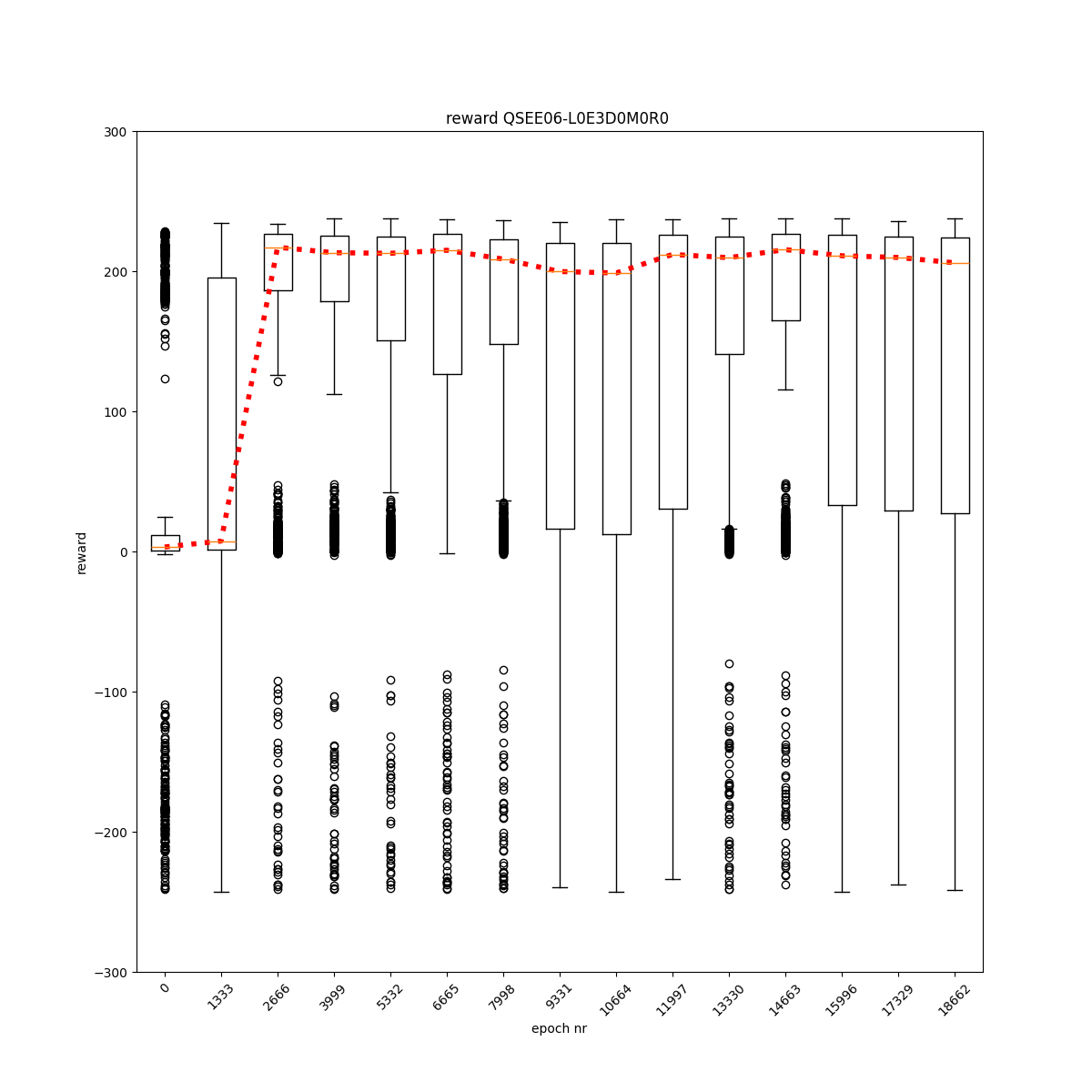

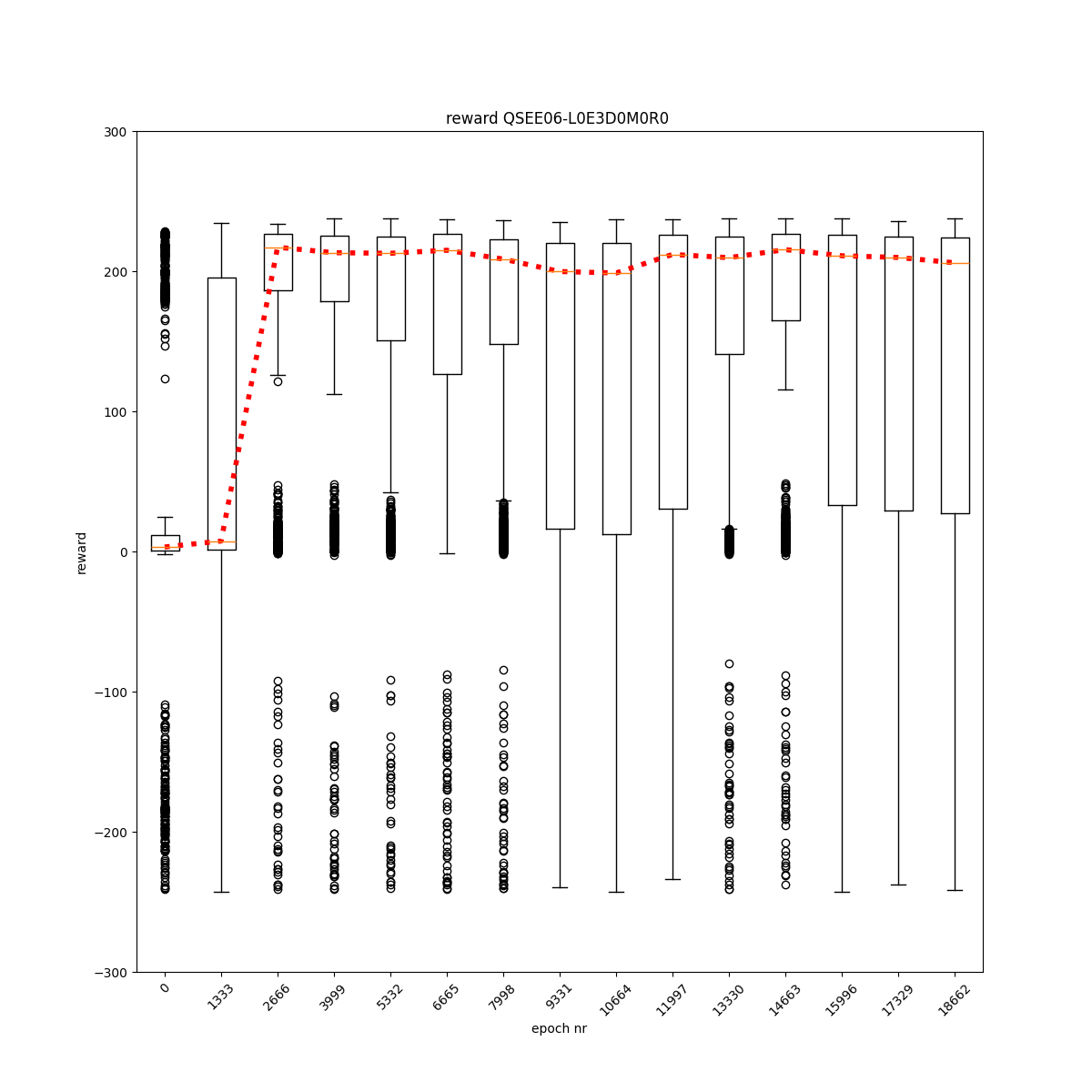

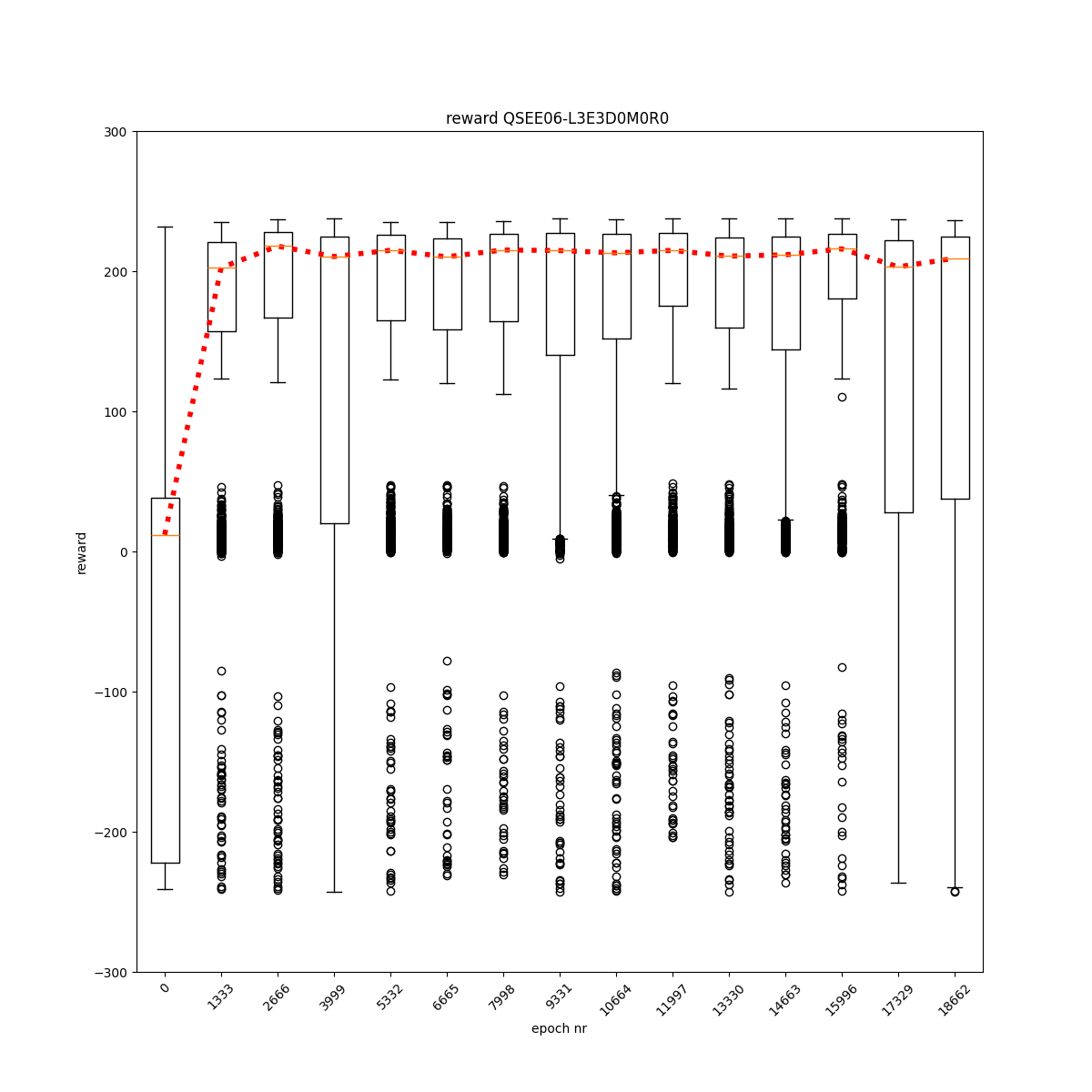

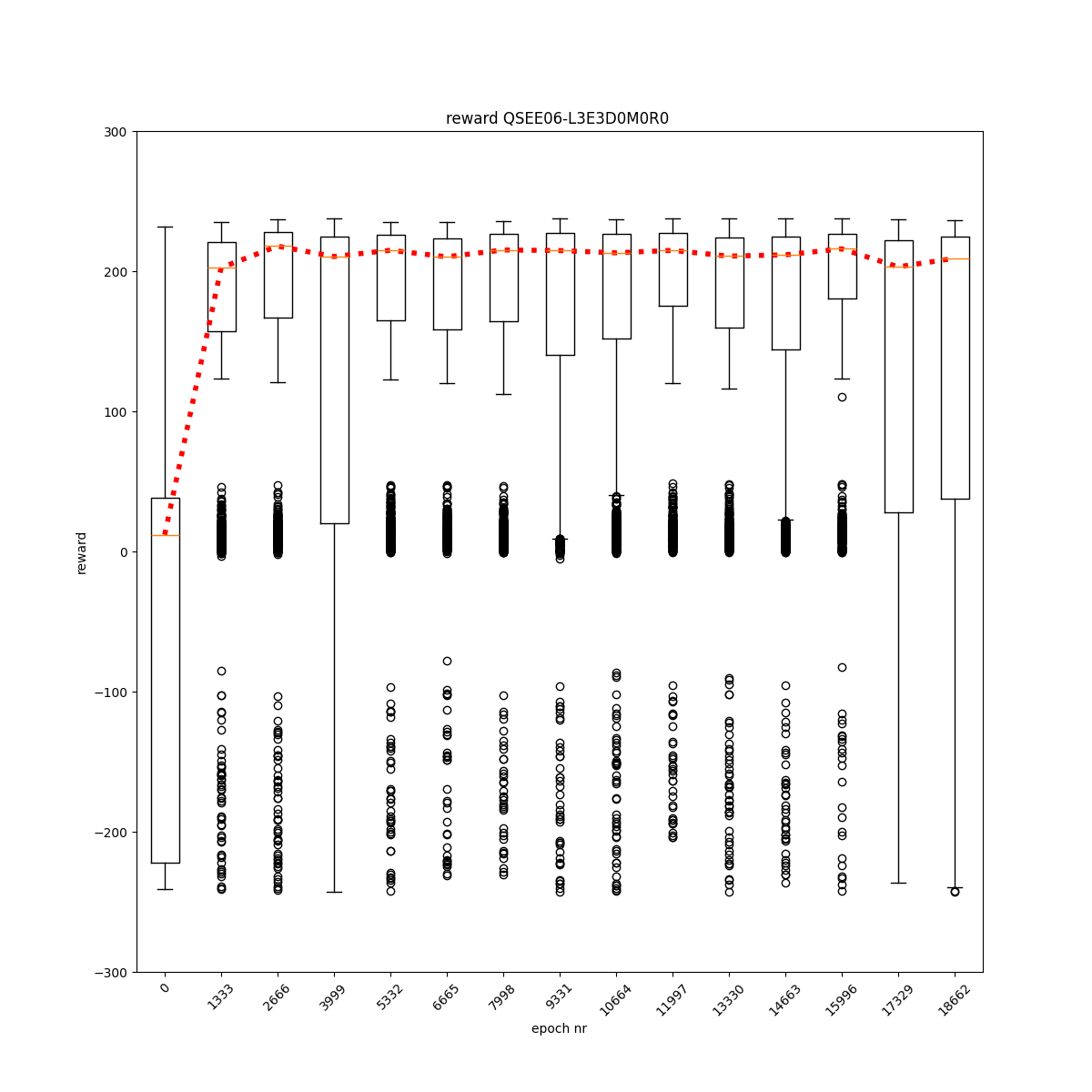

L0 E3 D0 M0 R0

q-values: videos 0-11 (two panels)

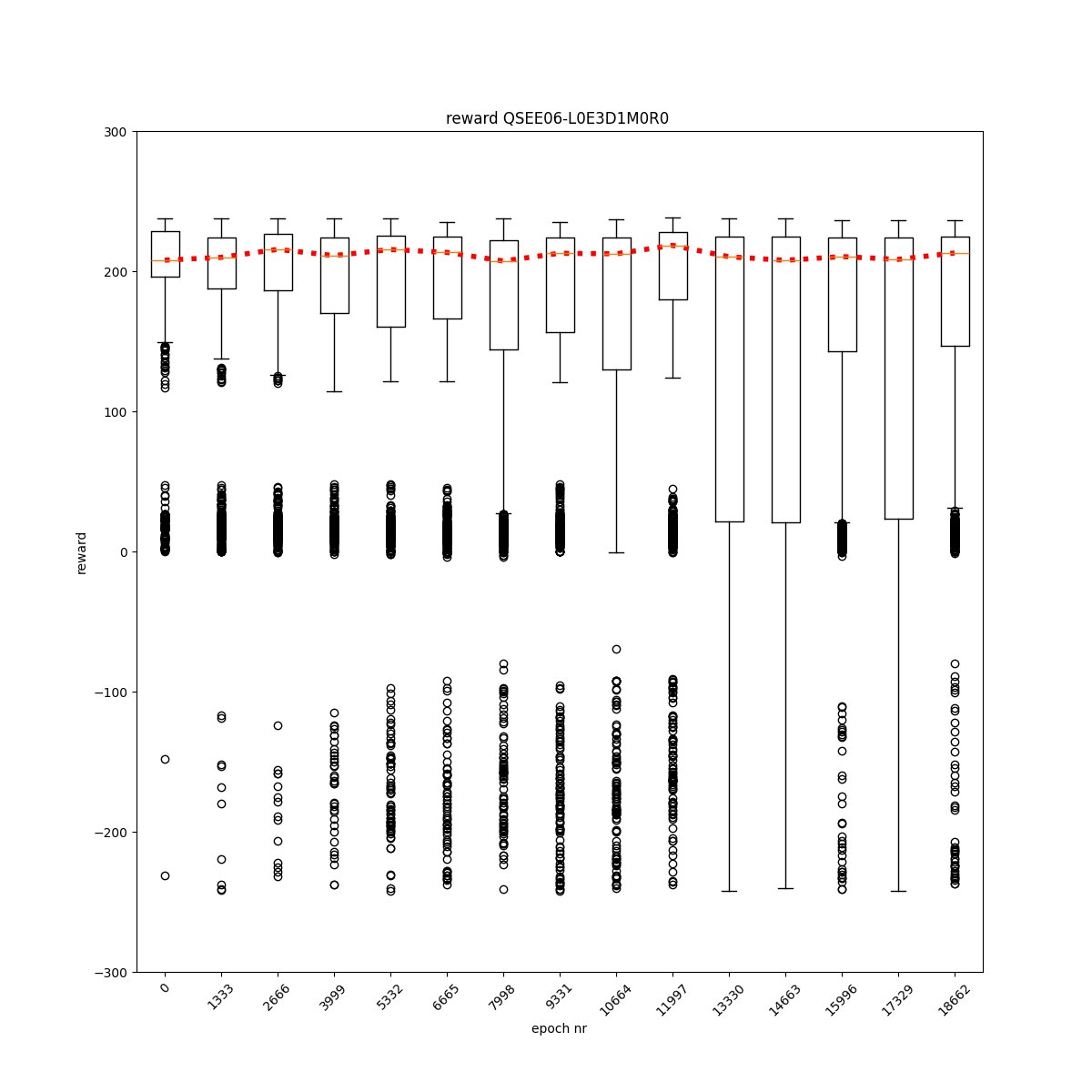

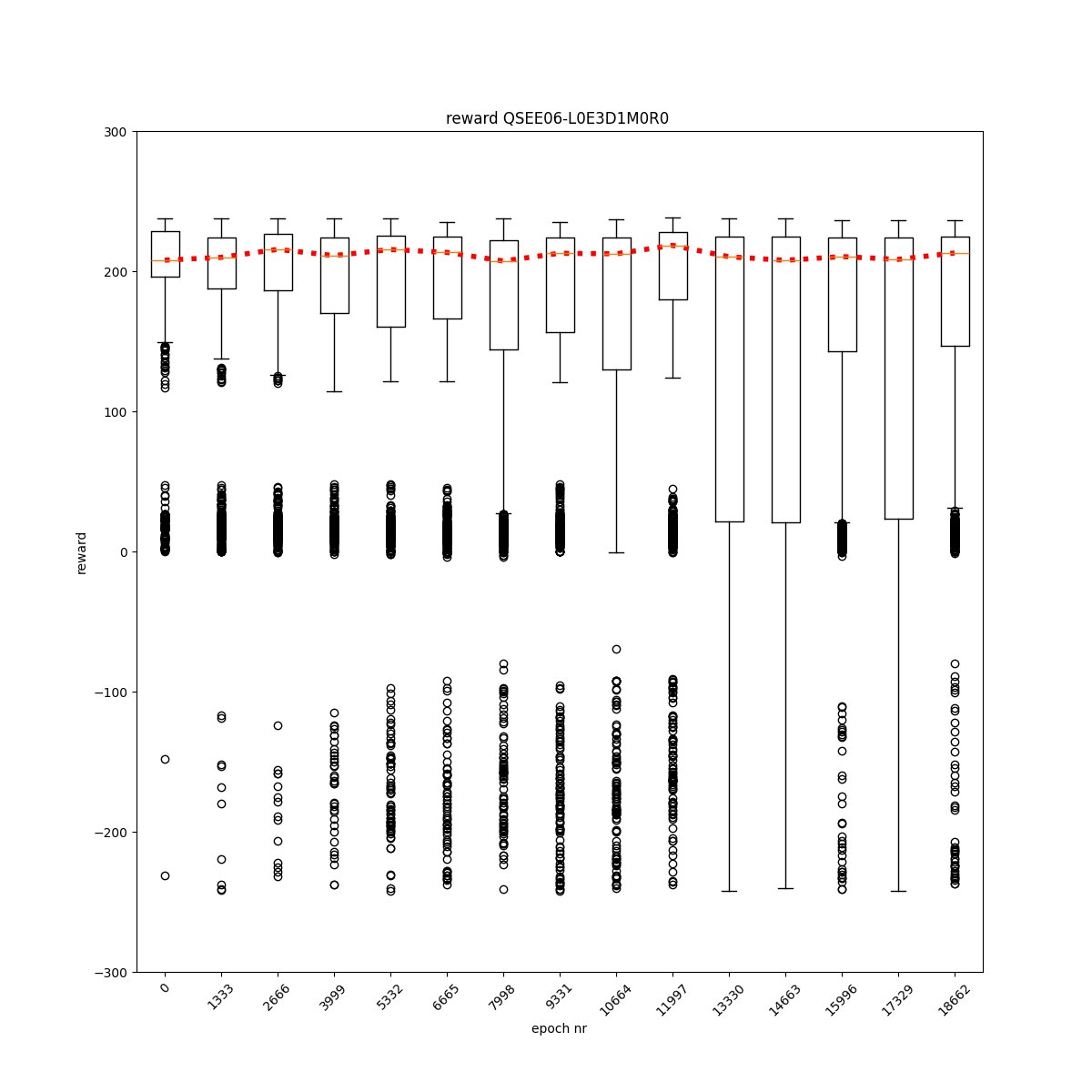

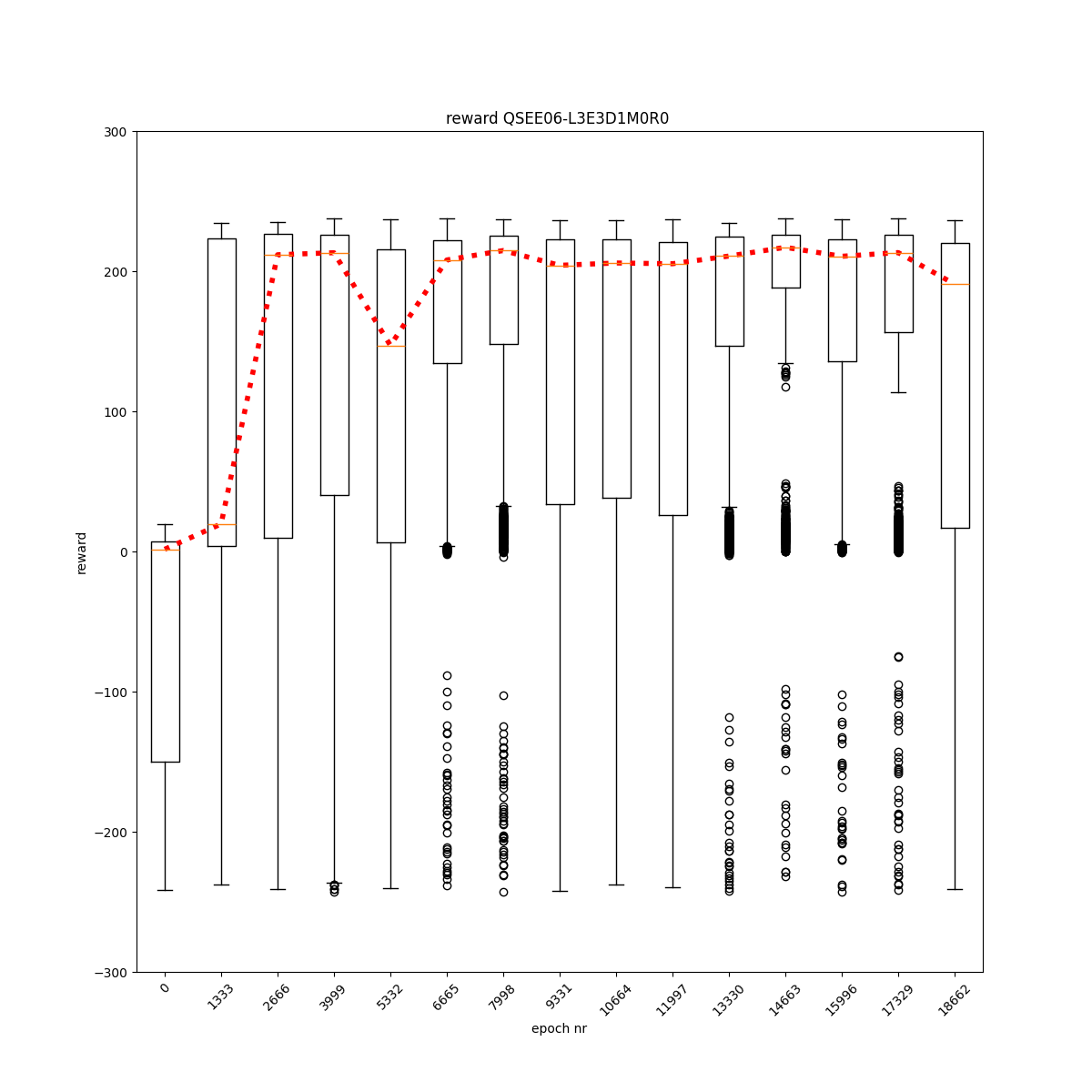

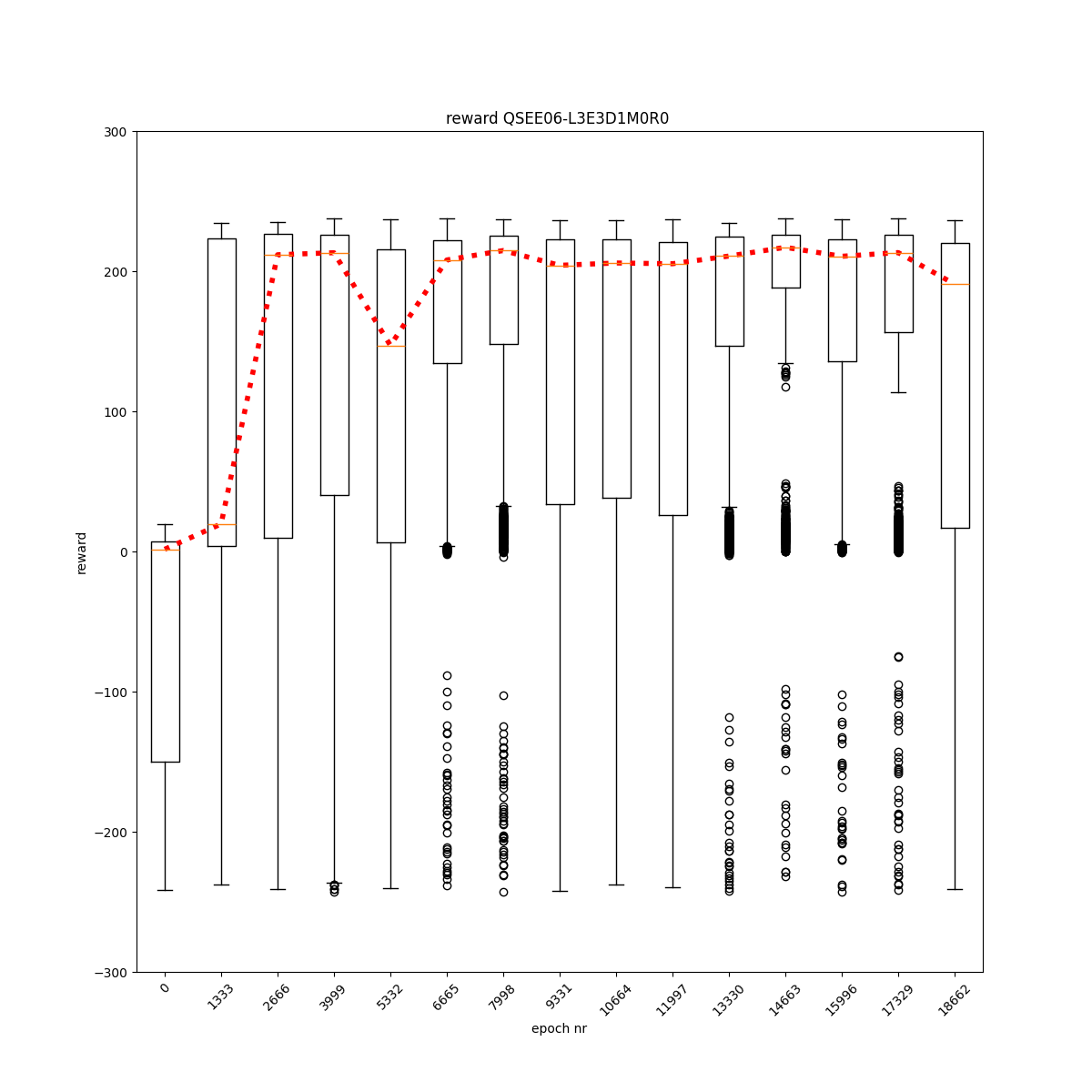

L0 E3 D1 M0 R0

q-values: videos 0-11 (two panels)

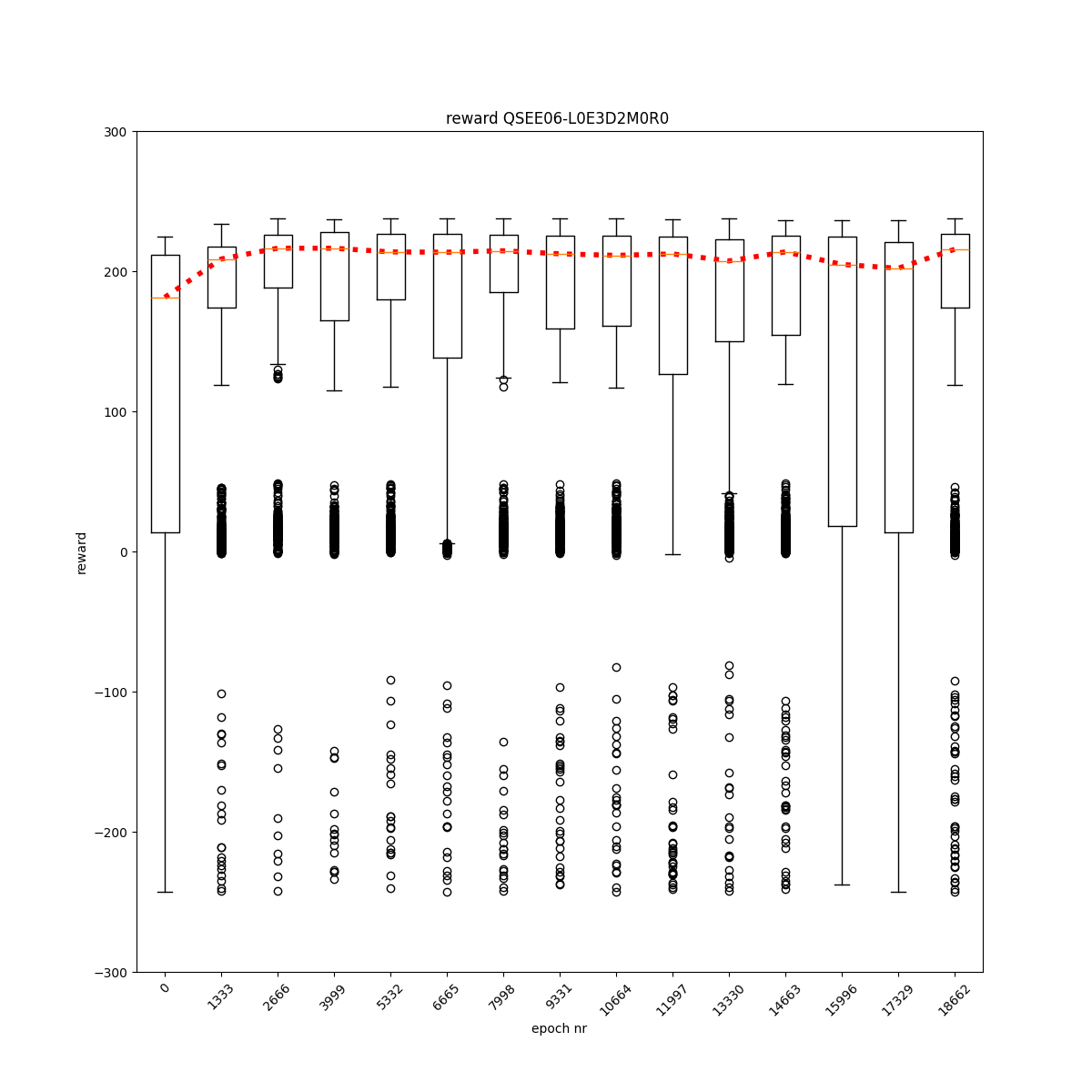

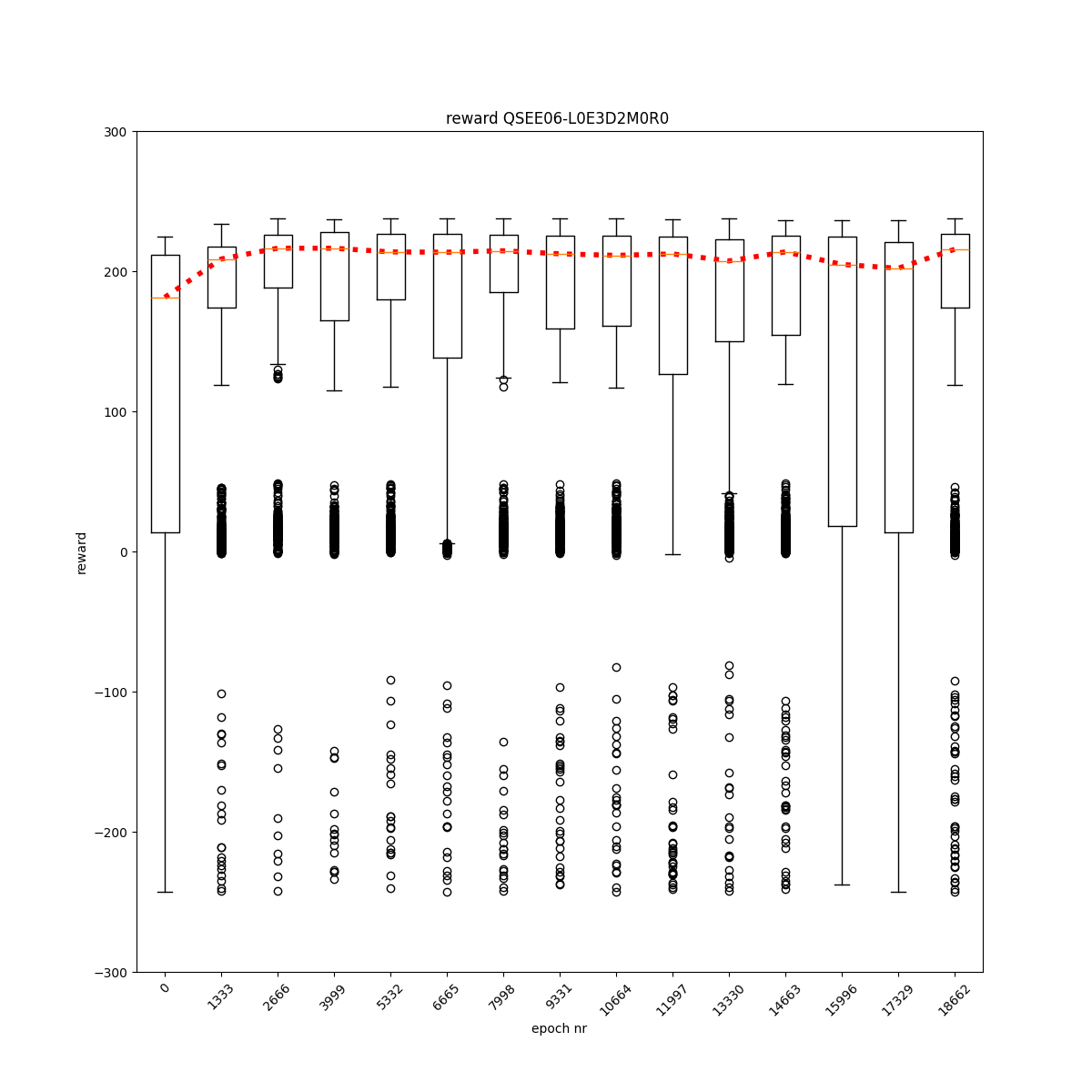

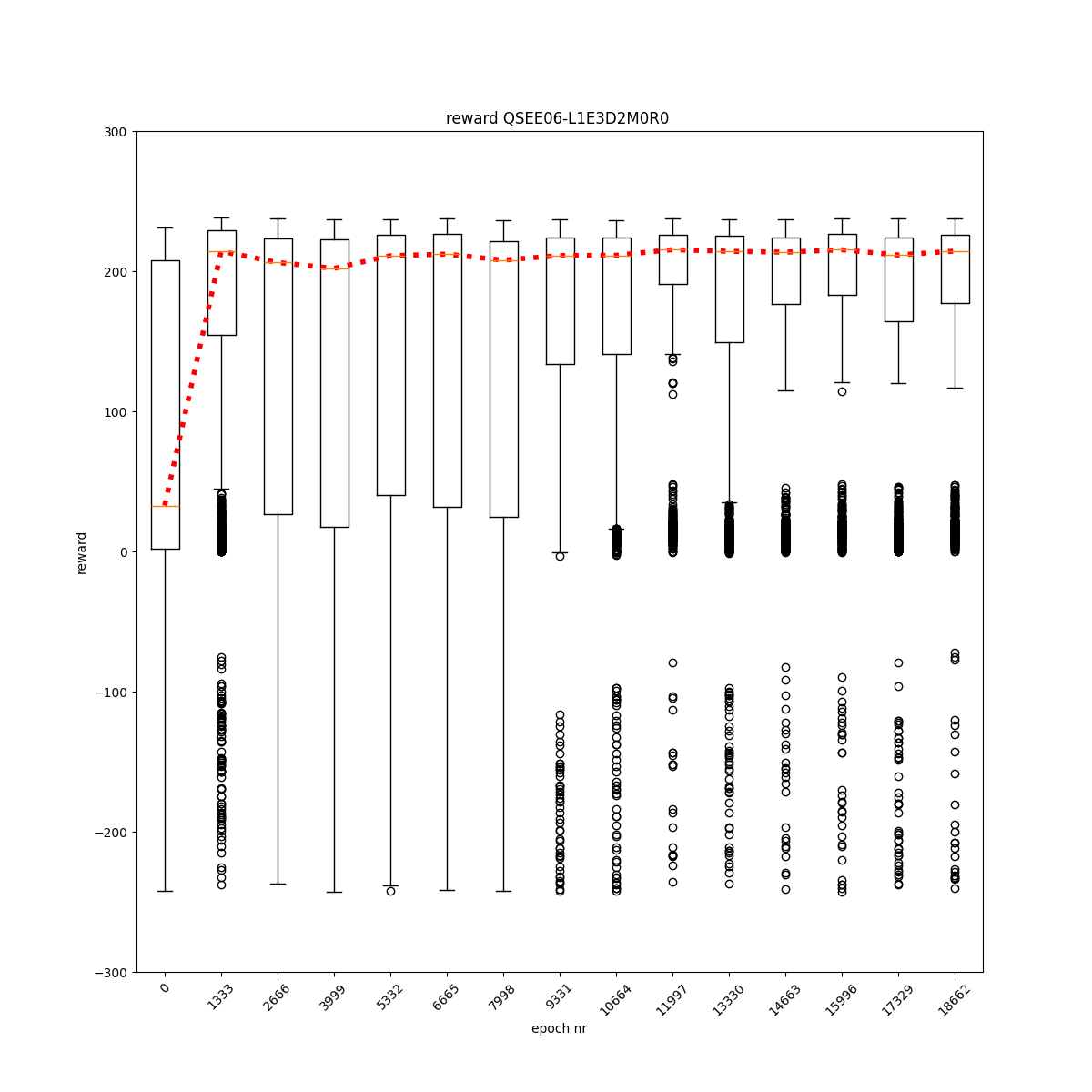

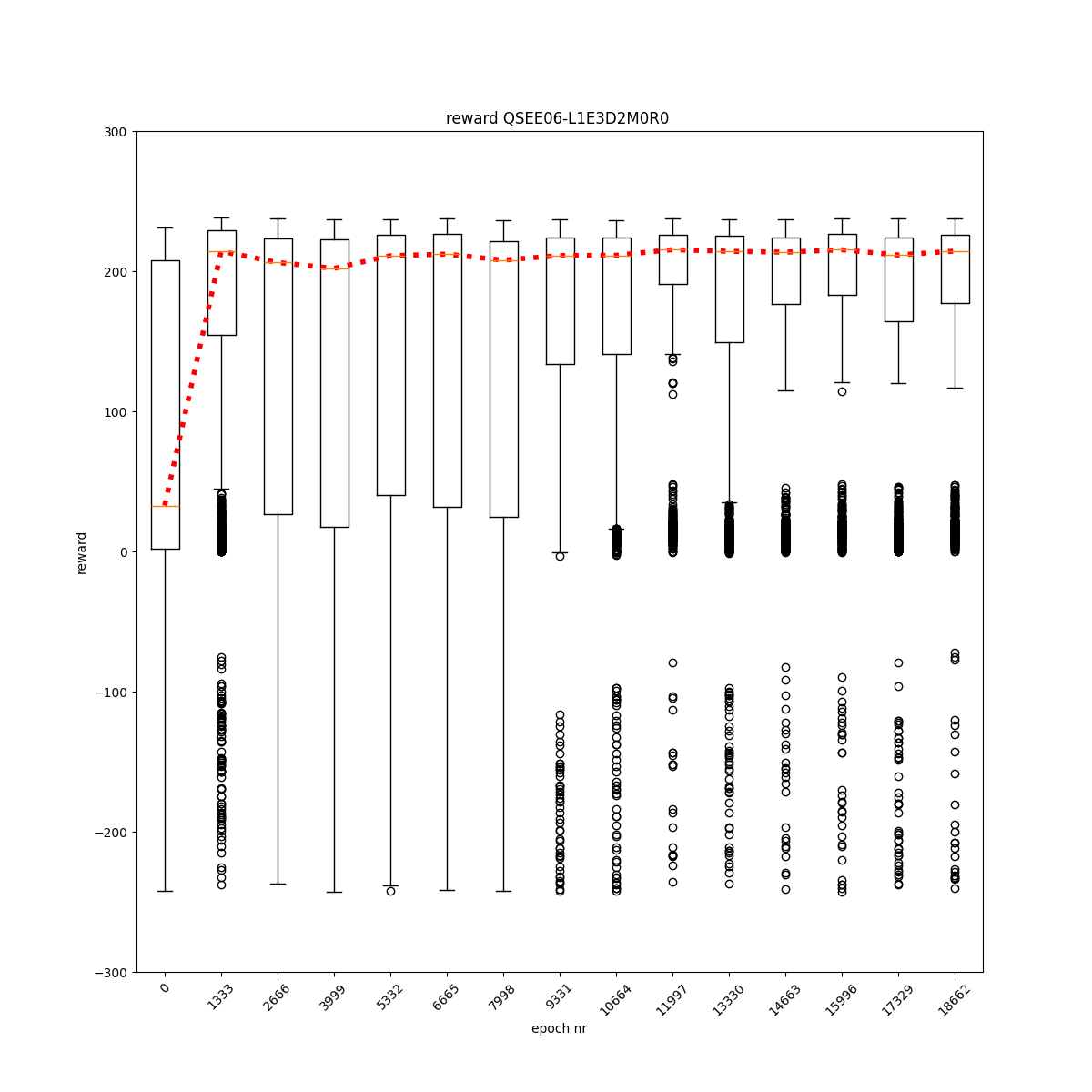

L0 E3 D2 M0 R0

q-values: videos 0-11 (two panels)

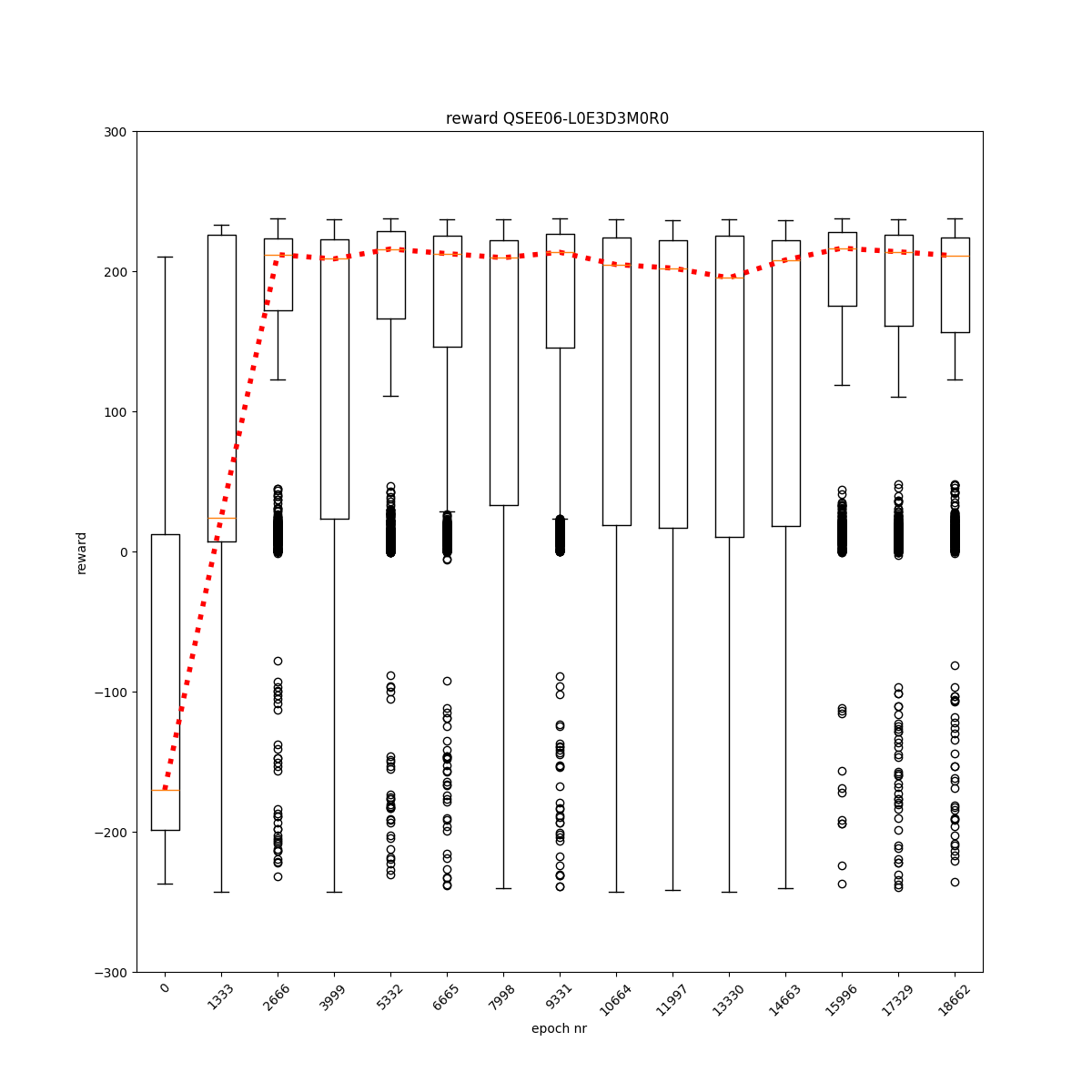

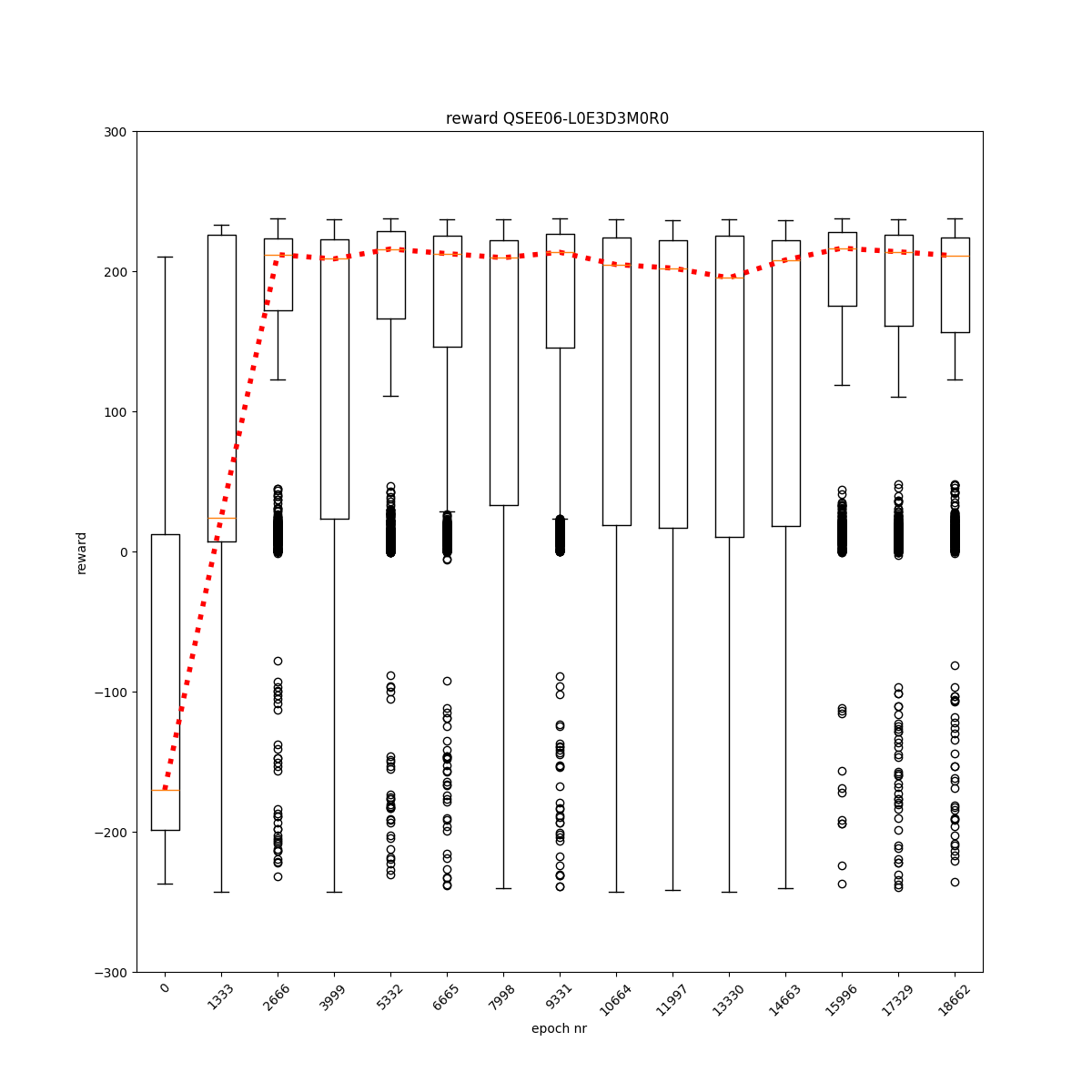

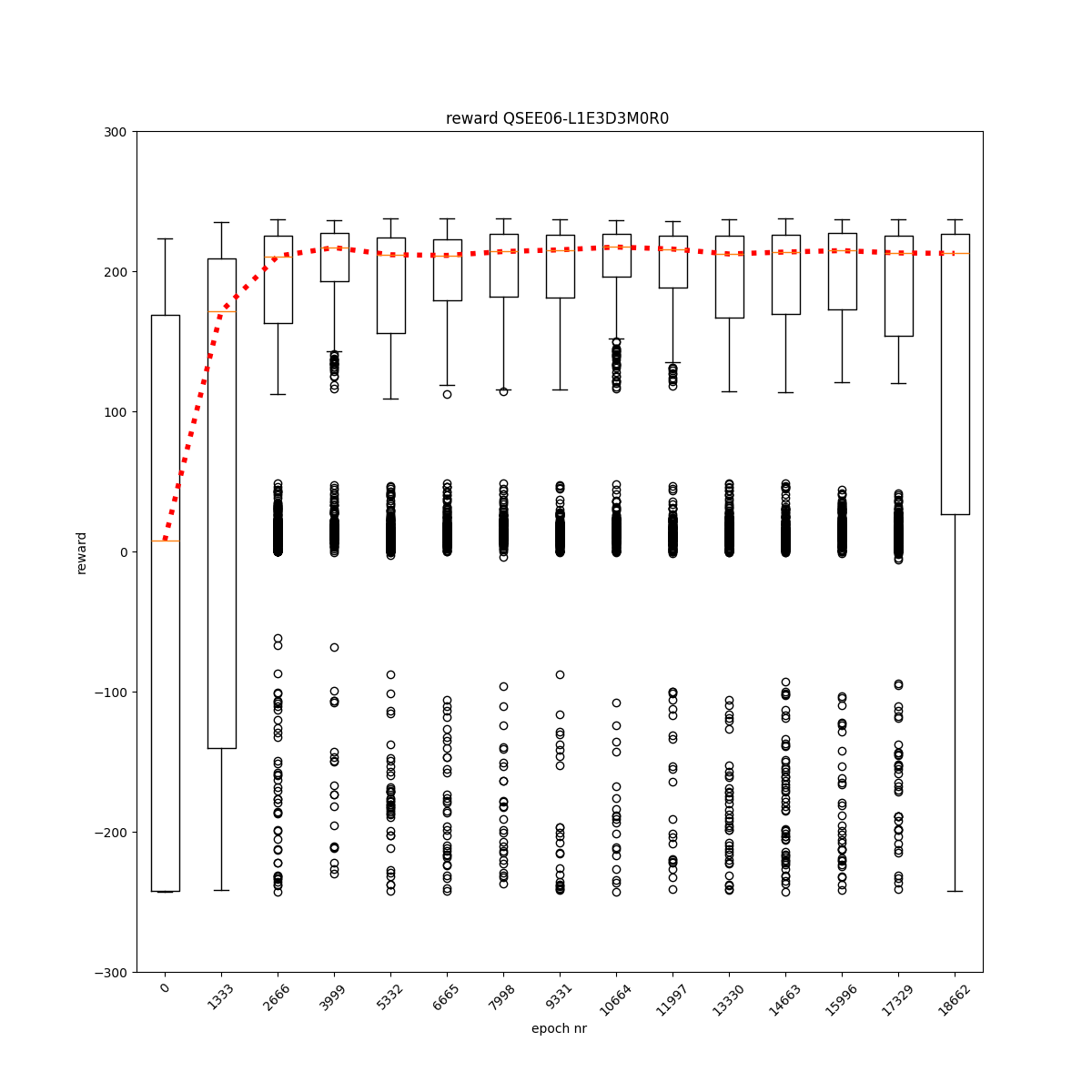

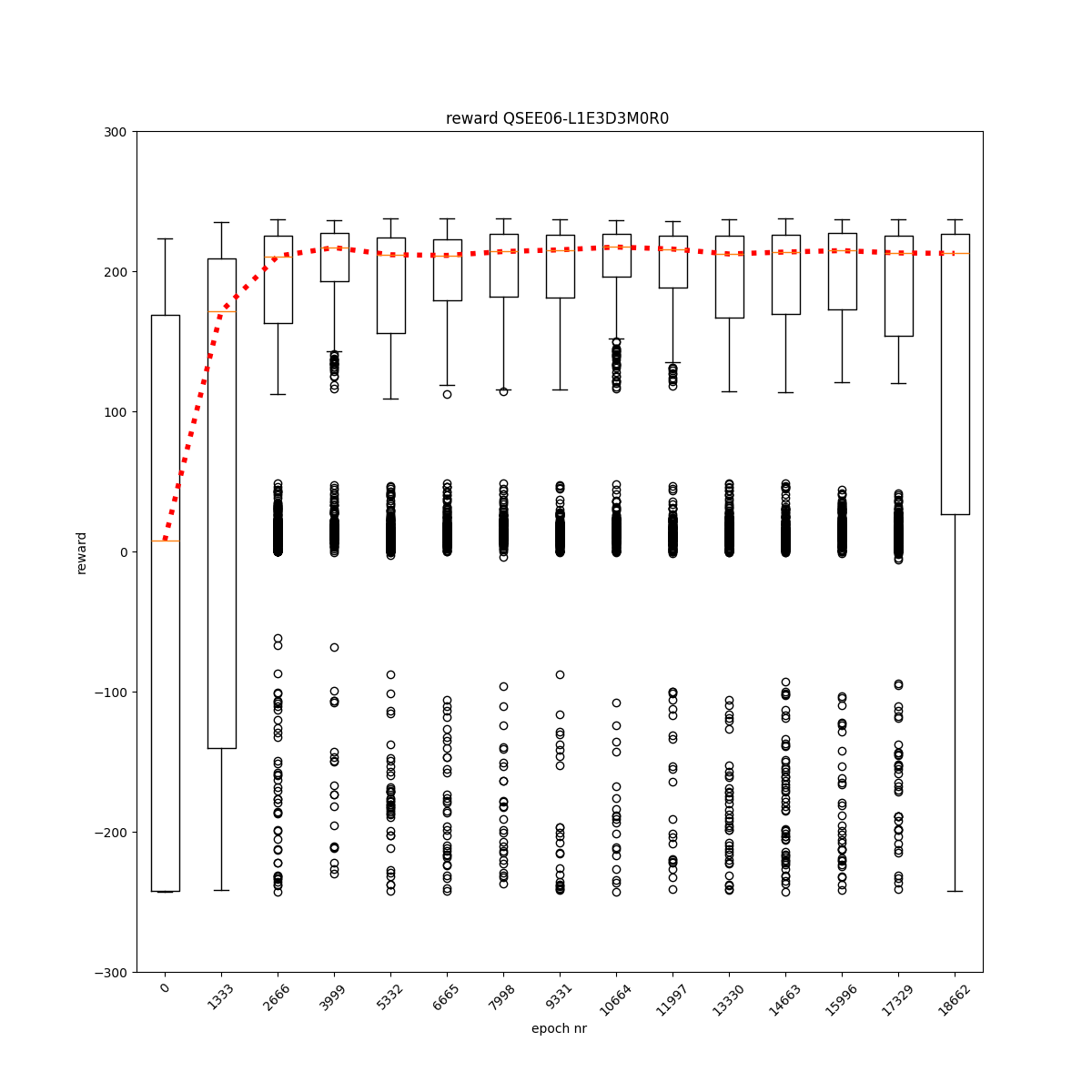

L0 E3 D3 M0 R0

q-values: videos 0-11 (two panels)

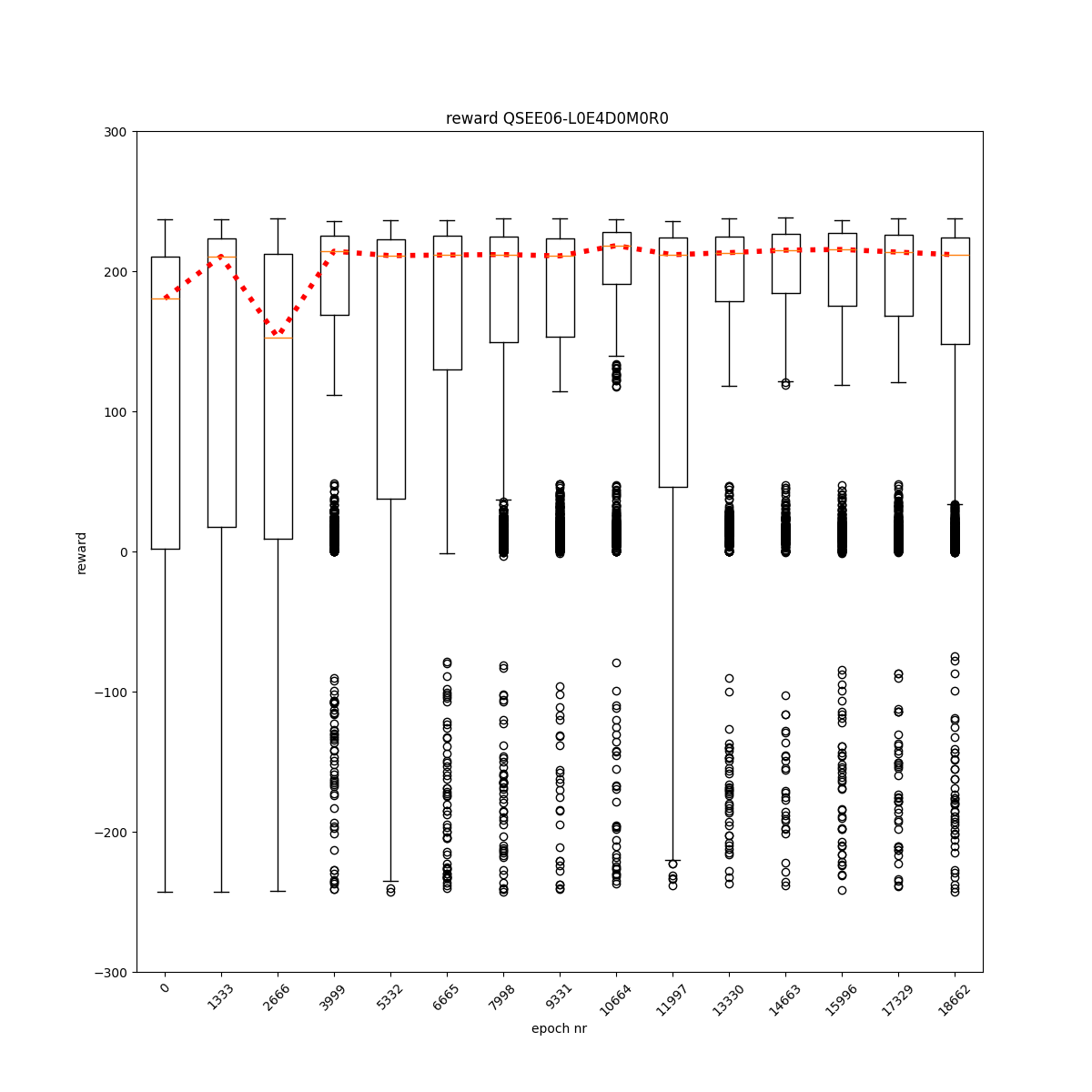

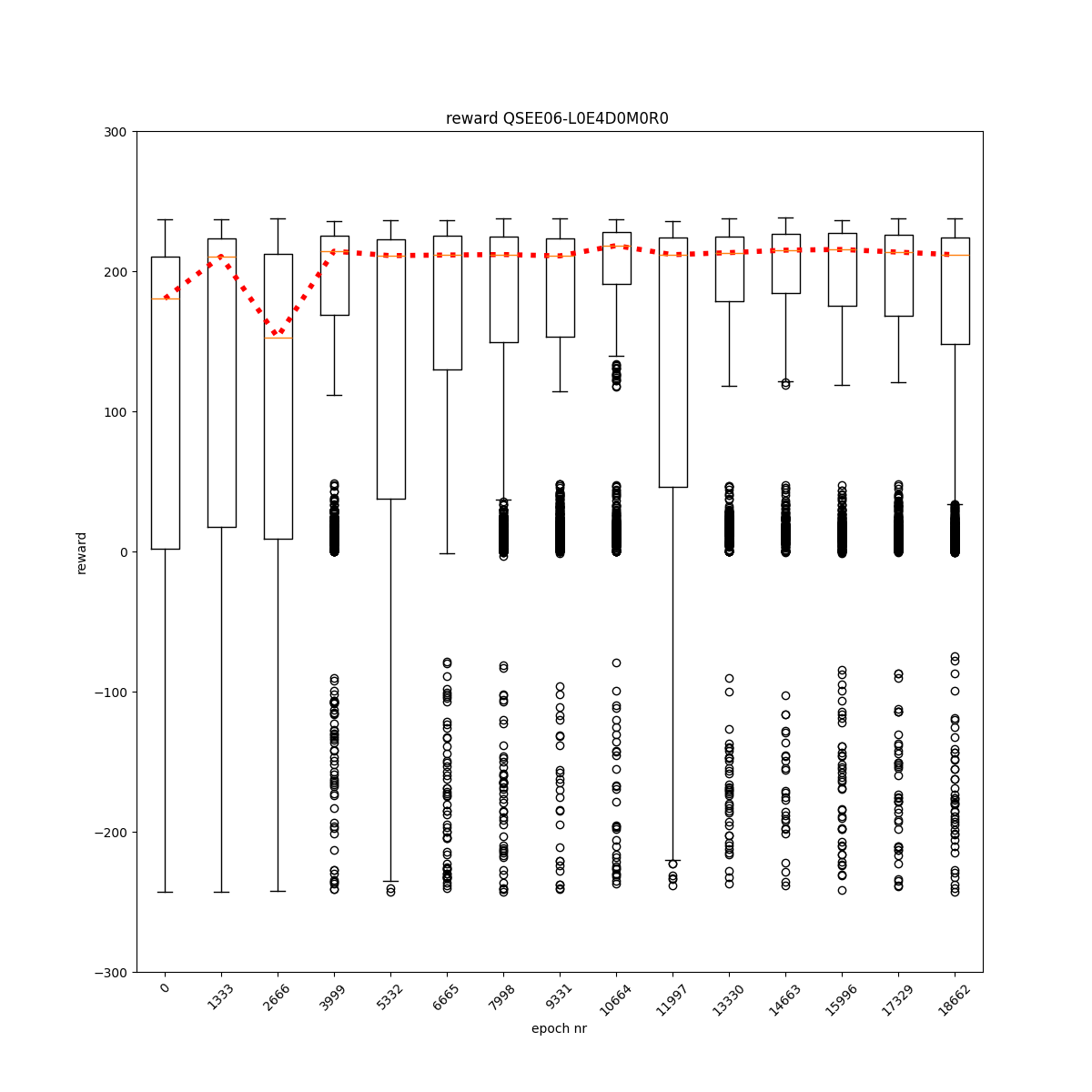

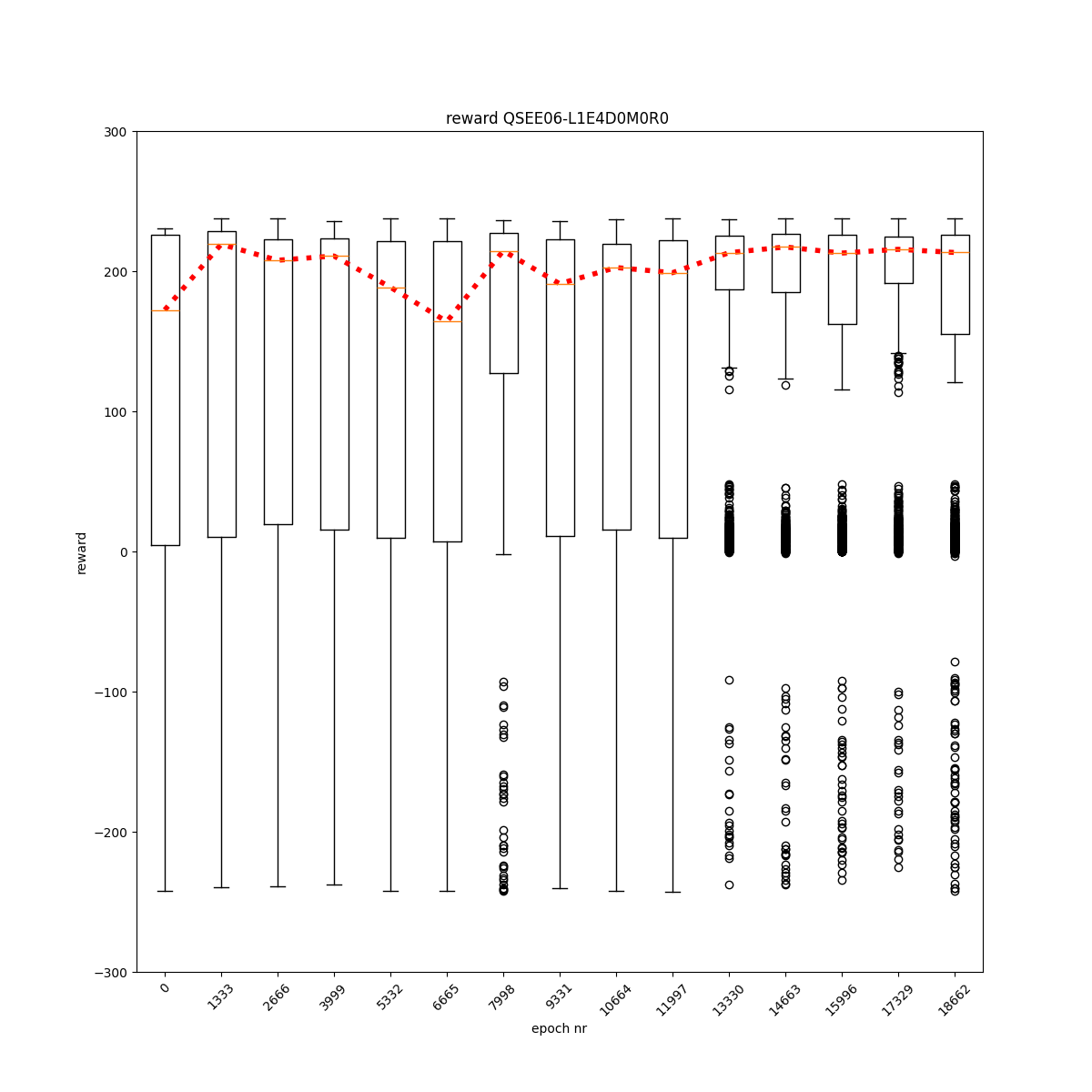

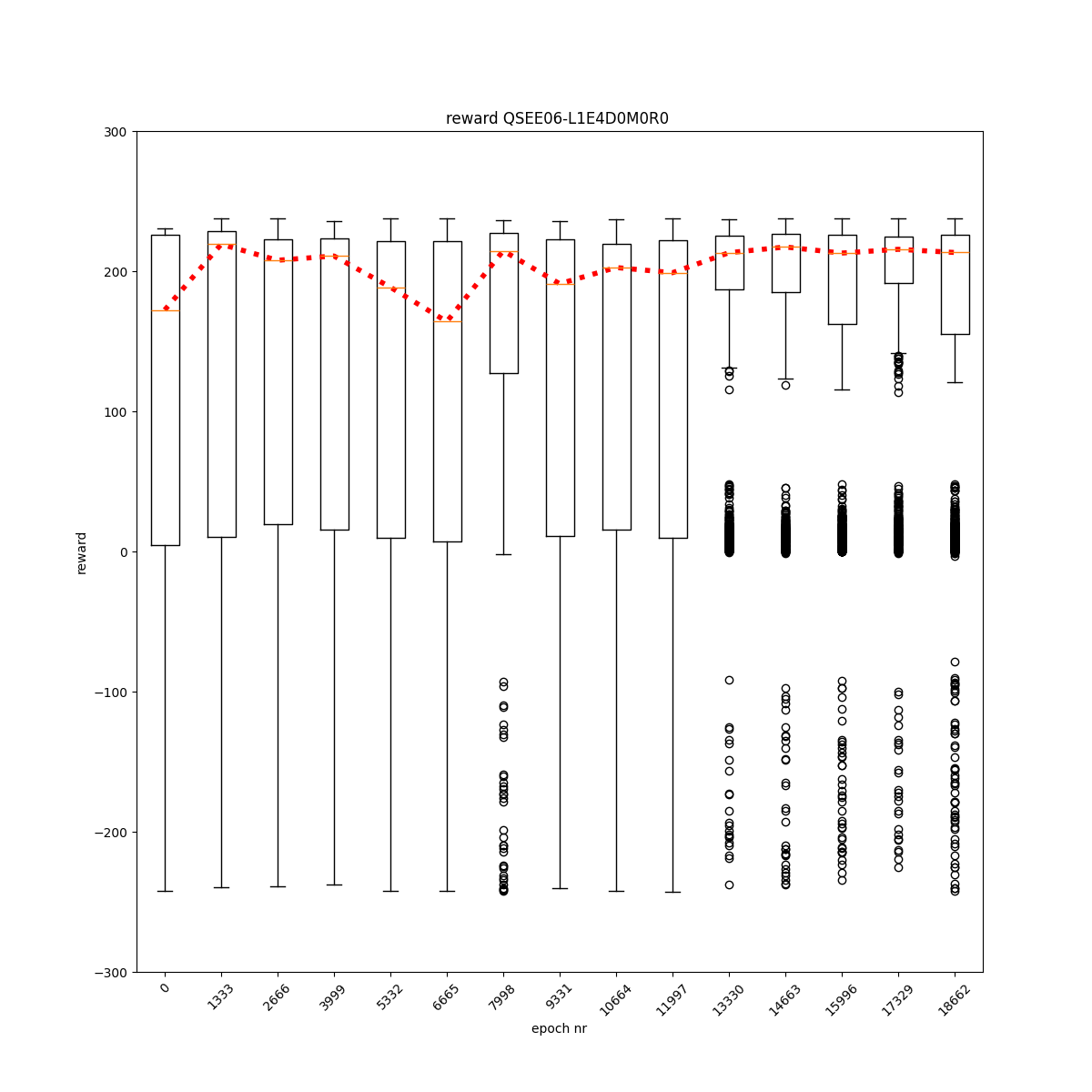

L0 E4 D0 M0 R0

q-values: videos 0-11 (two panels)

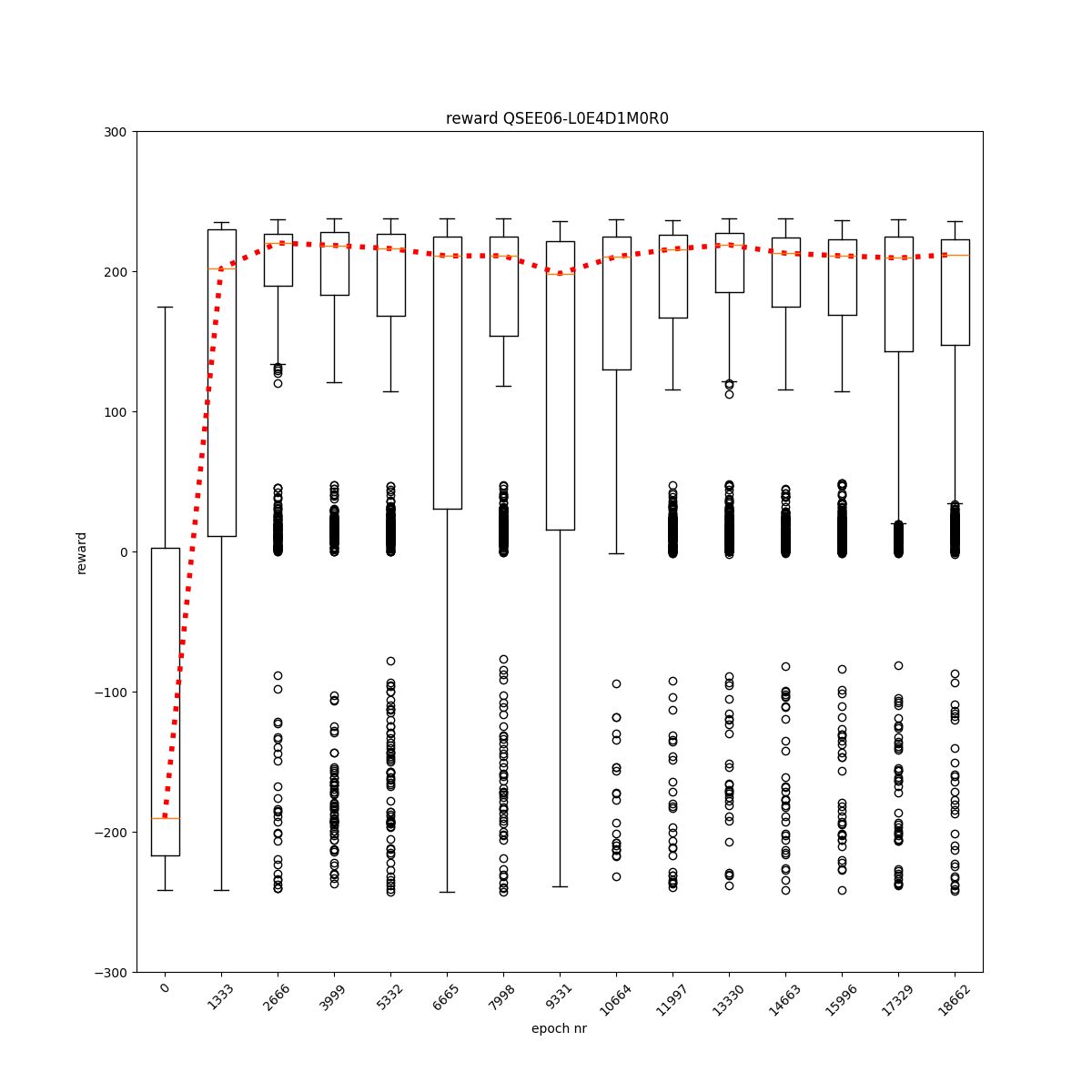

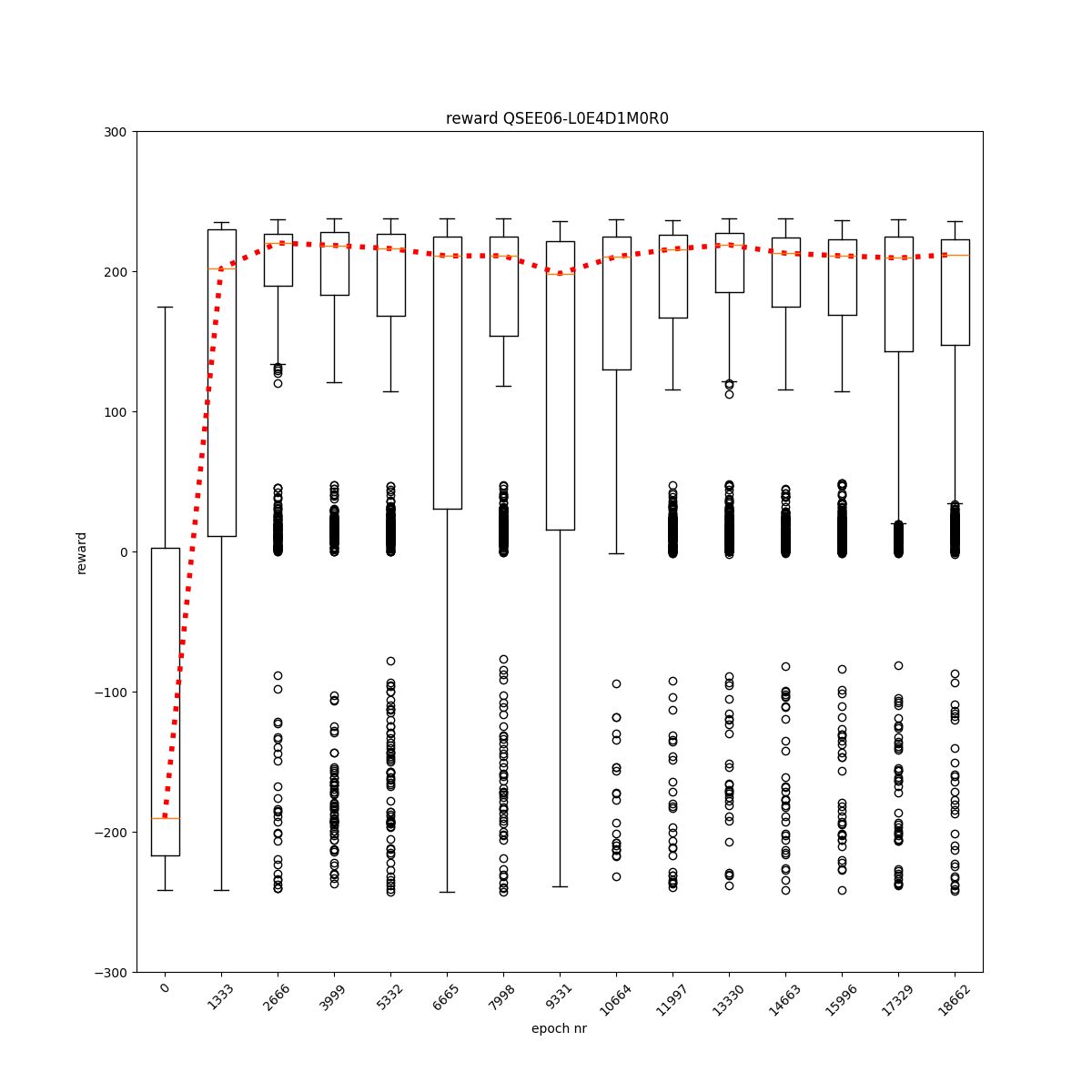

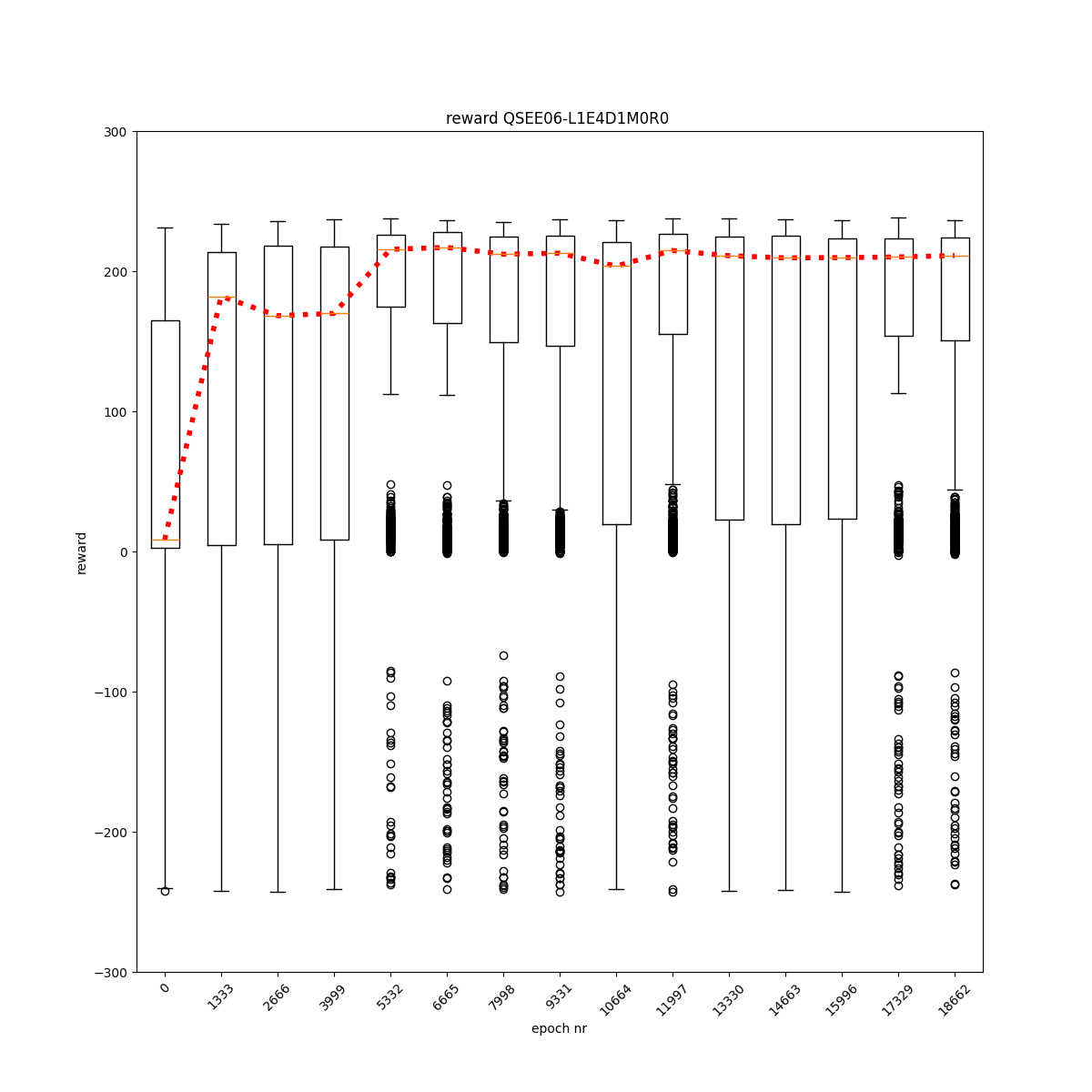

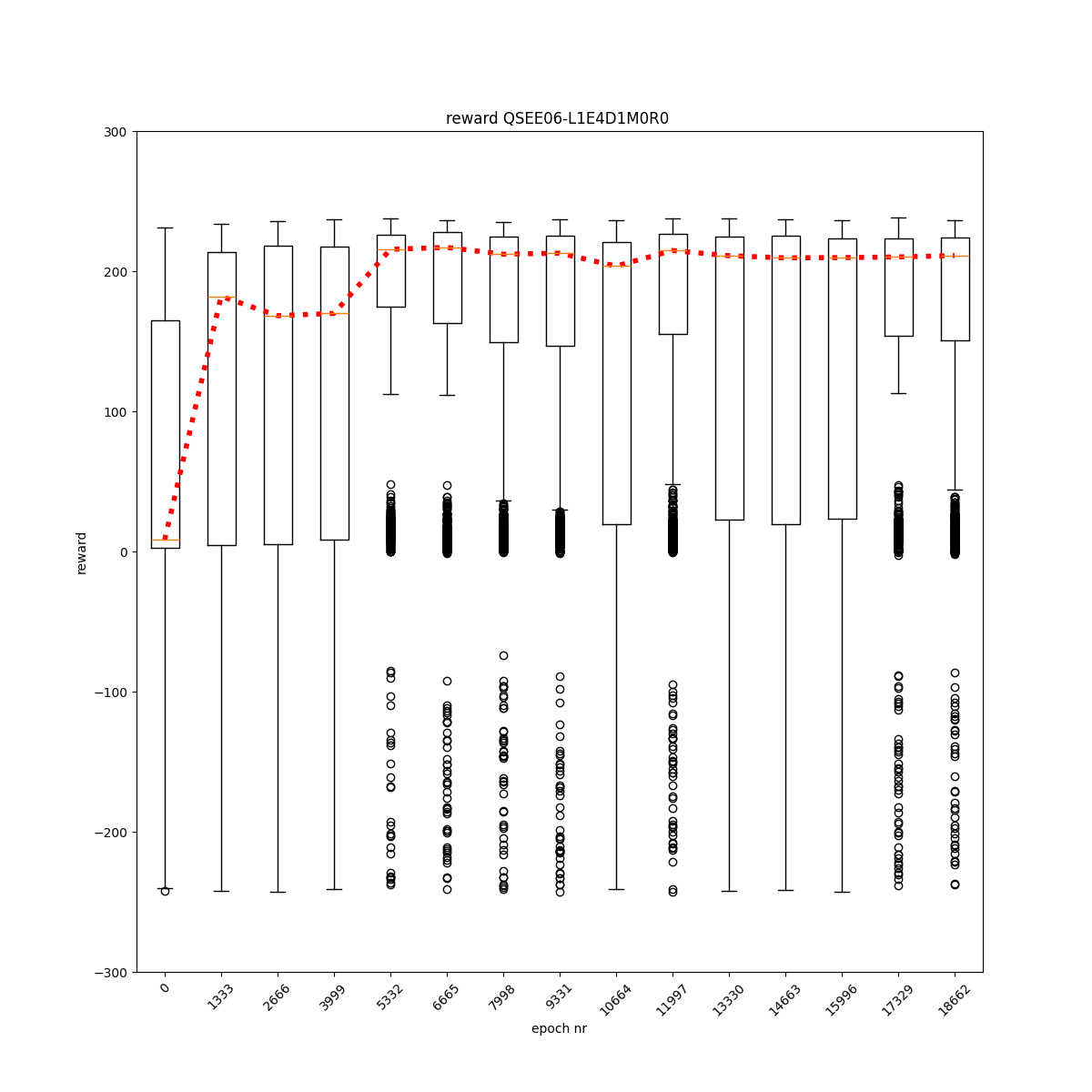

L0 E4 D1 M0 R0

q-values: videos 0-11 (two panels)

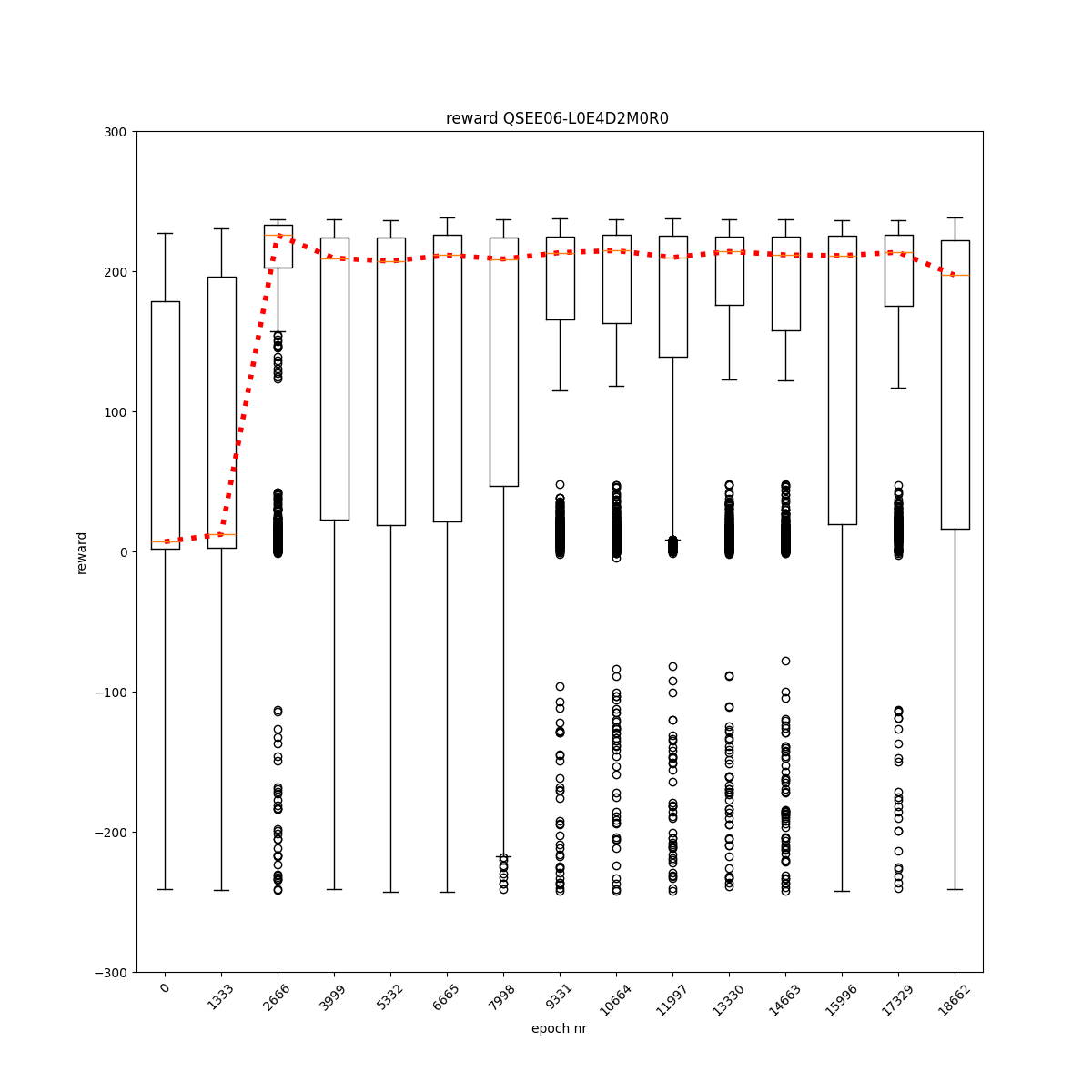

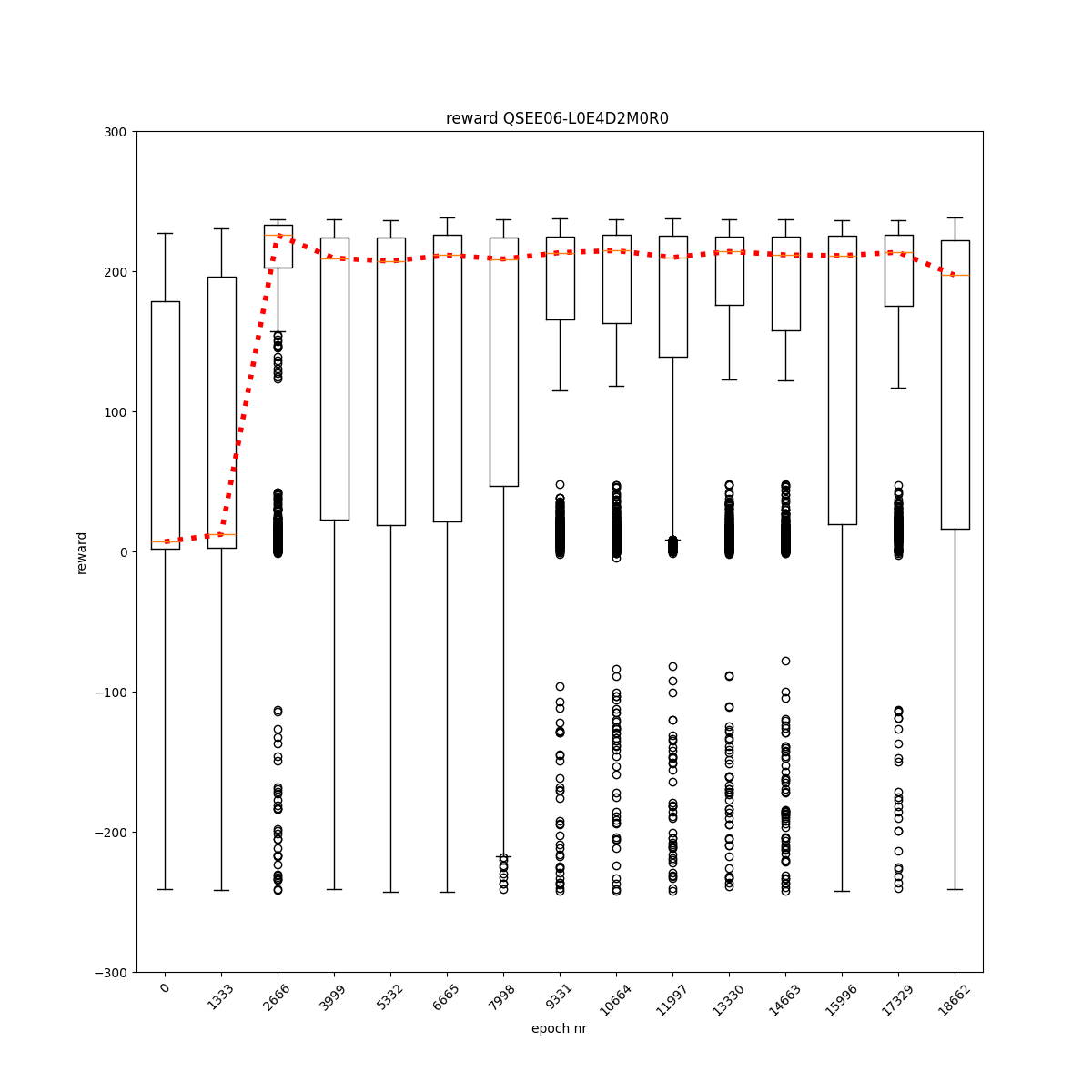

L0 E4 D2 M0 R0

q-values: videos 0-11 (two panels)

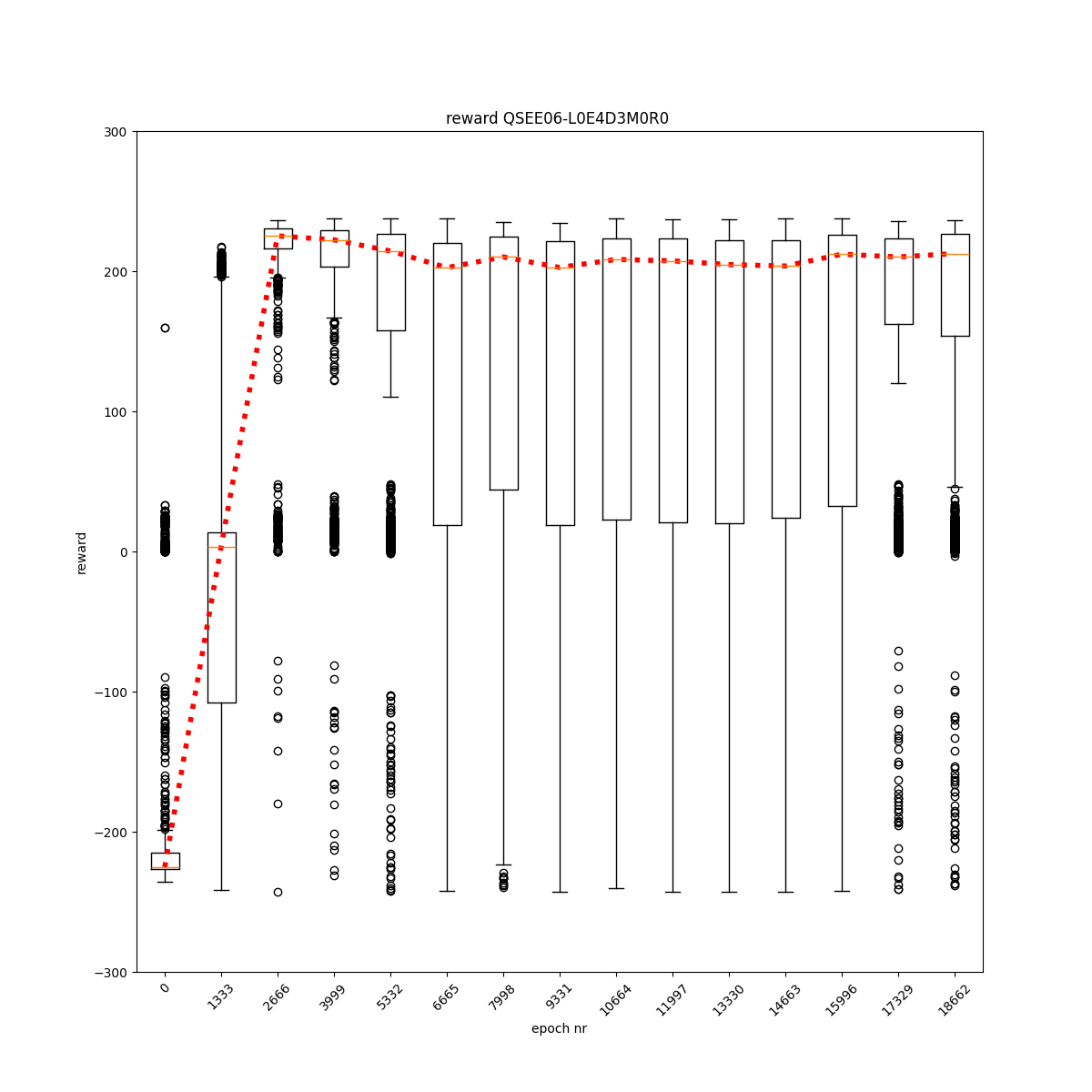

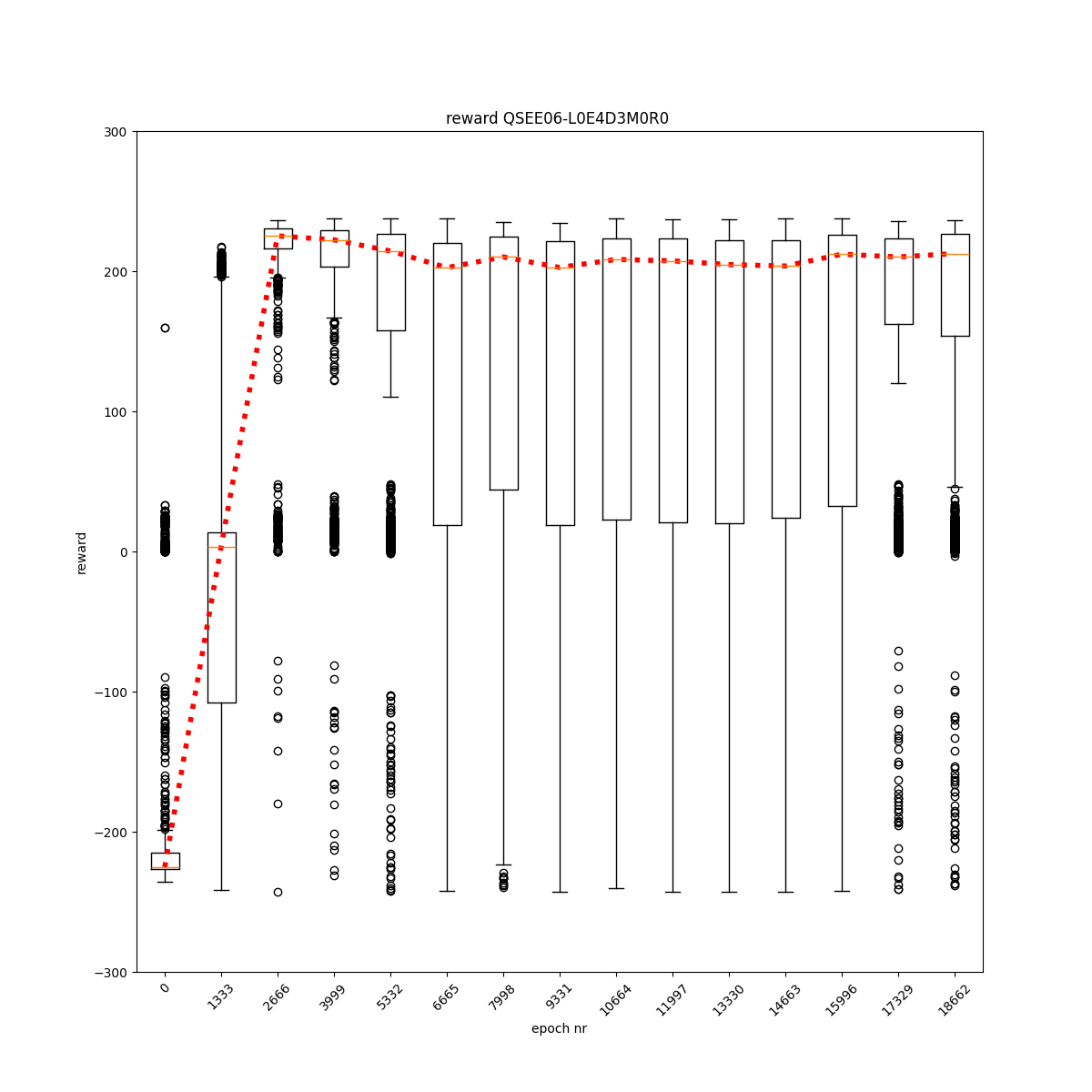

L0 E4 D3 M0 R0

q-values: videos 0-11 (two panels)

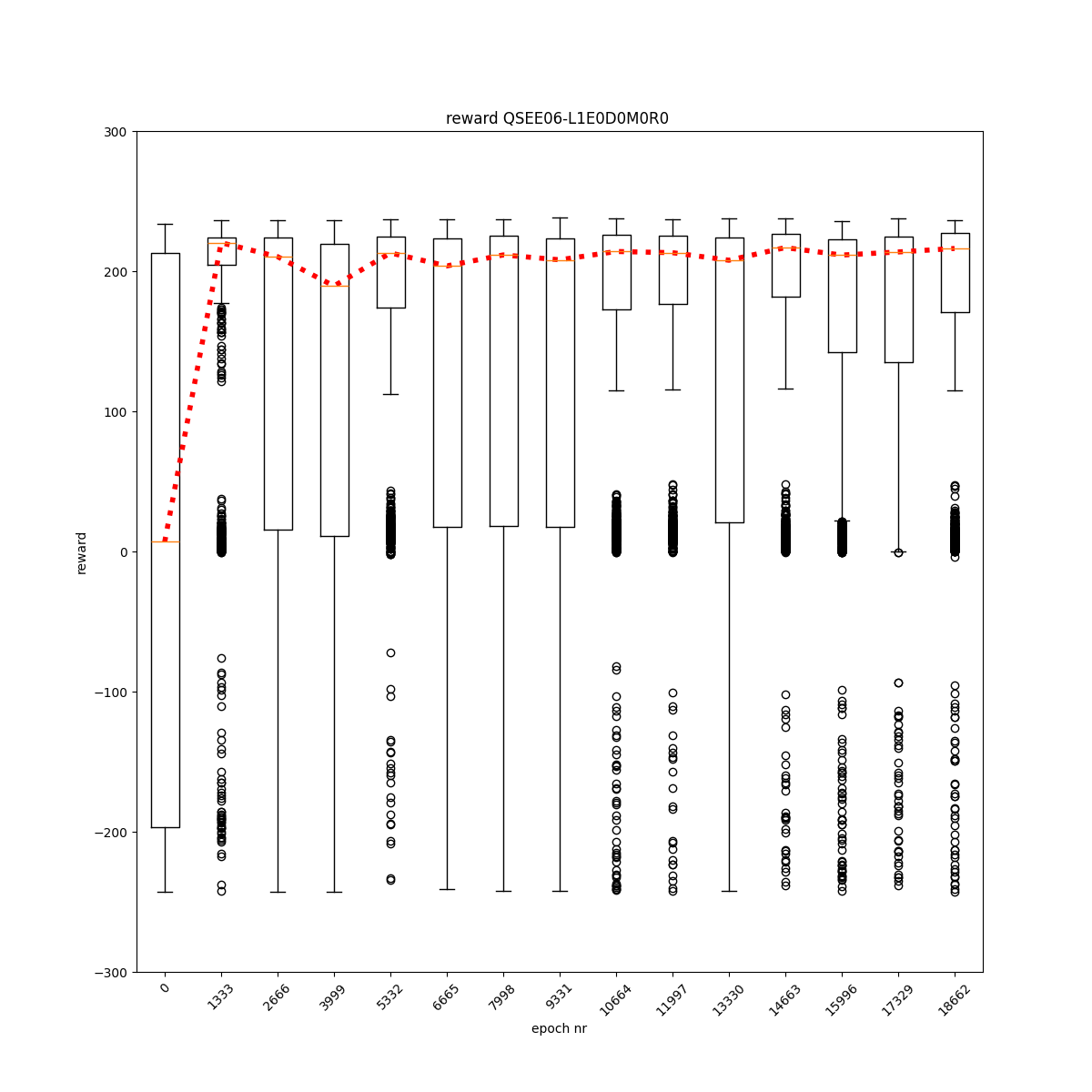

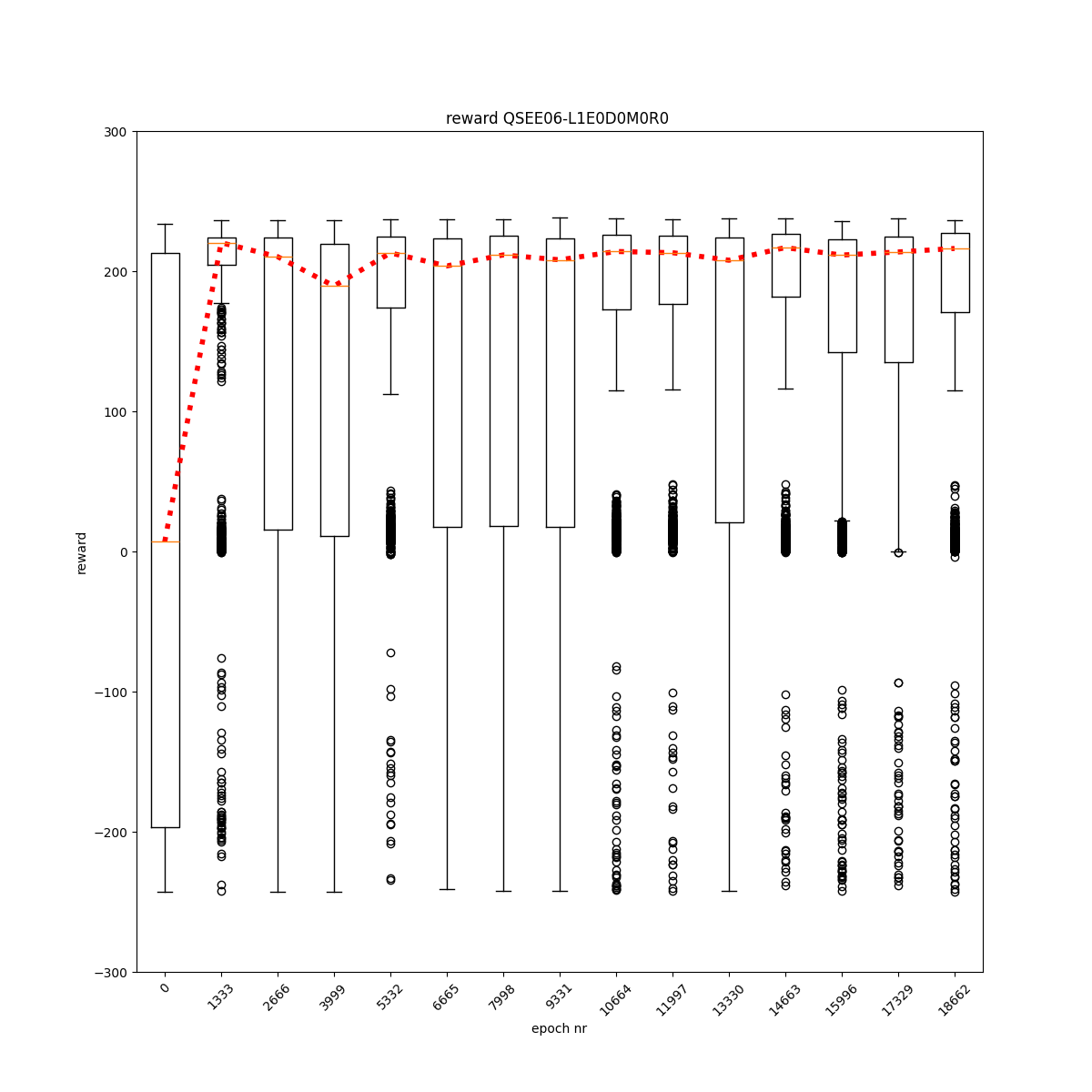

L1 E0 D0 M0 R0

q-values: videos 0-11 (two panels)

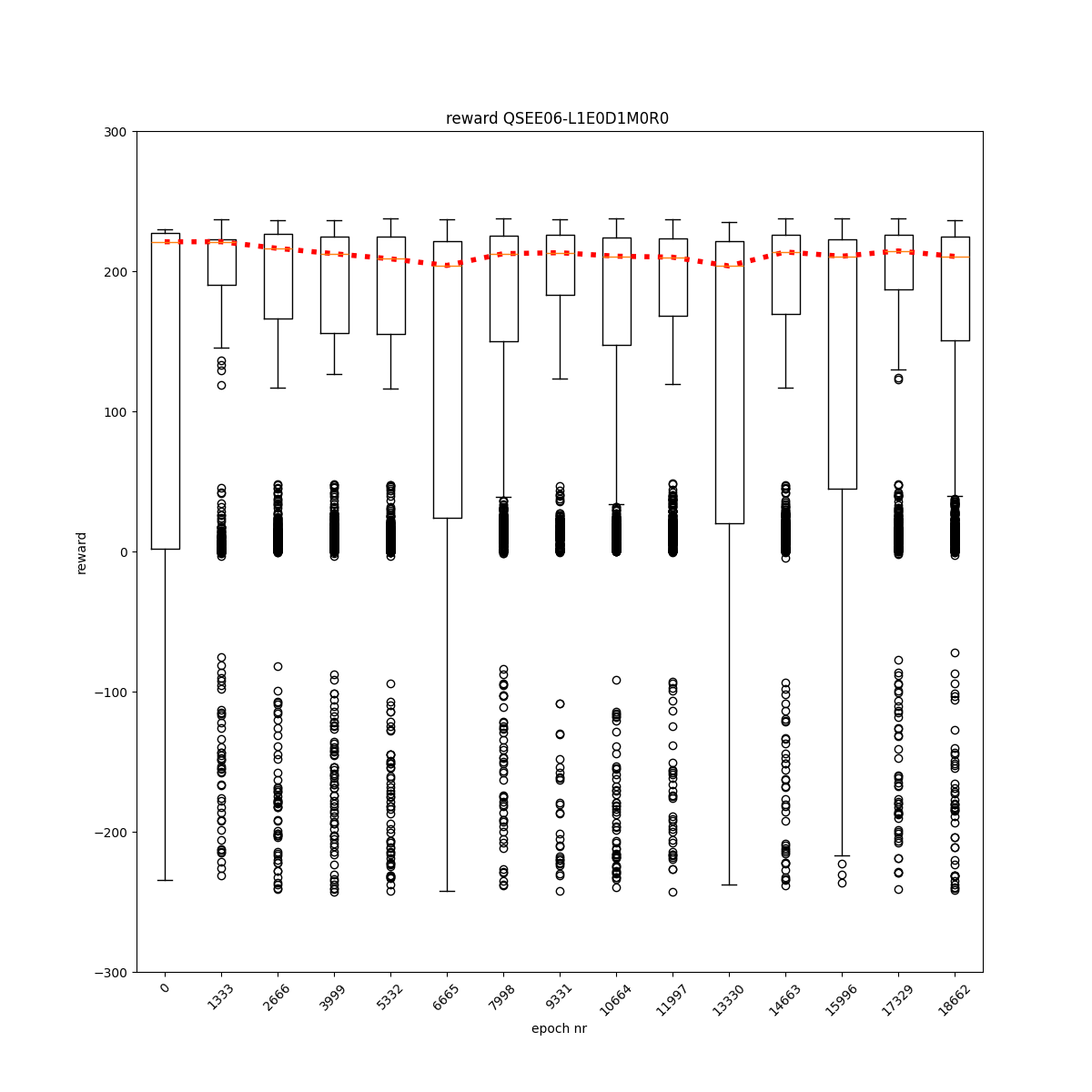

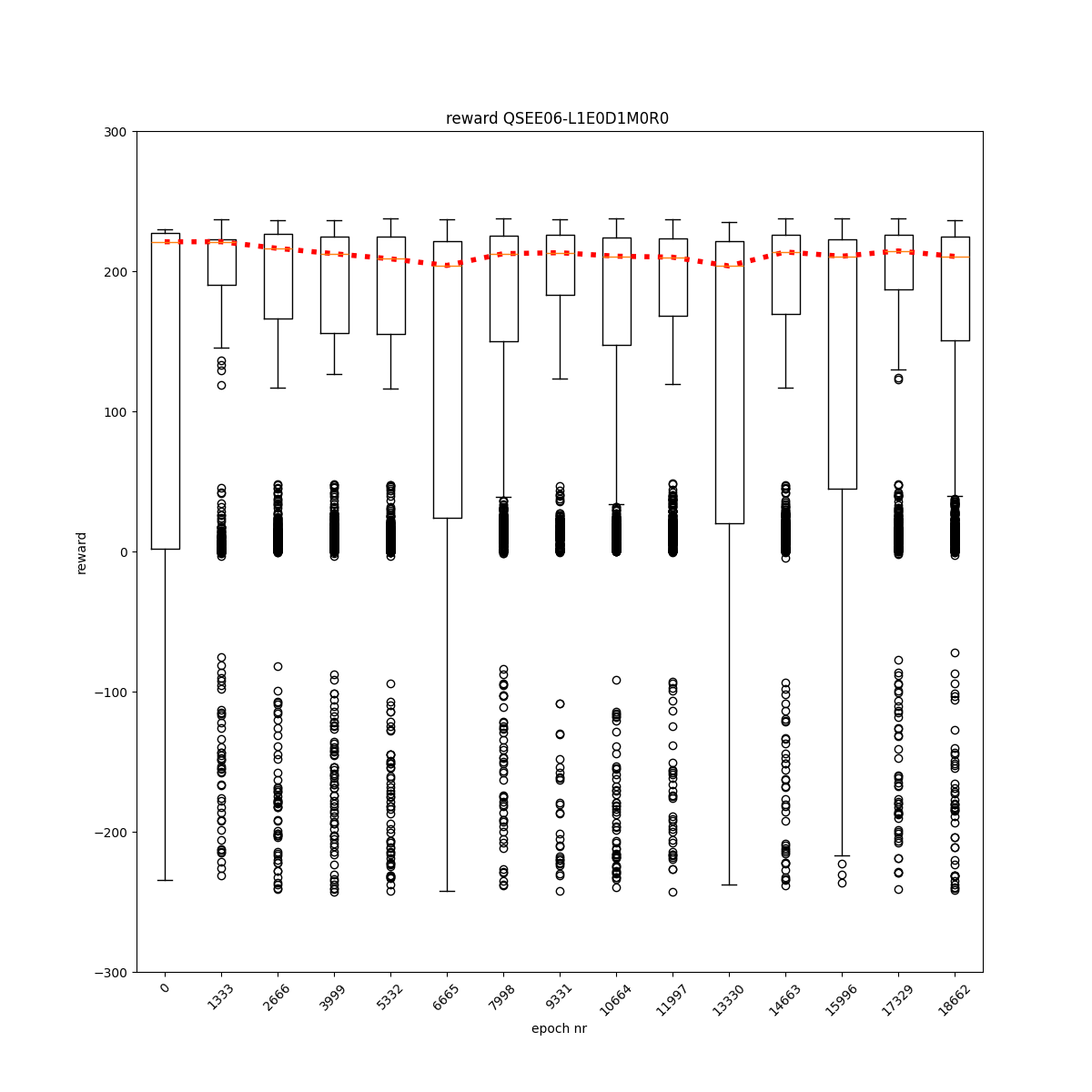

L1 E0 D1 M0 R0

q-values: videos 0-11 (two panels)

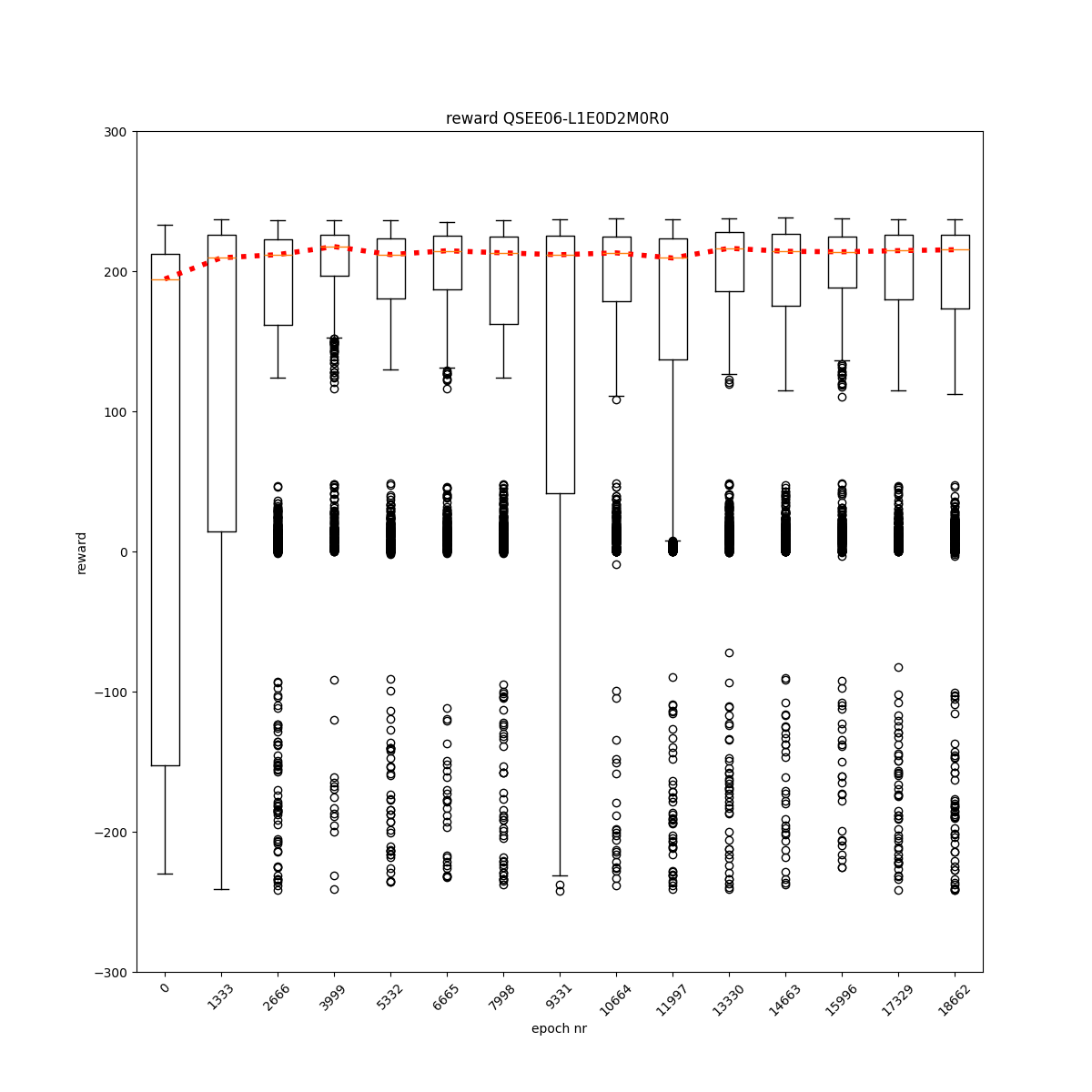

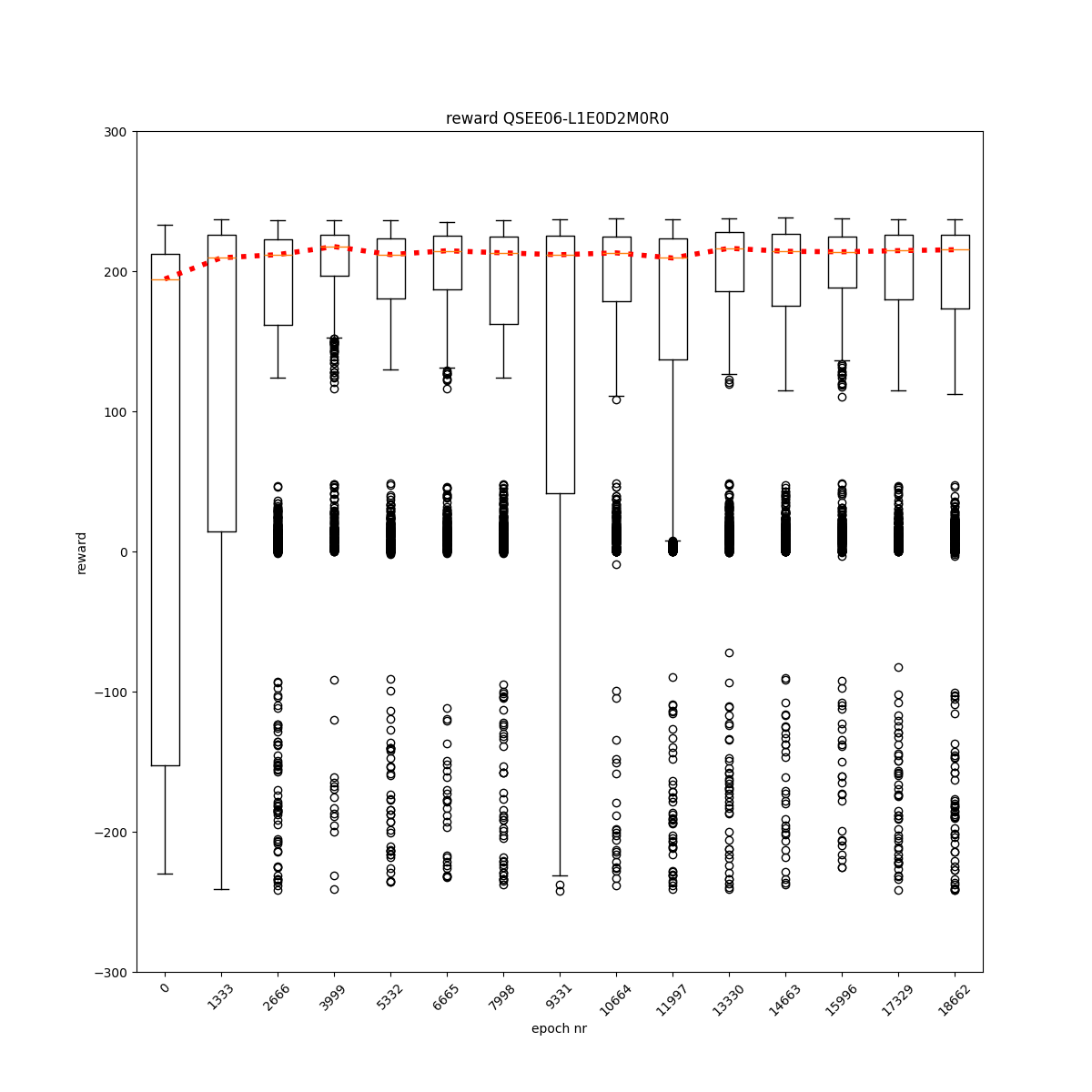

L1 E0 D2 M0 R0

q-values: videos 0-11 (two panels)

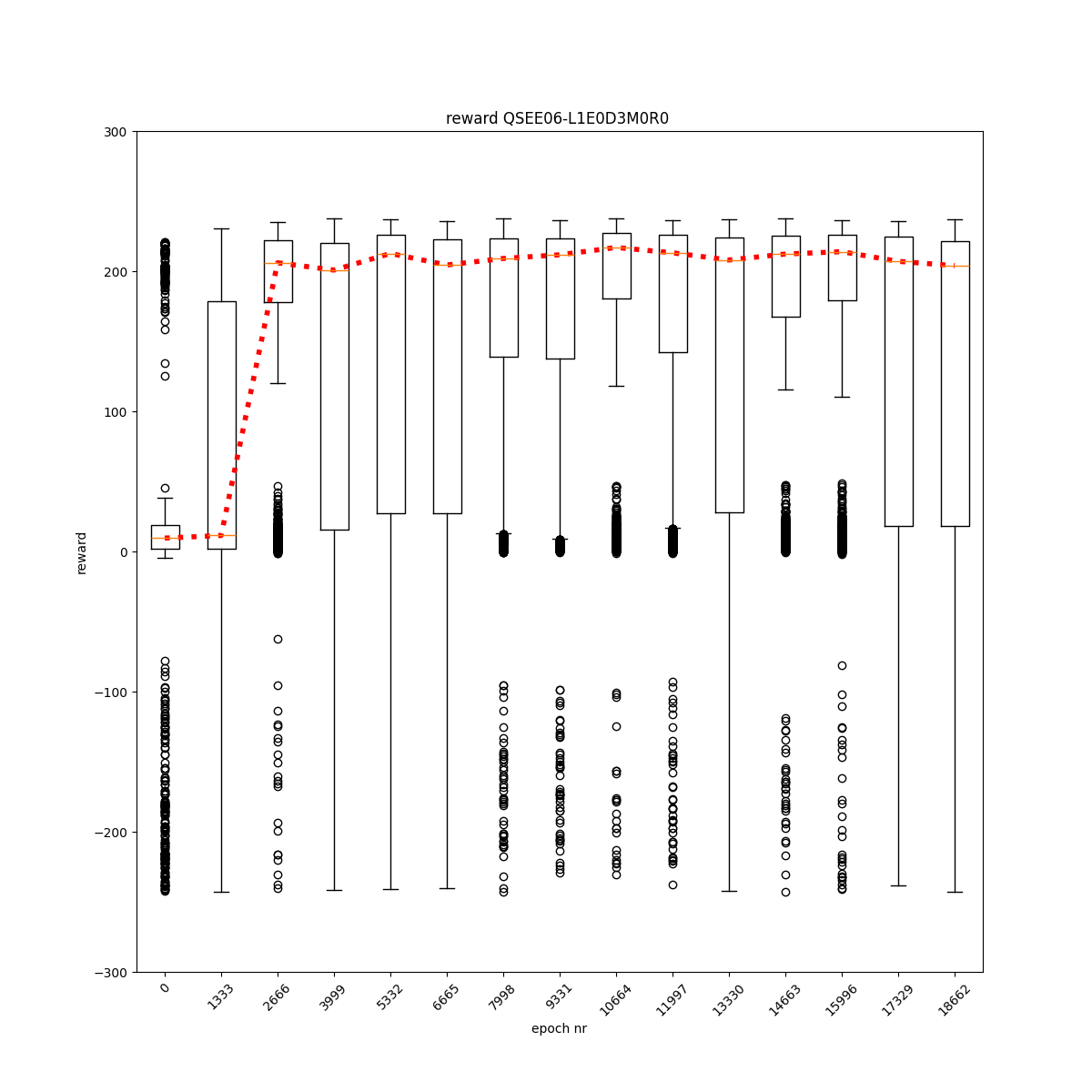

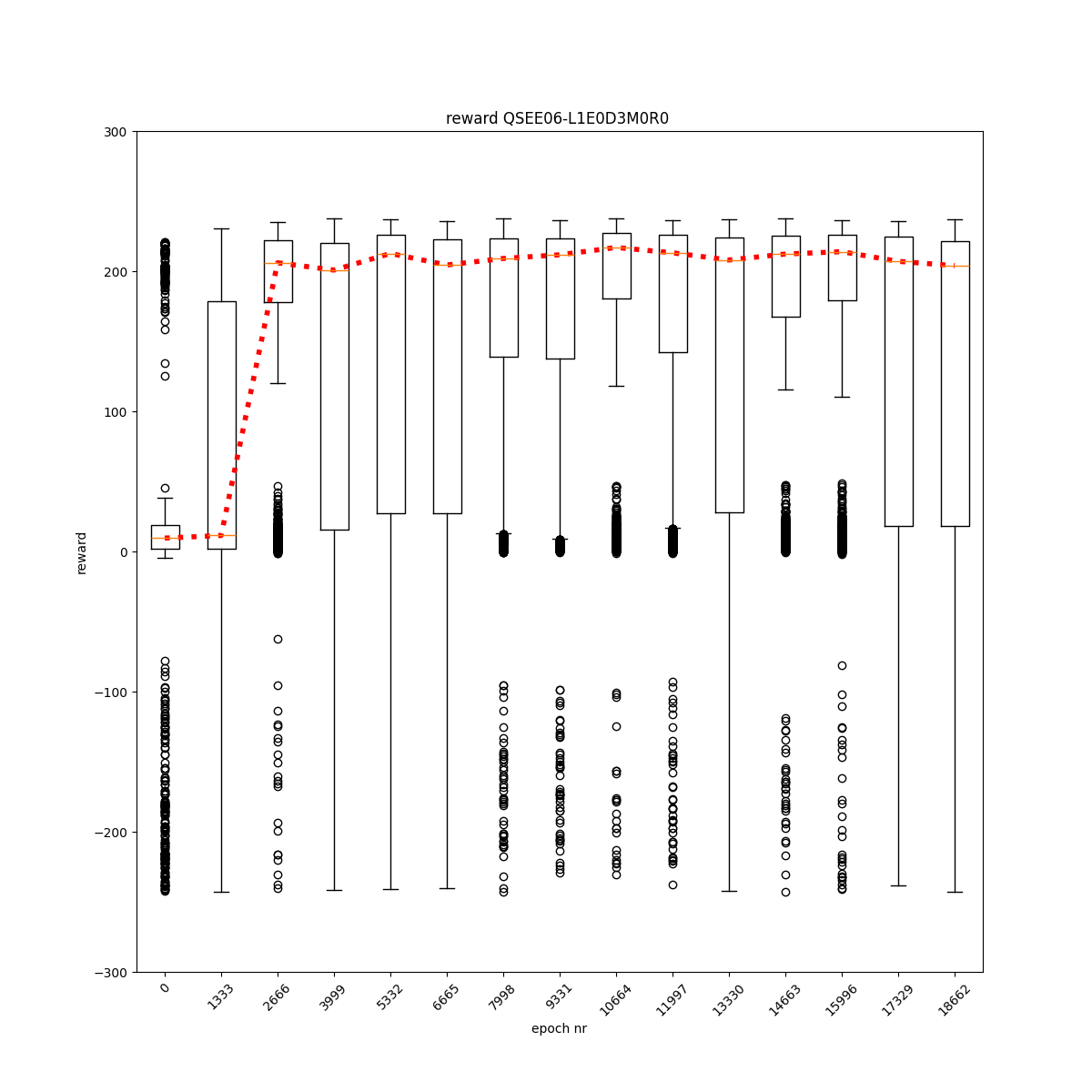

L1 E0 D3 M0 R0

q-values: videos 0-11 (two panels)

L1 E1 D0 M0 R0

q-values: videos 0-11 (two panels)

L1 E1 D1 M0 R0

q-values: videos 0-11 (two panels)

L1 E1 D2 M0 R0

q-values: videos 0-11 (two panels)

L1 E1 D3 M0 R0

q-values: videos 0-11 (two panels)

L1 E2 D0 M0 R0

q-values: videos 0-11 (two panels)

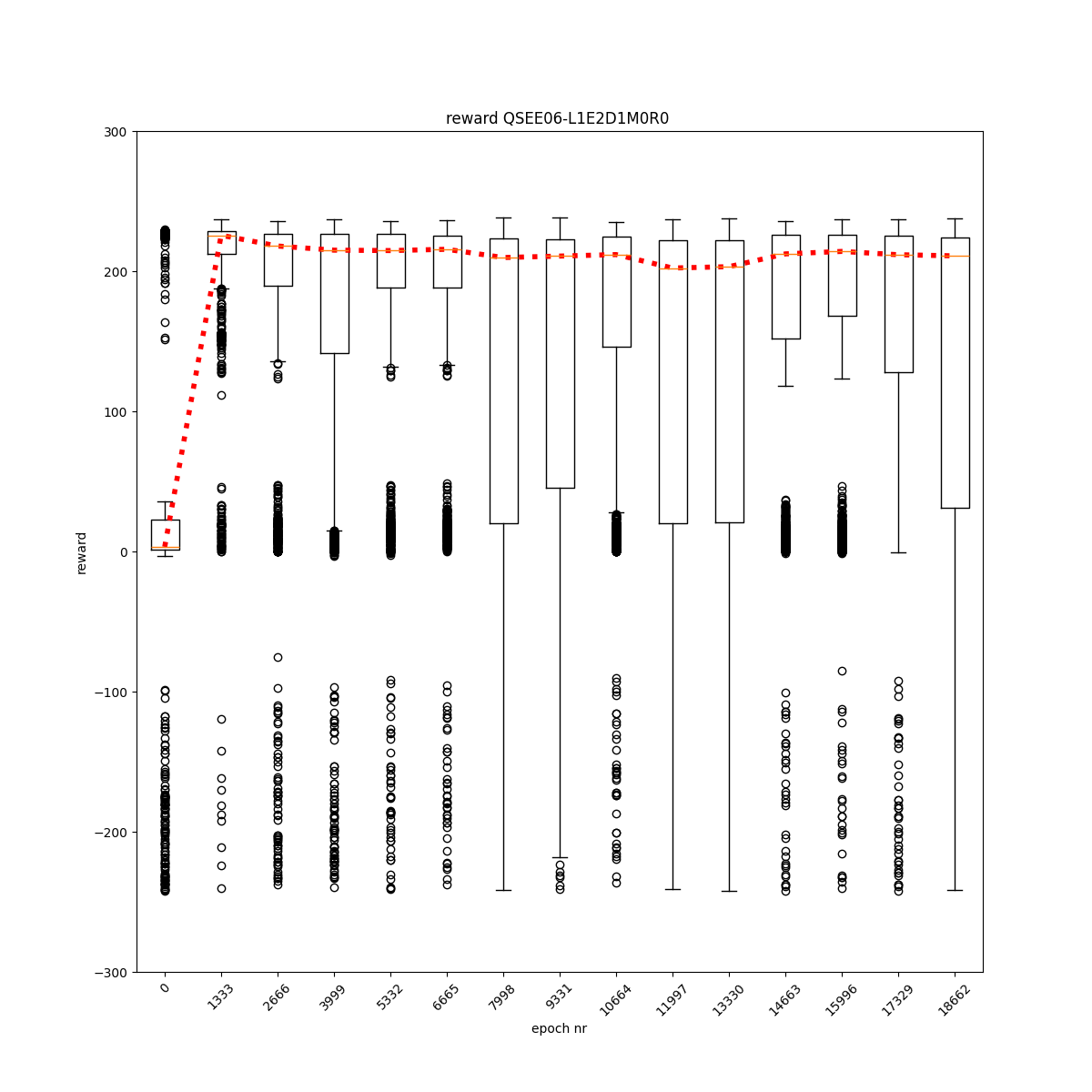

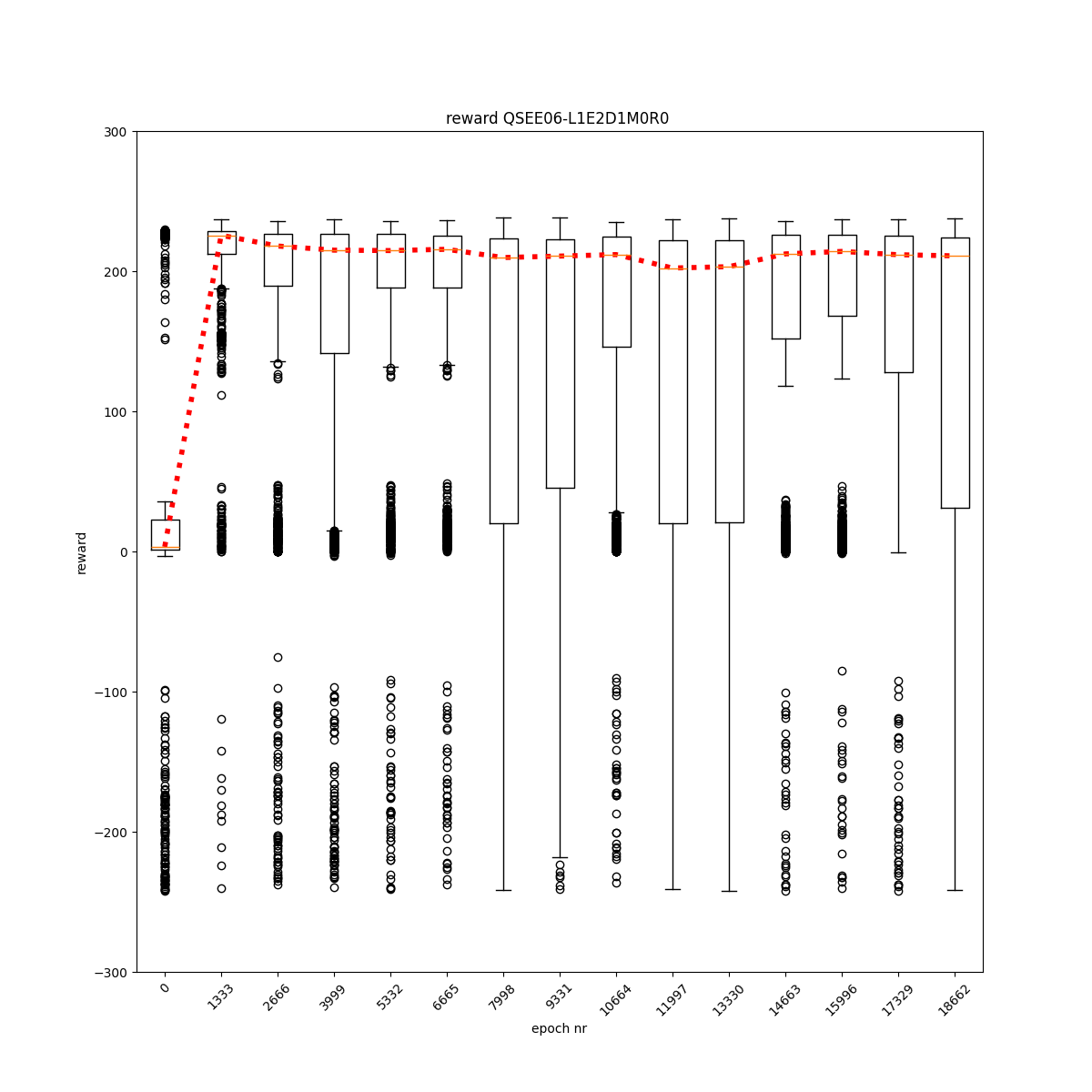

L1 E2 D1 M0 R0

q-values: videos 0-11 (two panels)

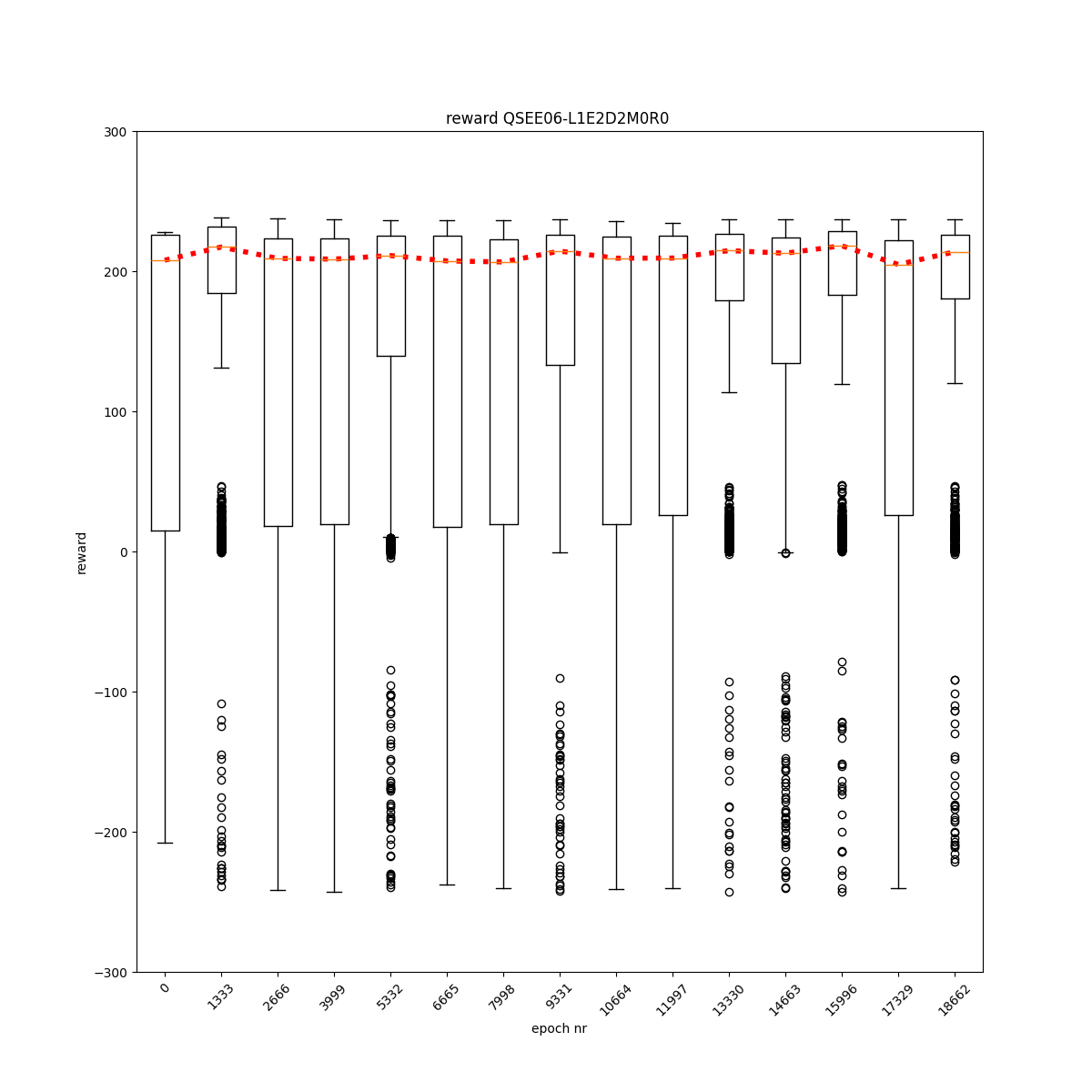

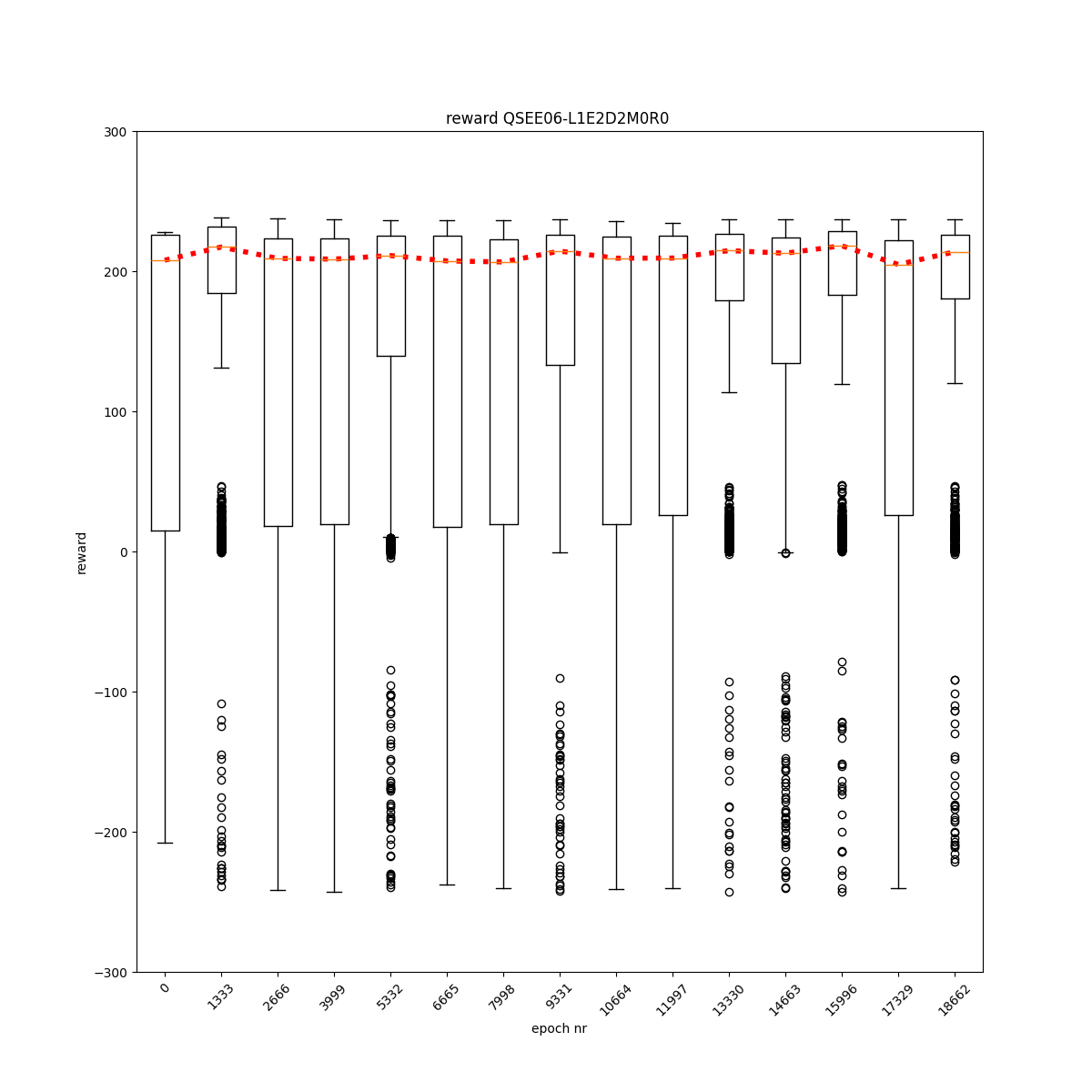

L1 E2 D2 M0 R0

q-values: videos 0-11 (two panels)

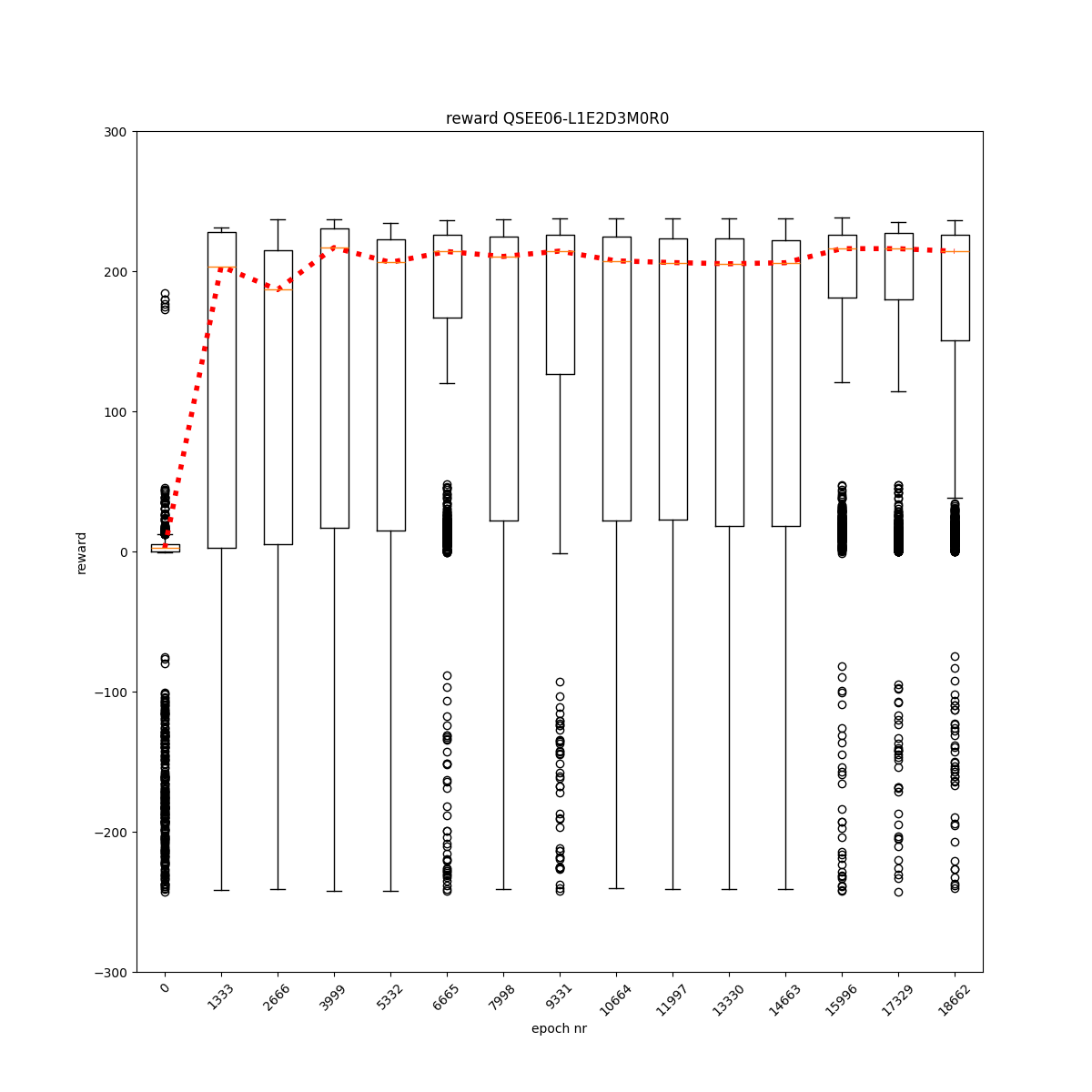

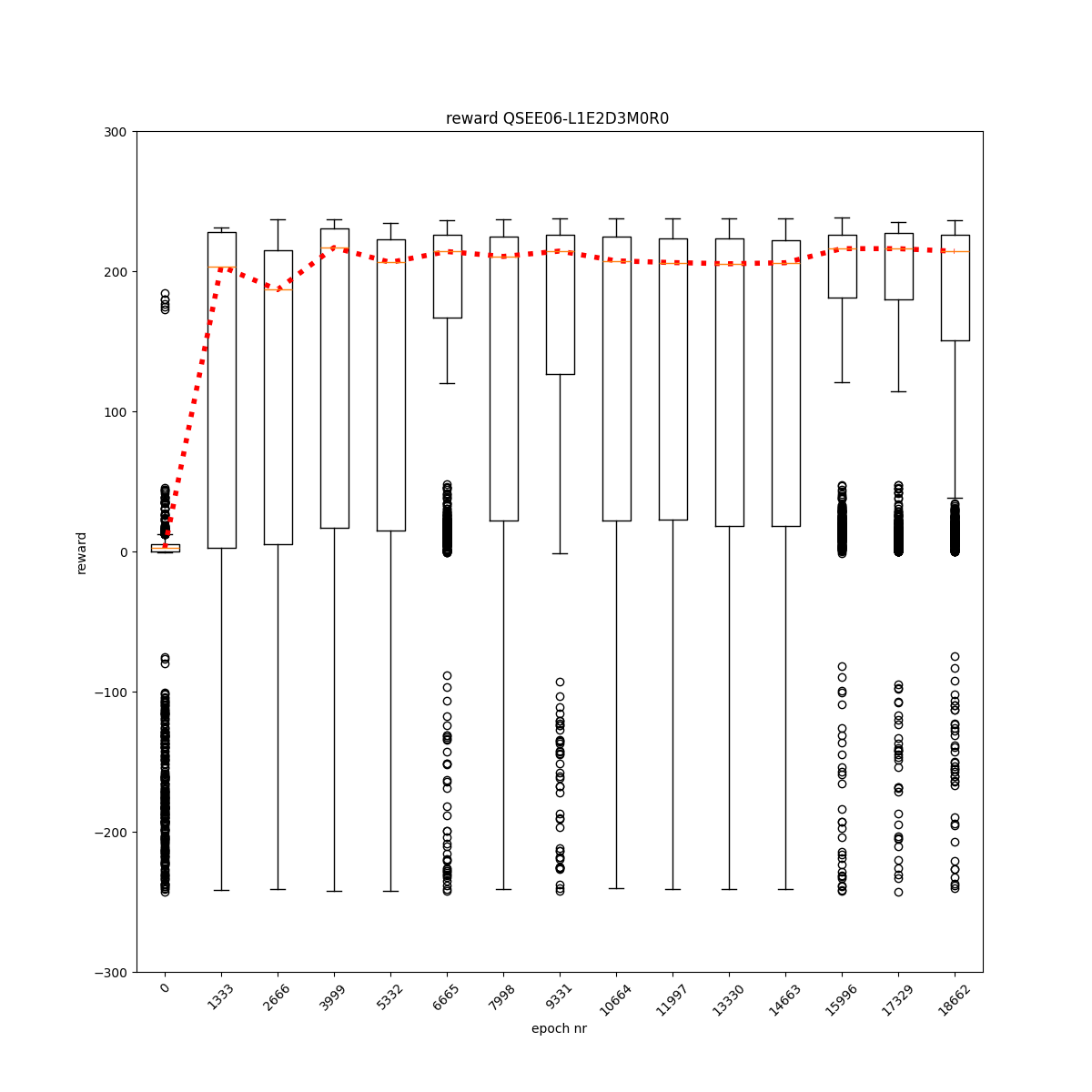

L1 E2 D3 M0 R0

q-values: videos 0-11 (two panels)

L1 E3 D0 M0 R0

q-values: videos 0-11 (two panels)

L1 E3 D1 M0 R0

q-values: videos 0-11 (two panels)

L1 E3 D2 M0 R0

q-values: videos 0-11 (two panels)

L1 E3 D3 M0 R0

q-values: videos 0-11 (two panels)

L1 E4 D0 M0 R0

q-values: videos 0-11 (two panels)

L1 E4 D1 M0 R0

q-values: videos 0-11 (two panels)

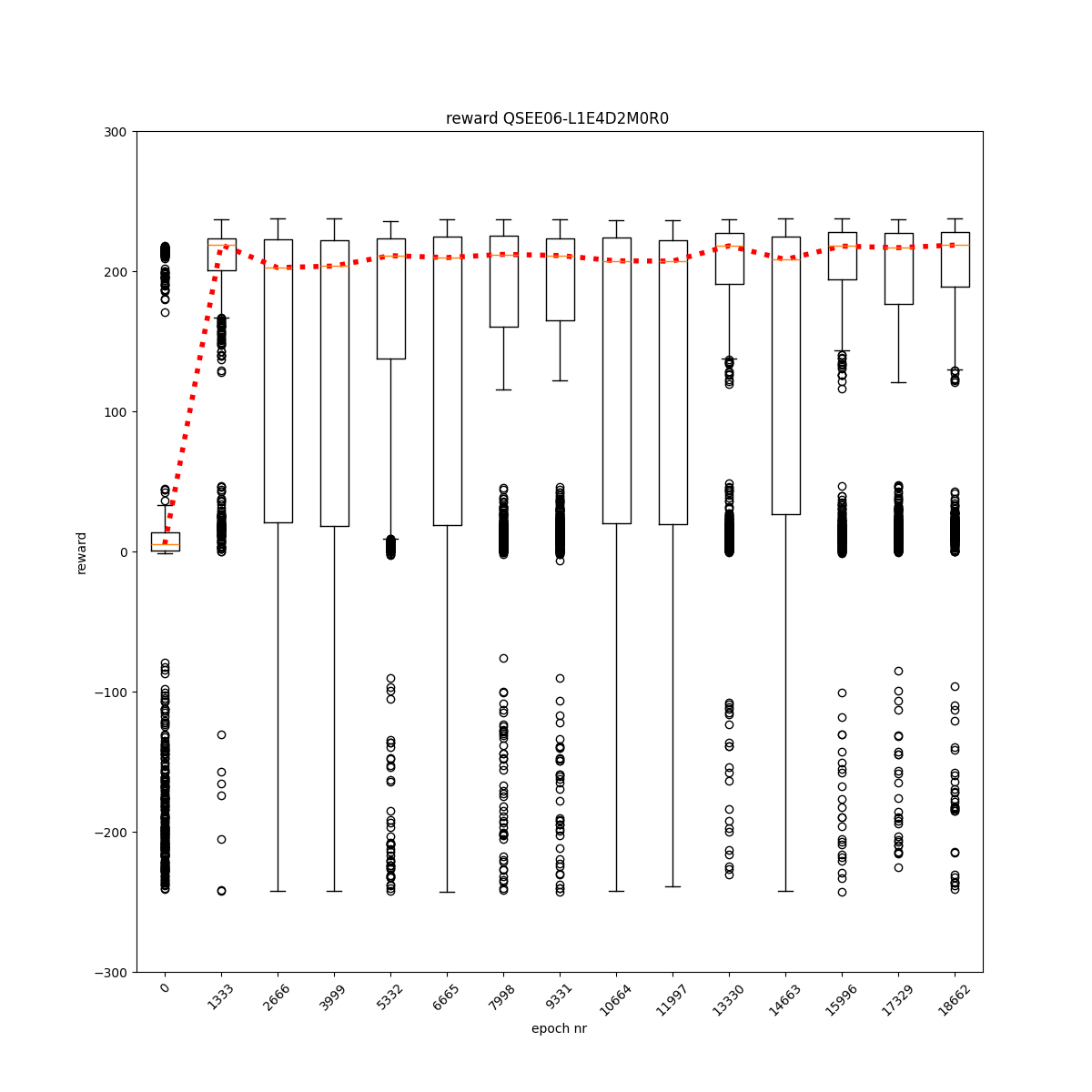

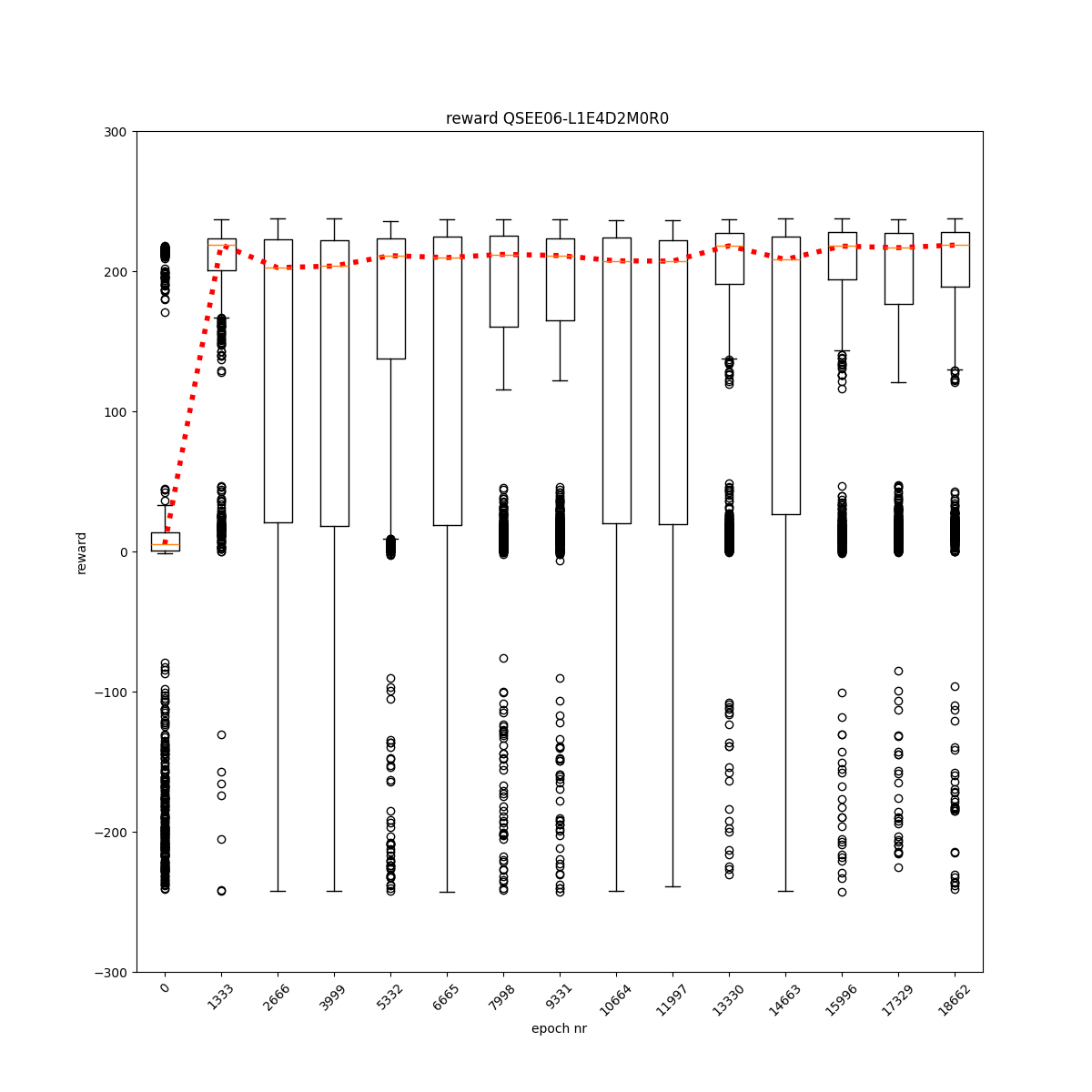

L1 E4 D2 M0 R0

q-values: videos 0-11 (two panels)

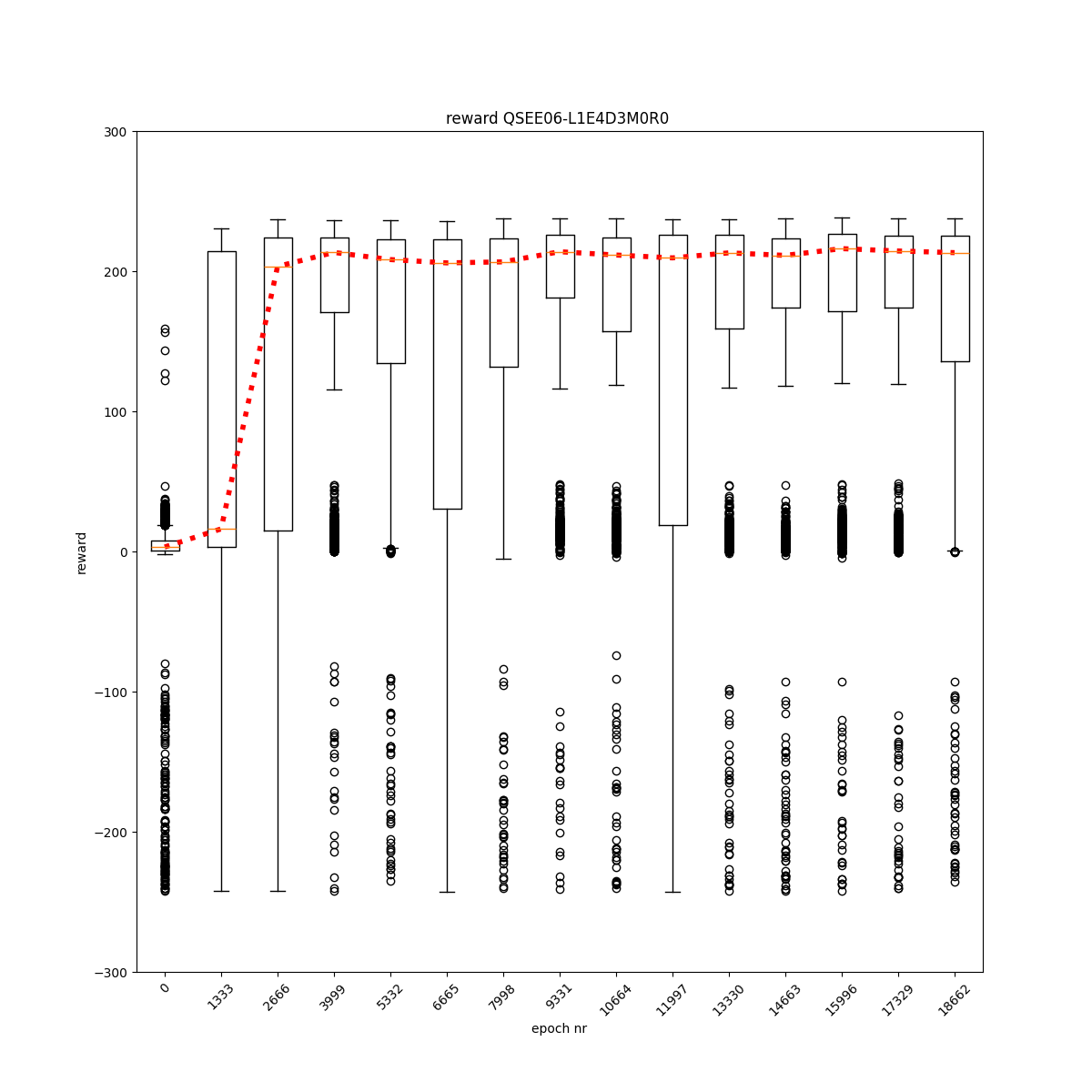

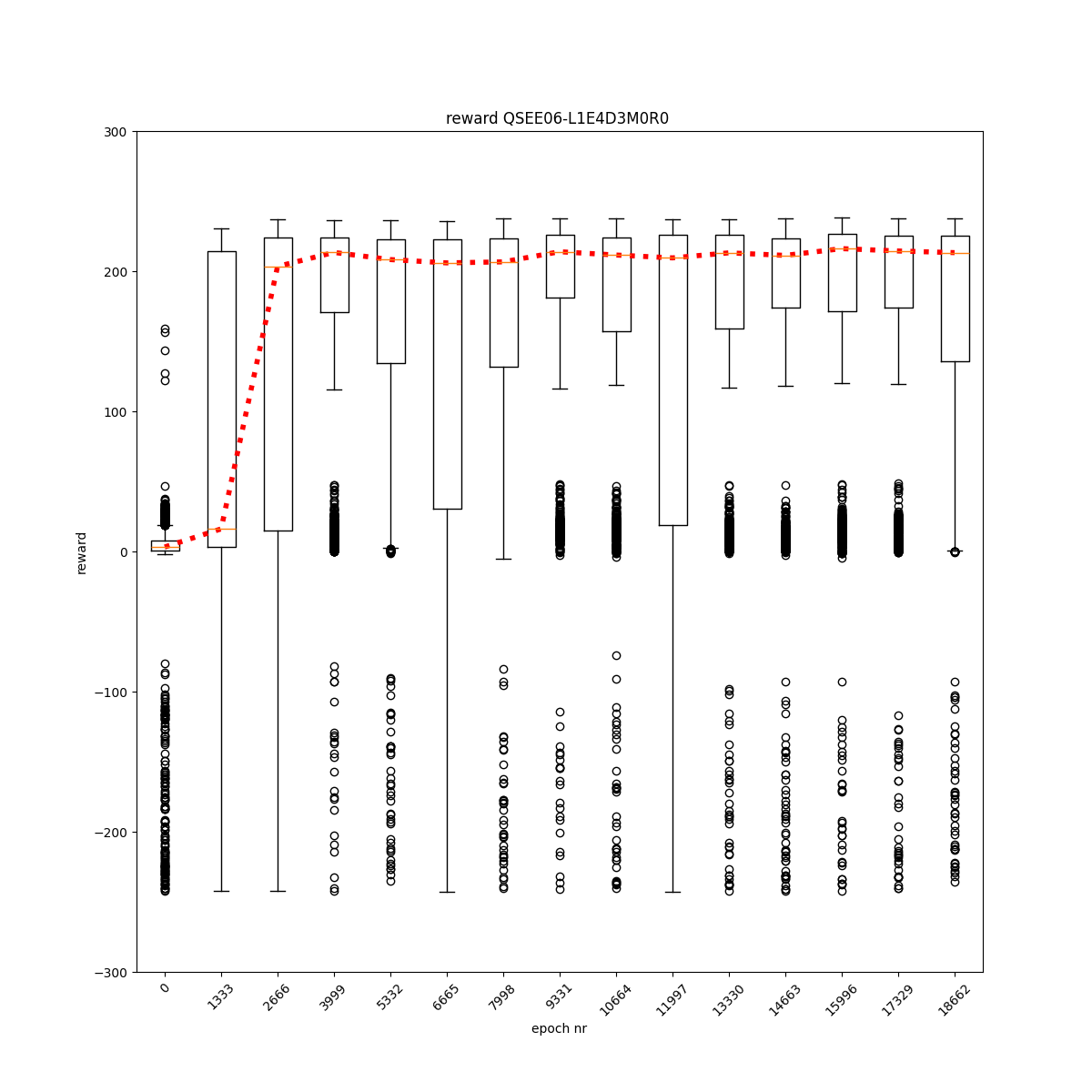

L1 E4 D3 M0 R0

q-values: videos 0-11 (two panels)

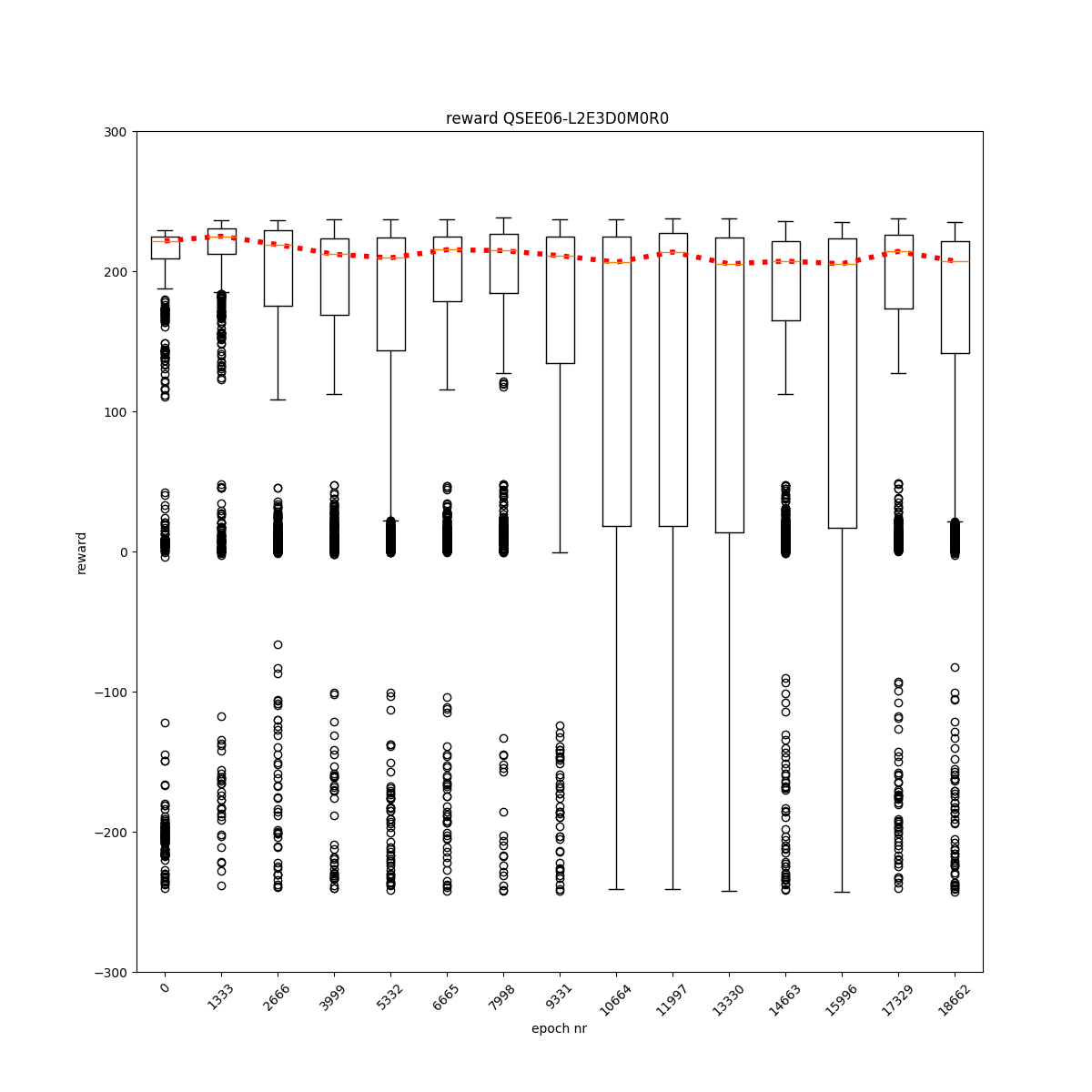

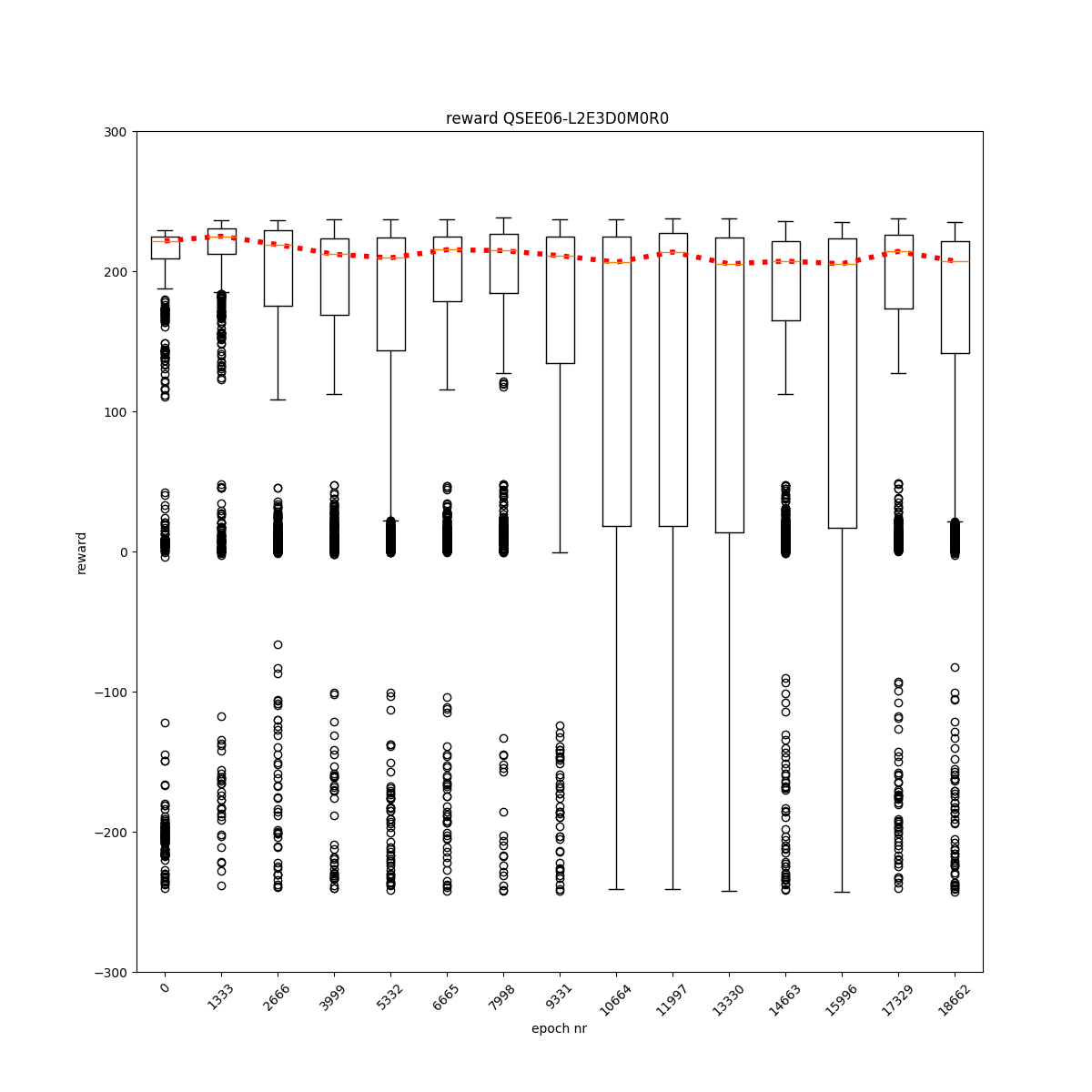

L2 E0 D0 M0 R0

q-values: videos 0-11 (two panels)

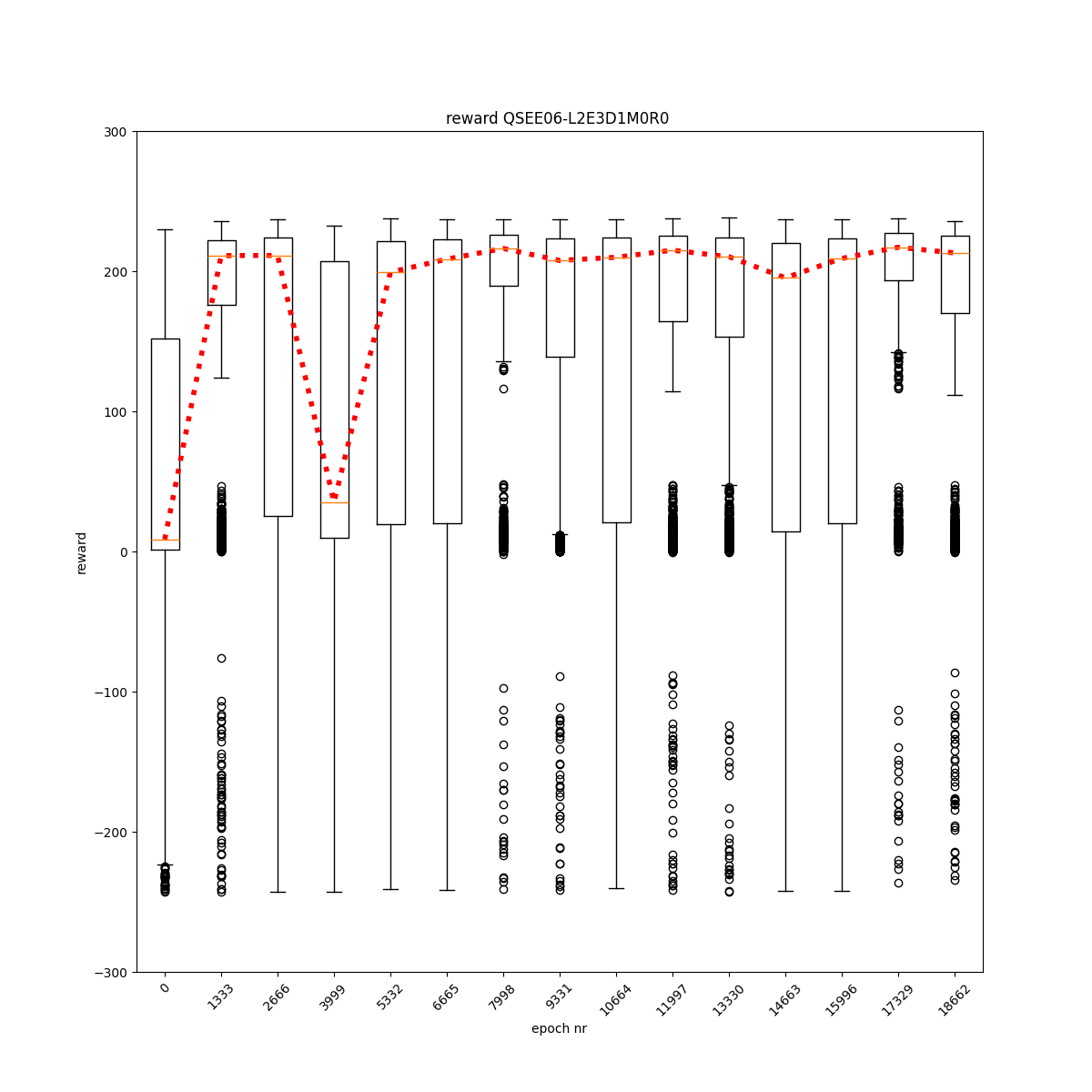

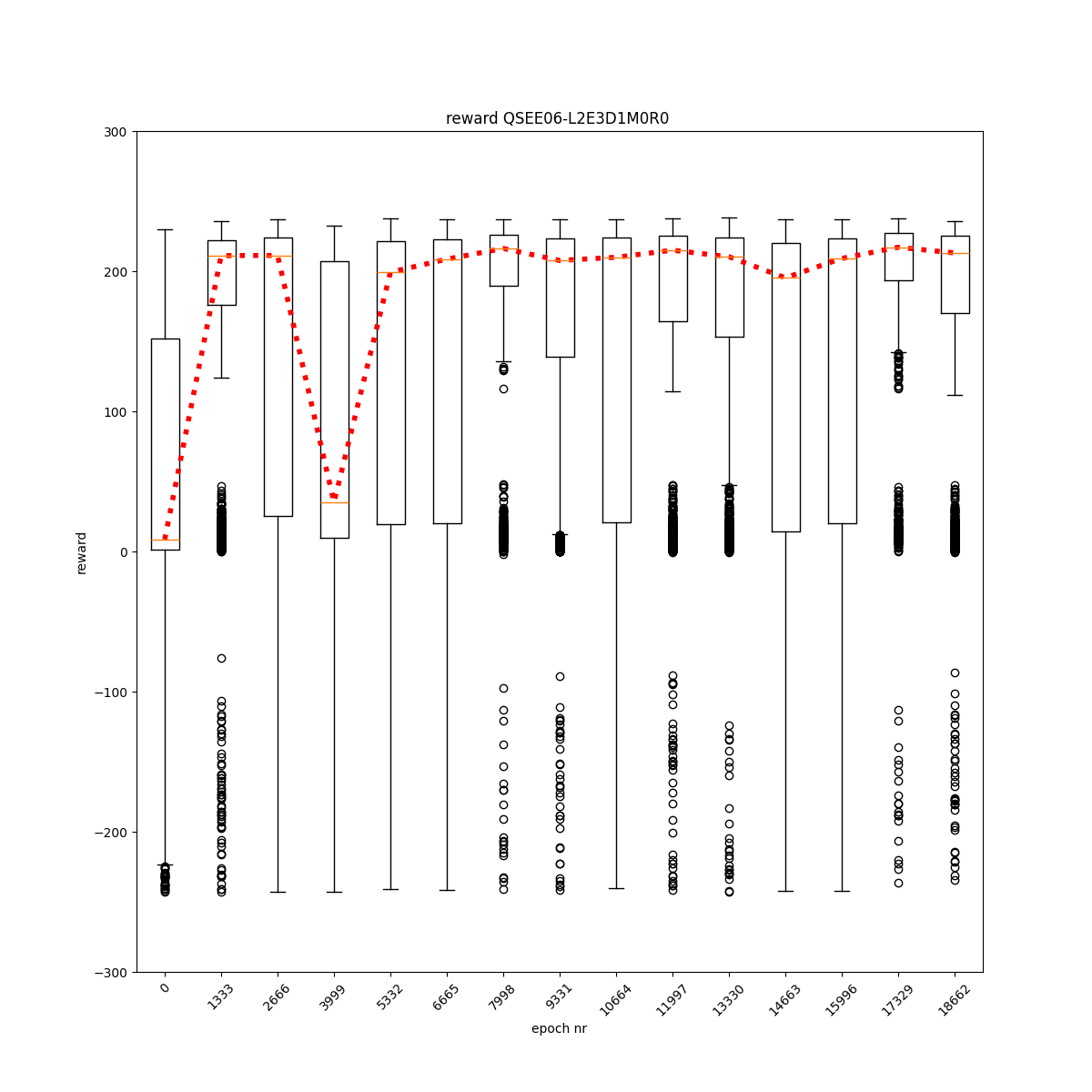

L2 E0 D1 M0 R0

q-values: videos 0-11 (two panels)

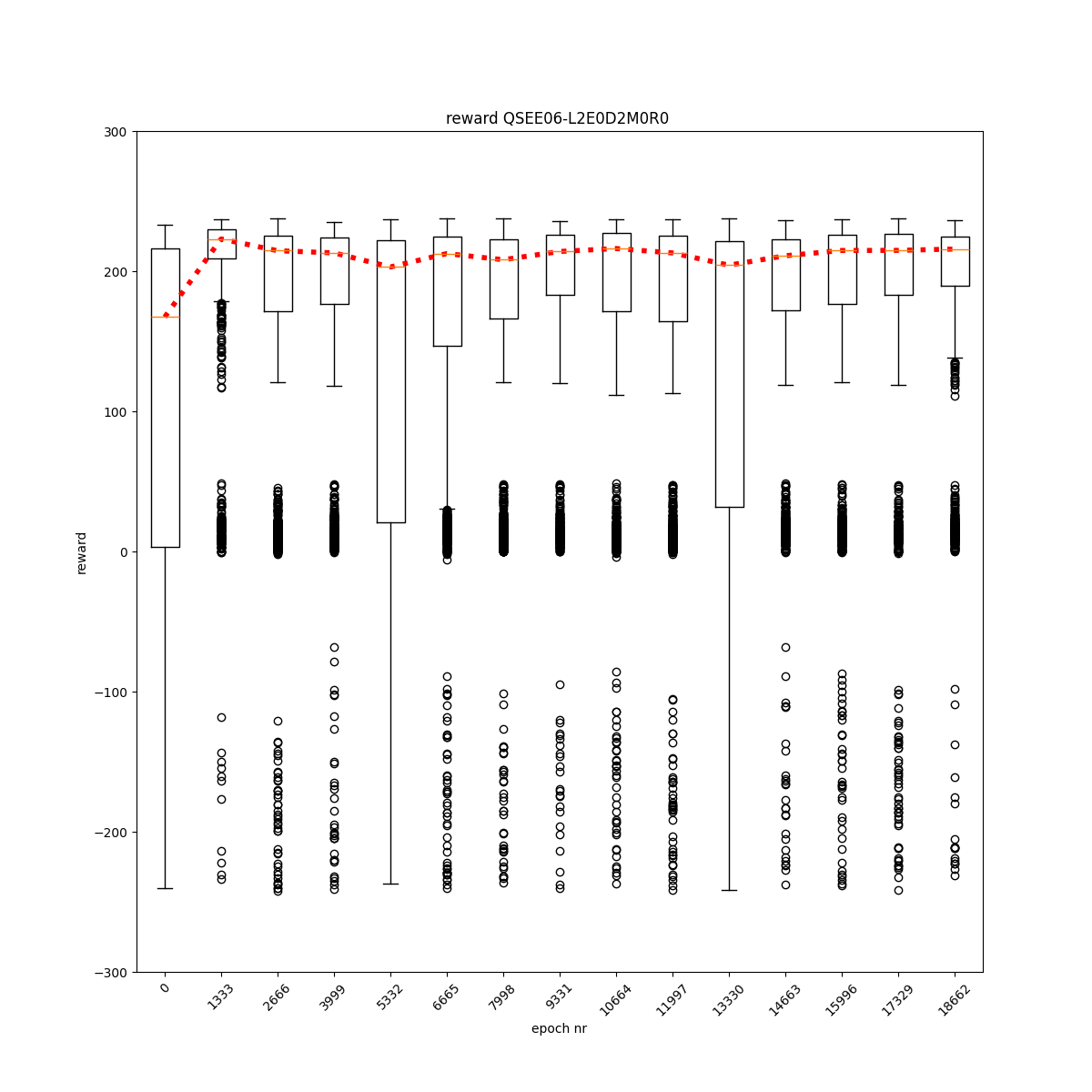

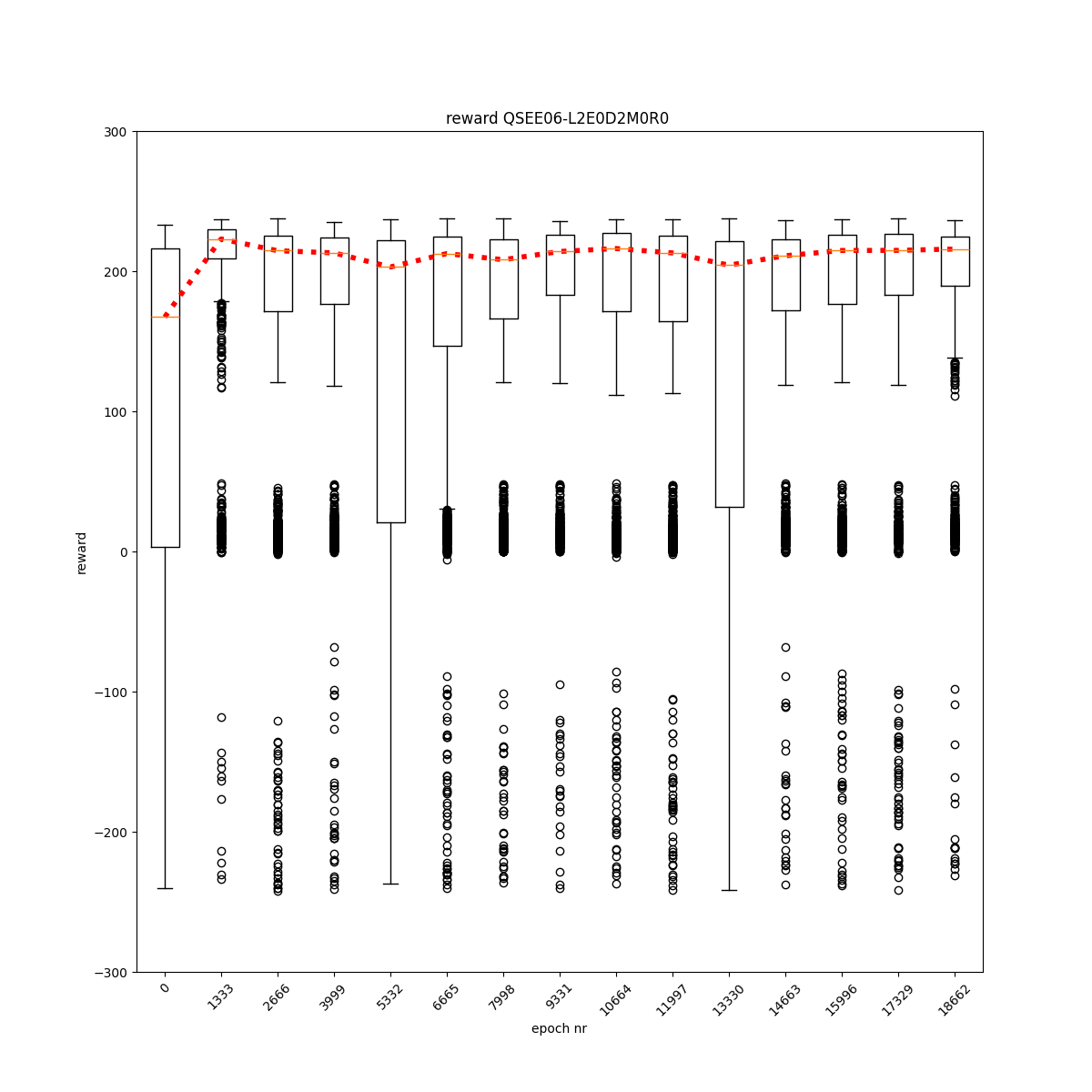

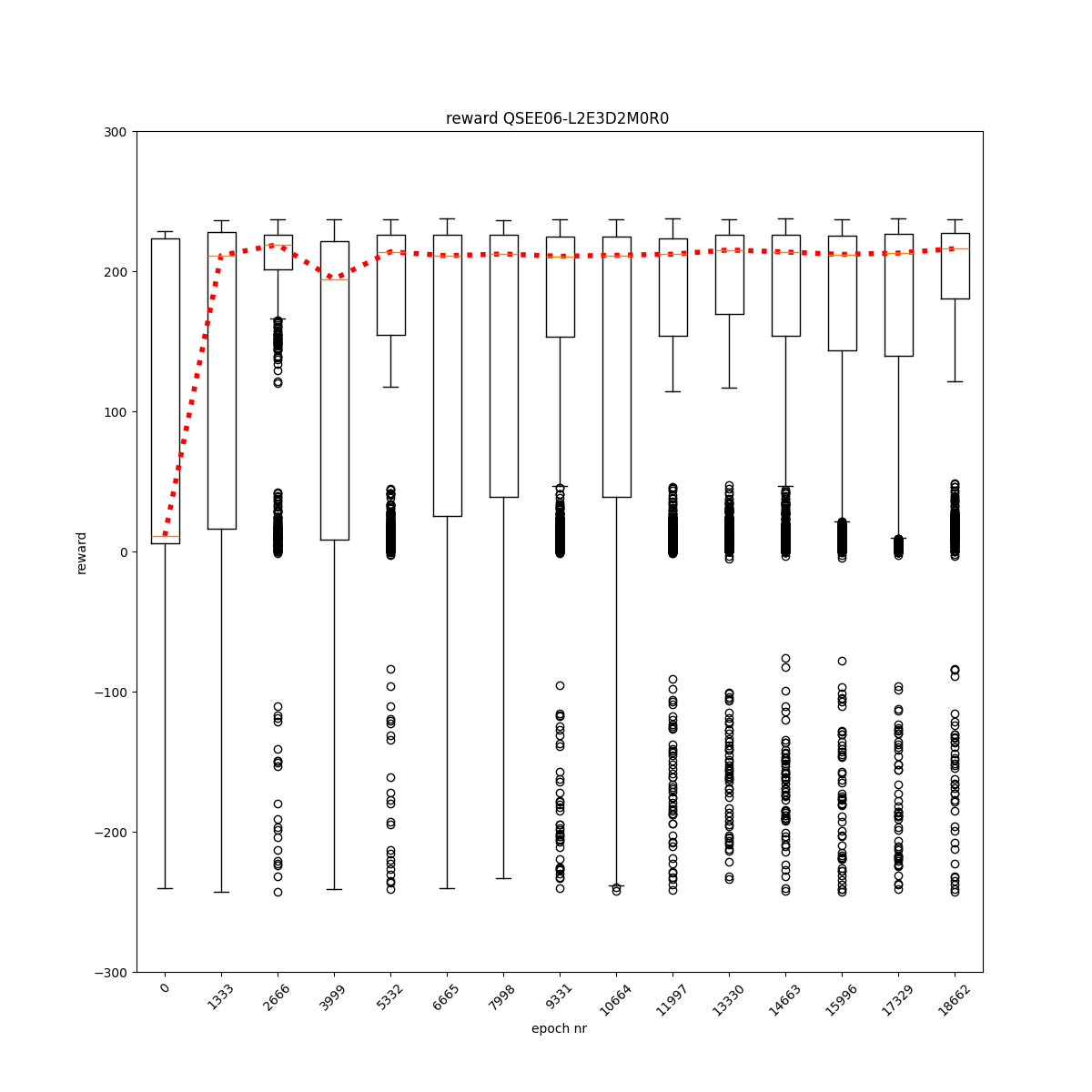

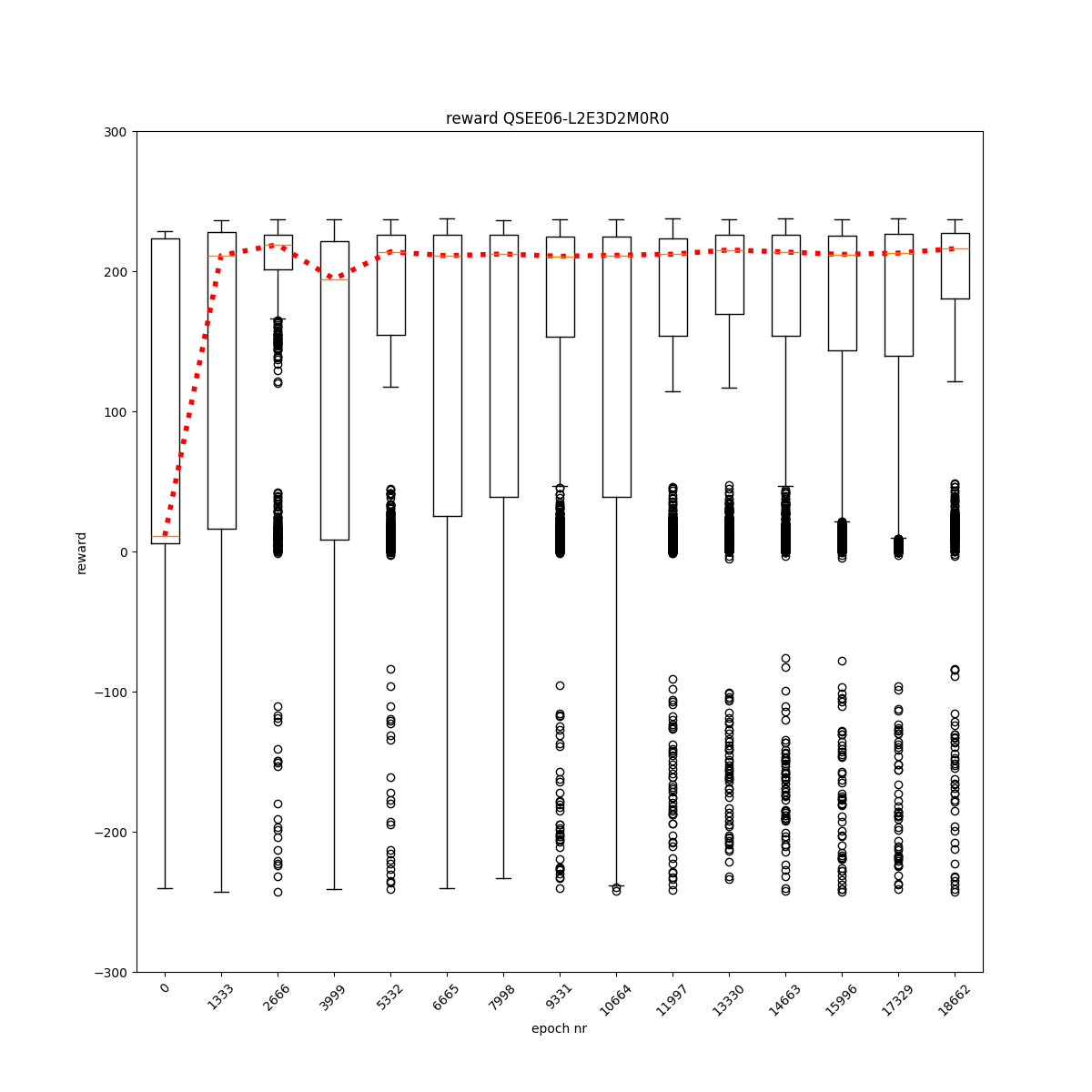

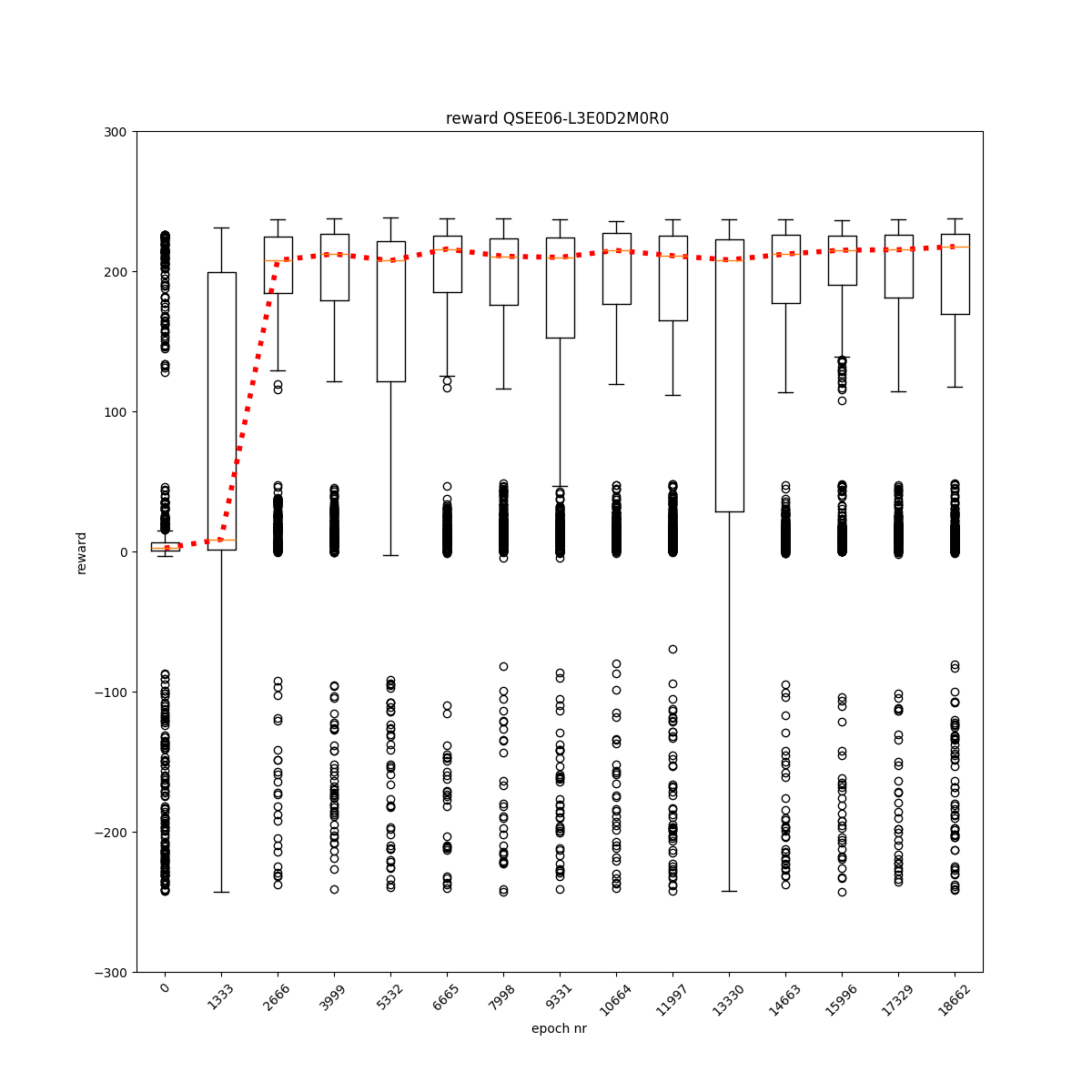

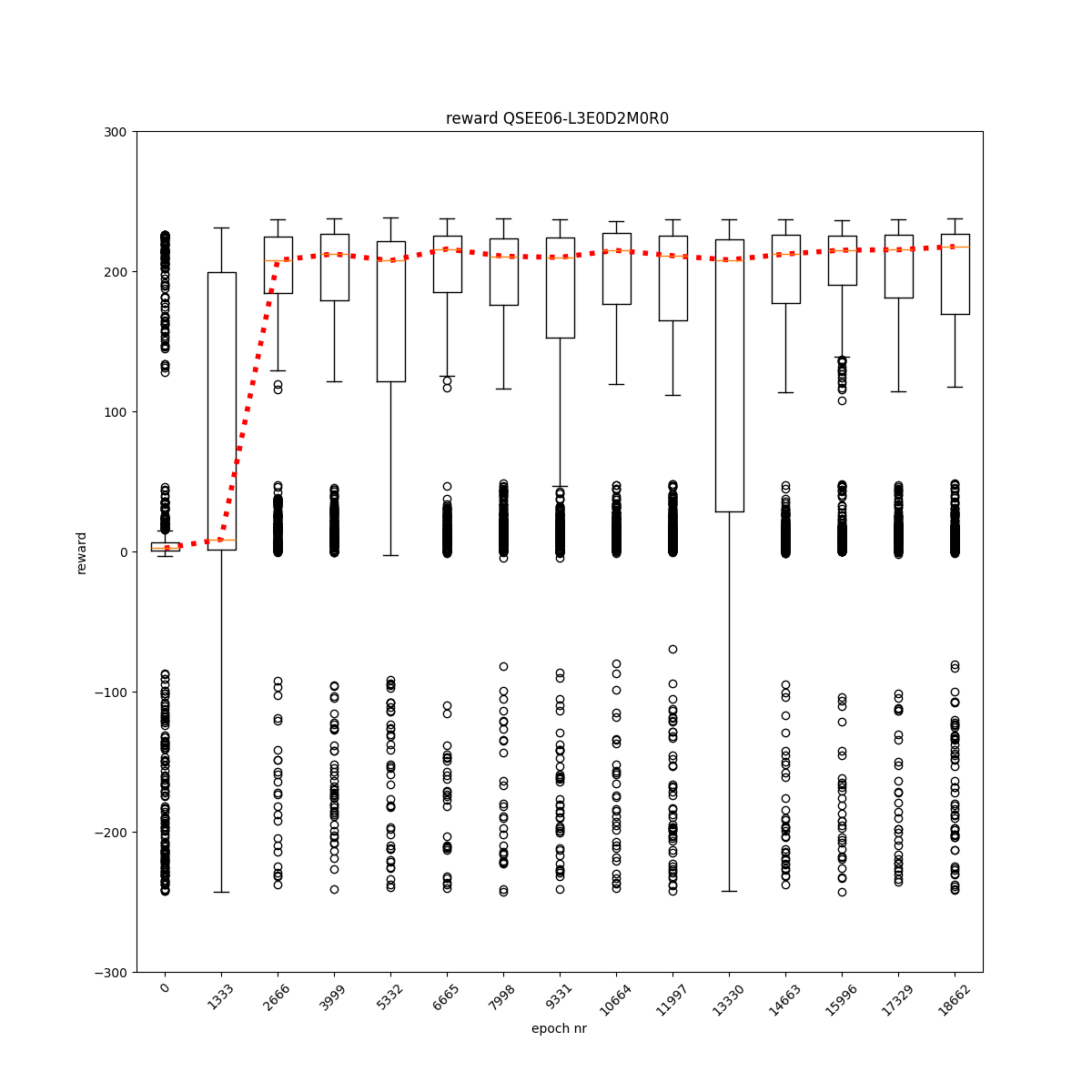

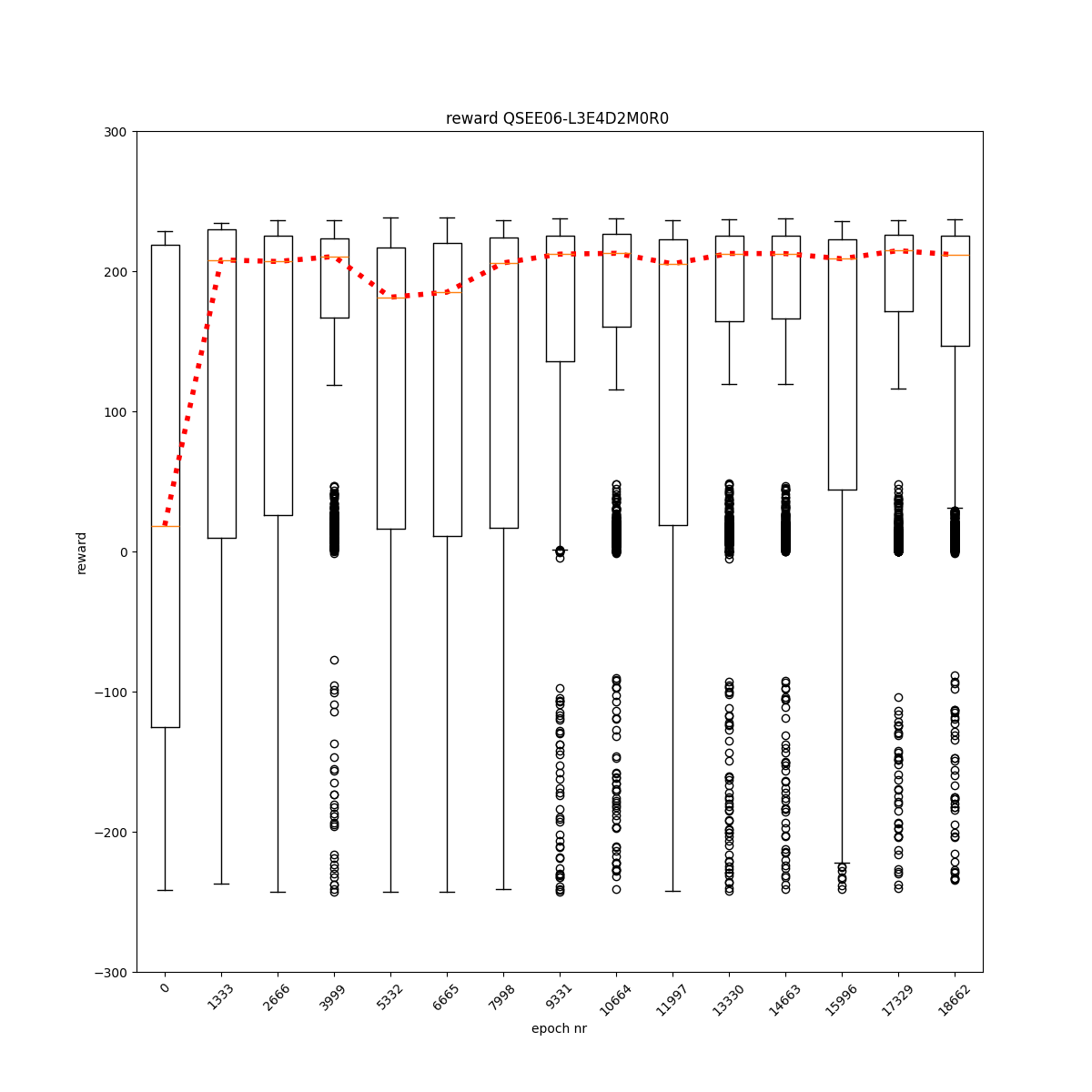

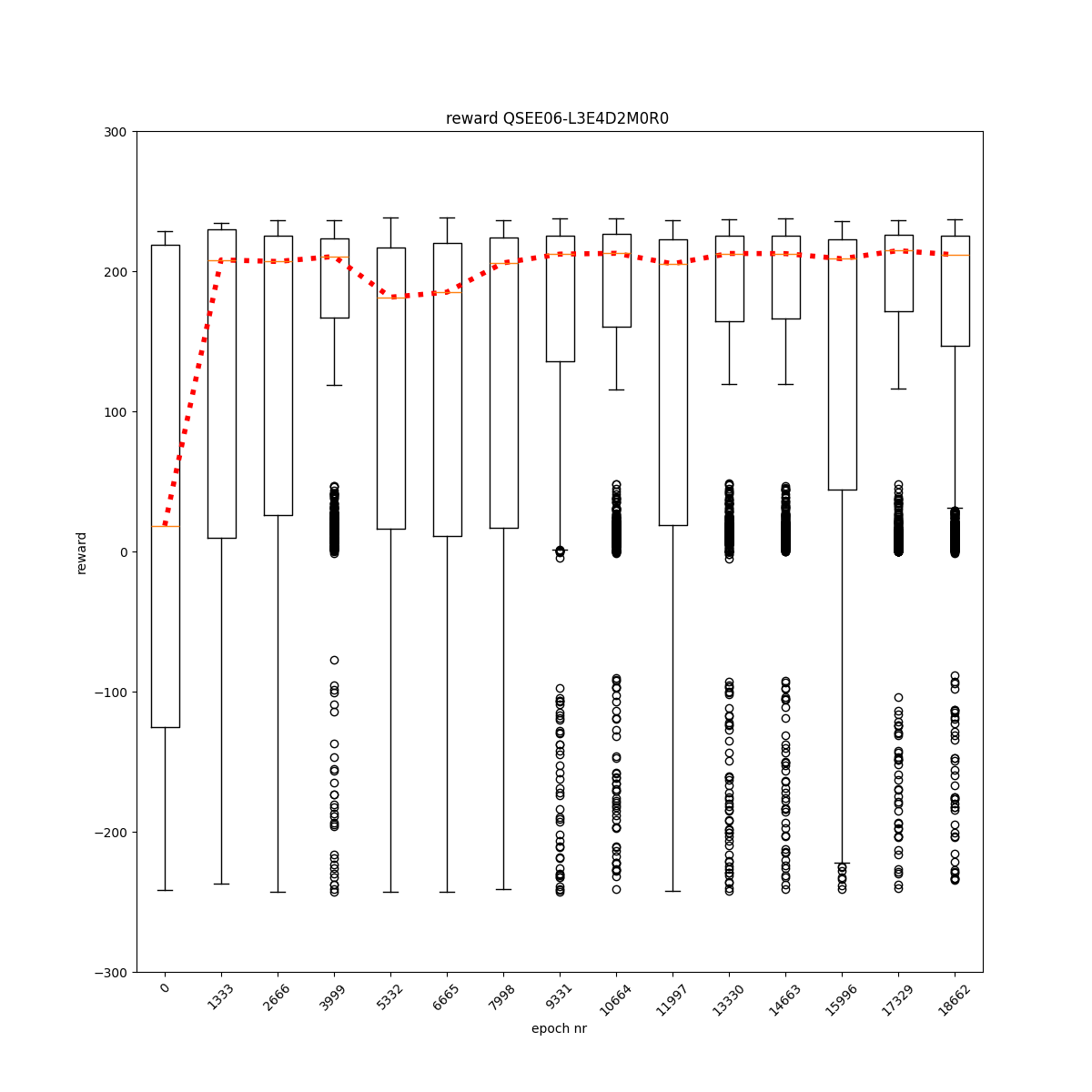

L2 E0 D2 M0 R0

q-values: videos 0-11 (two panels)

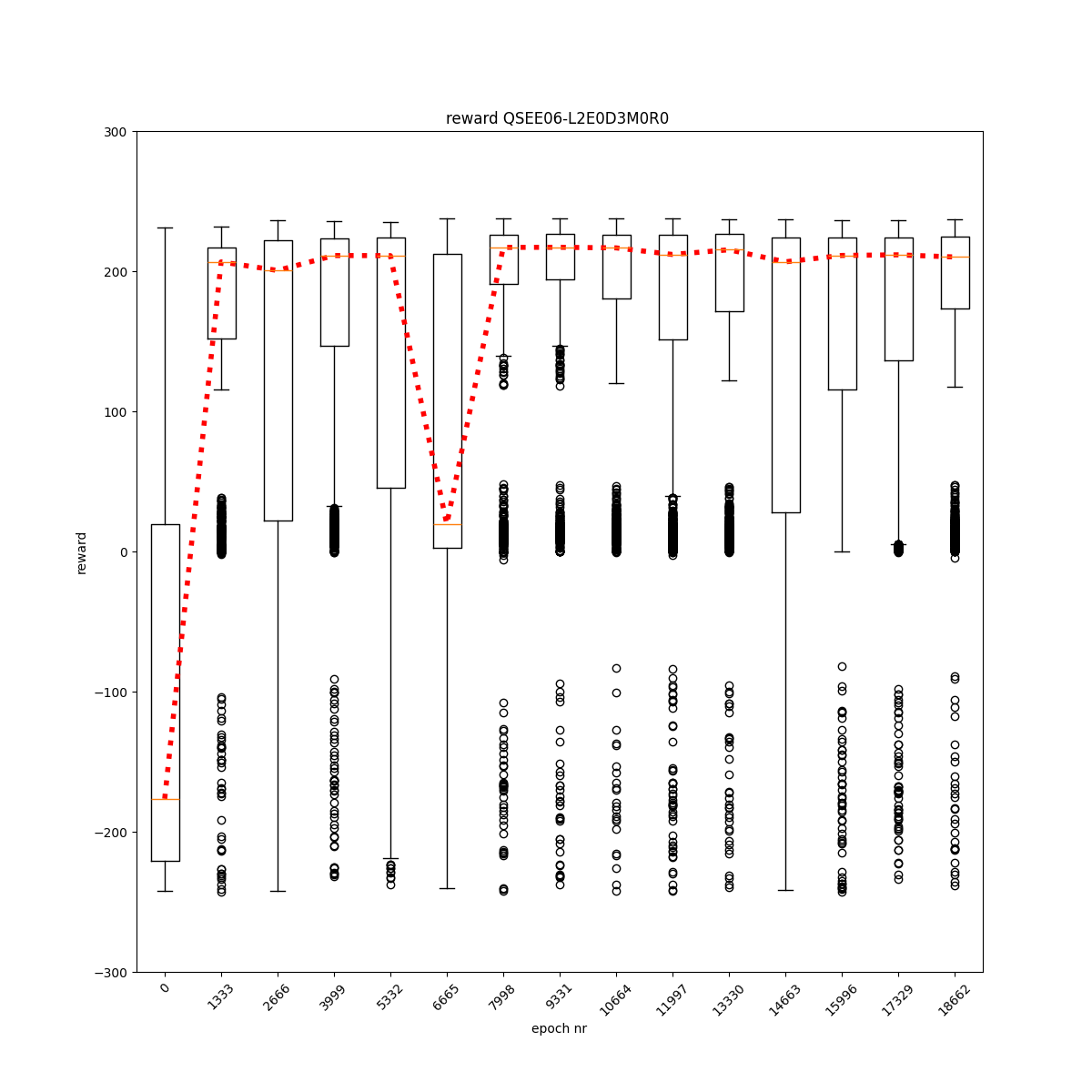

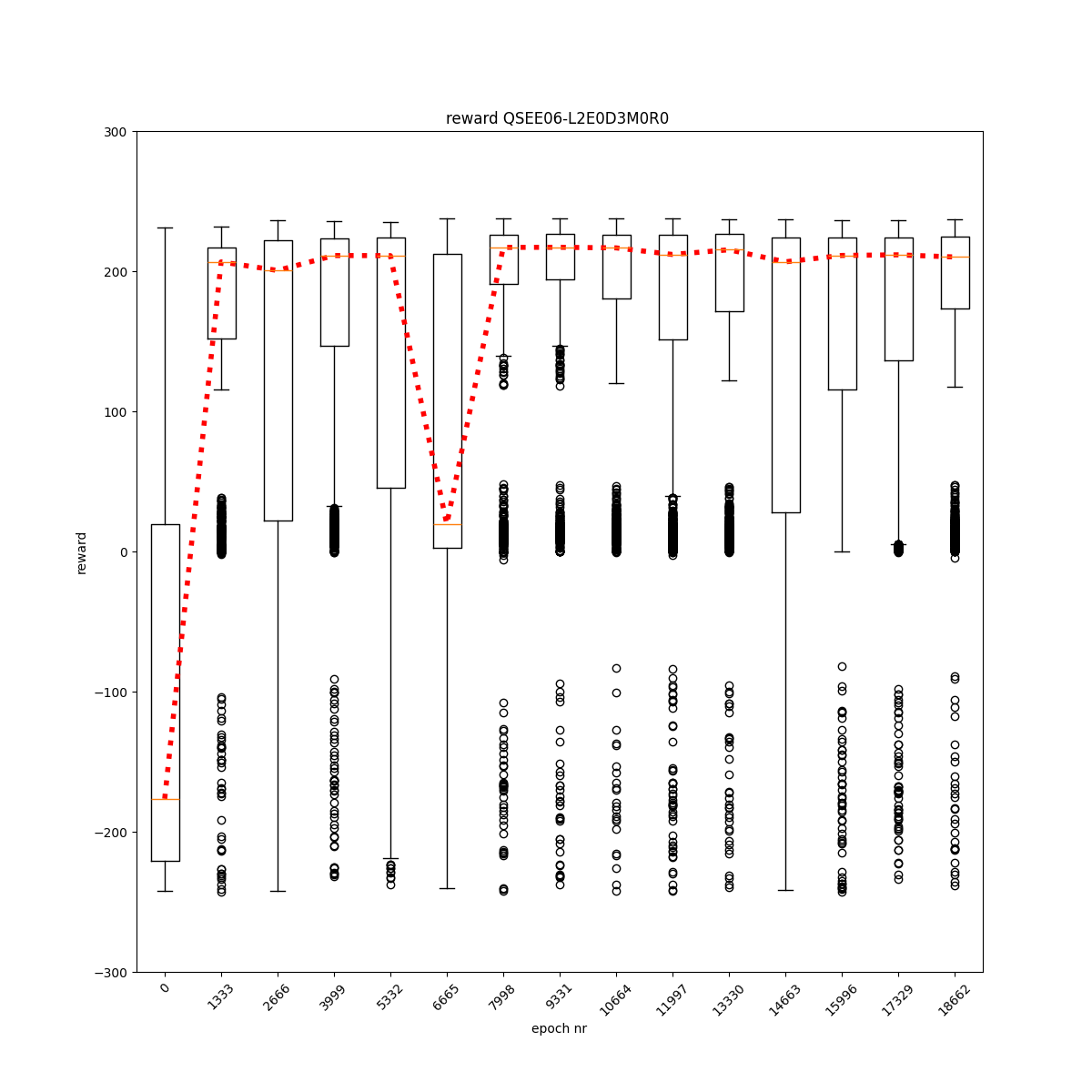

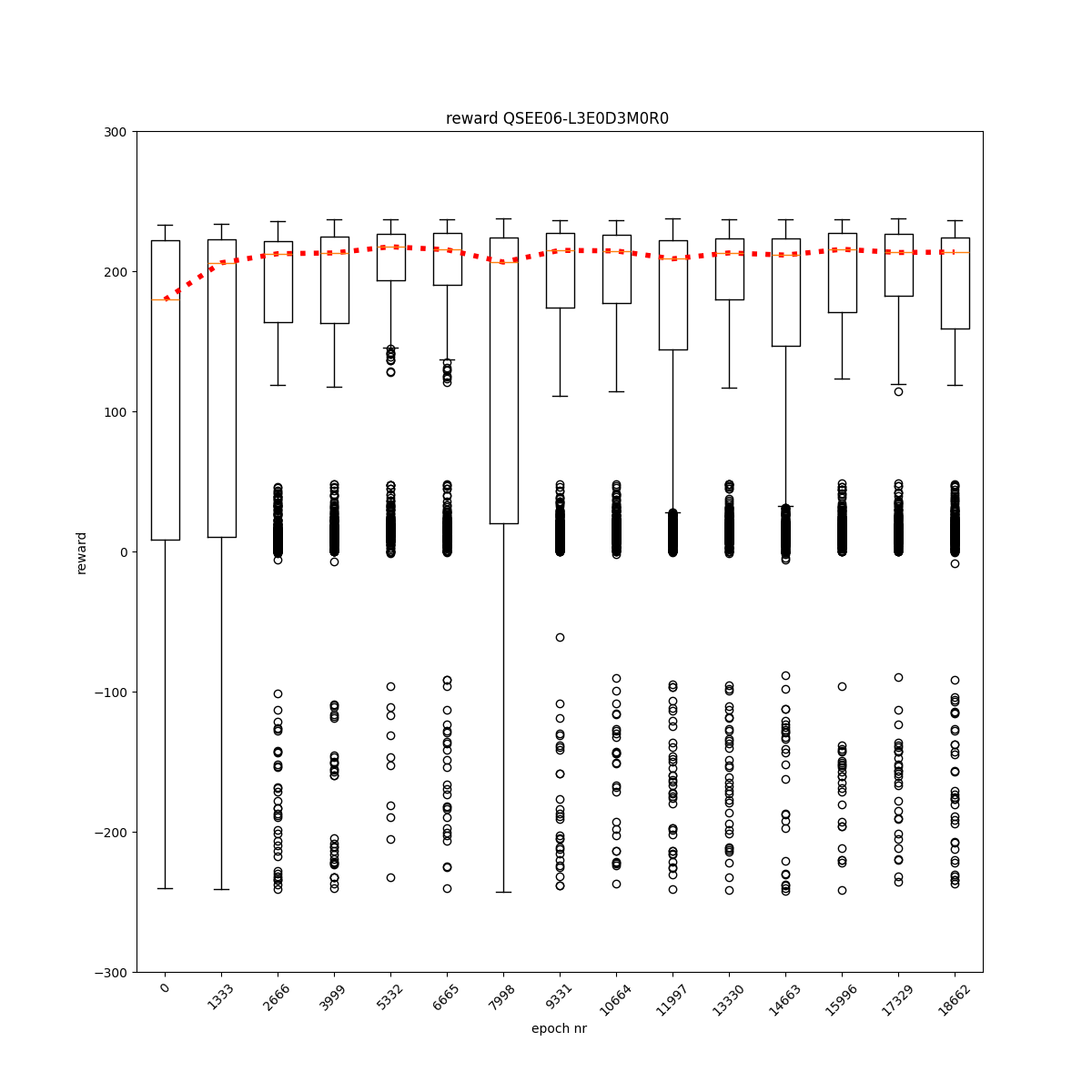

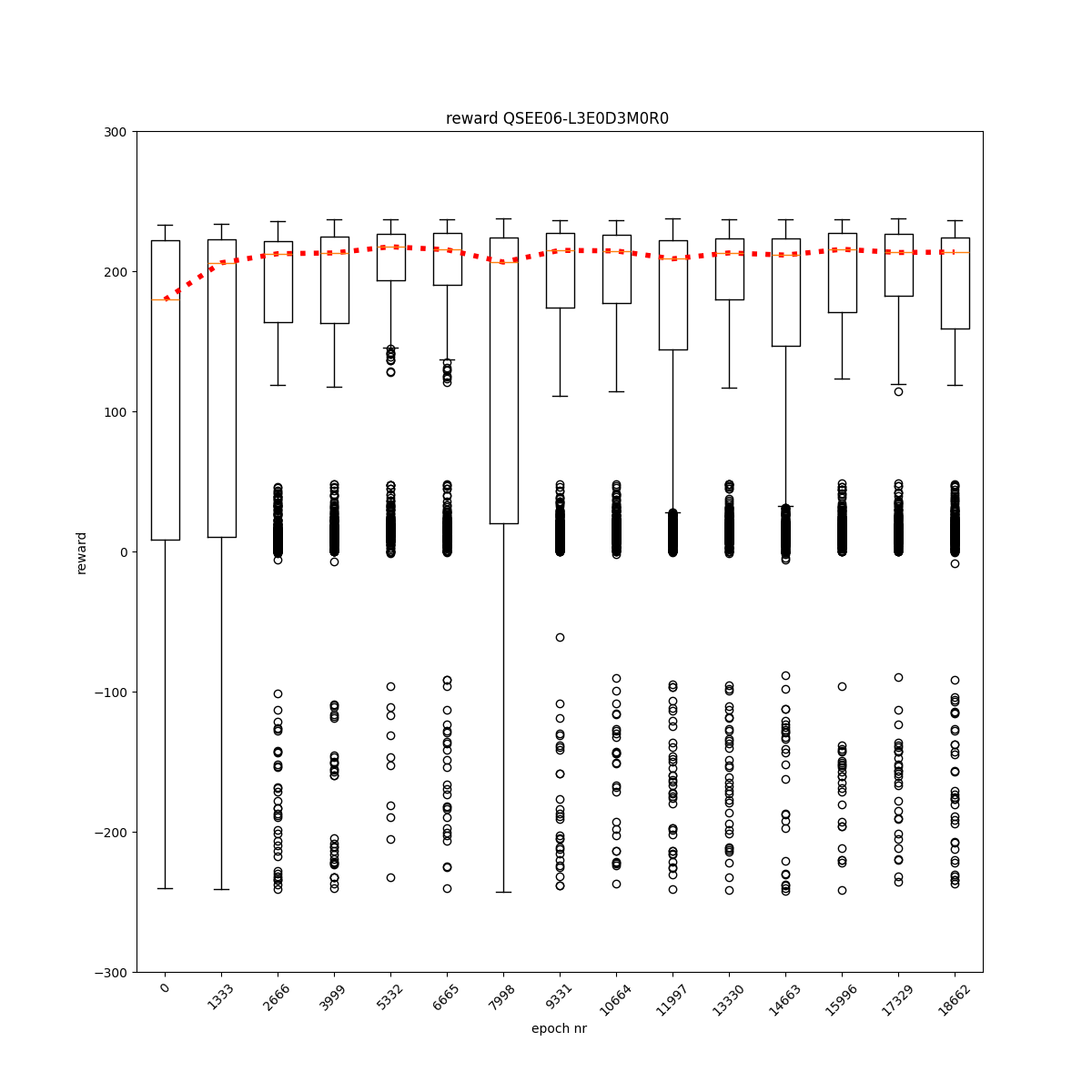

L2 E0 D3 M0 R0

q-values: videos 0-11 (two panels)

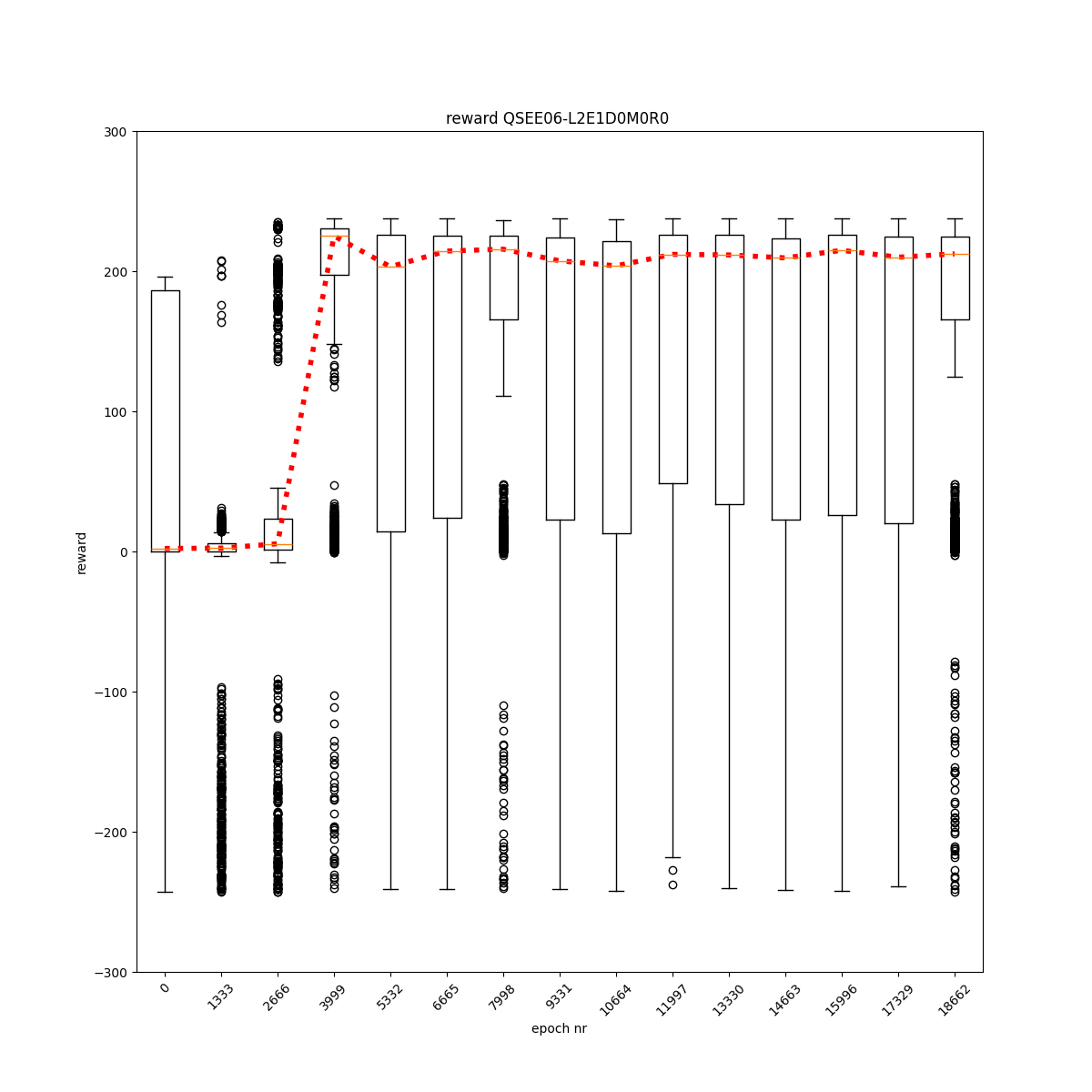

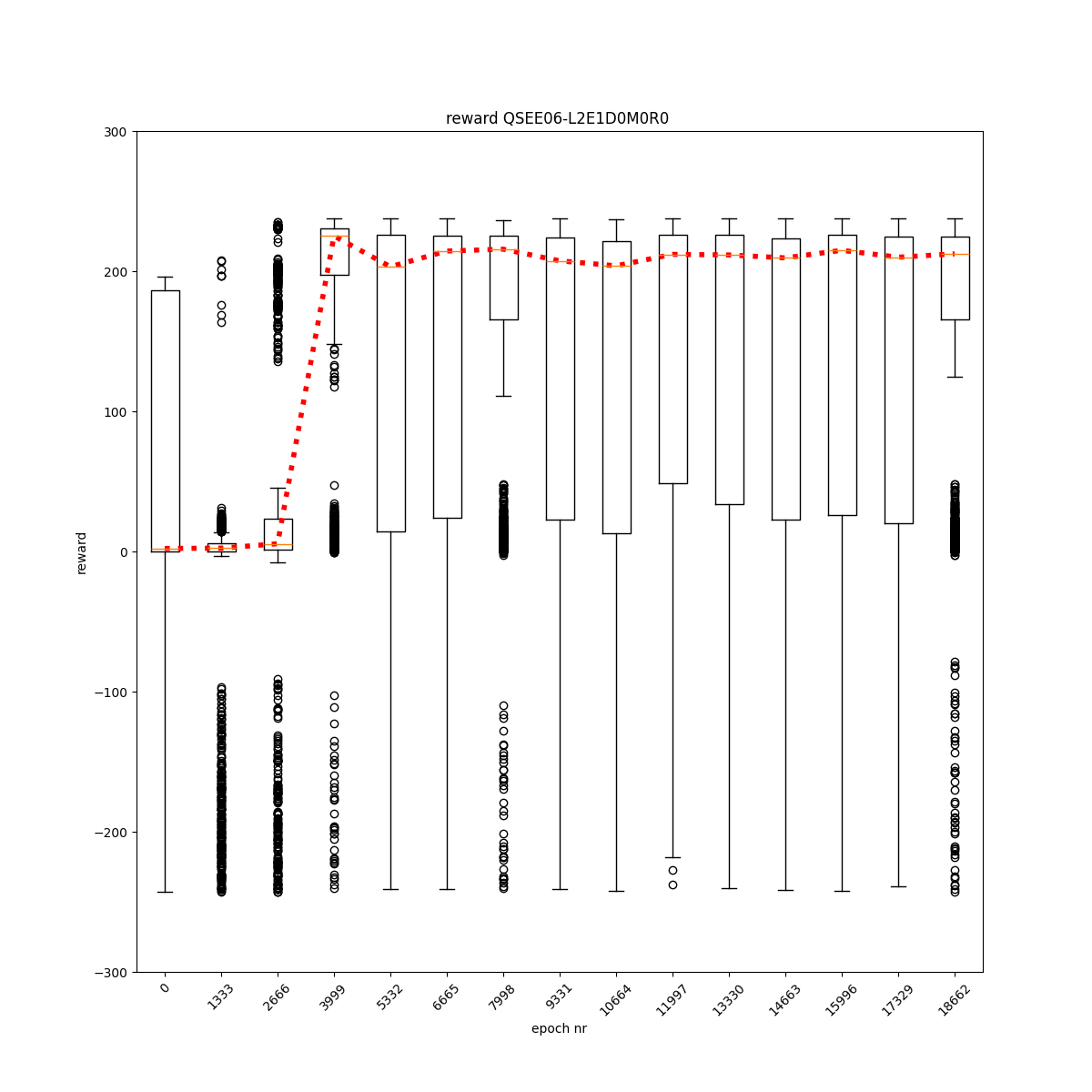

L2 E1 D0 M0 R0

q-values: videos 0-11 (two panels)

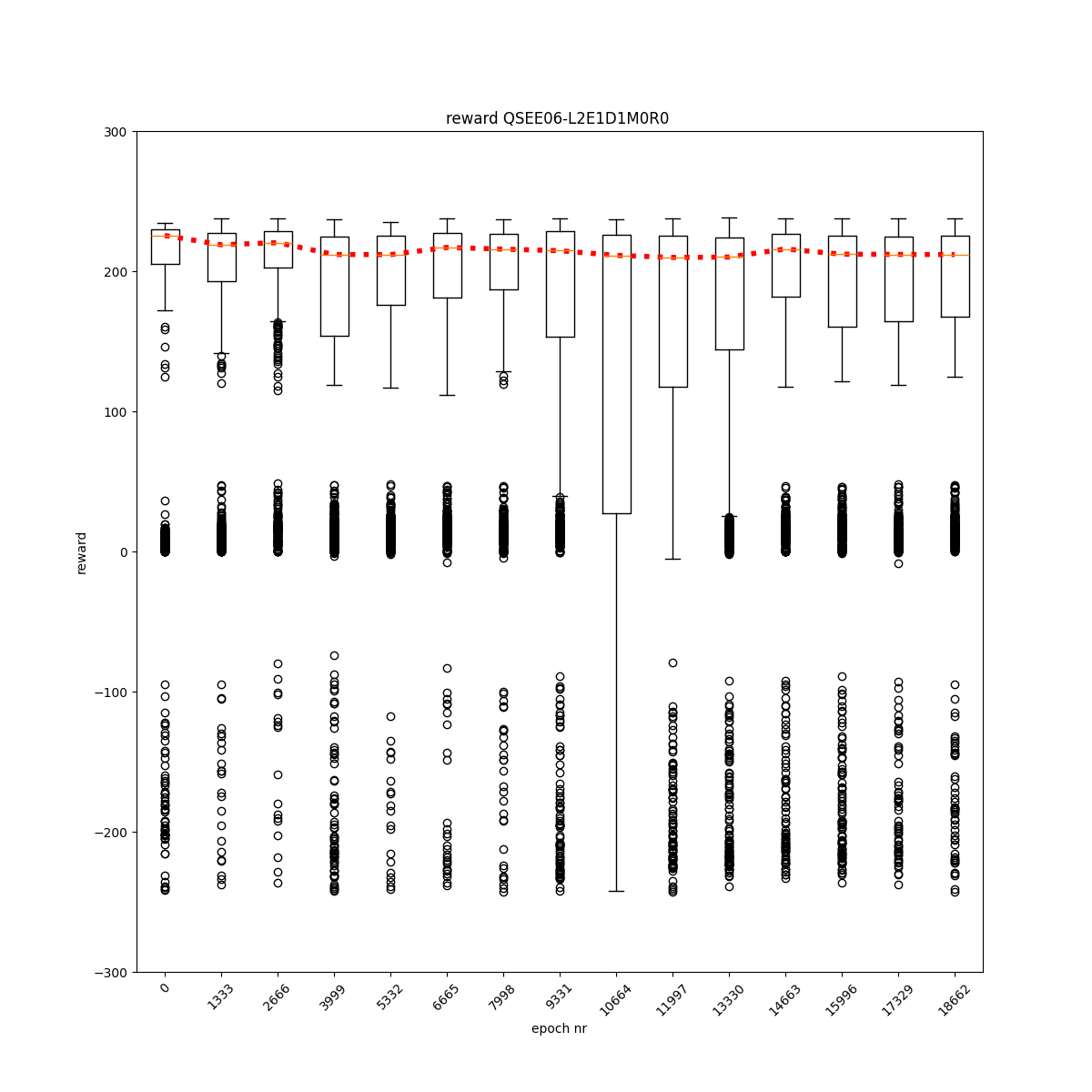

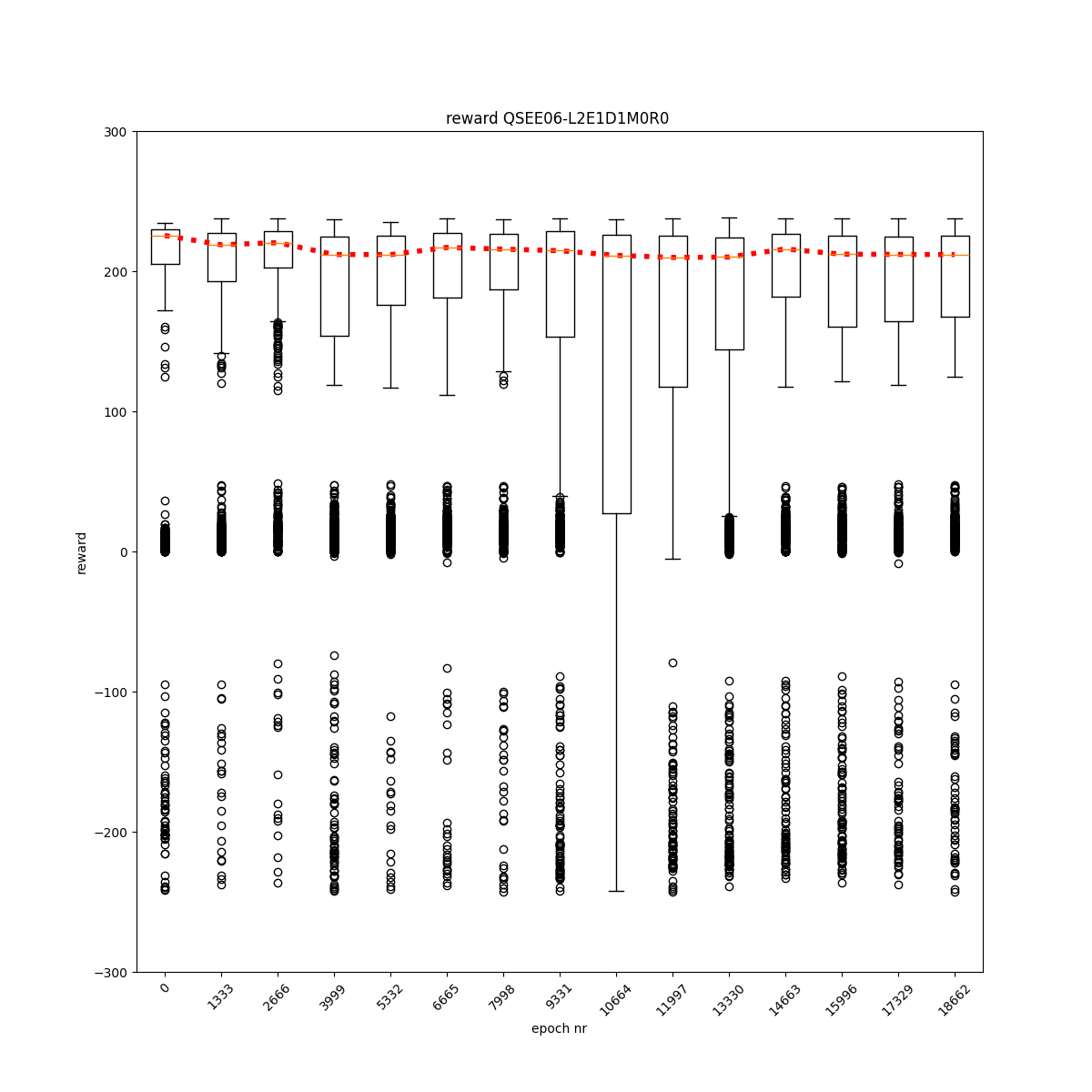

L2 E1 D1 M0 R0

q-values: videos 0-11 (two panels)

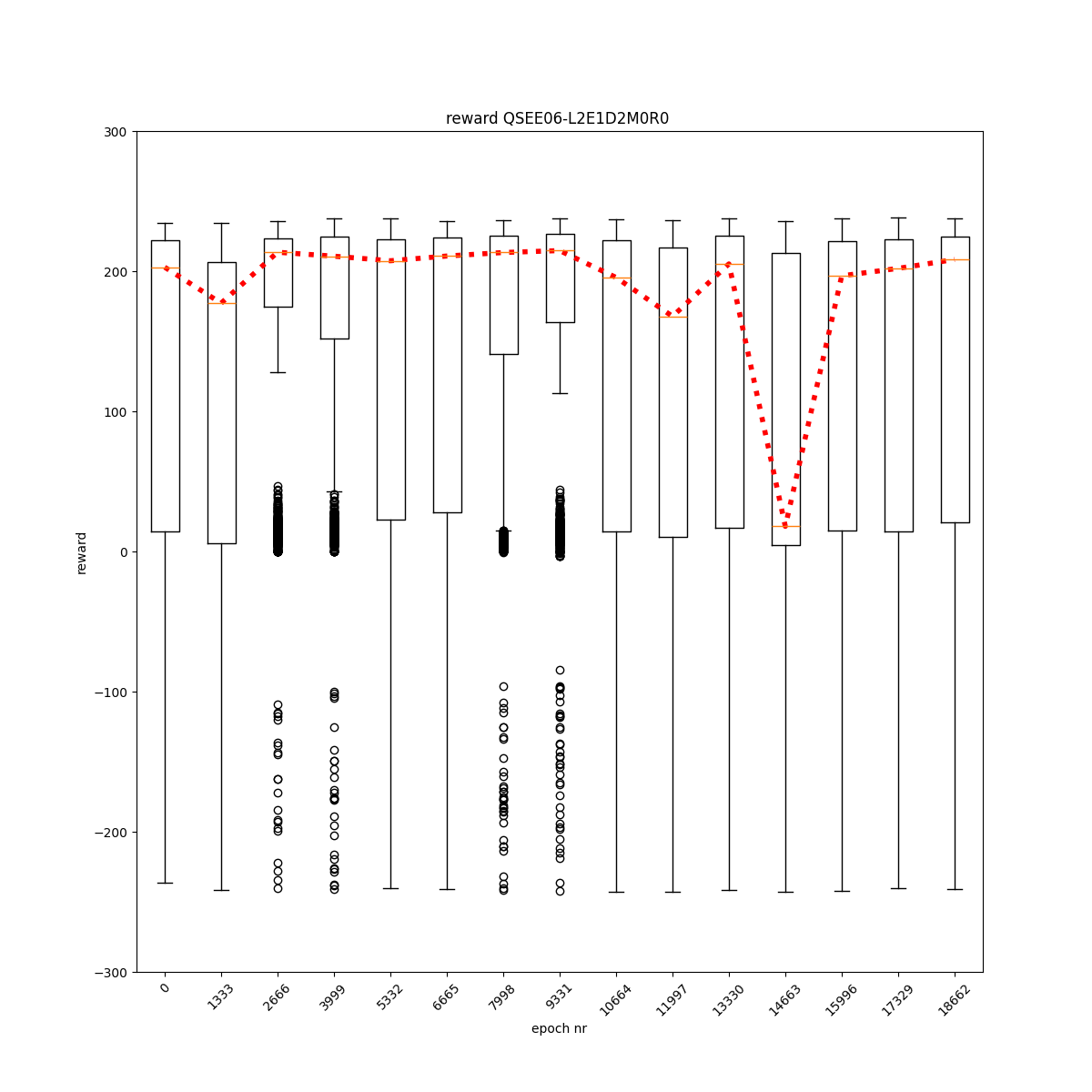

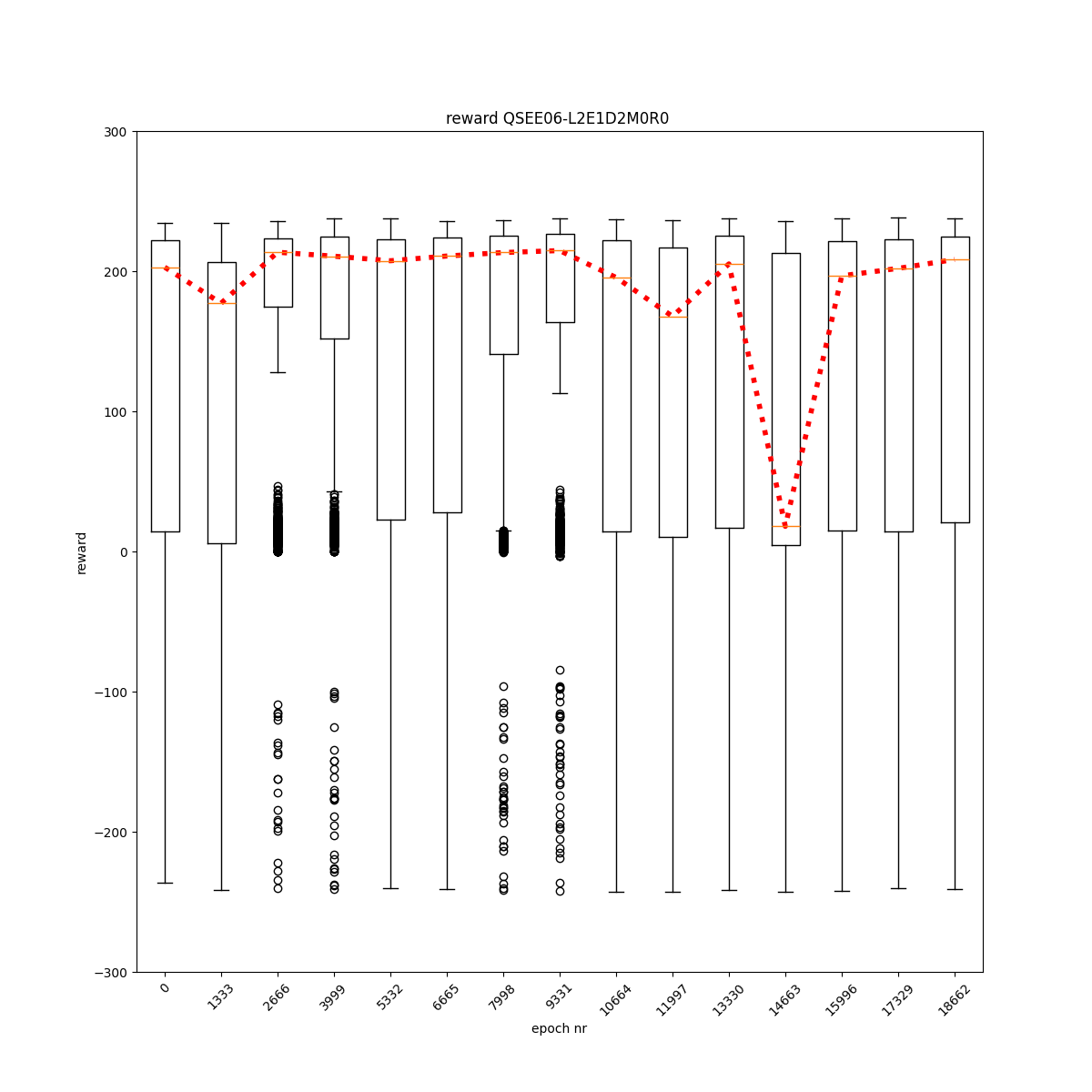

L2 E1 D2 M0 R0

q-values: videos 0-11 (two panels)

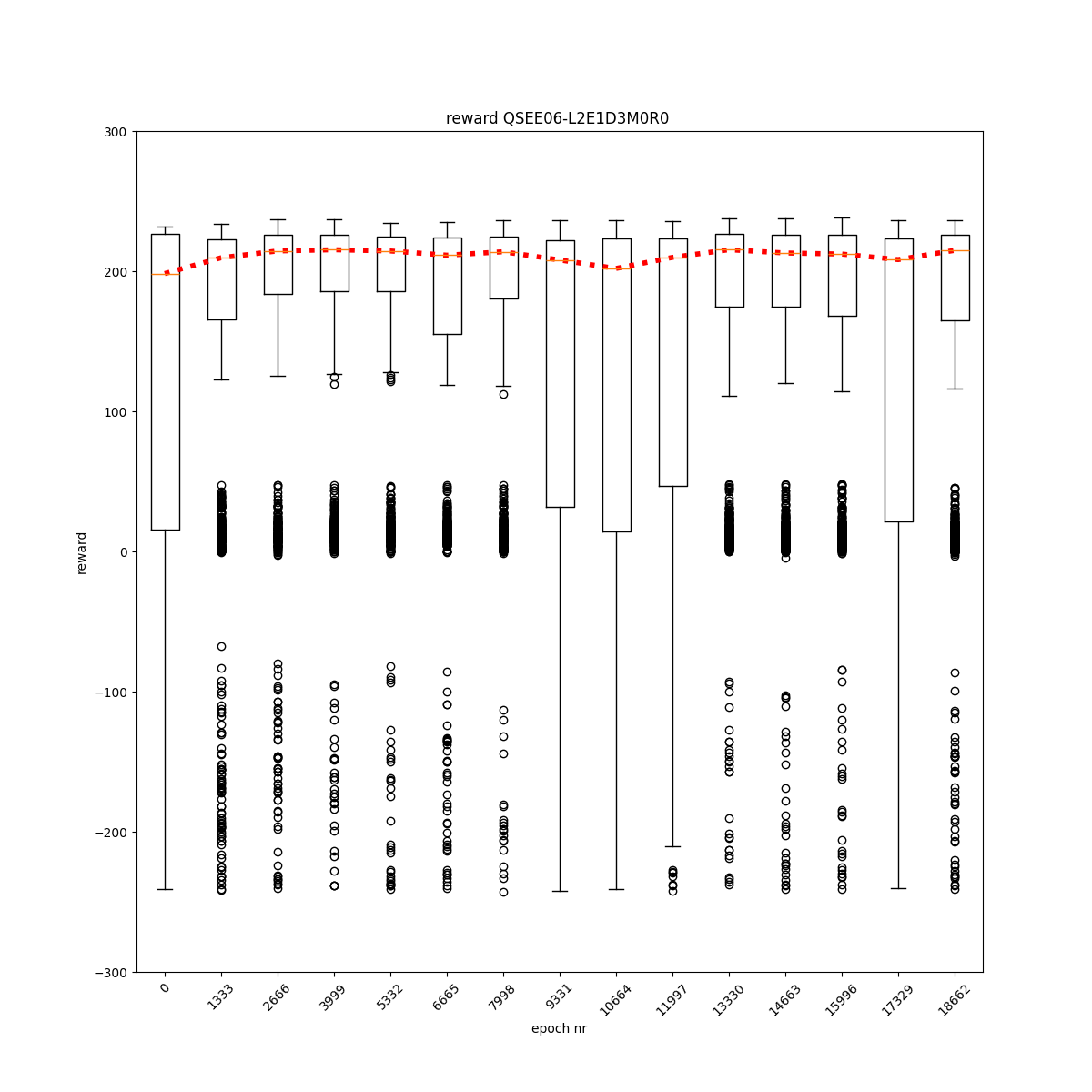

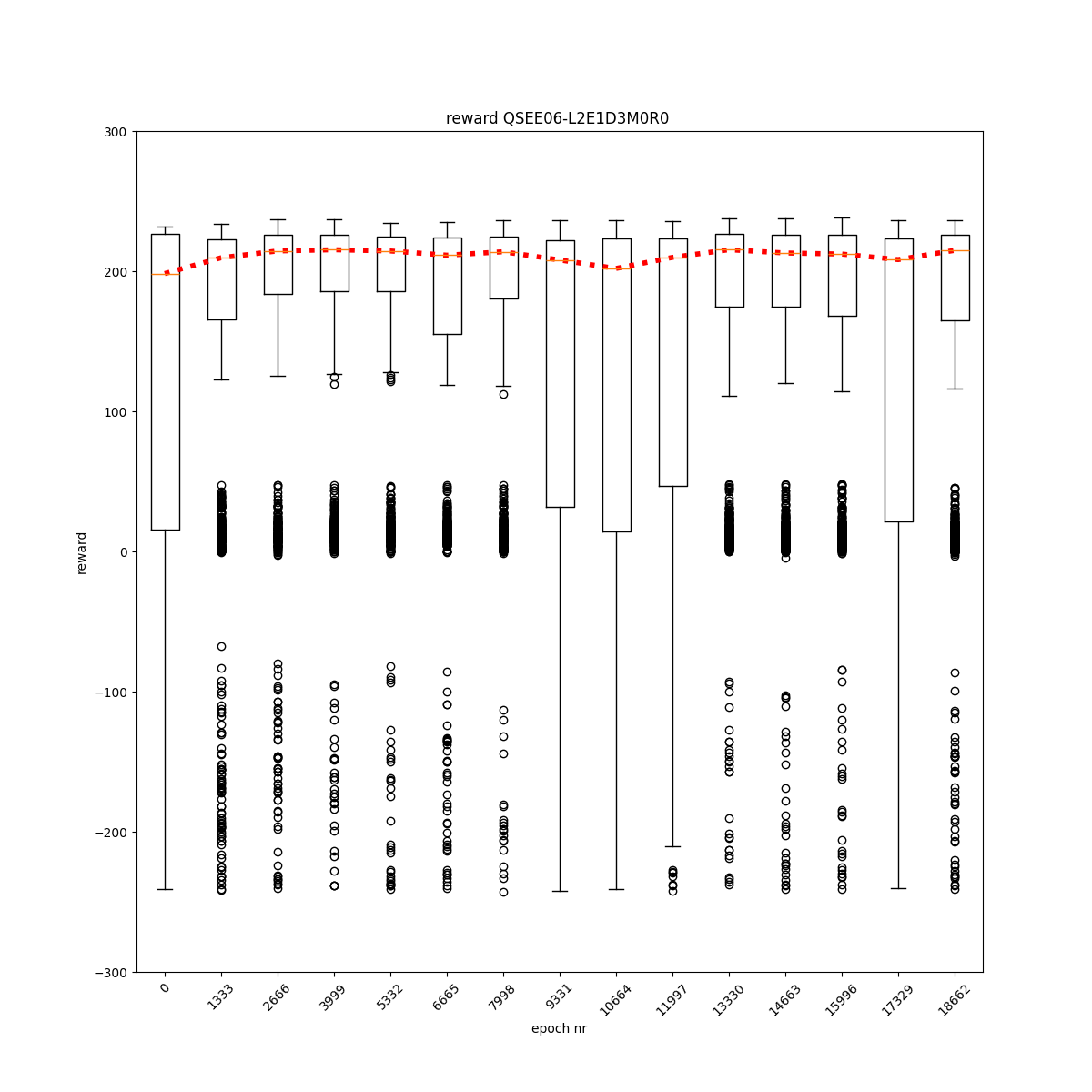

L2 E1 D3 M0 R0

q-values: videos 0-11 (two panels)

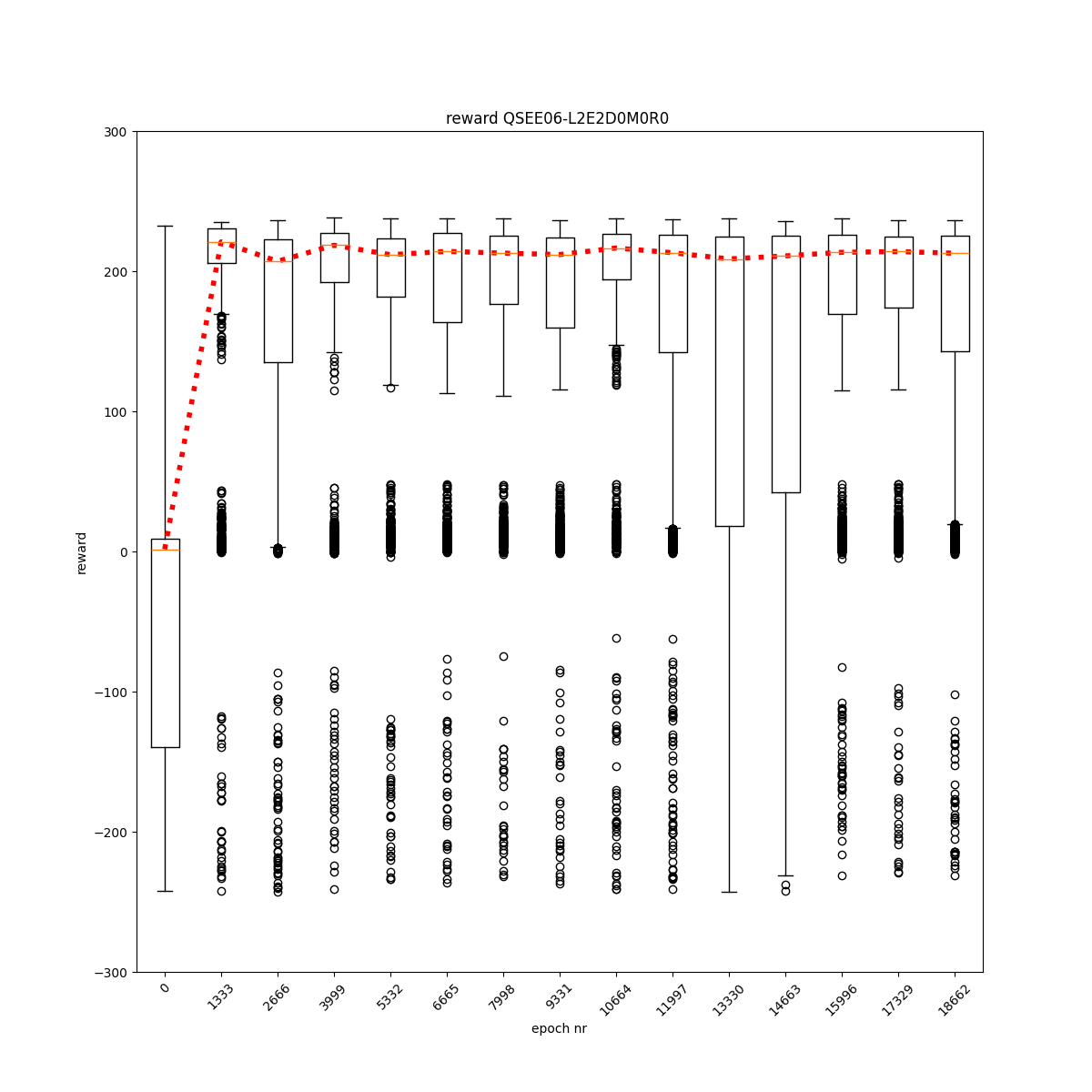

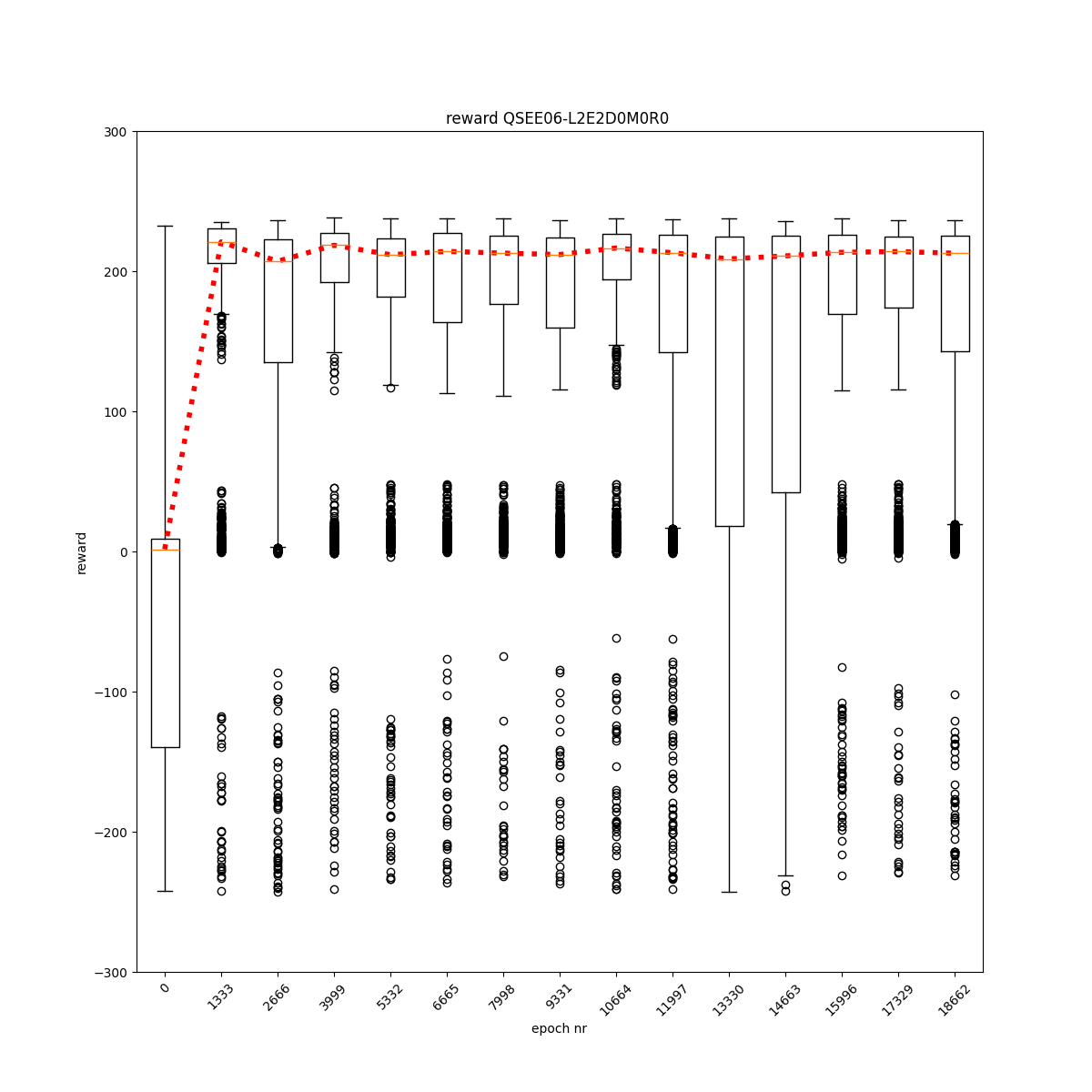

L2 E2 D0 M0 R0

q-values: videos 0-11 (two panels)

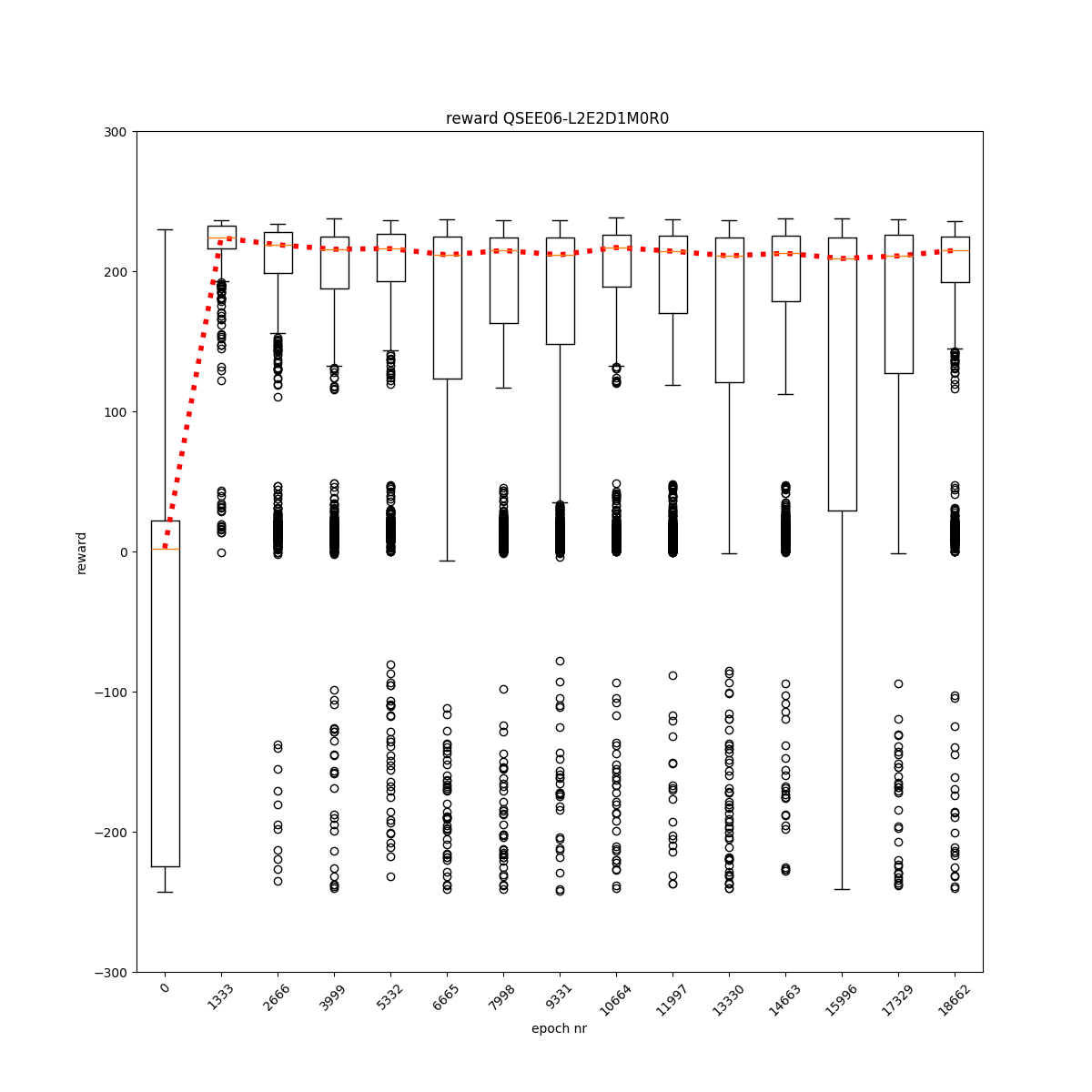

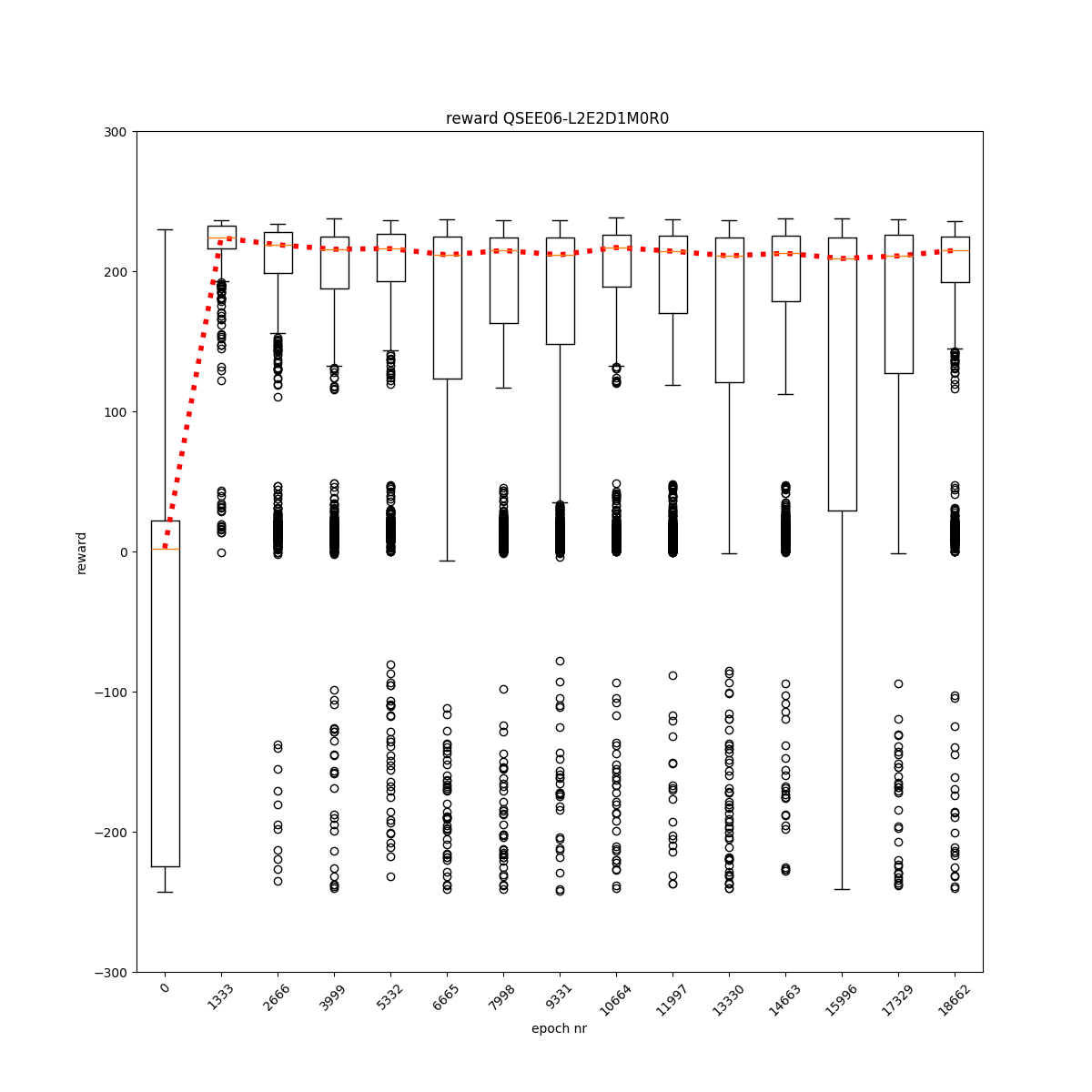

L2 E2 D1 M0 R0

q-values: videos 0-11 (two panels)

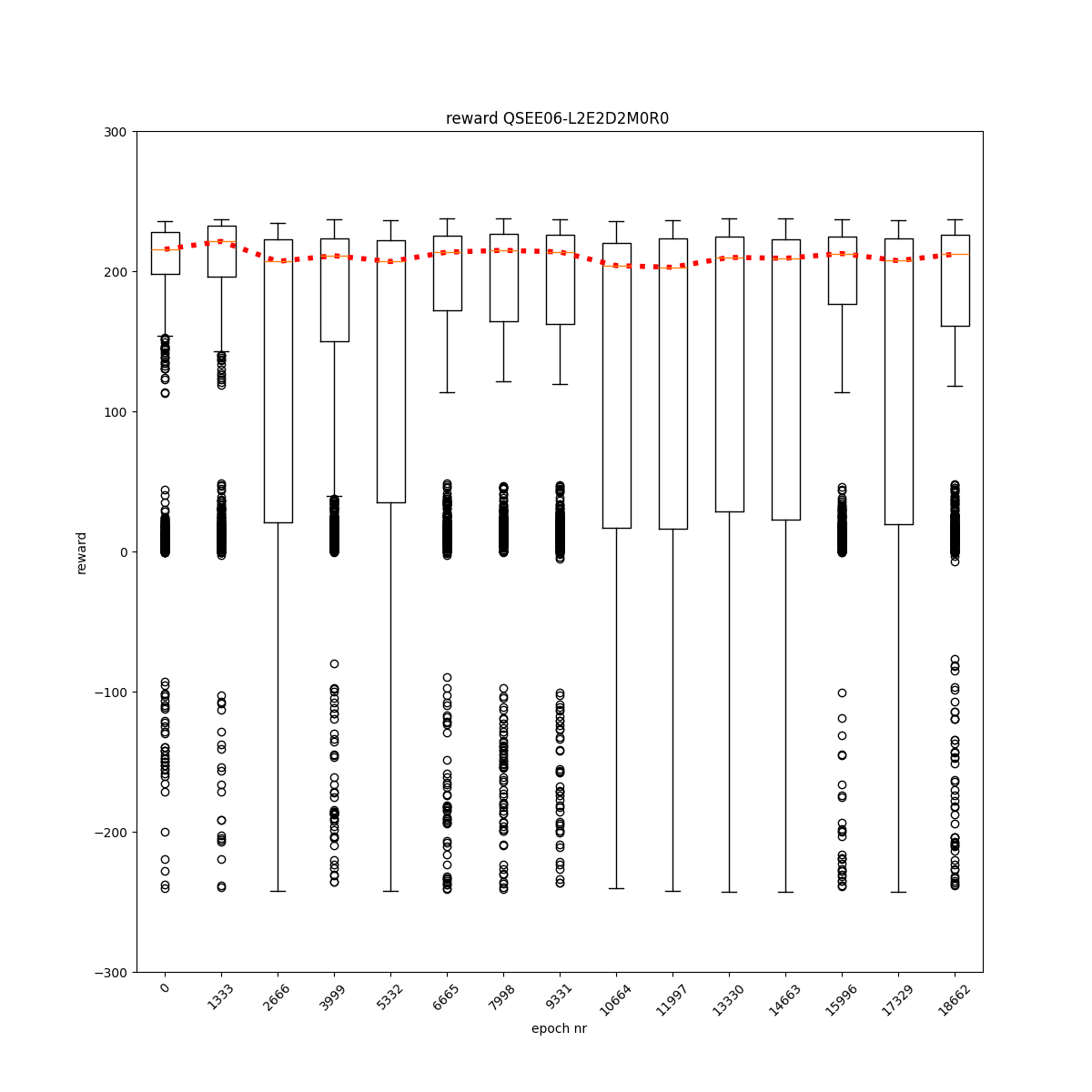

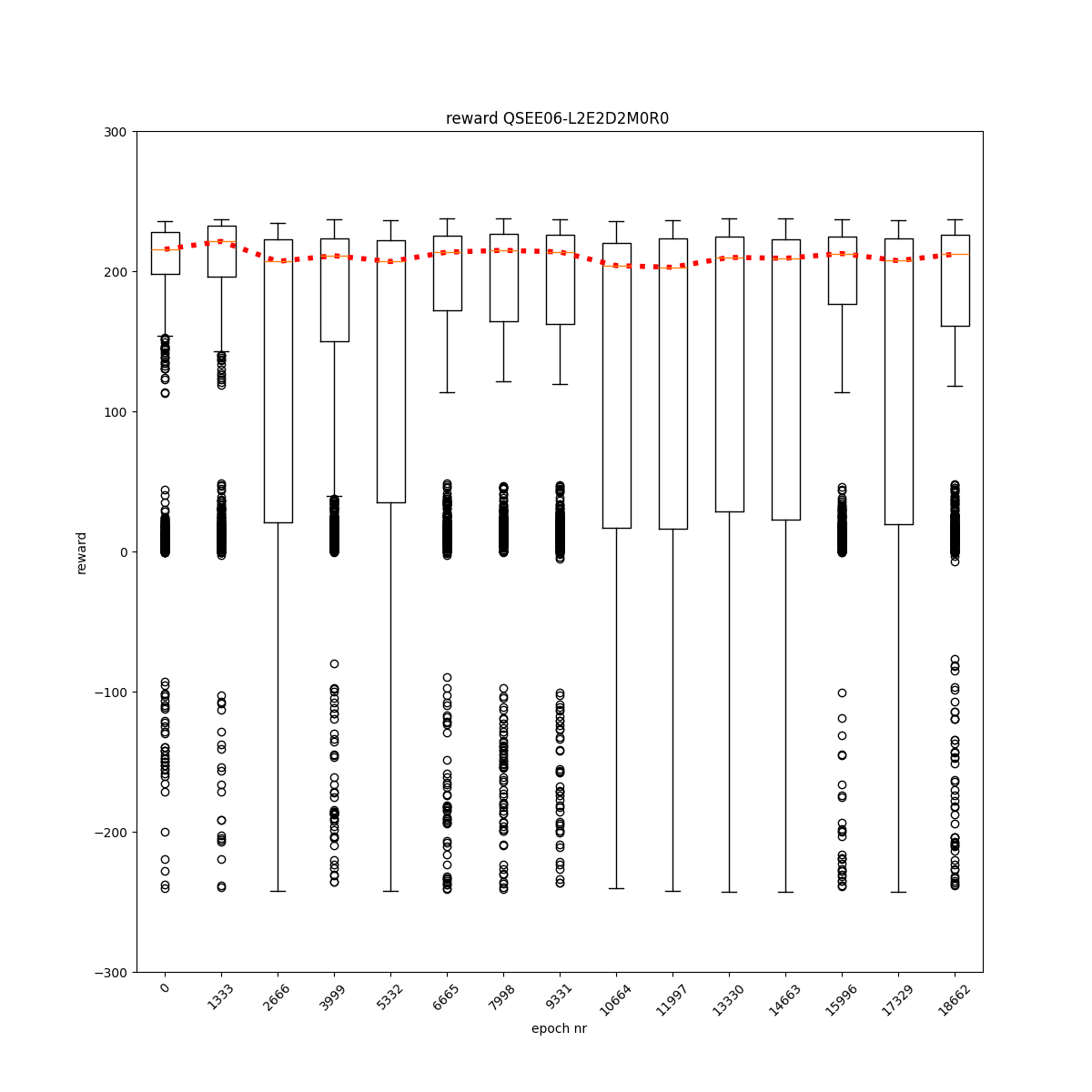

L2 E2 D2 M0 R0

q-values: videos 0-11 (two panels)

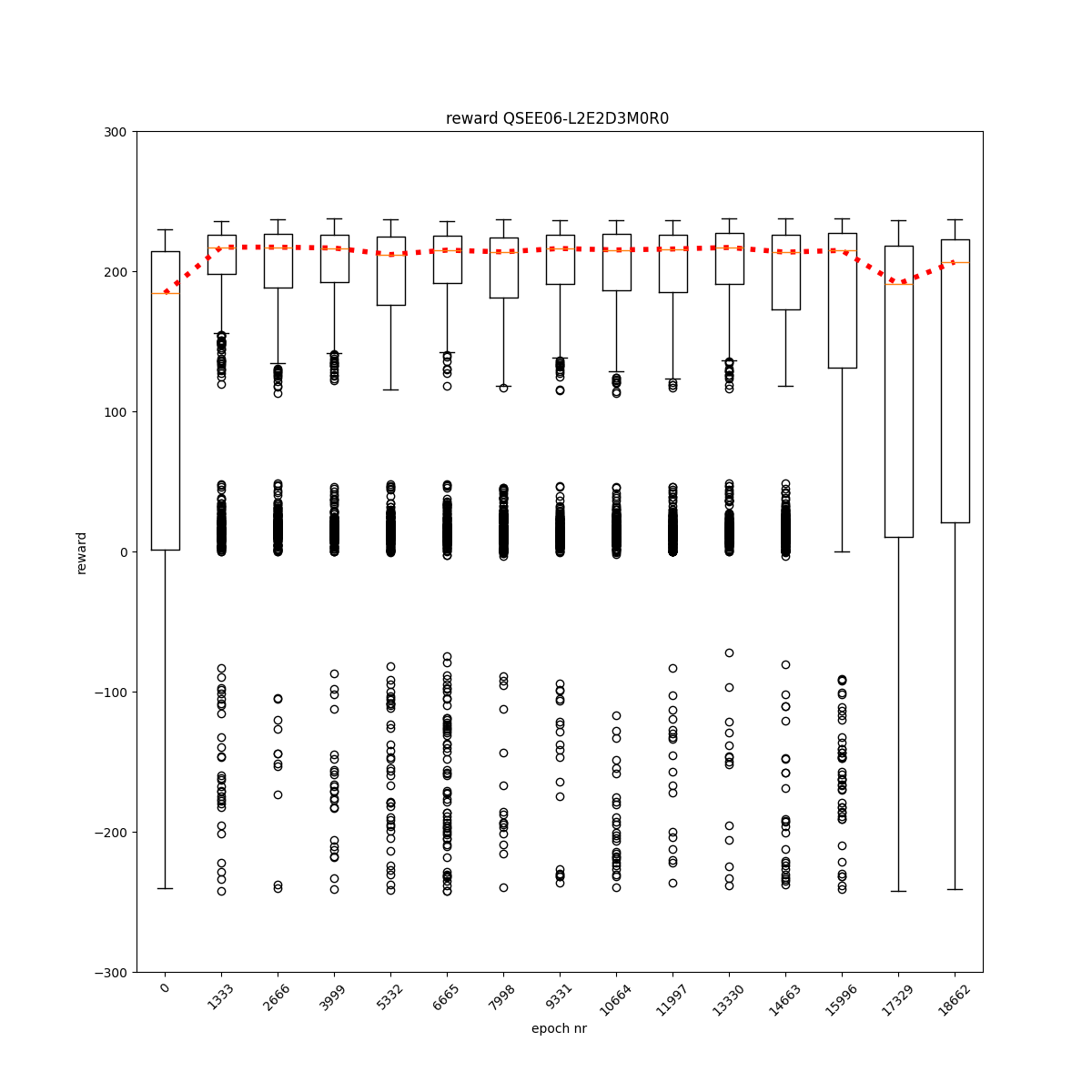

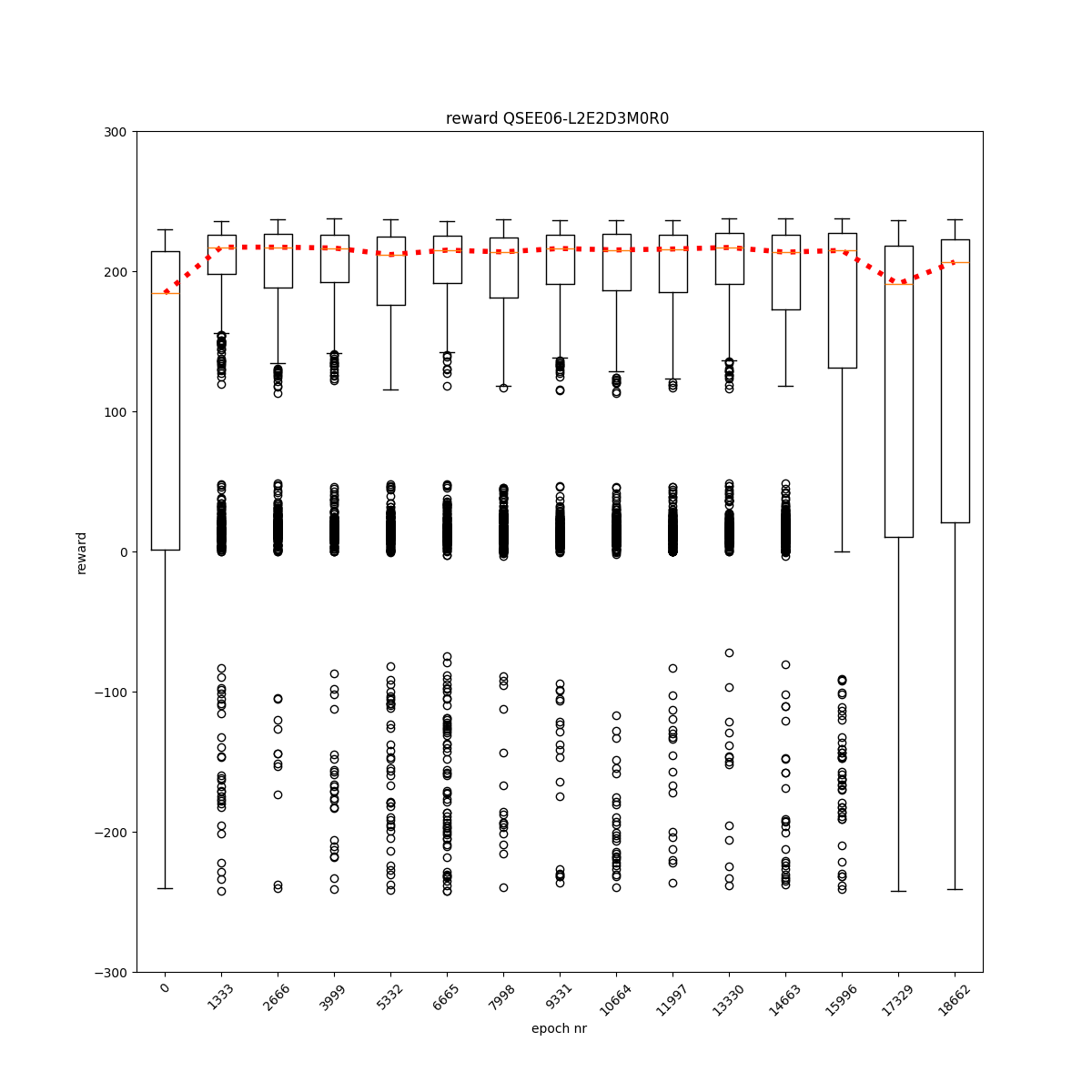

L2 E2 D3 M0 R0

q-values: videos 0-11 (two panels)

L2 E3 D0 M0 R0

q-values: videos 0-11 (two panels)

L2 E3 D1 M0 R0

q-values: videos 0-11 (two panels)

L2 E3 D2 M0 R0

q-values: videos 0-11 (two panels)

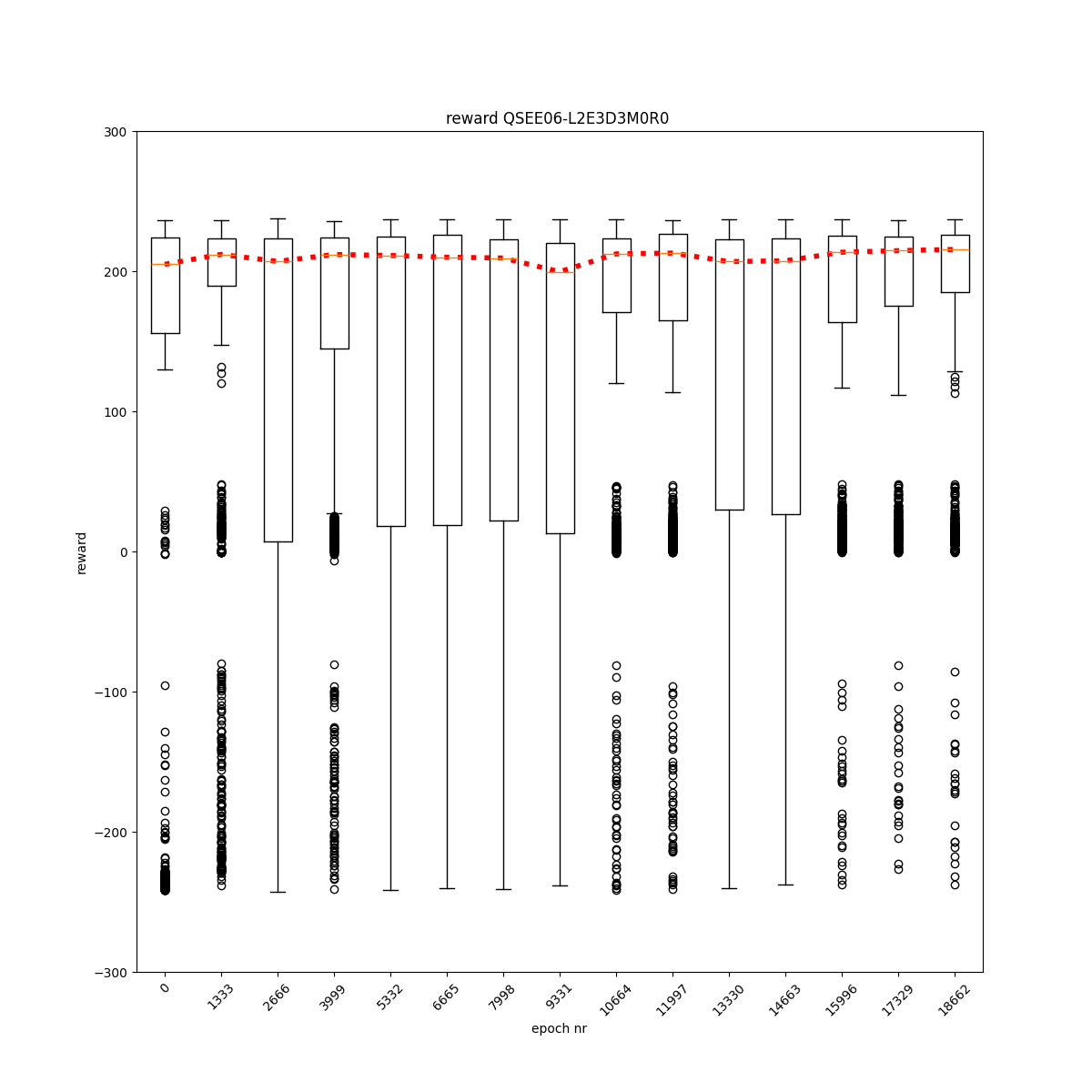

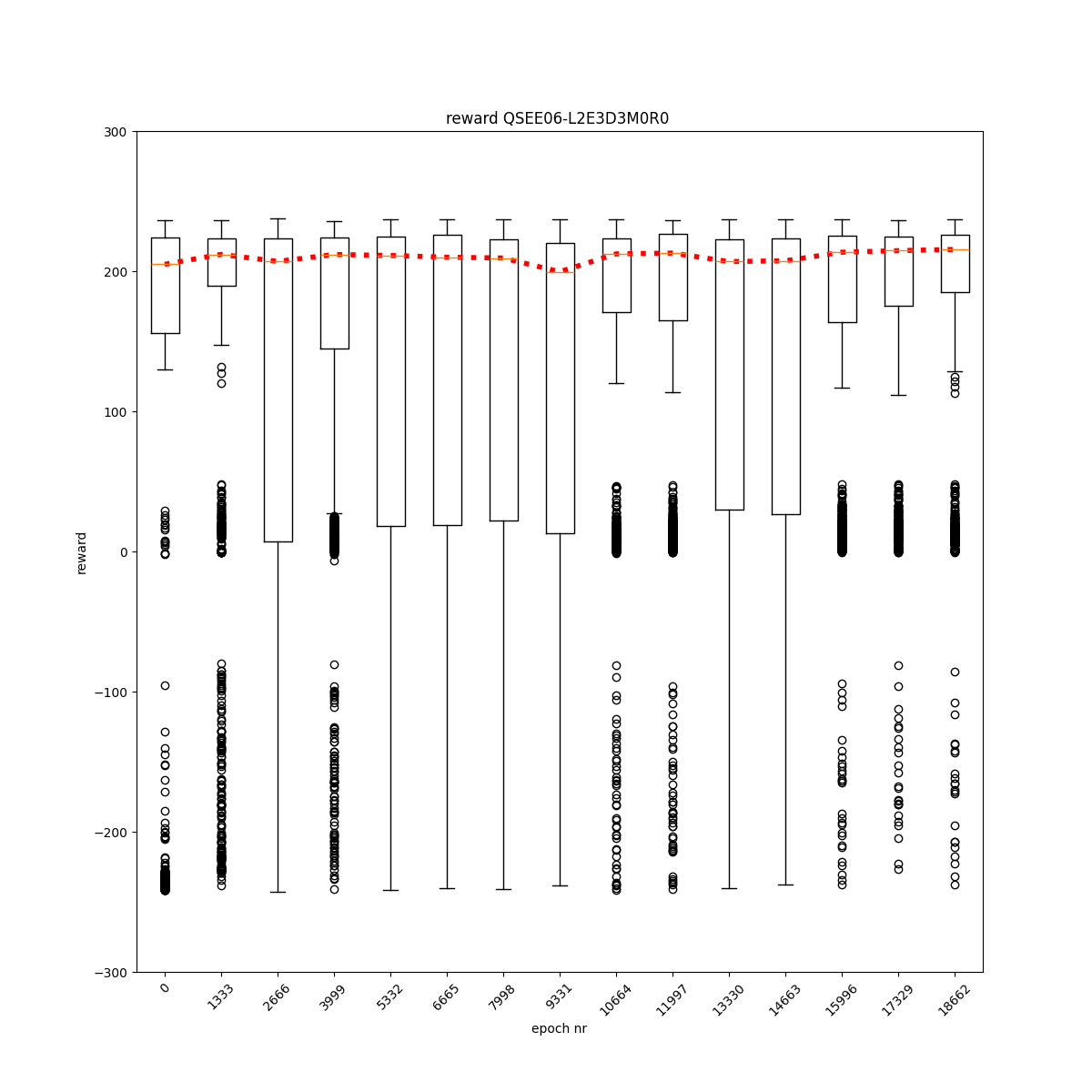

L2 E3 D3 M0 R0

q-values: videos 0-11 (two panels)

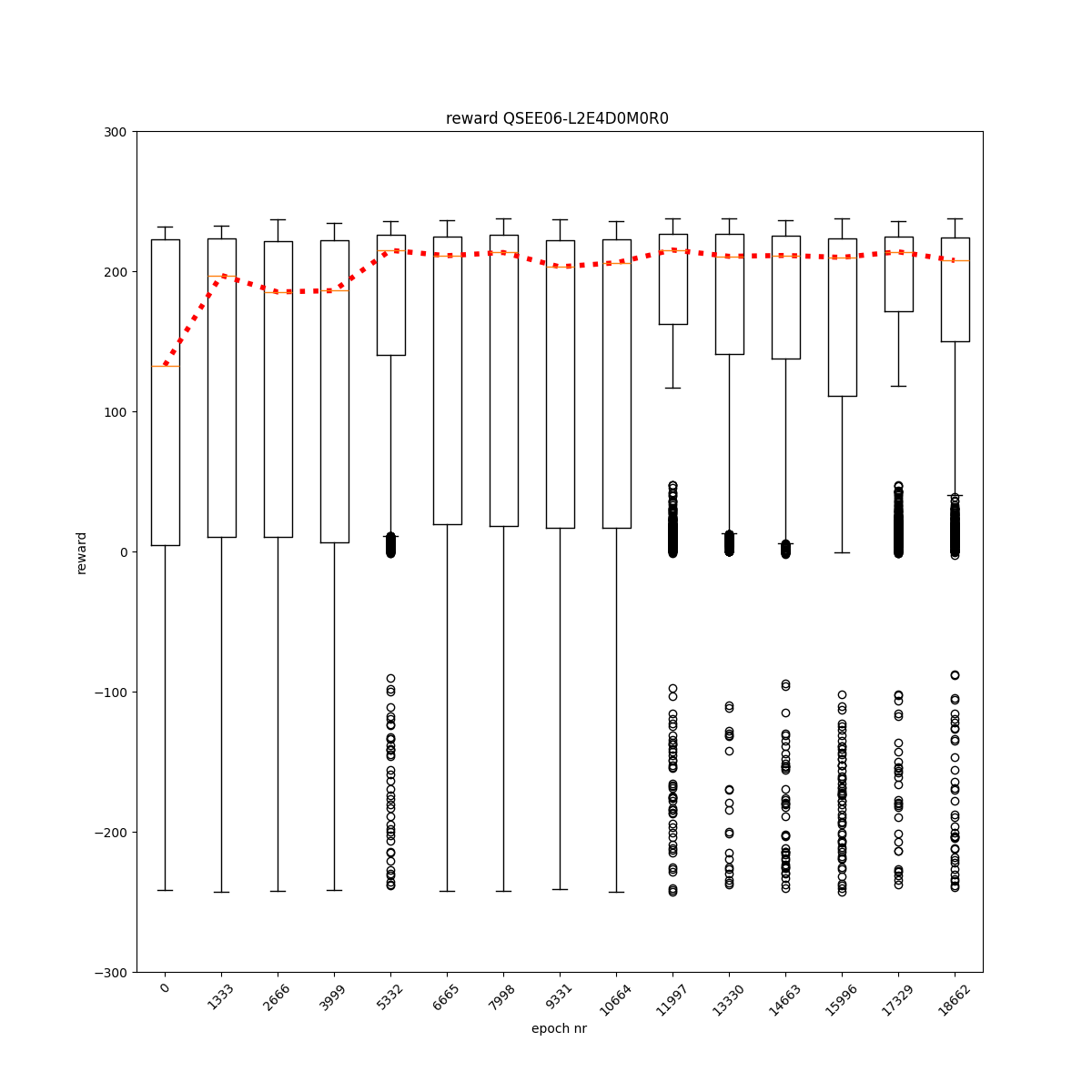

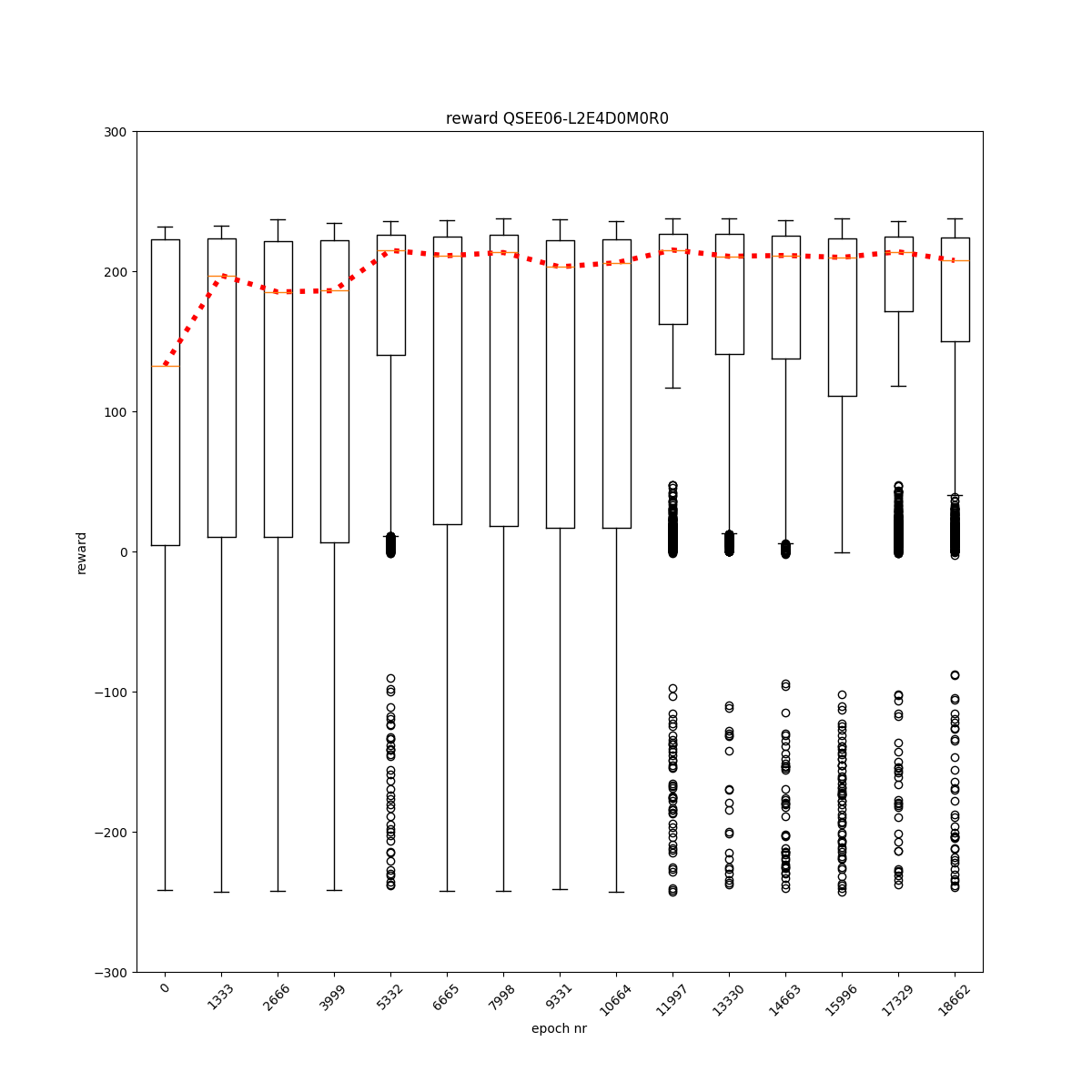

L2 E4 D0 M0 R0

q-values: videos 0-11 (two panels)

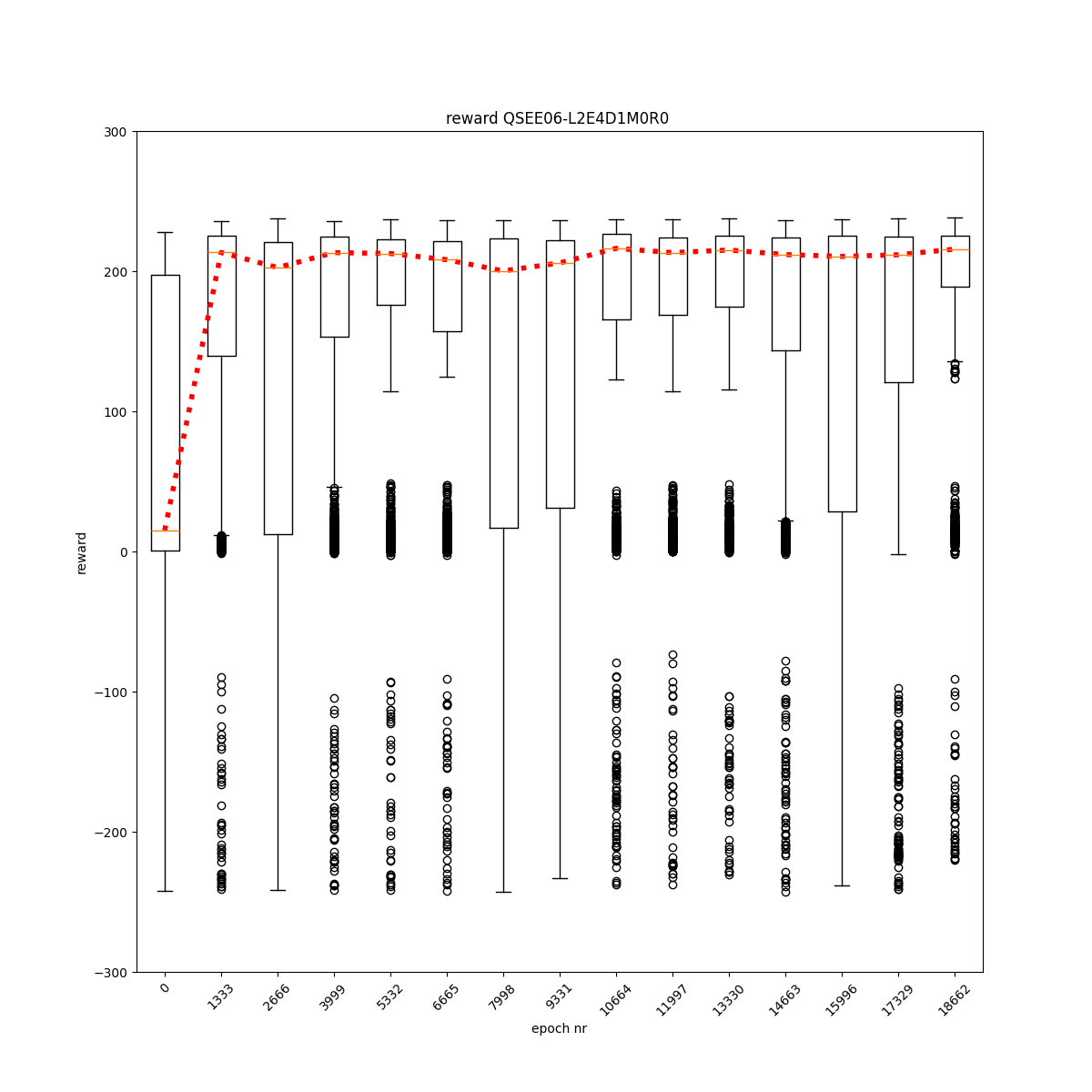

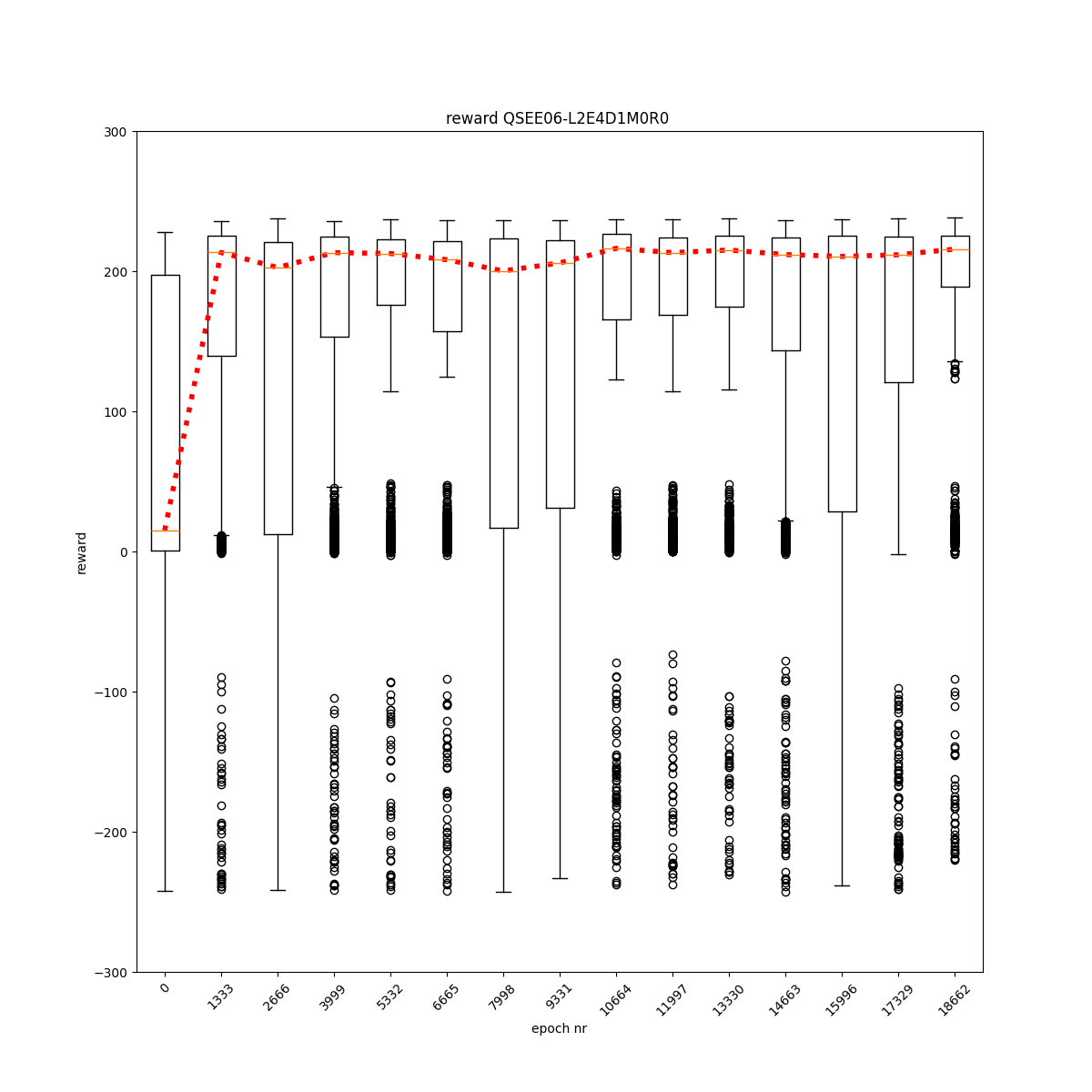

L2 E4 D1 M0 R0

q-values: videos 0-11 (two panels)

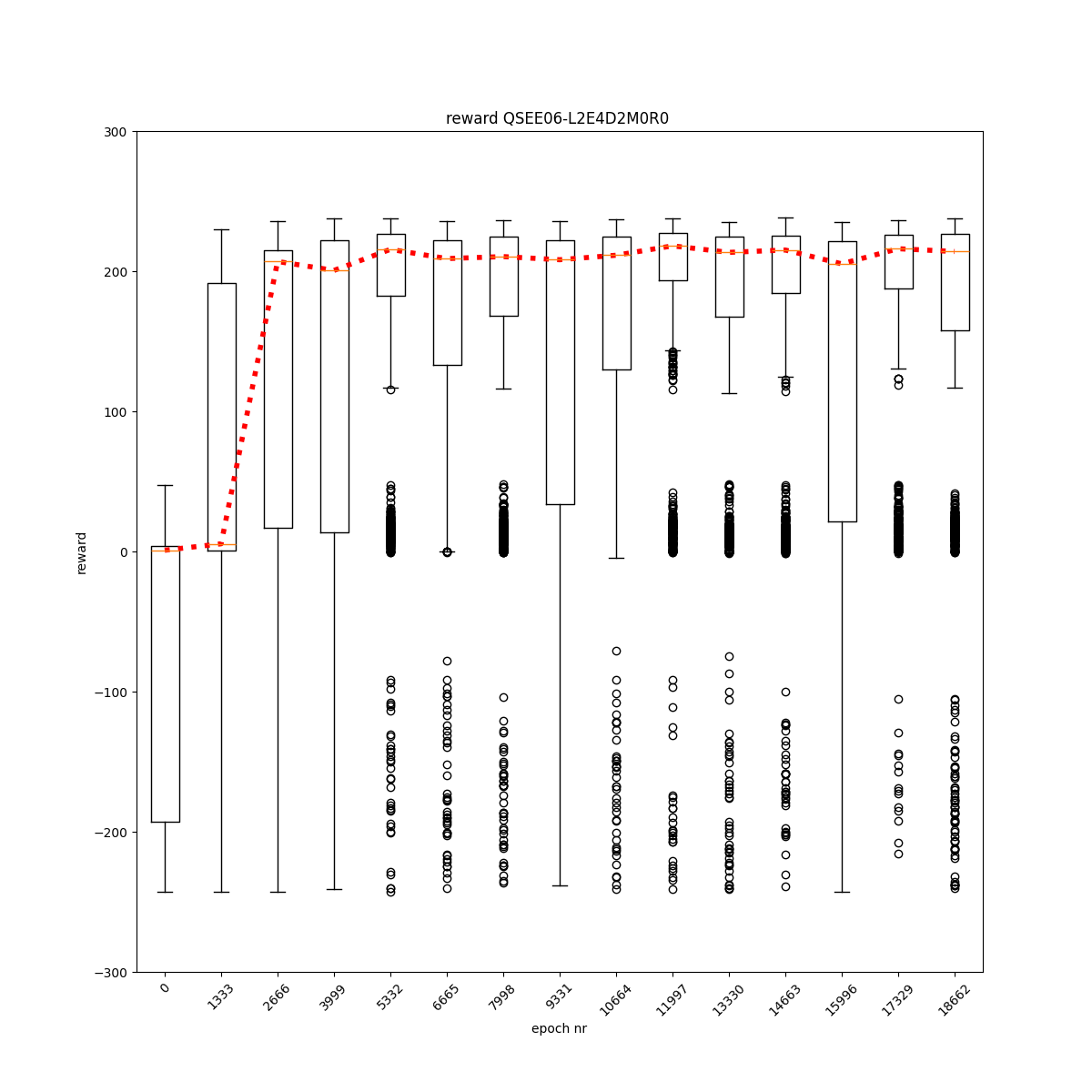

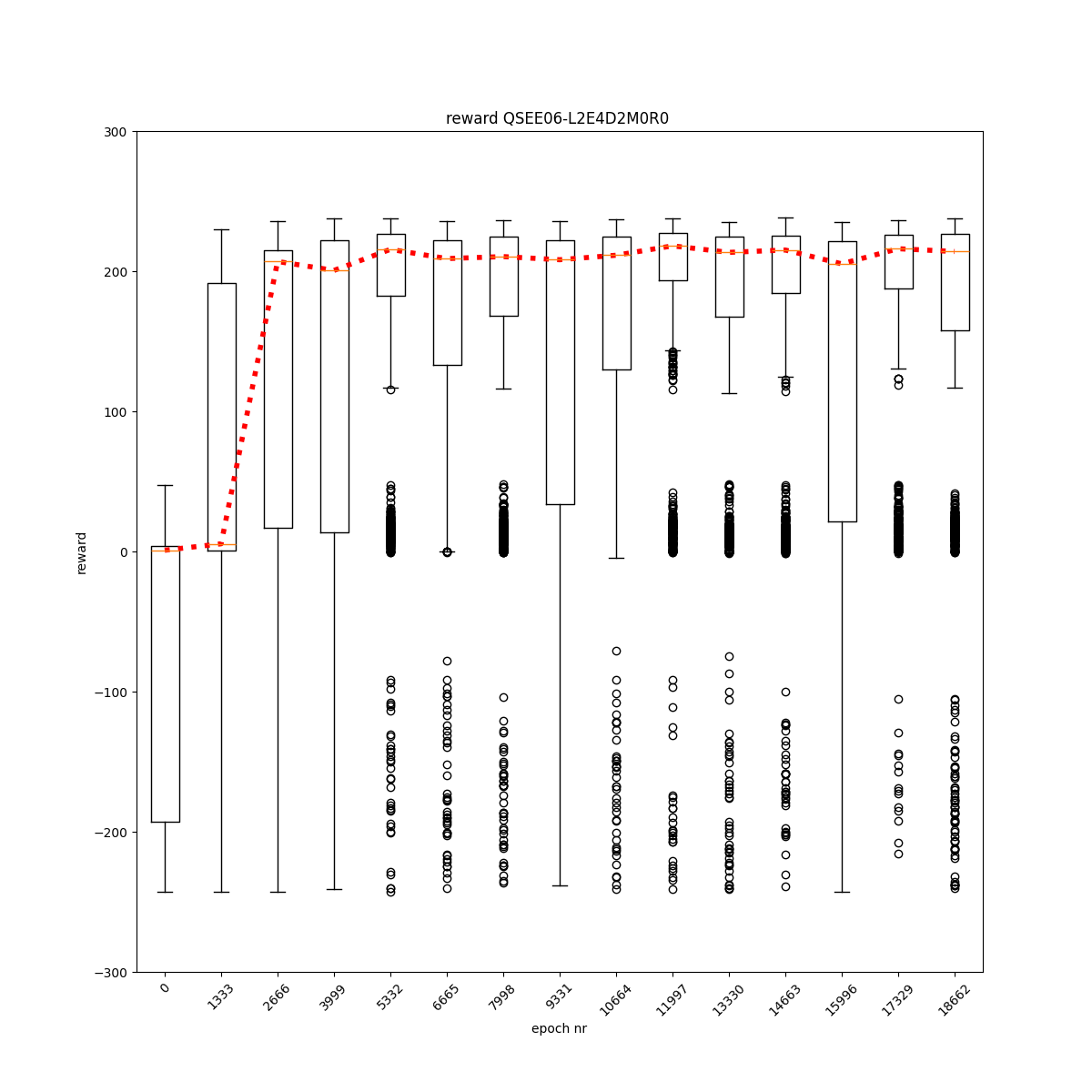

L2 E4 D2 M0 R0

q-values: videos 0-11 (two panels)

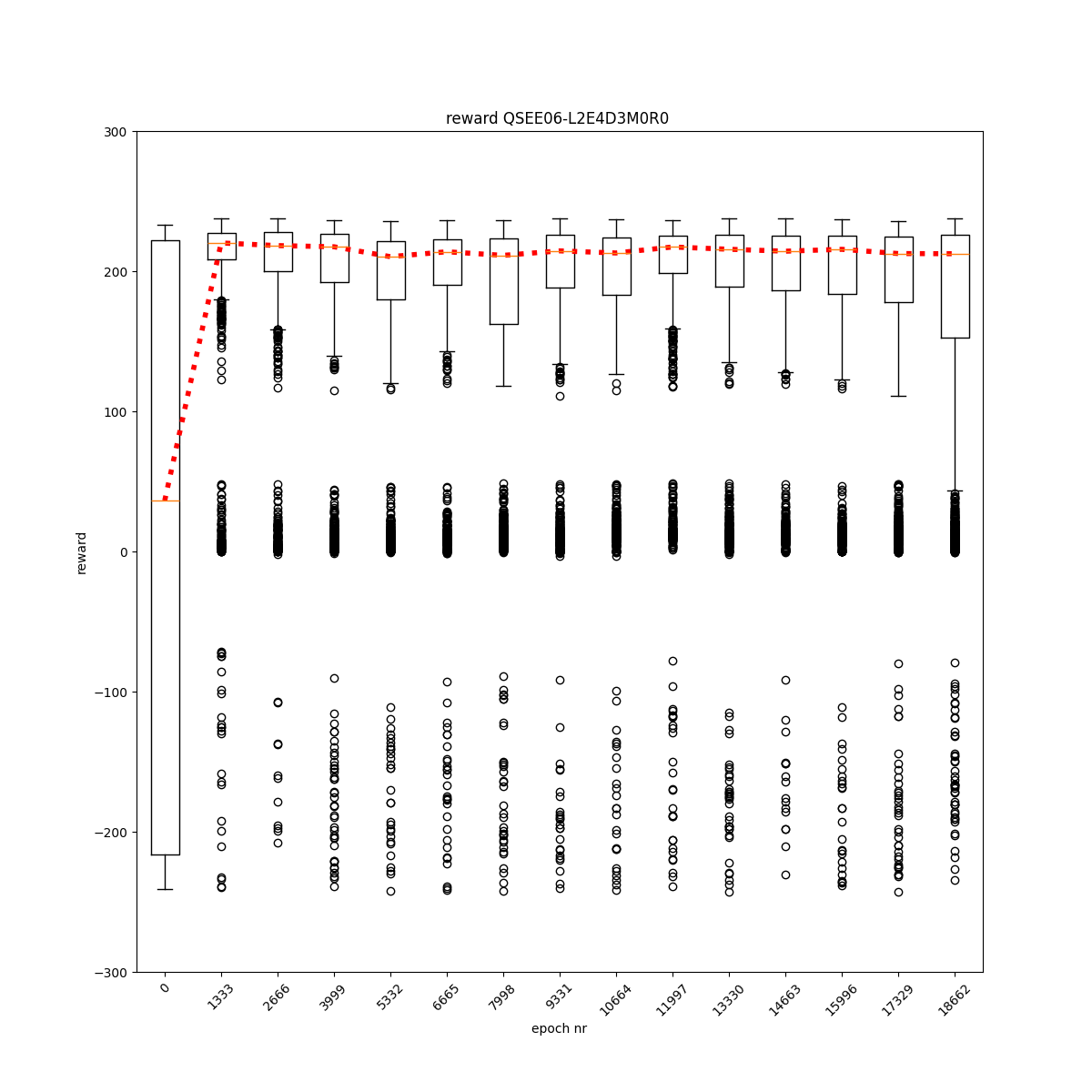

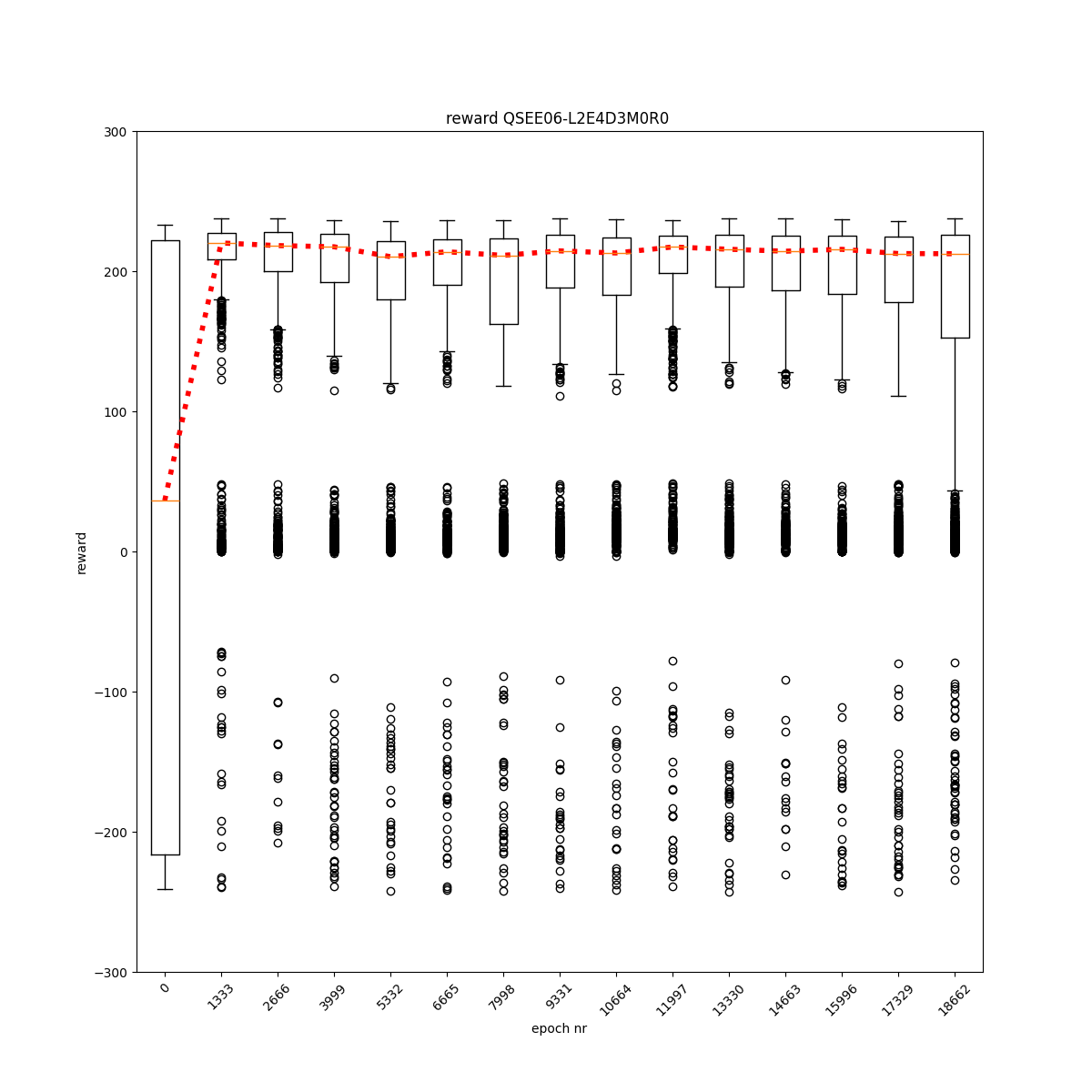

L2 E4 D3 M0 R0

q-values: videos 0-11 (two panels)

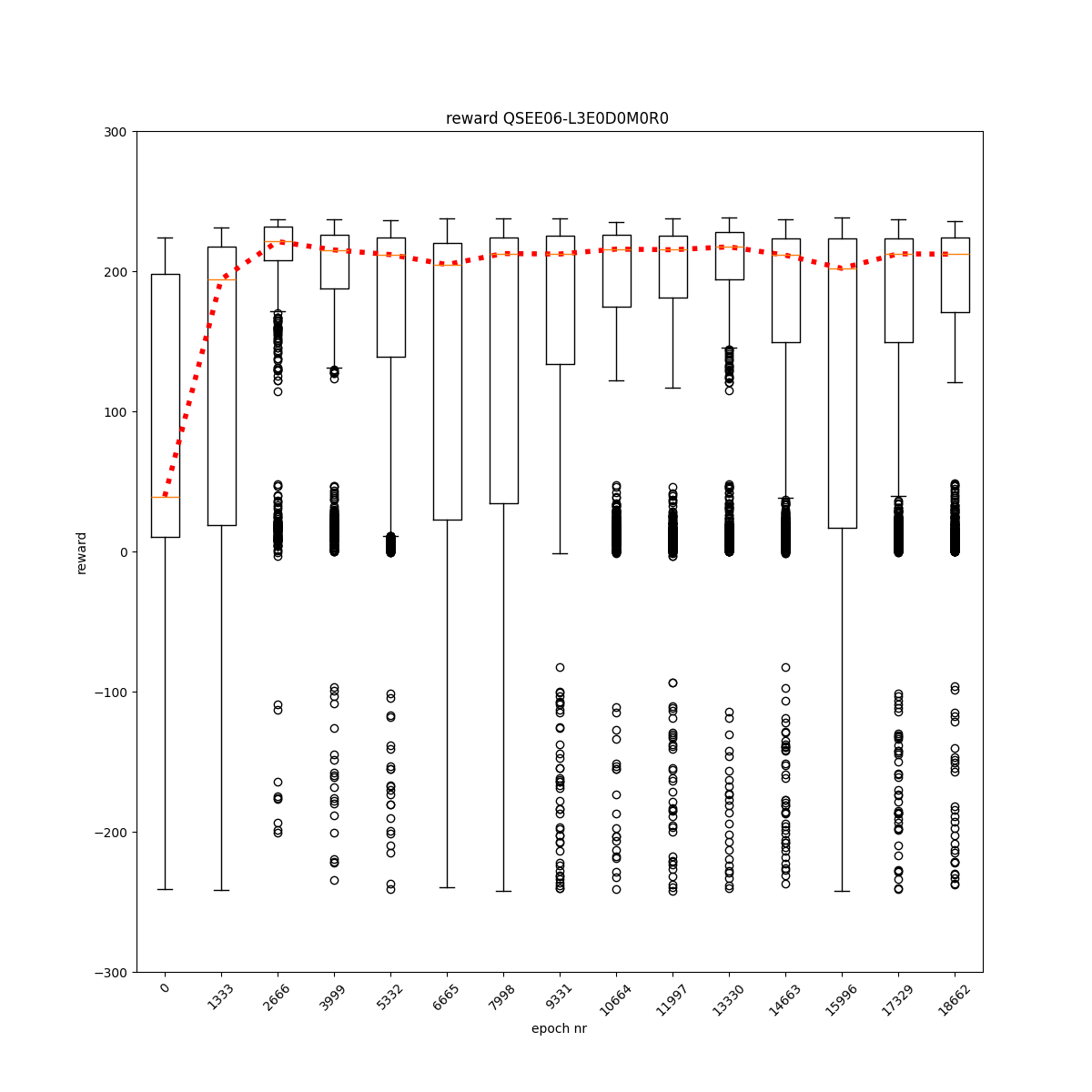

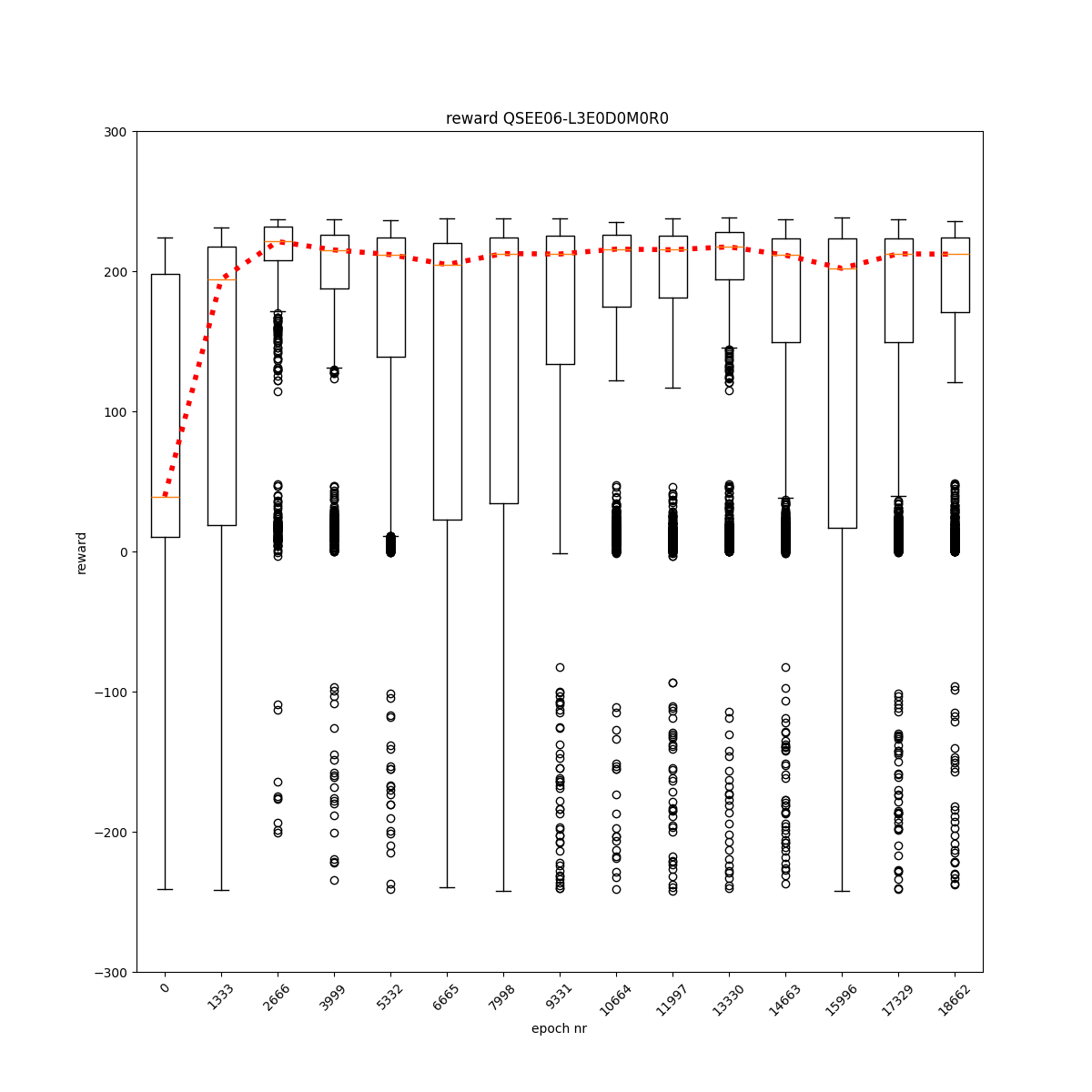

L3 E0 D0 M0 R0

q-values: videos 0-11 (two panels)

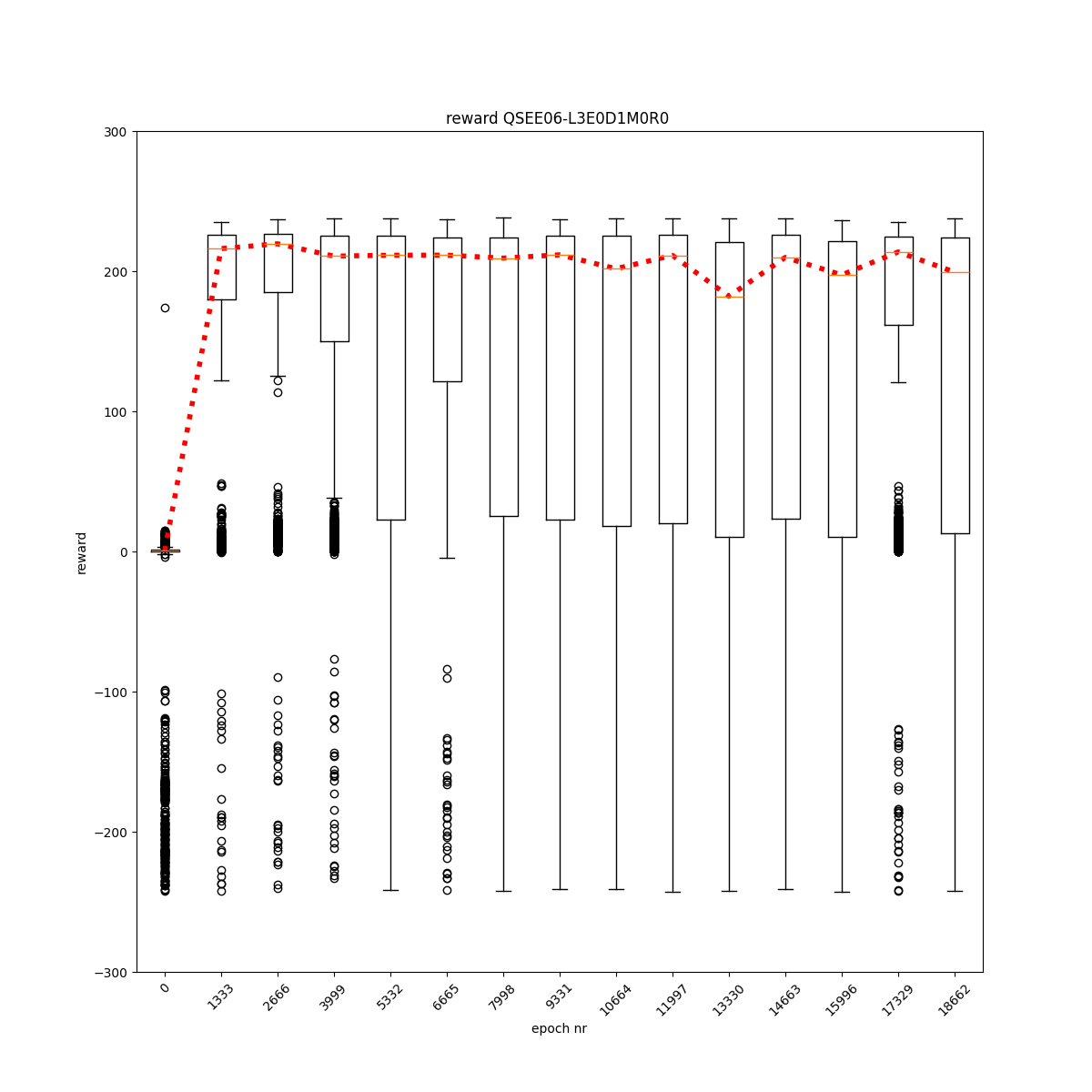

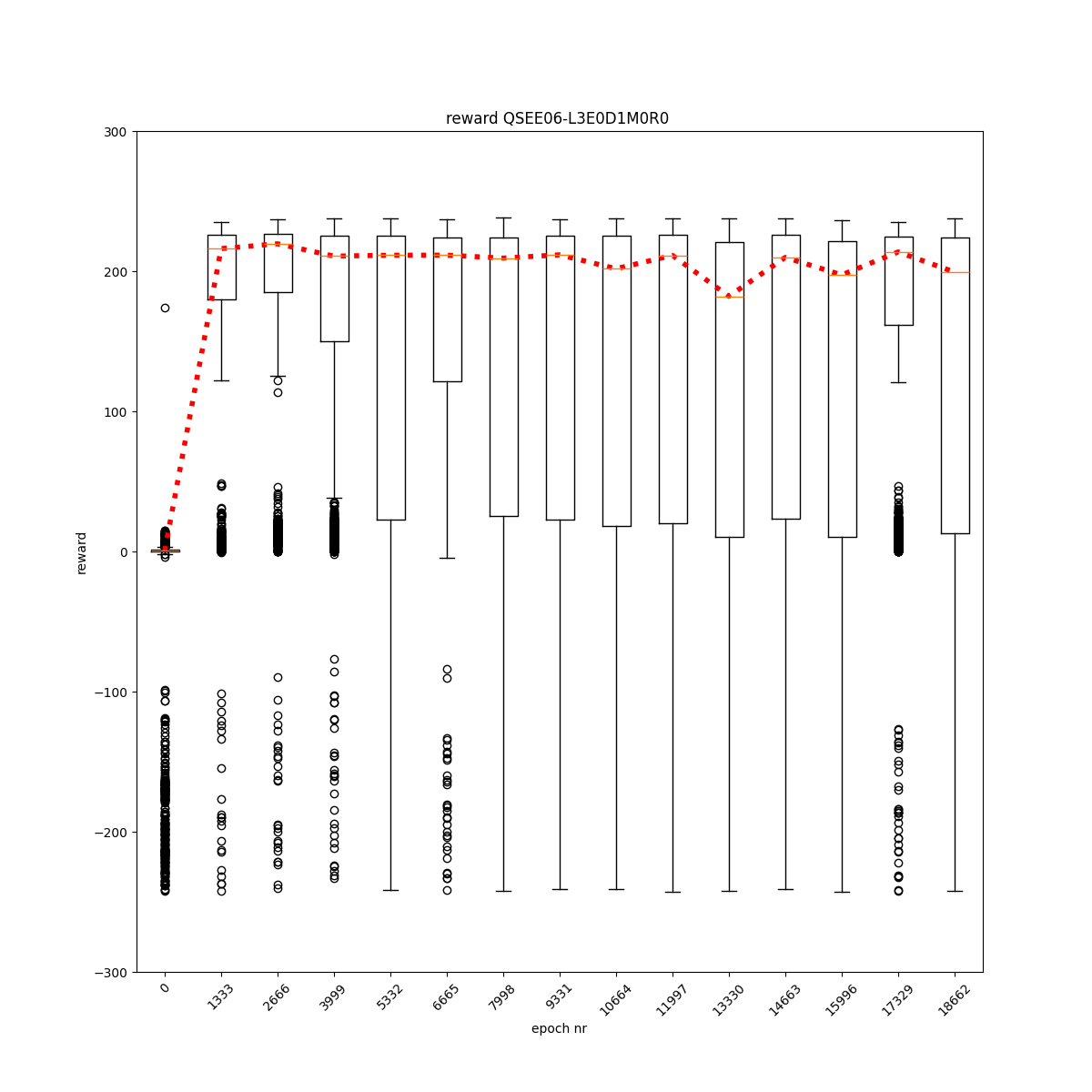

L3 E0 D1 M0 R0

q-values: videos 0-11 (two panels)

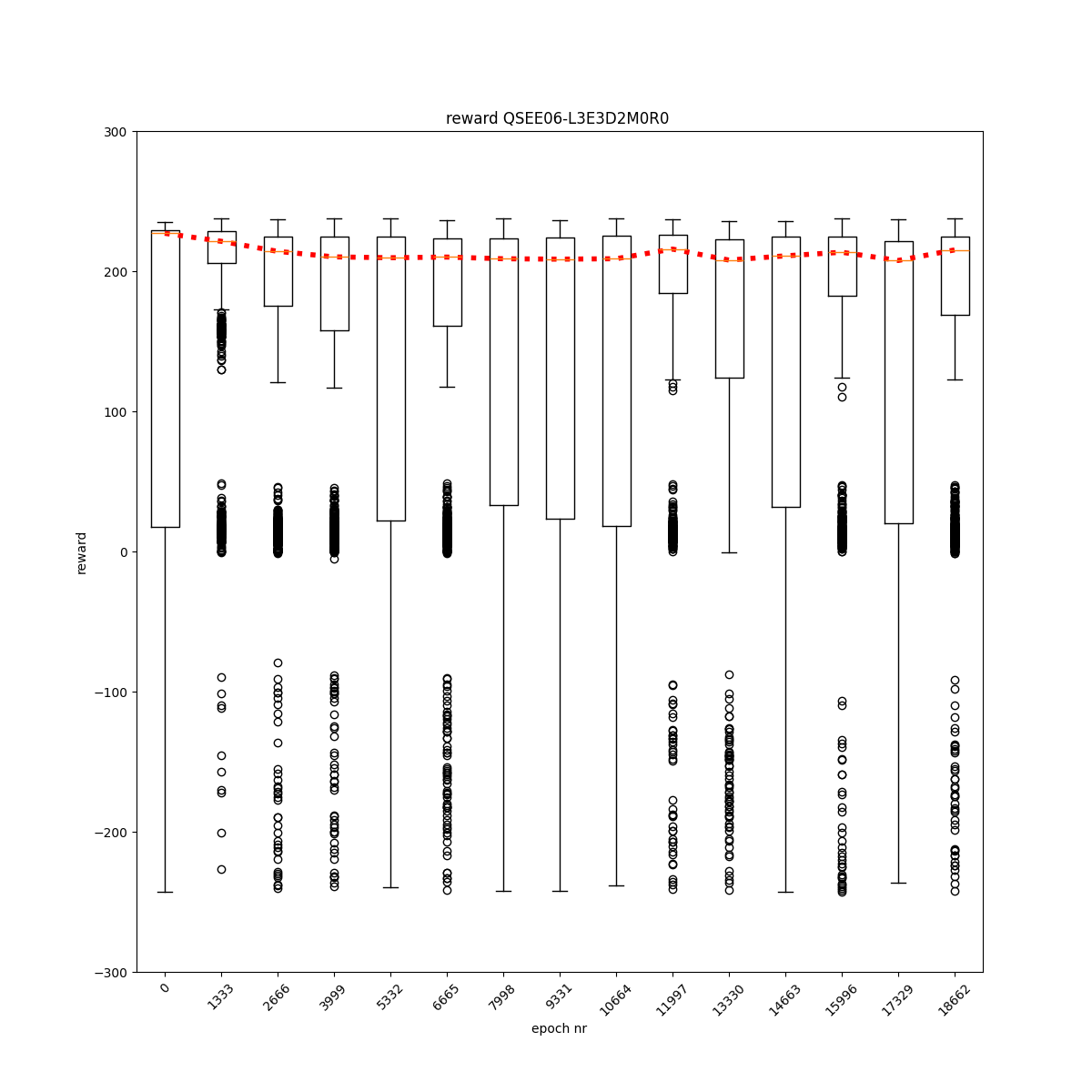

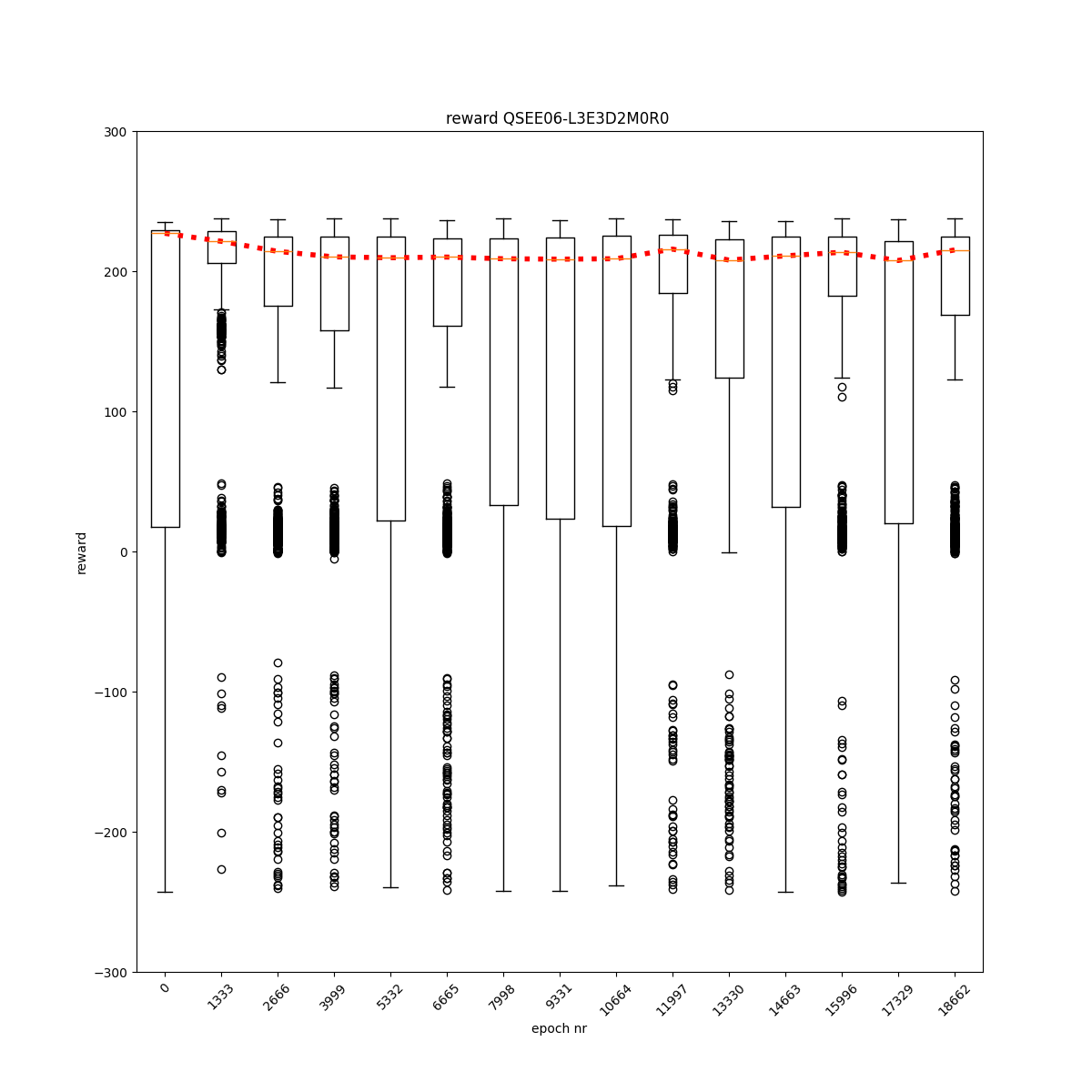

L3 E0 D2 M0 R0

q-values: videos 0-11 (two panels)

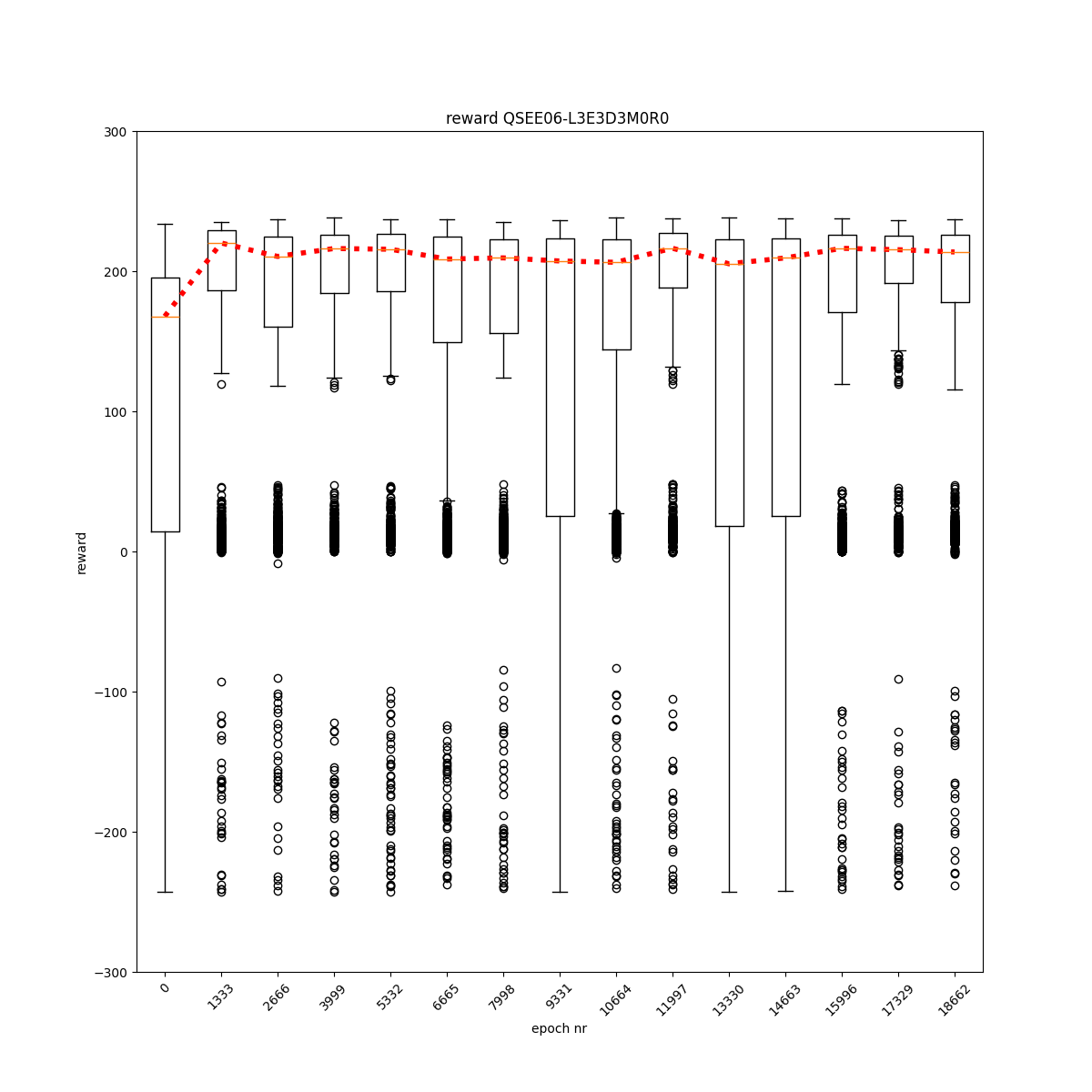

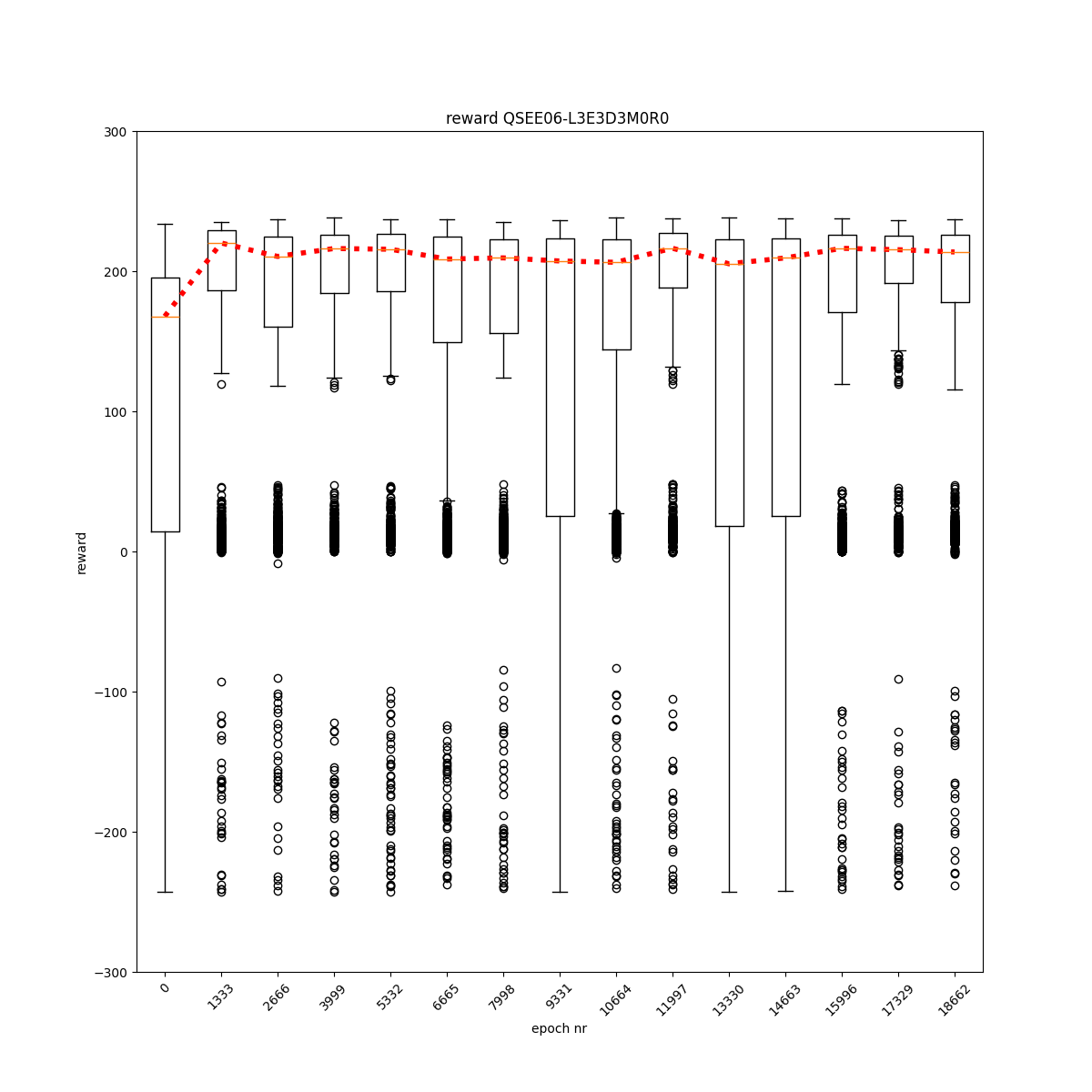

L3 E0 D3 M0 R0

q-values: videos 0-11 (two panels)

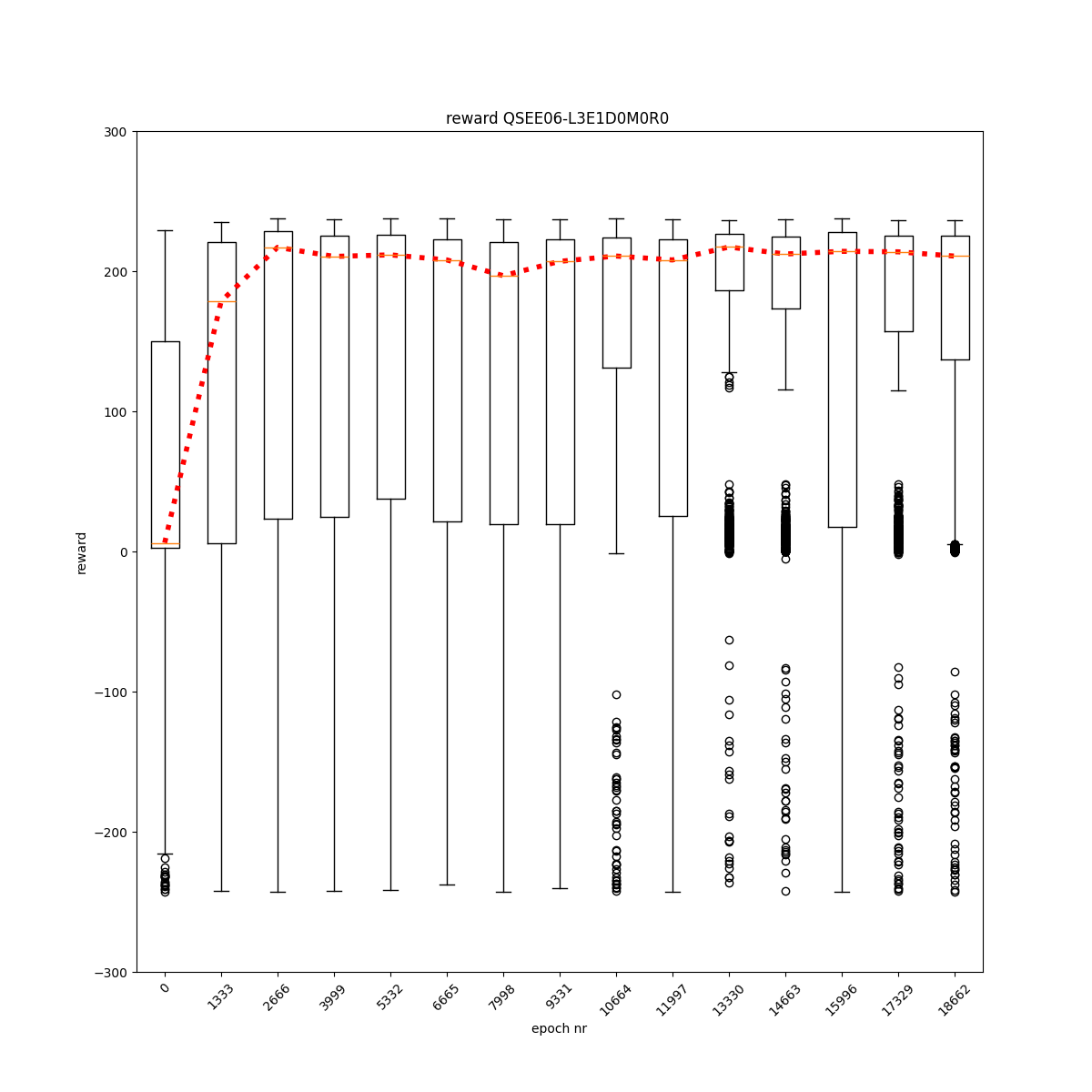

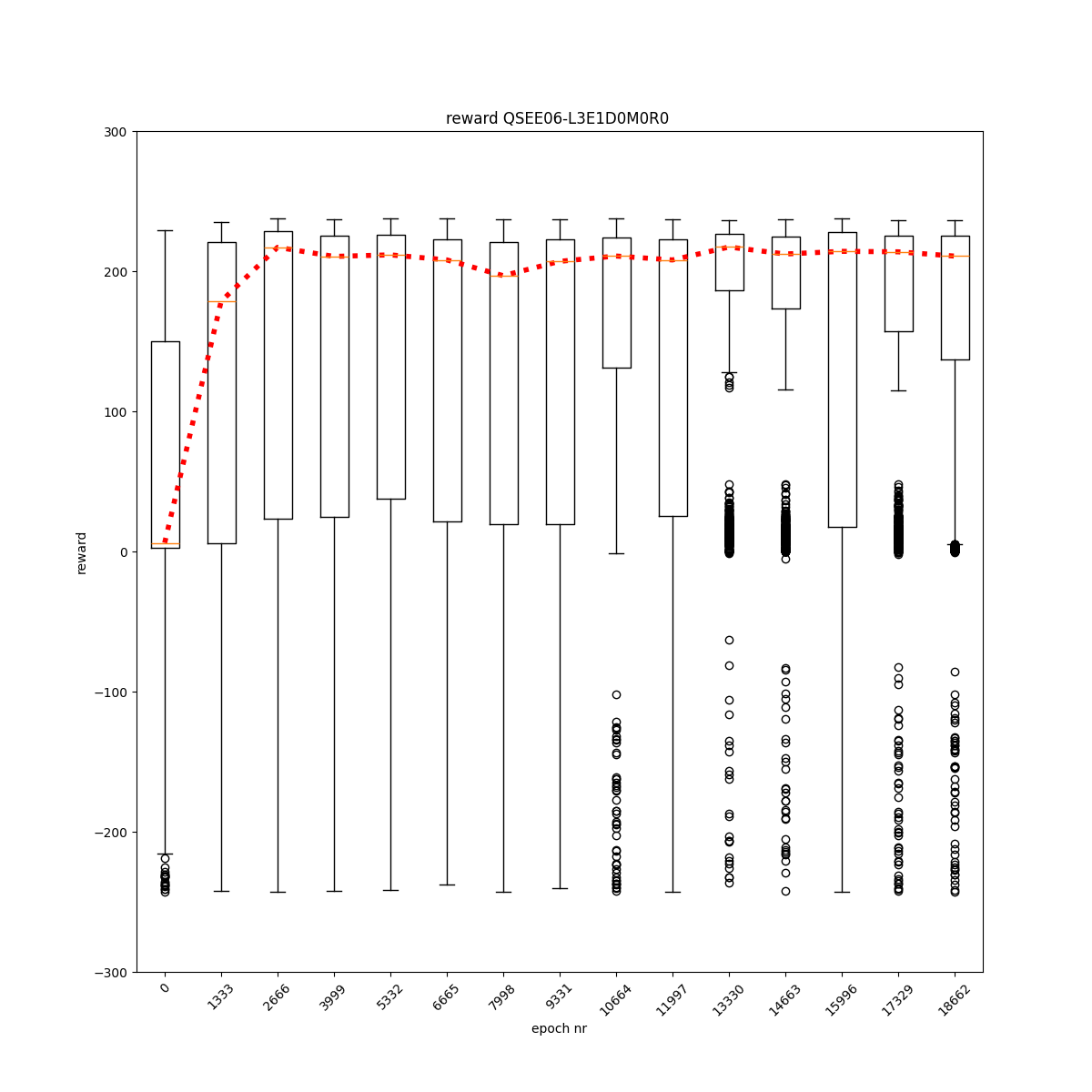

L3 E1 D0 M0 R0

q-values: videos 0-11 (two panels)

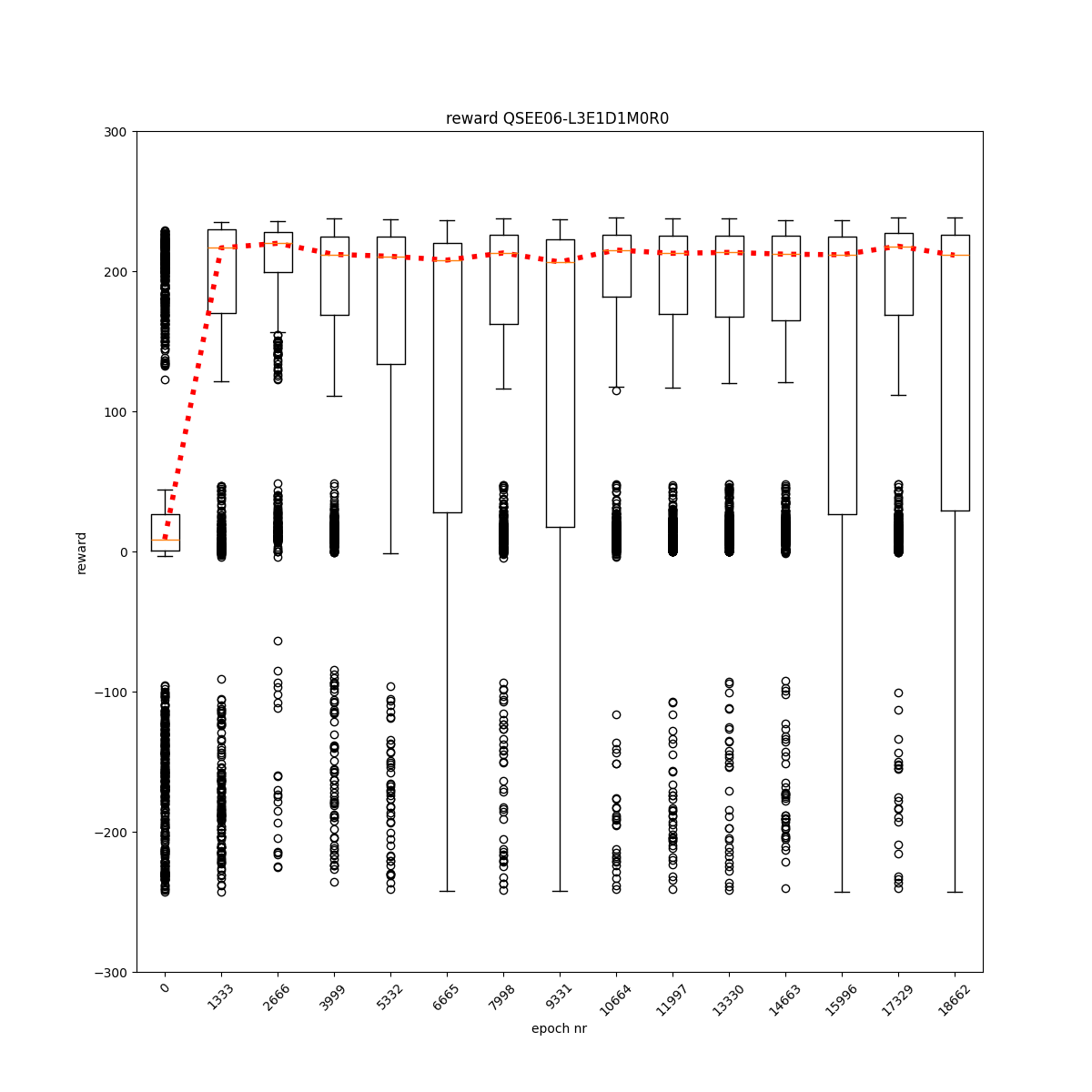

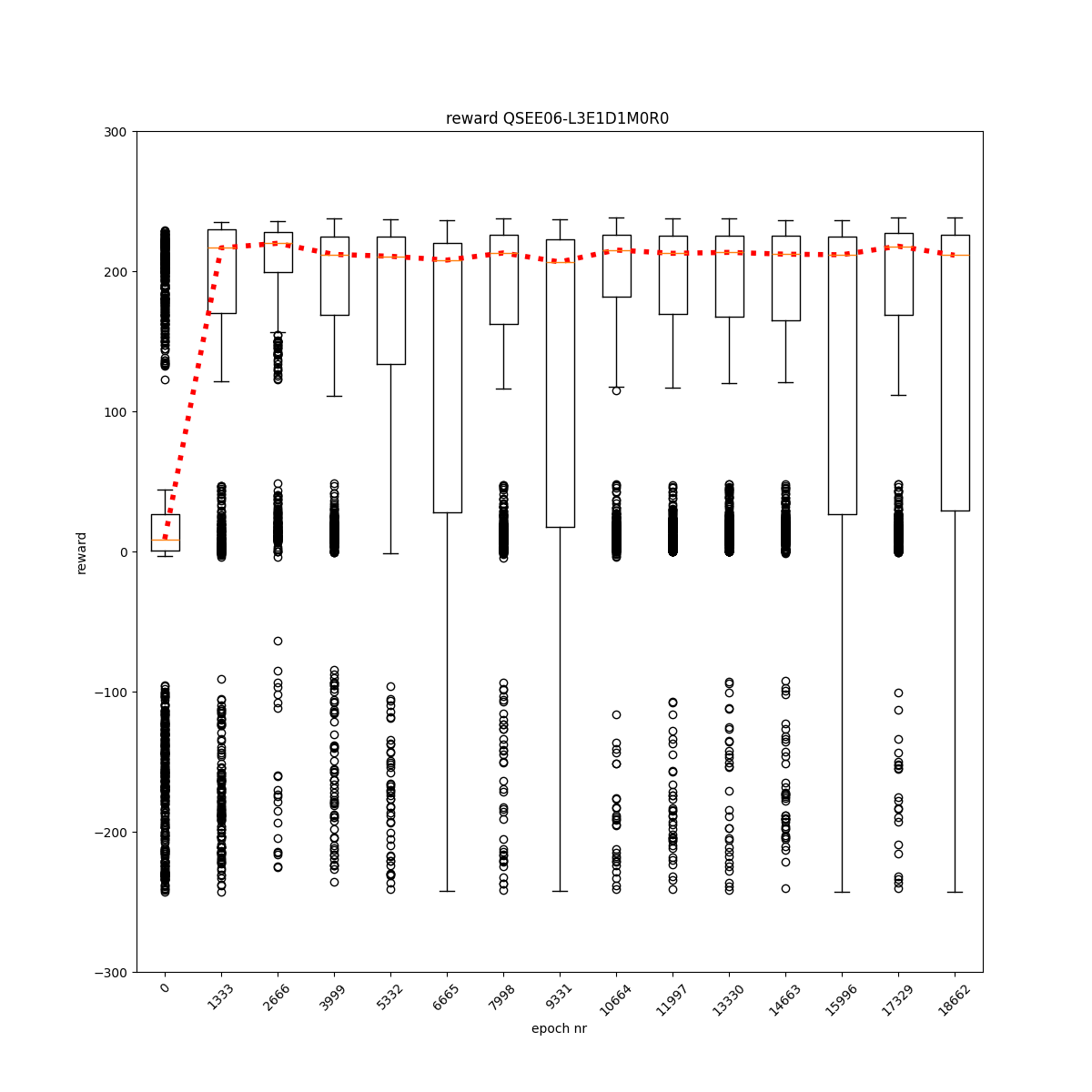

L3 E1 D1 M0 R0

q-values: videos 0-11 (two panels)

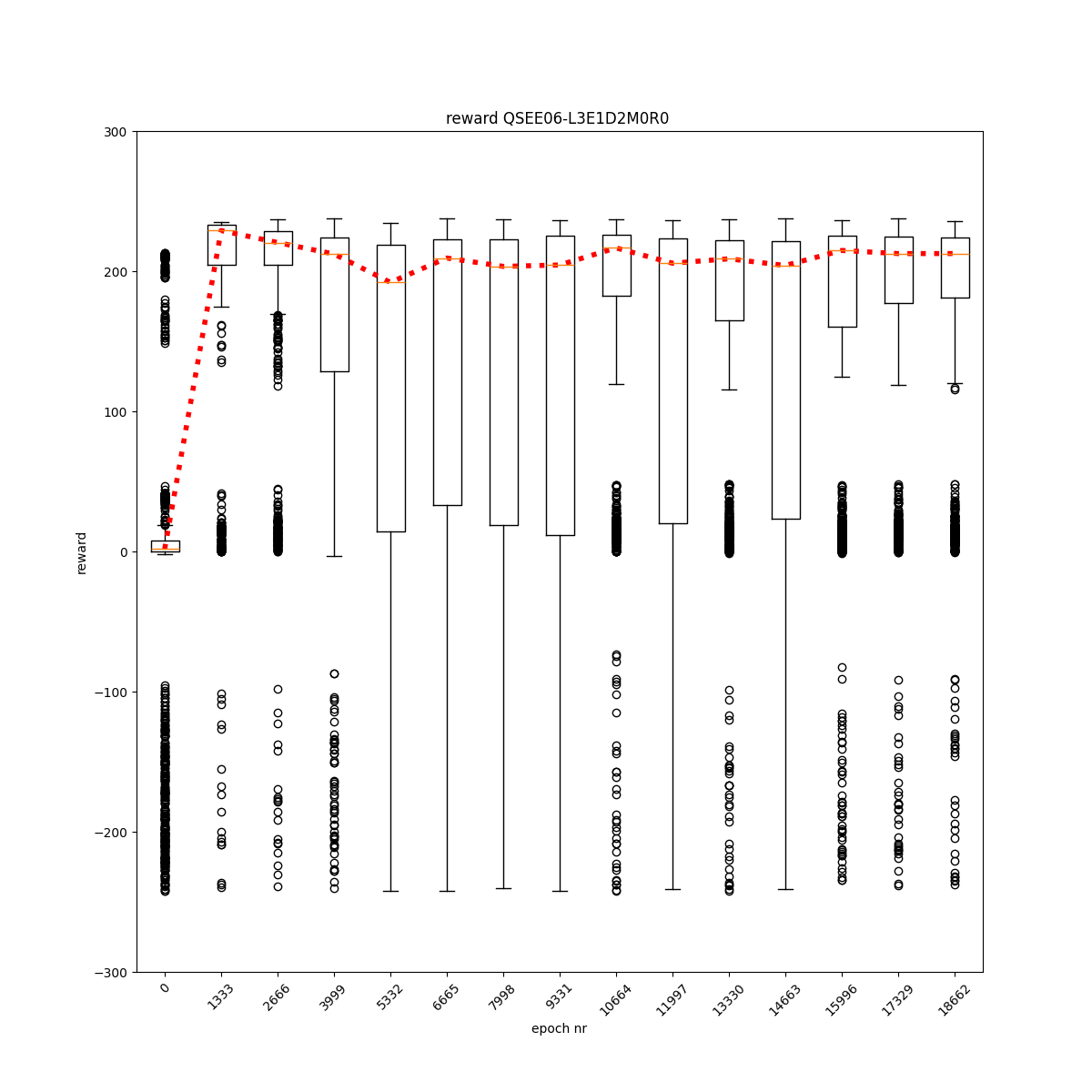

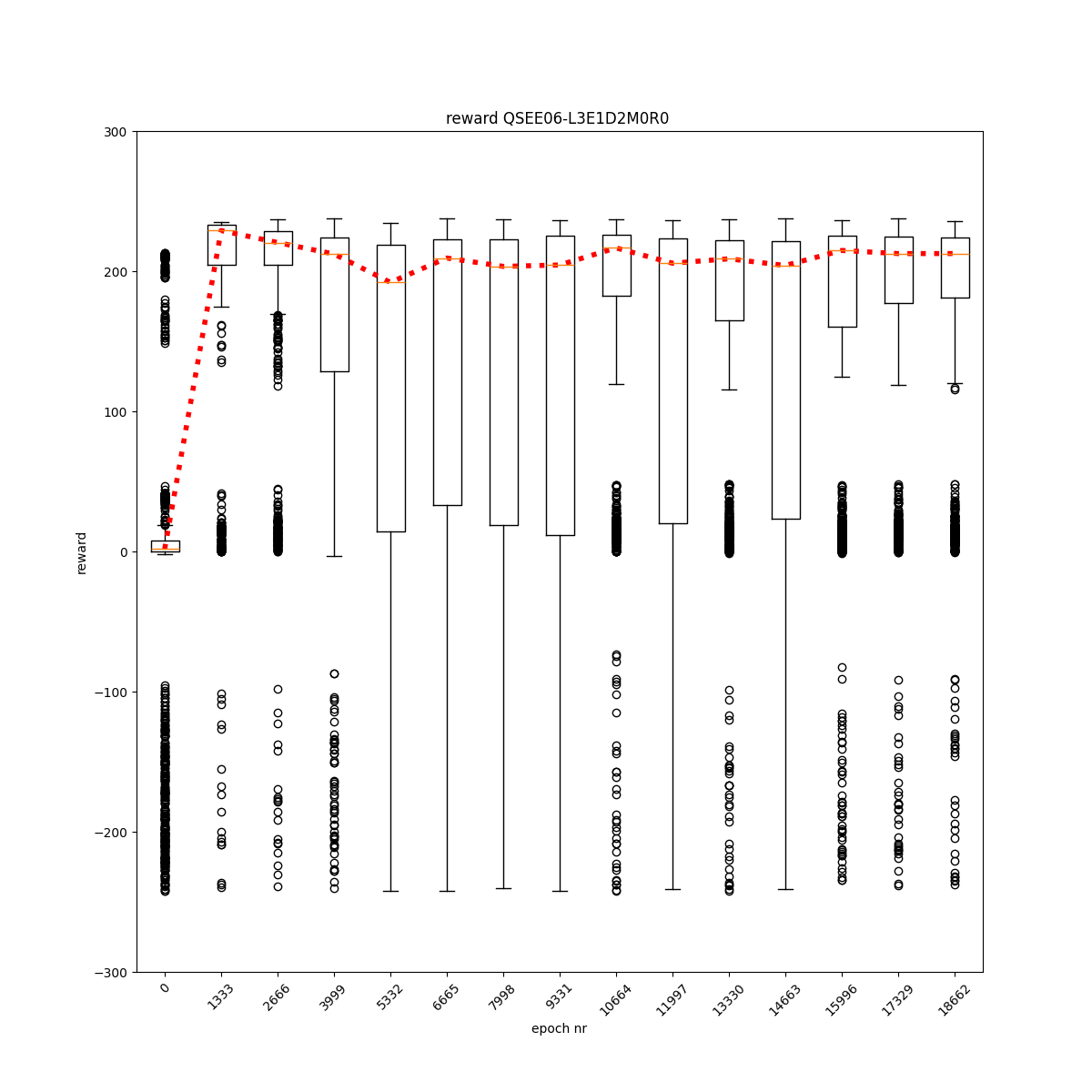

L3 E1 D2 M0 R0

q-values: videos 0-11 (two panels)

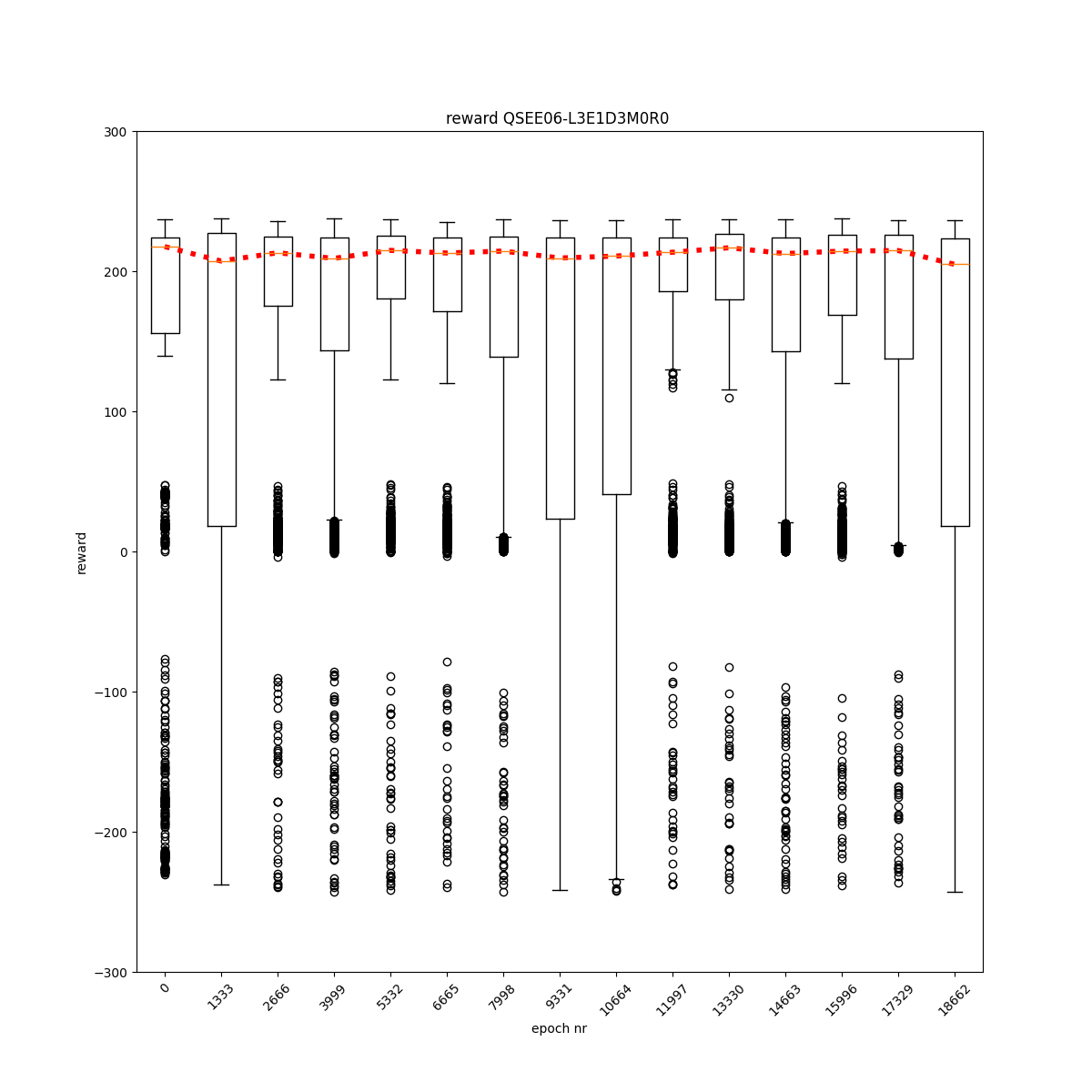

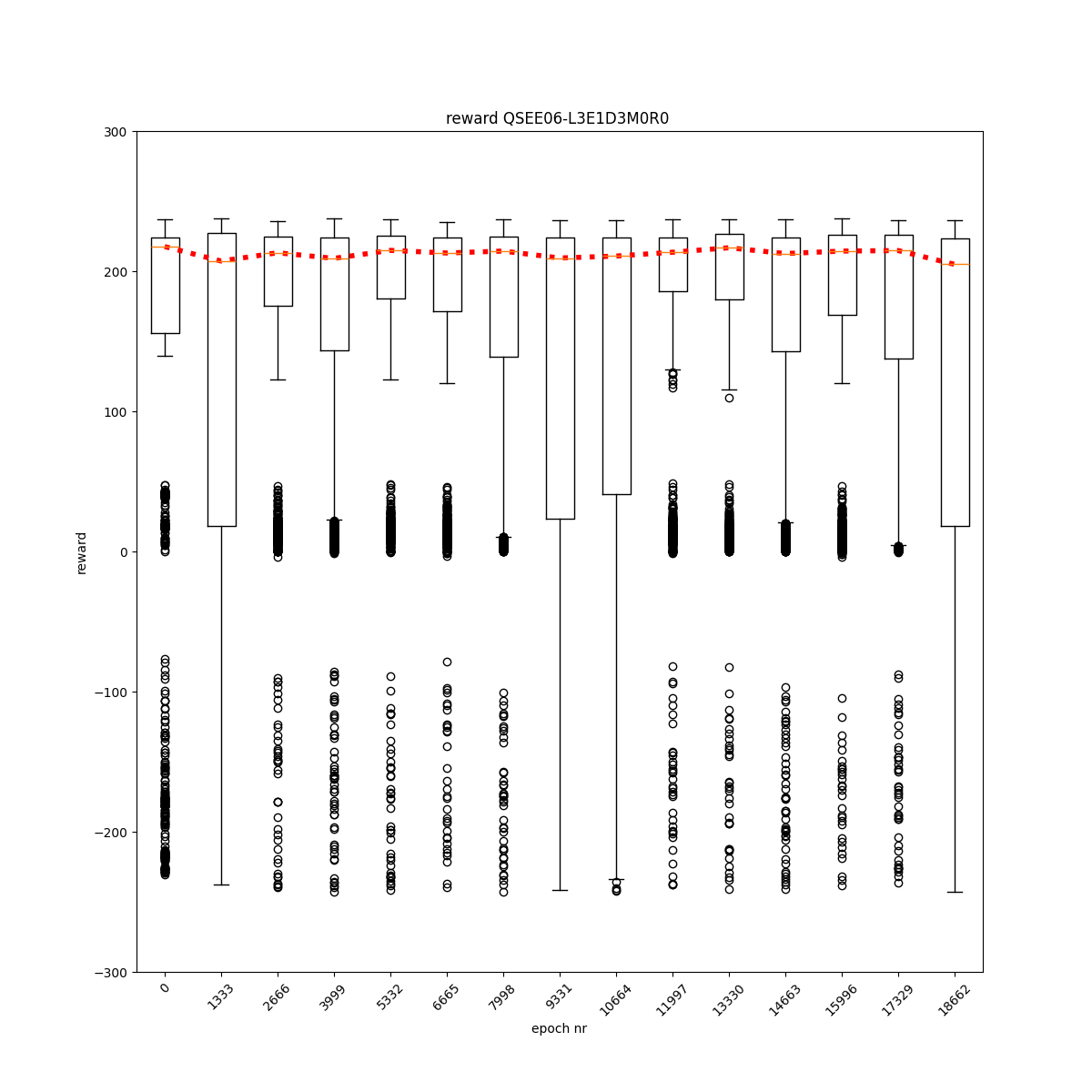

L3 E1 D3 M0 R0

q-values: videos 0-11 (two panels)

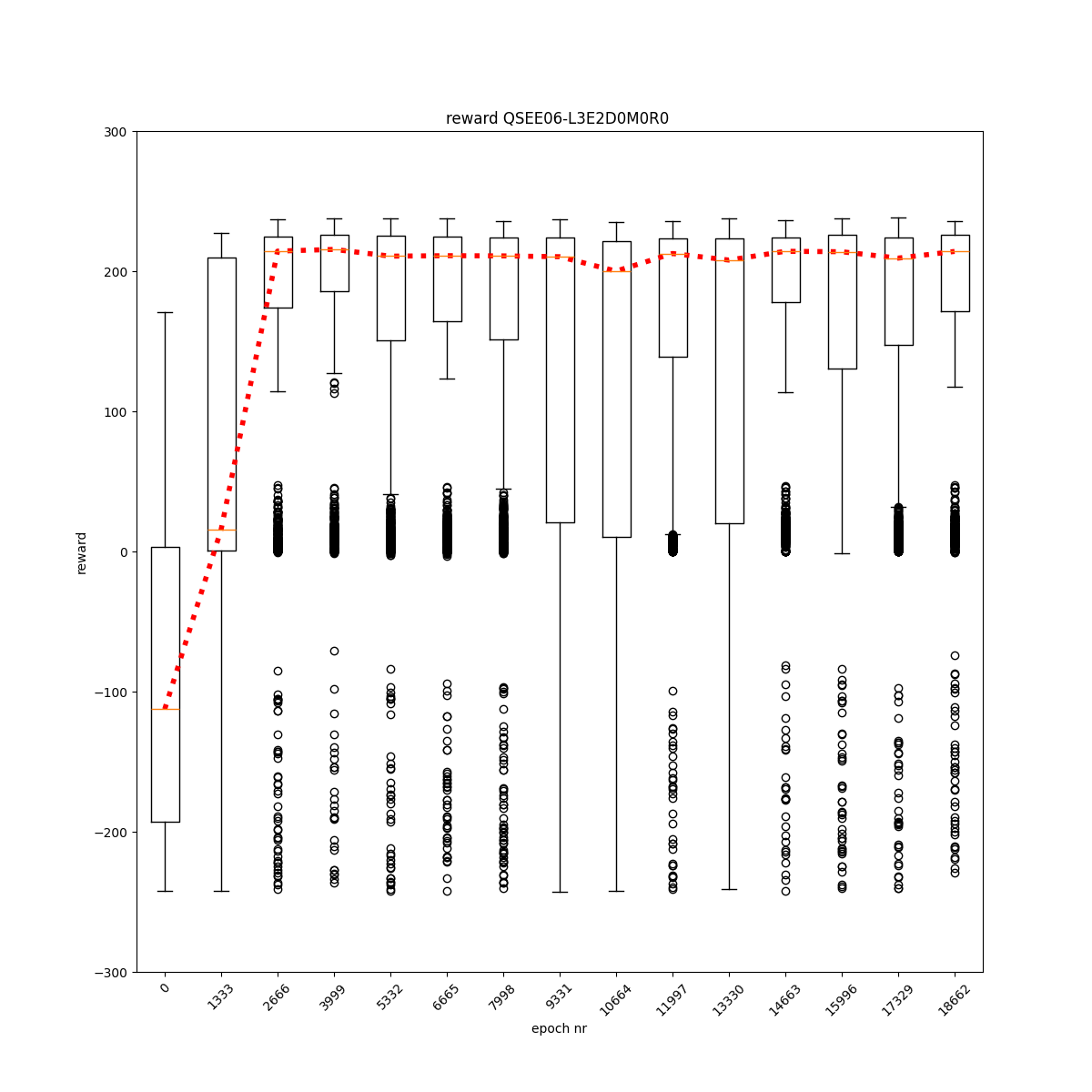

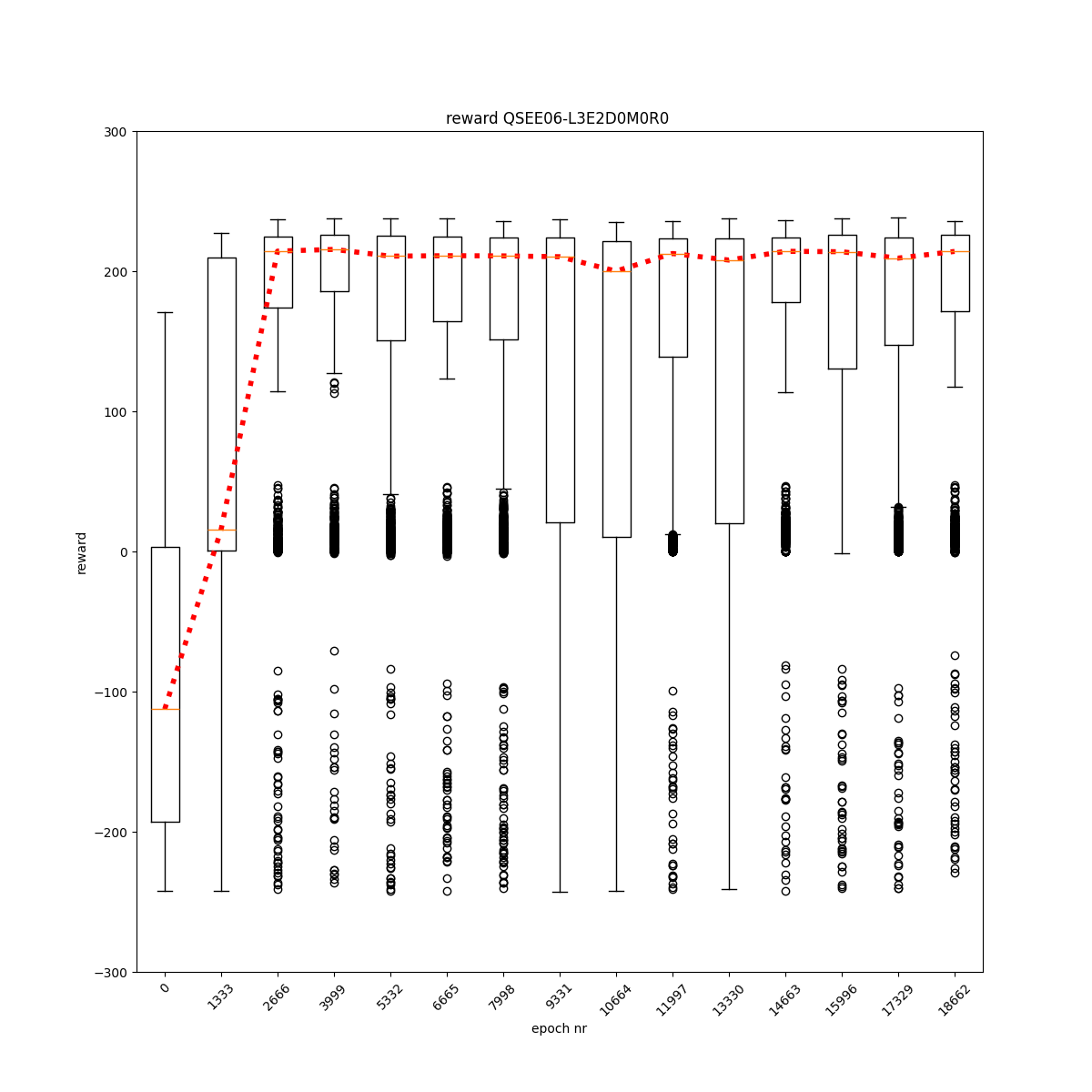

L3 E2 D0 M0 R0

q-values: videos 0-11 (two panels)

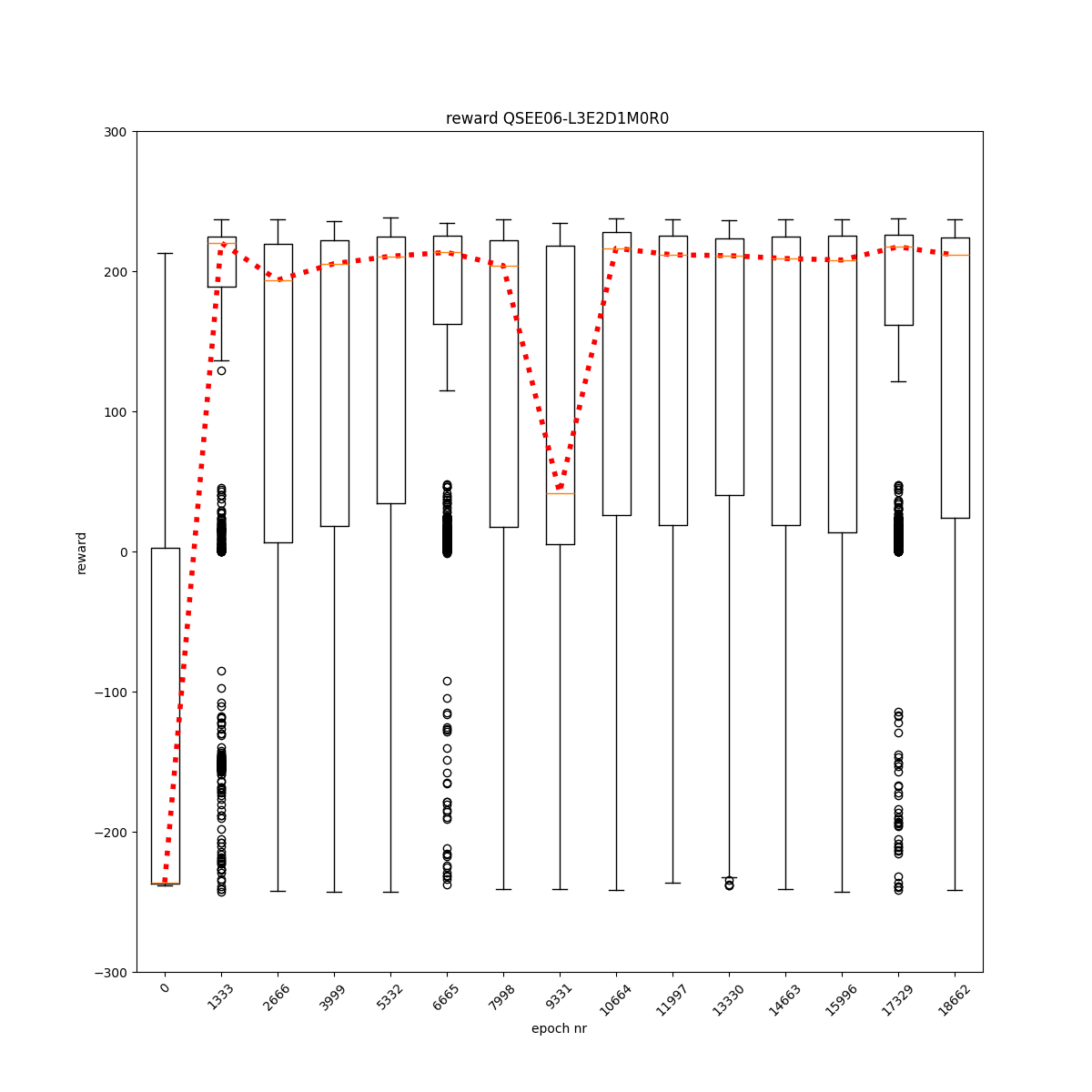

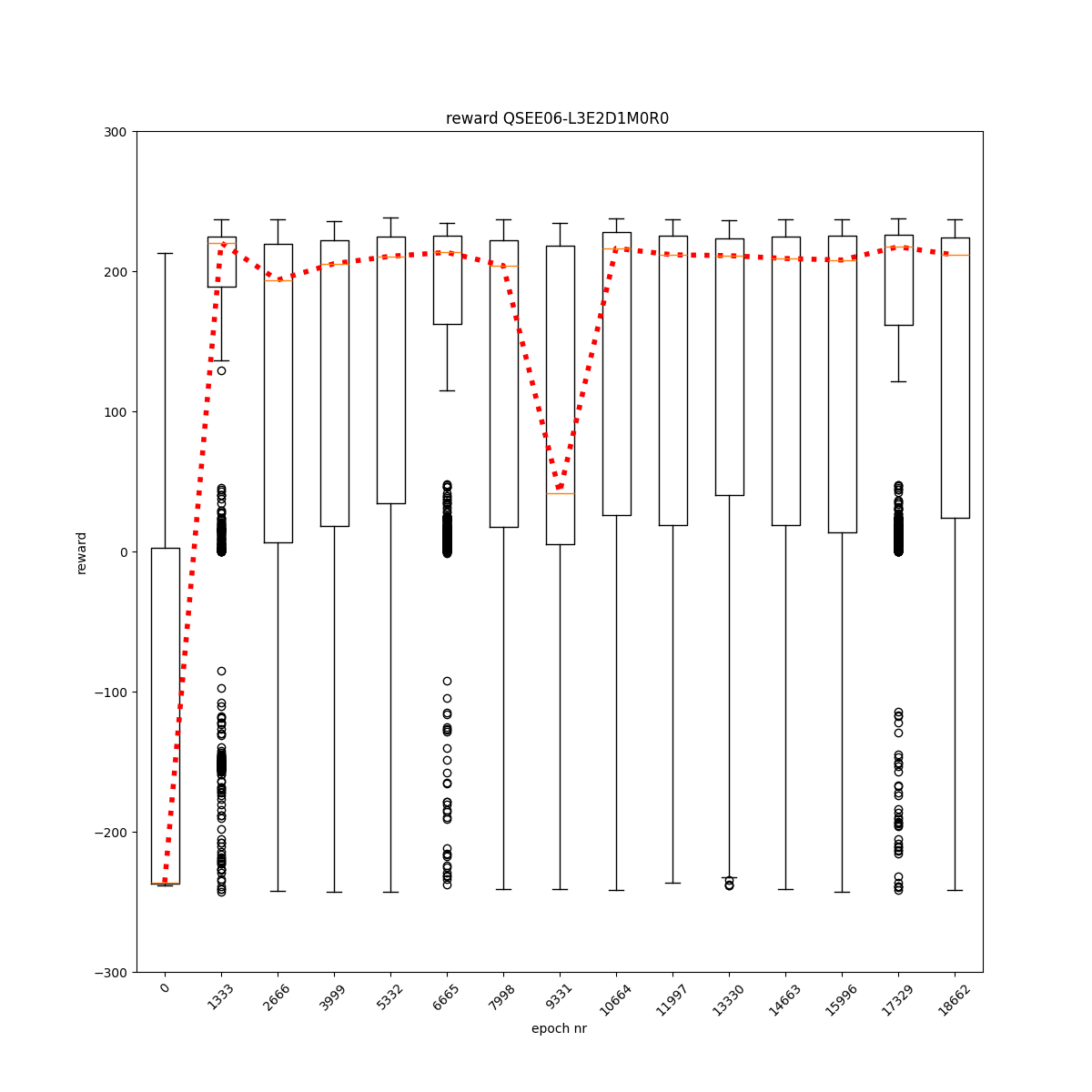

L3 E2 D1 M0 R0

q-values: videos 0-11 (two panels)

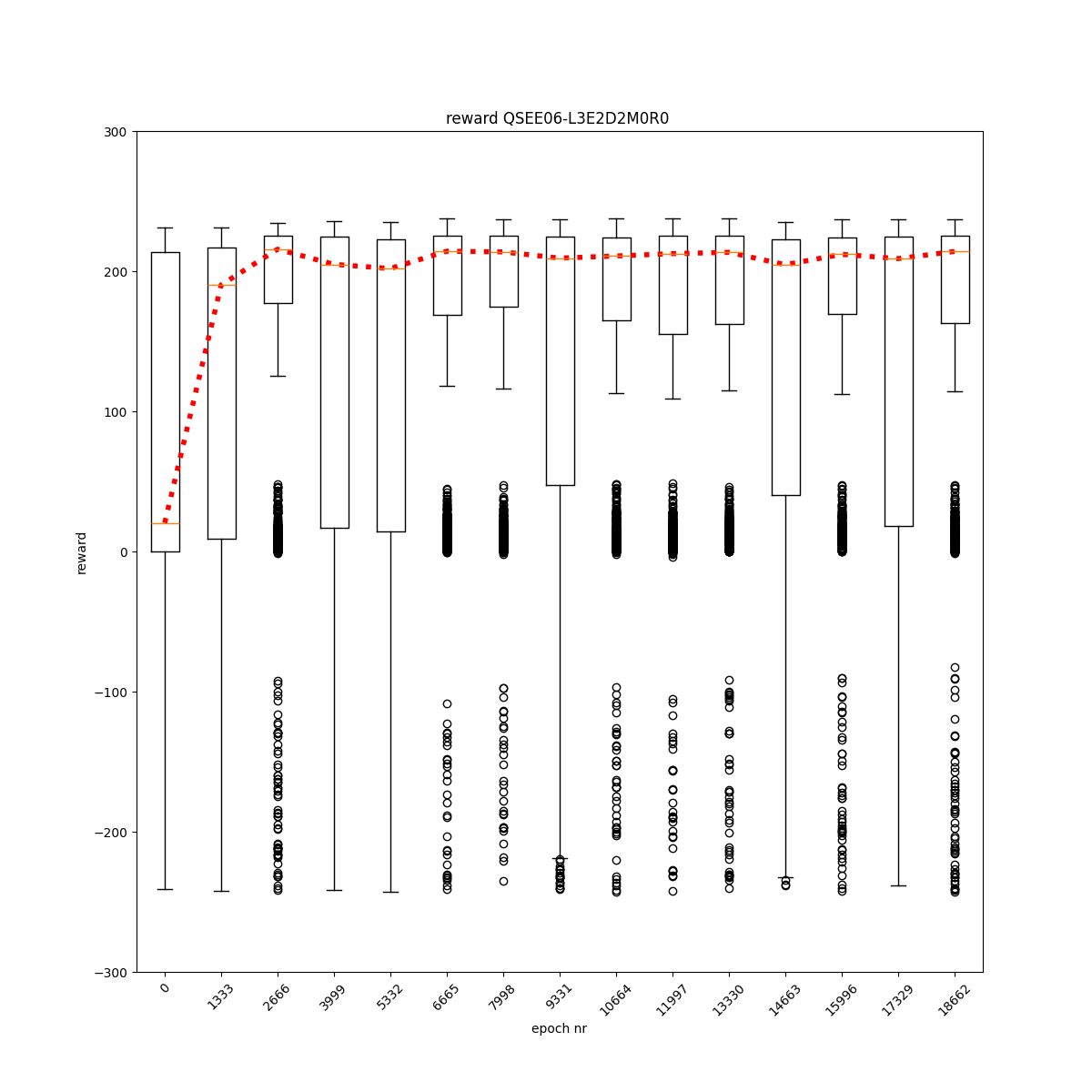

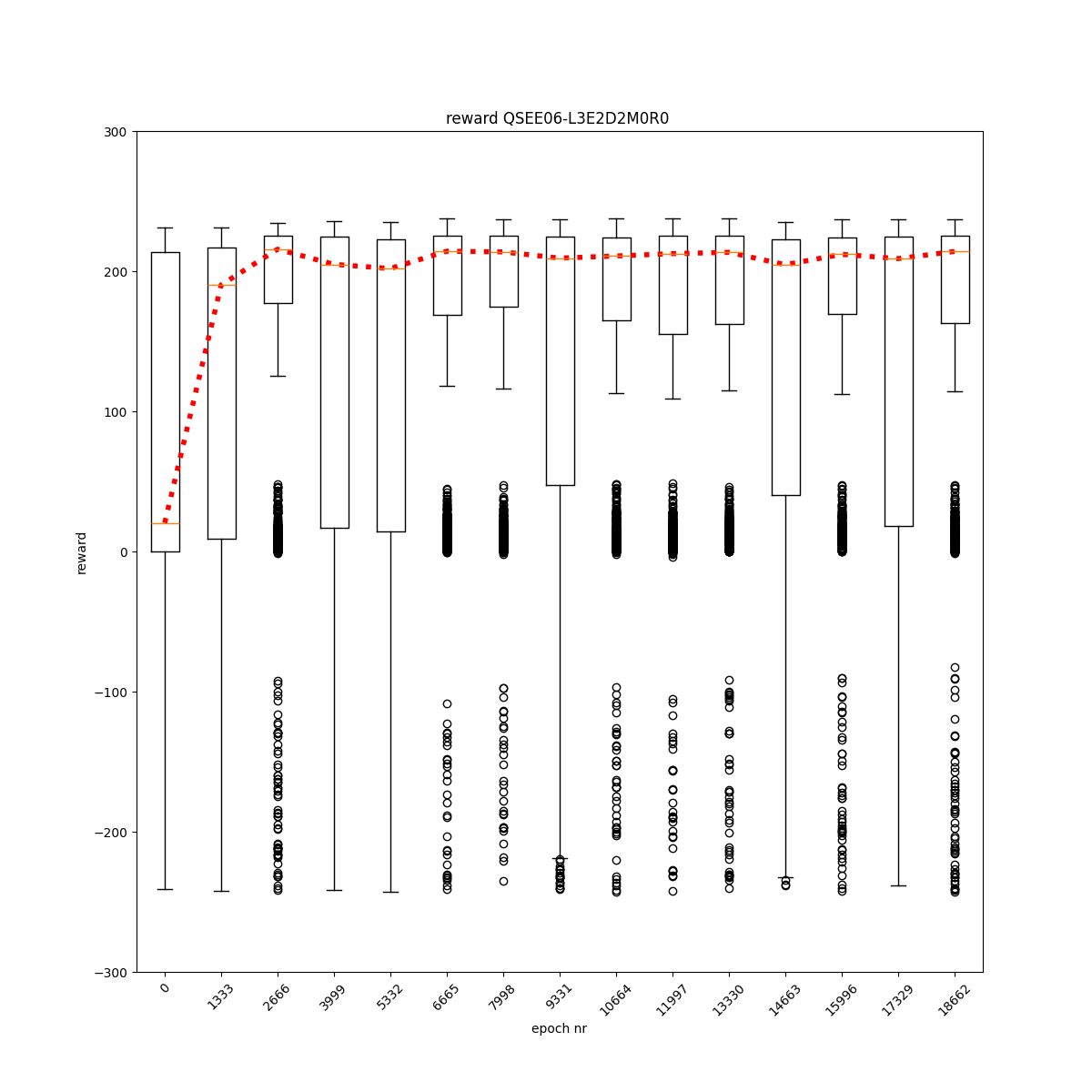

L3 E2 D2 M0 R0

q-values: videos 0-11 (two panels)

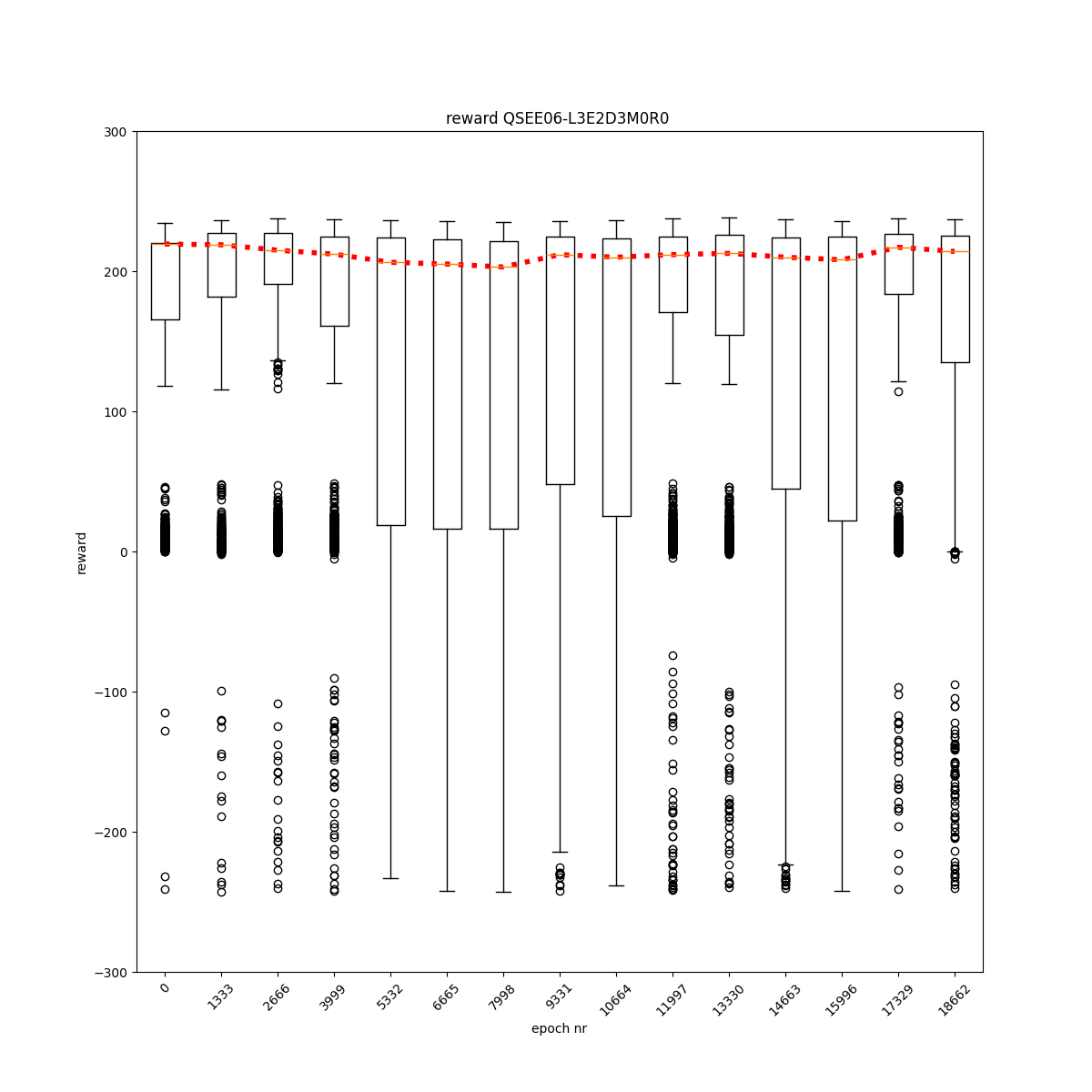

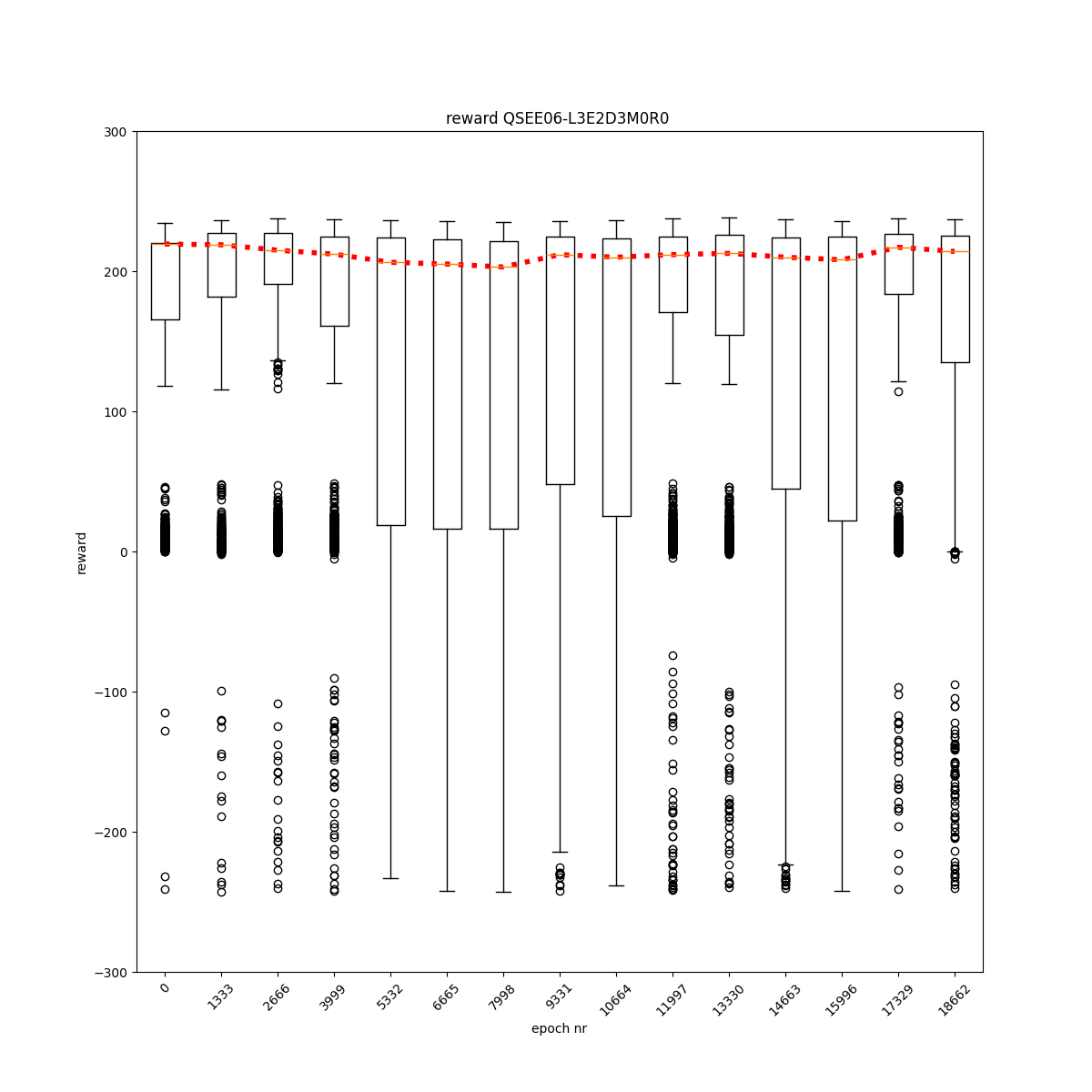

L3 E2 D3 M0 R0

q-values: videos 0-11 (two panels)

L3 E3 D0 M0 R0

q-values: videos 0-11 (two panels)

L3 E3 D1 M0 R0

q-values: videos 0-11 (two panels)

L3 E3 D2 M0 R0

q-values: videos 0-11 (two panels)

L3 E3 D3 M0 R0

q-values: videos 0-11 (two panels)

L3 E4 D0 M0 R0

q-values: videos 0-11 (two panels)

L3 E4 D1 M0 R0

q-values: videos 0-11 (two panels)

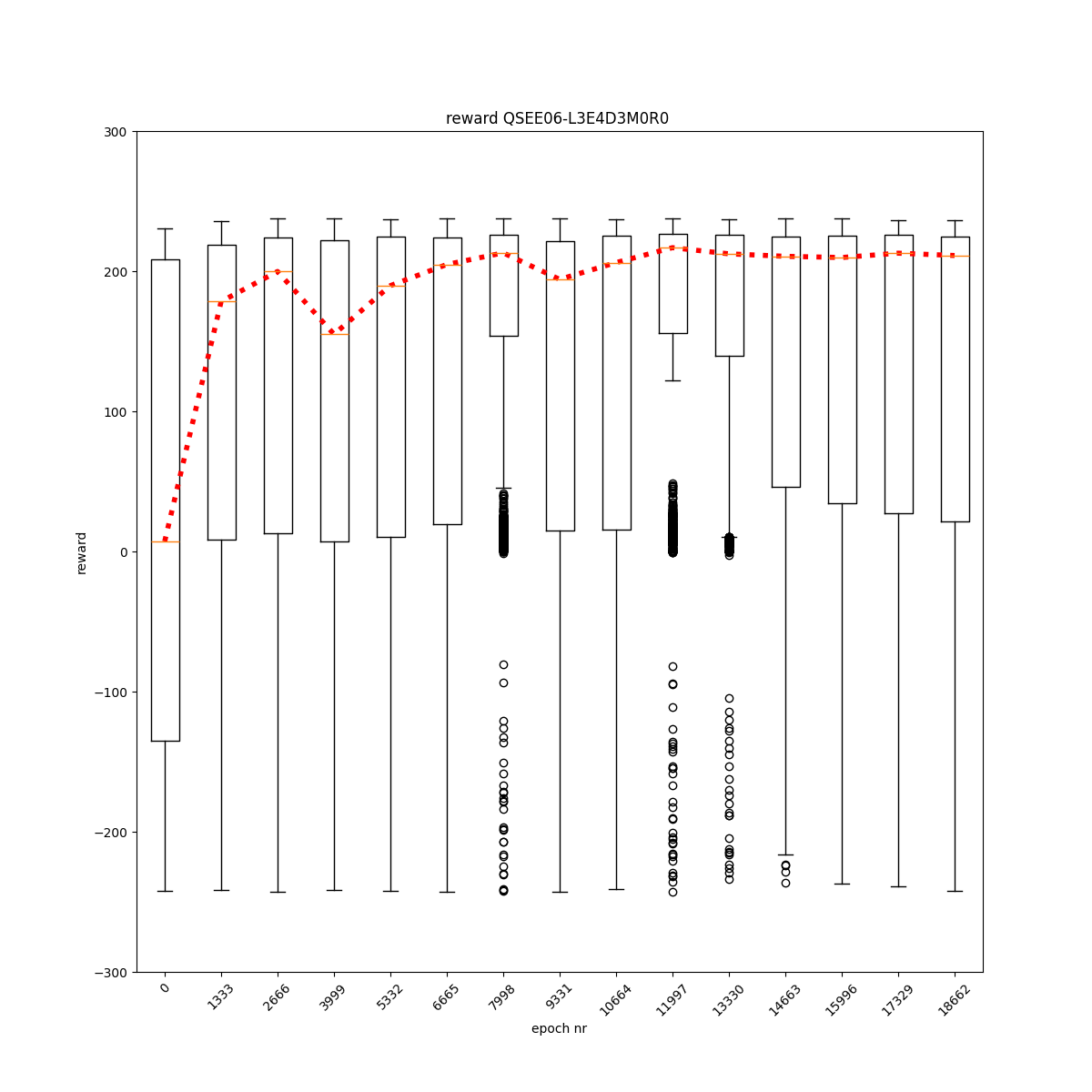

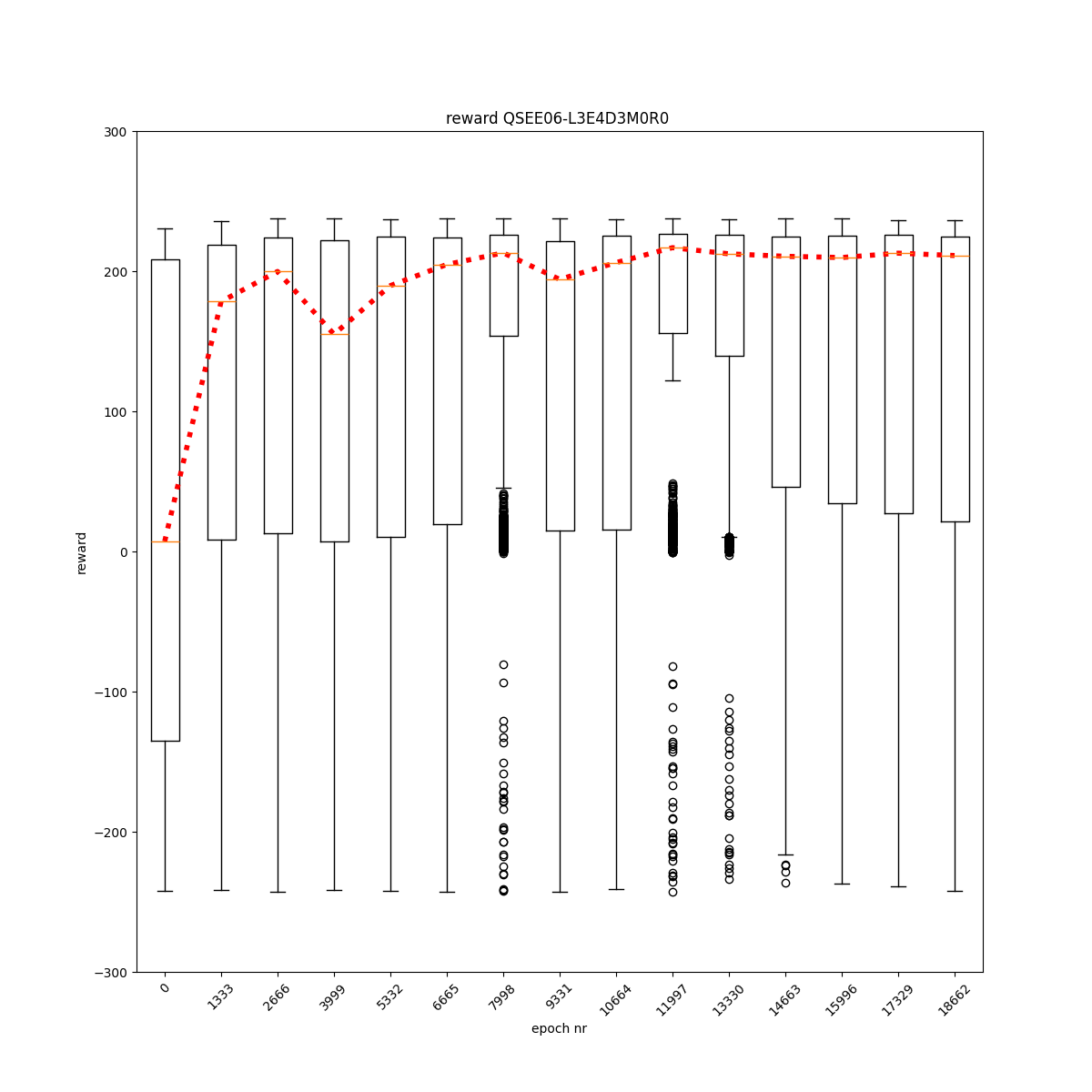

L3 E4 D2 M0 R0

q-values: videos 0-11 (two panels)

L3 E4 D3 M0 R0

q-values: videos 0-11 (two panels)

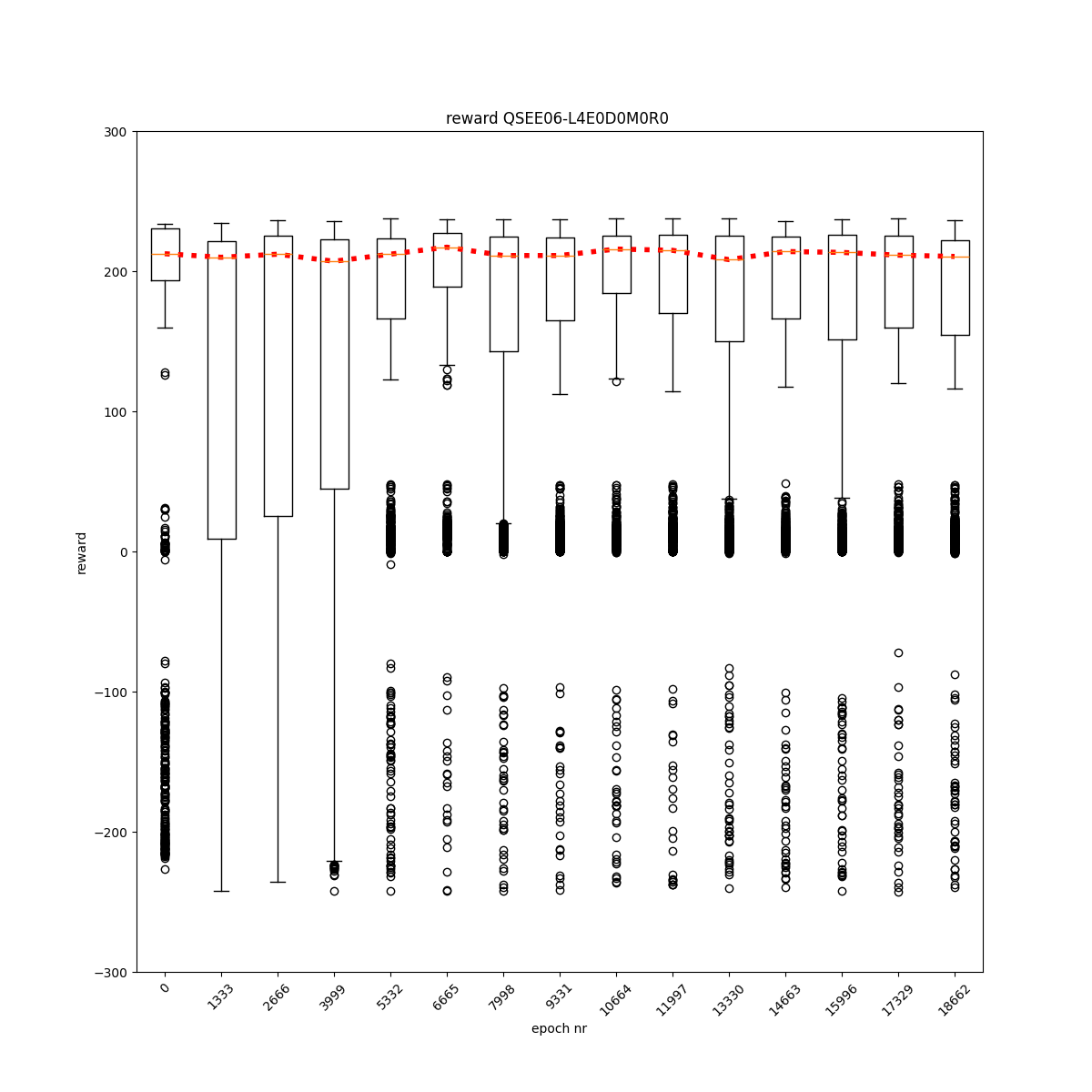

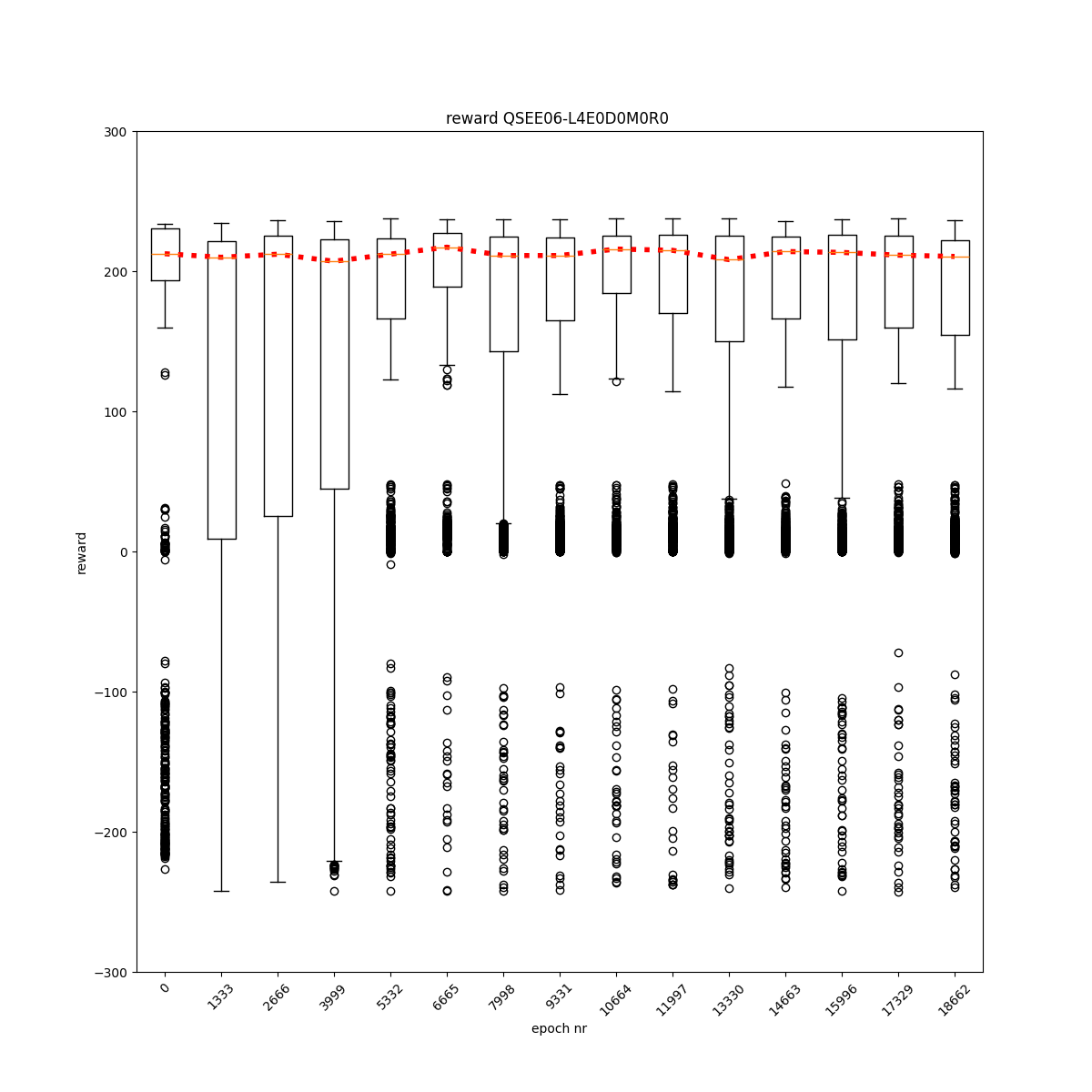

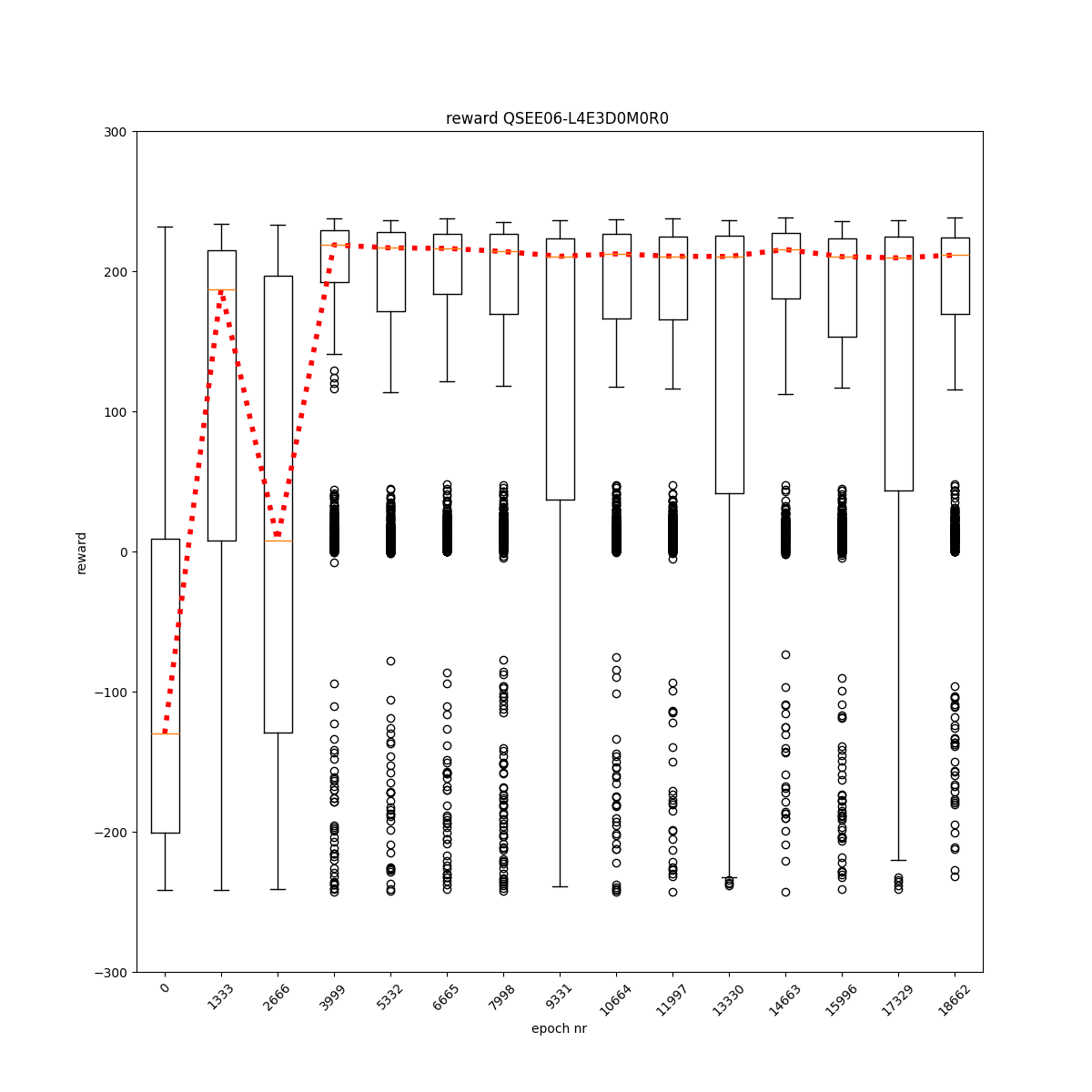

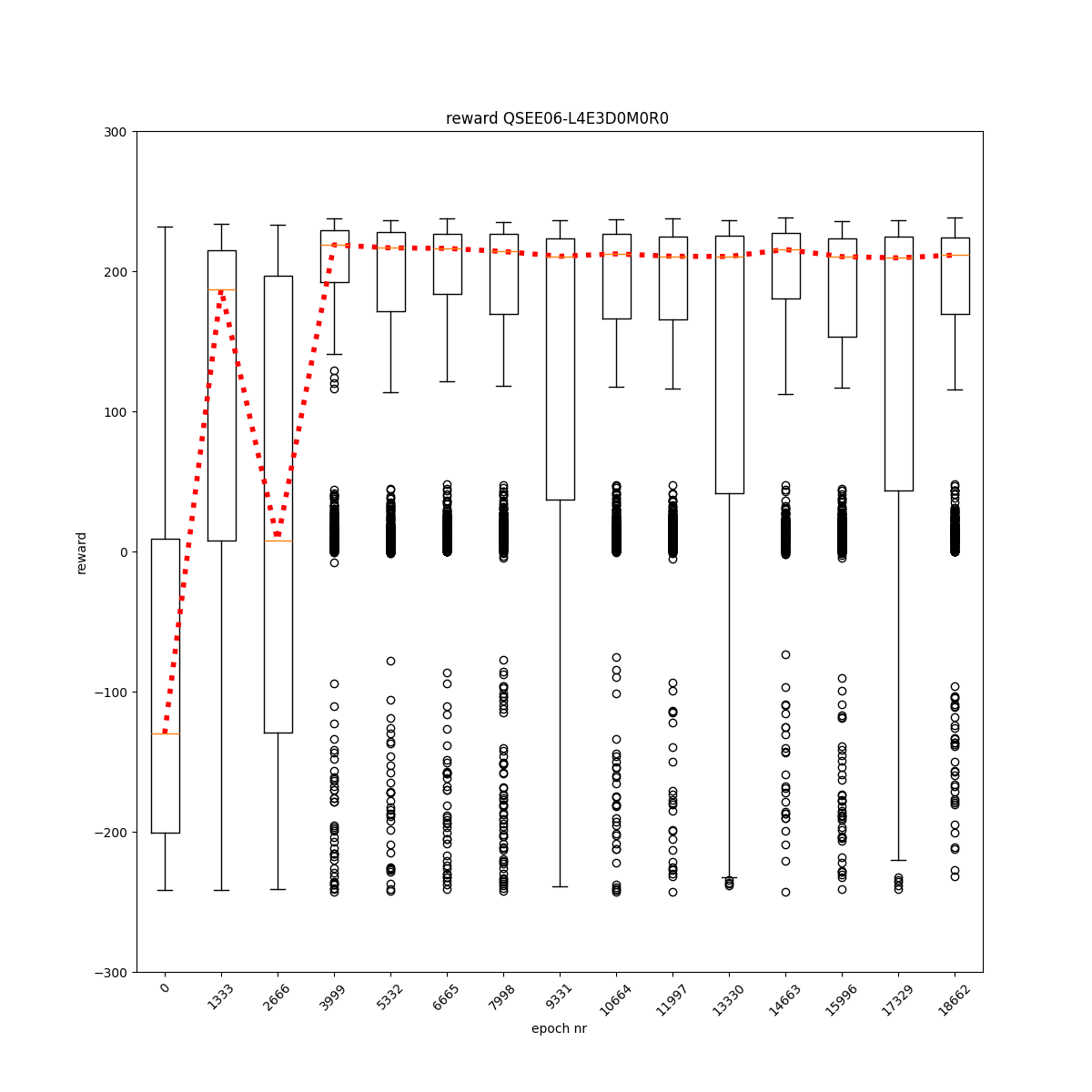

L4 E0 D0 M0 R0

q-values: videos 0-11 (two panels)

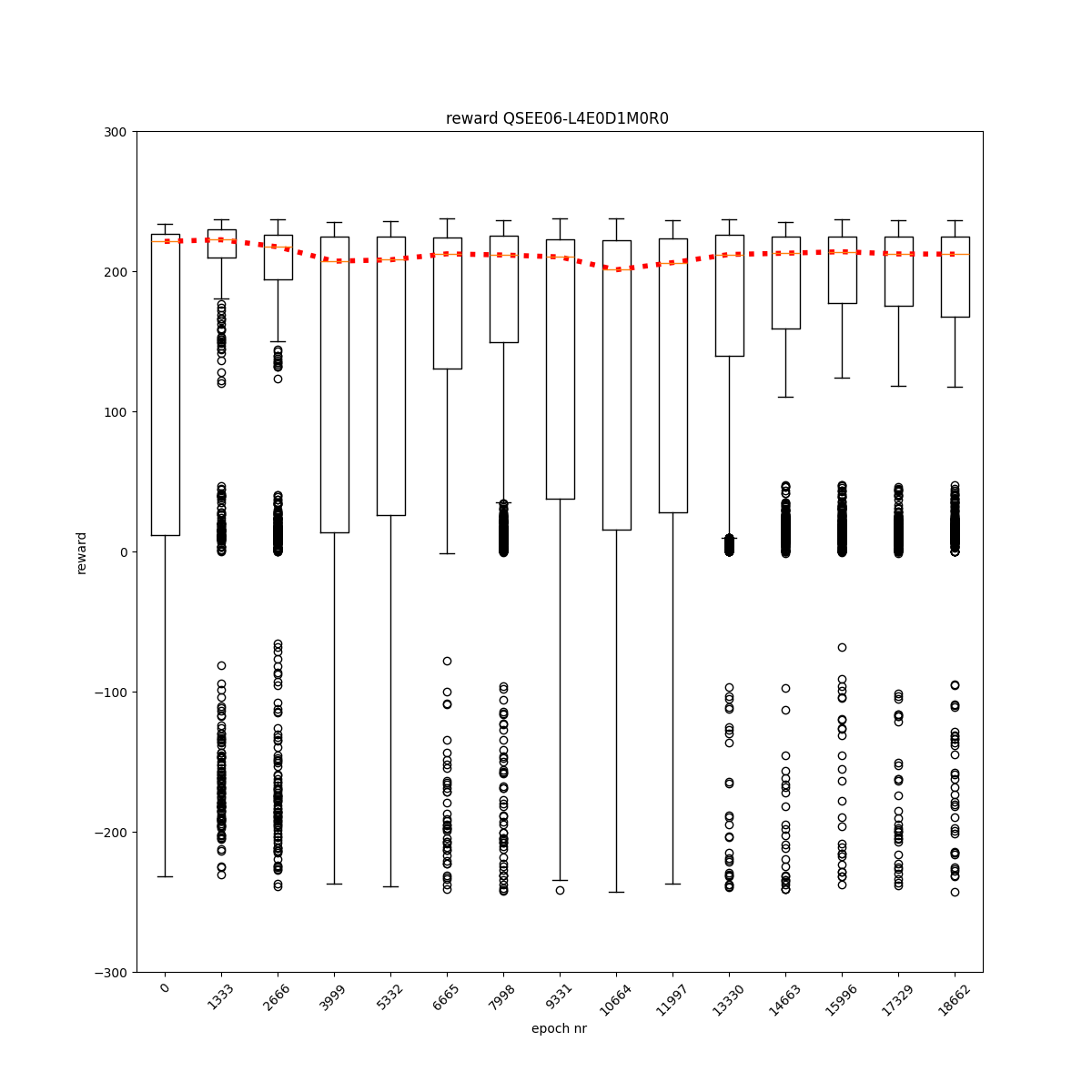

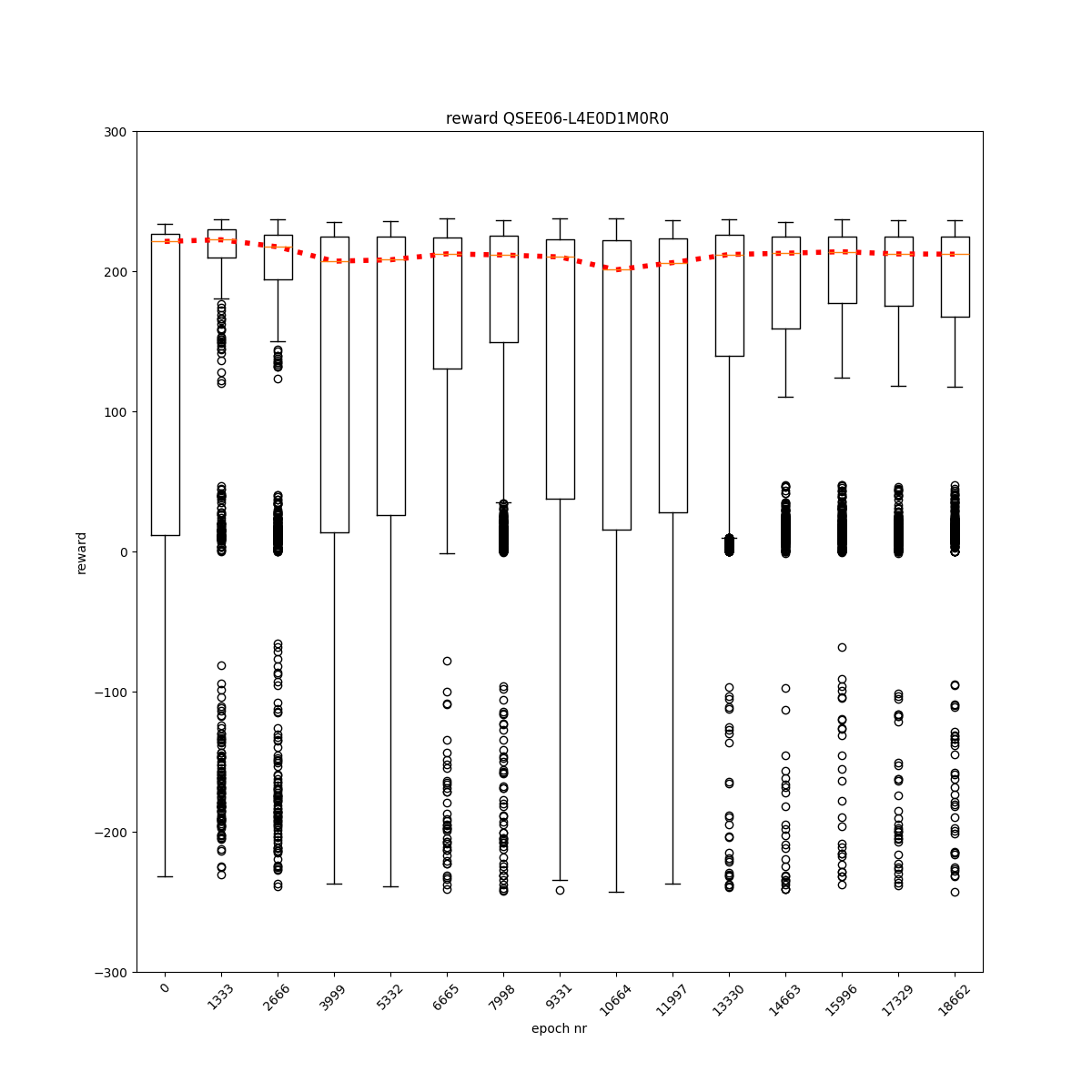

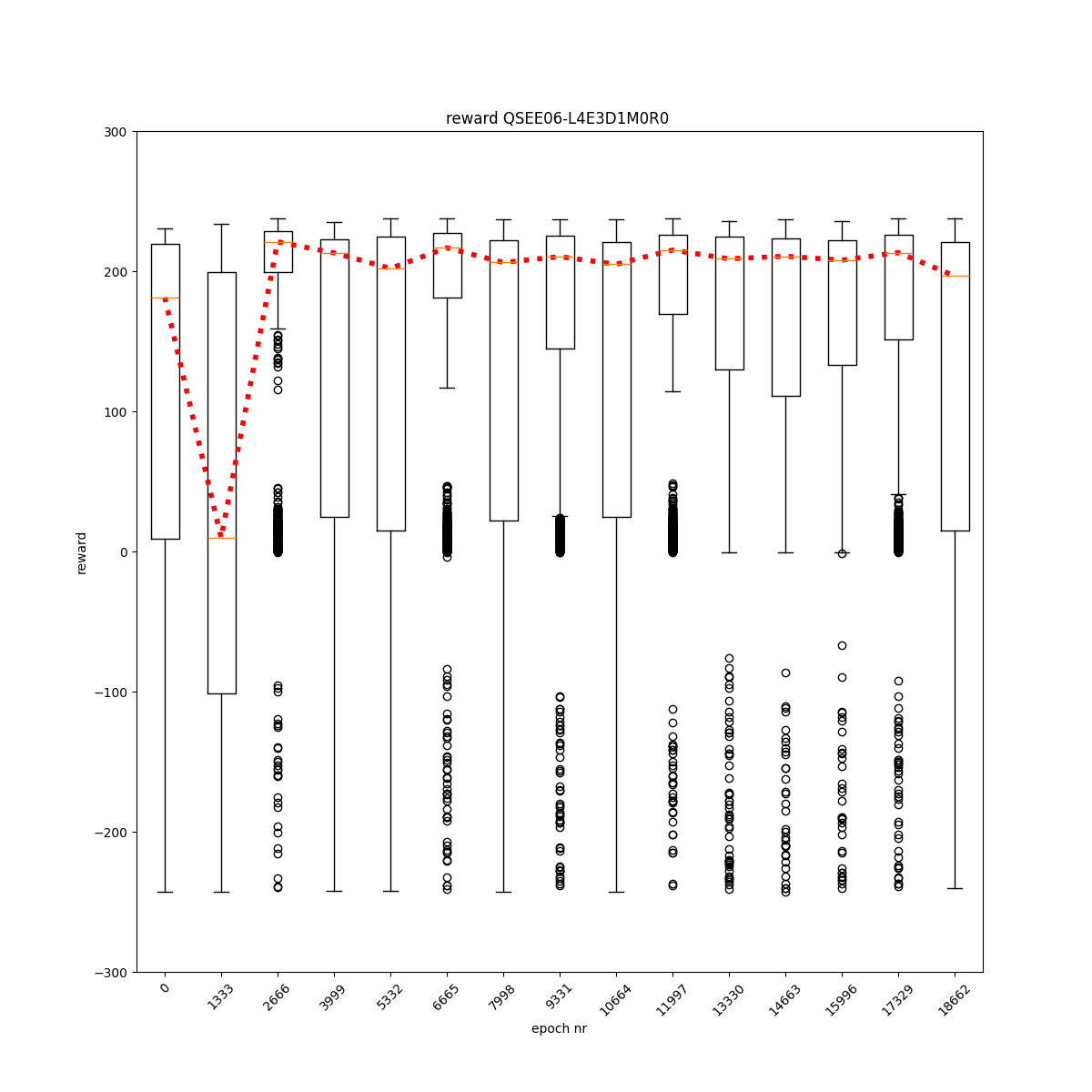

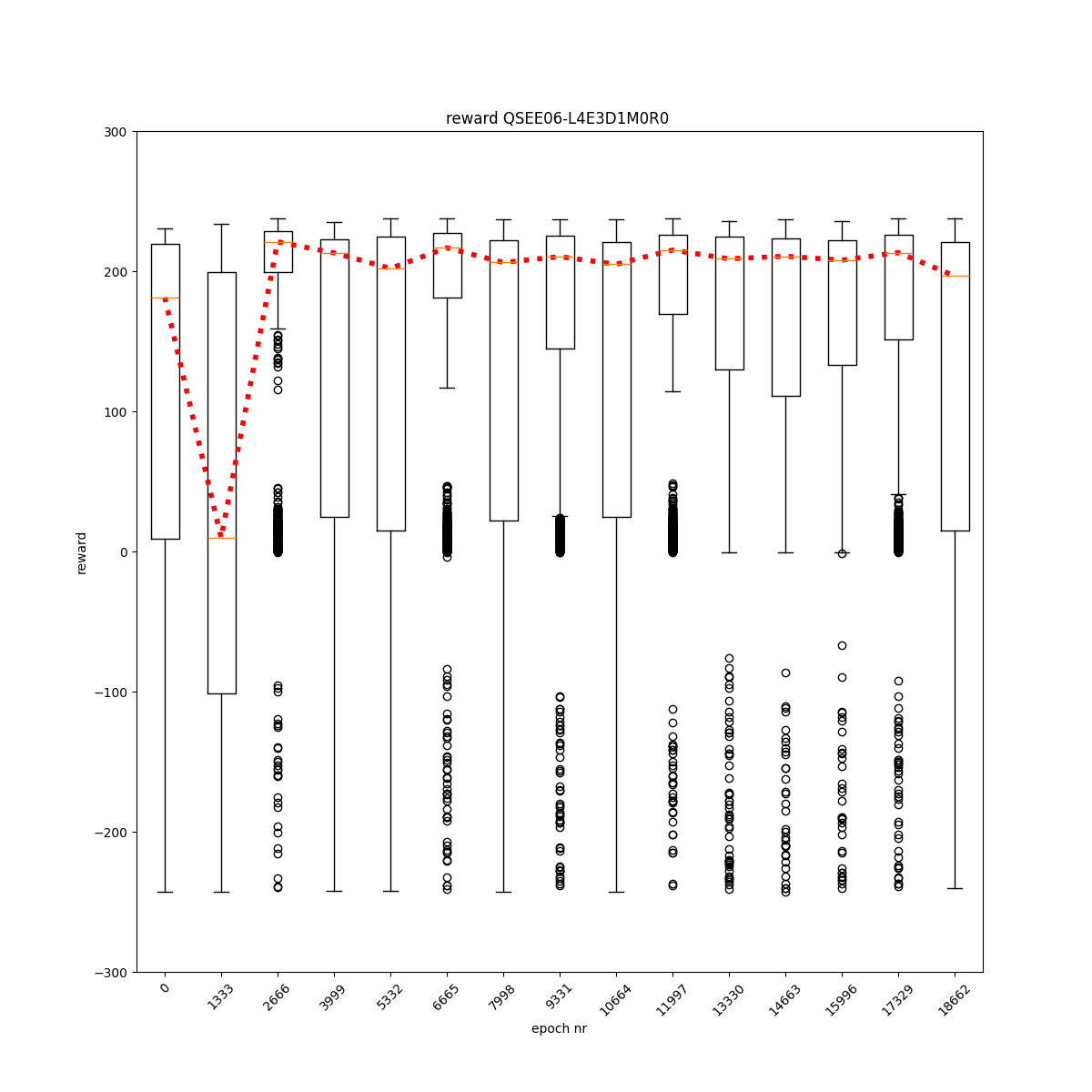

L4 E0 D1 M0 R0

q-values: videos 0-11 (two panels)

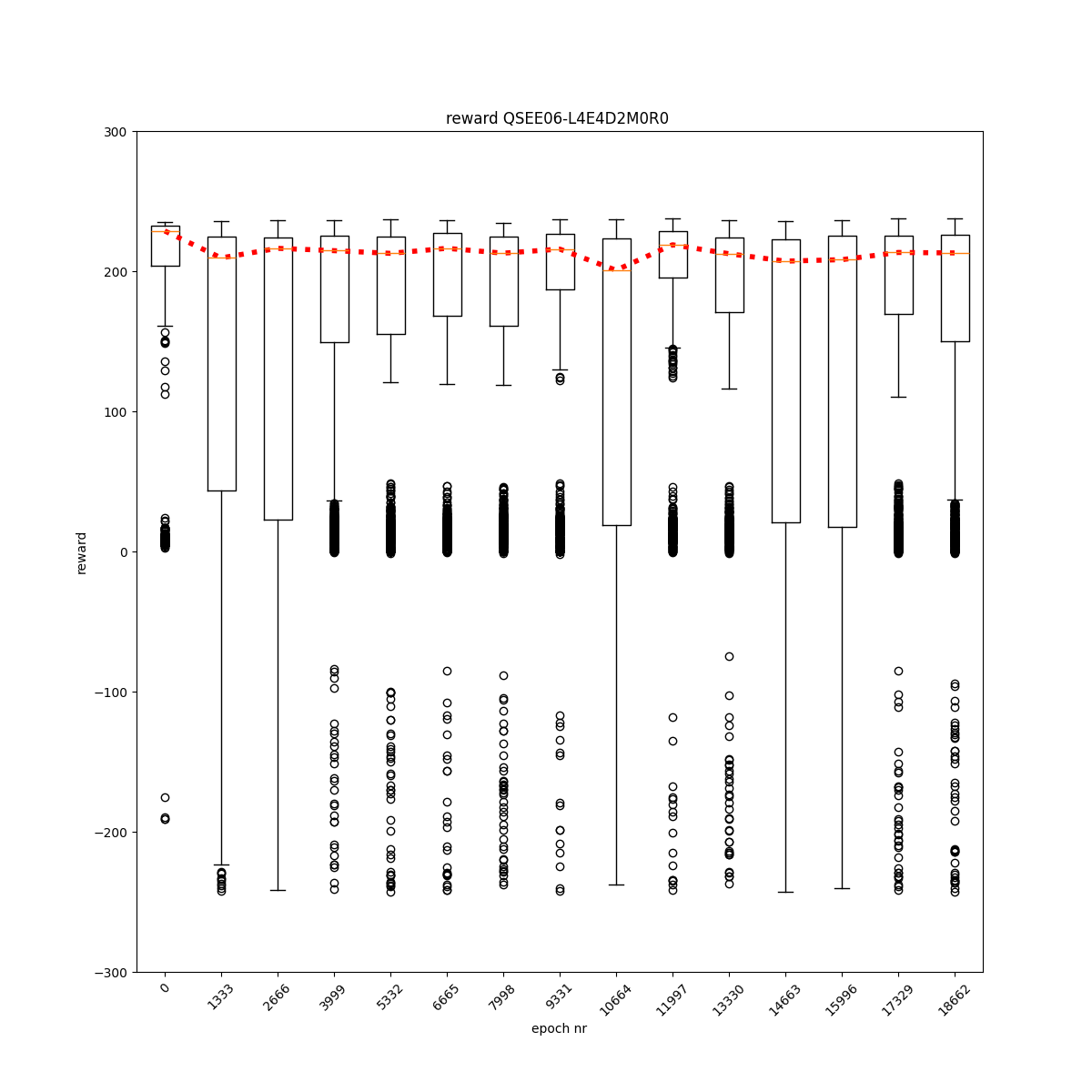

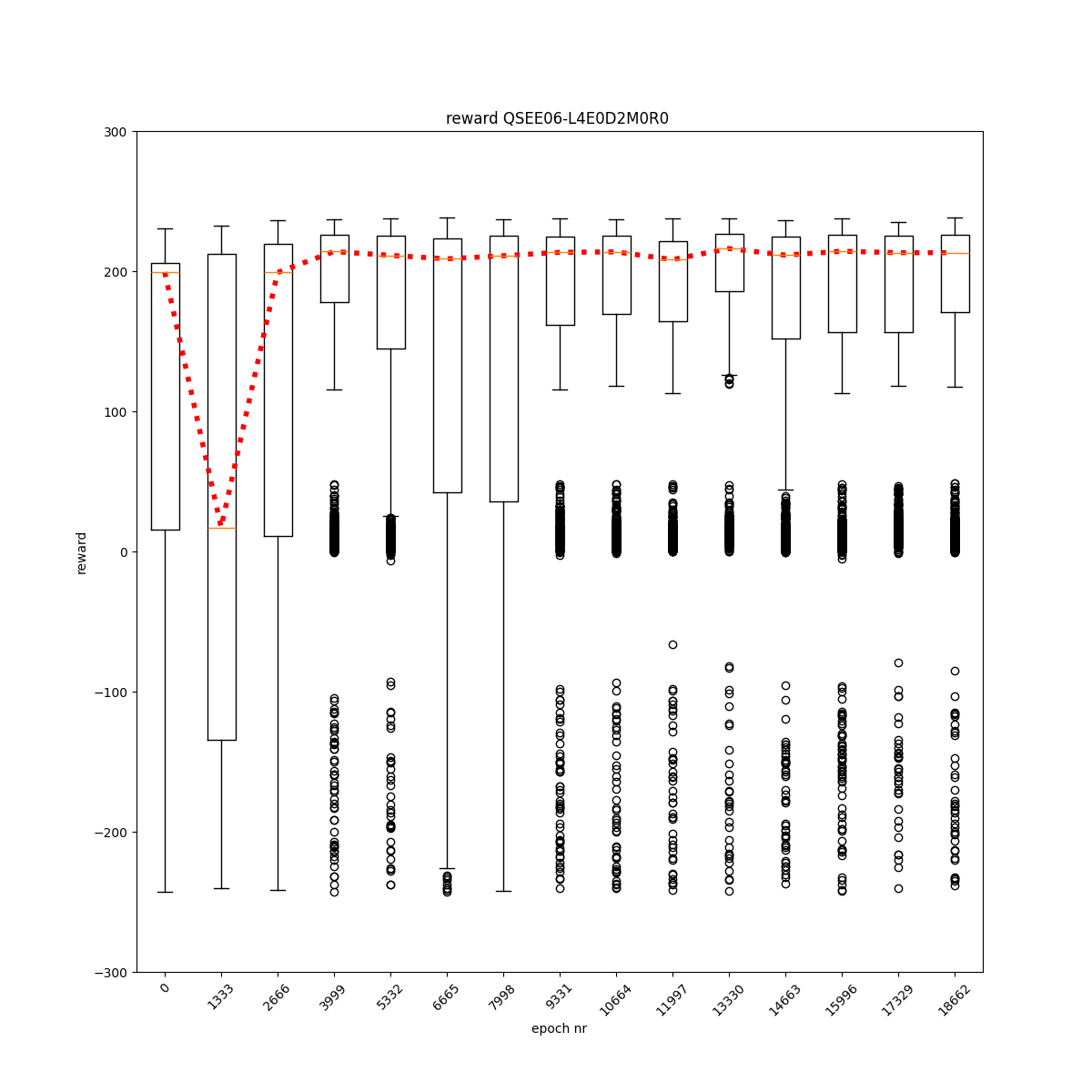

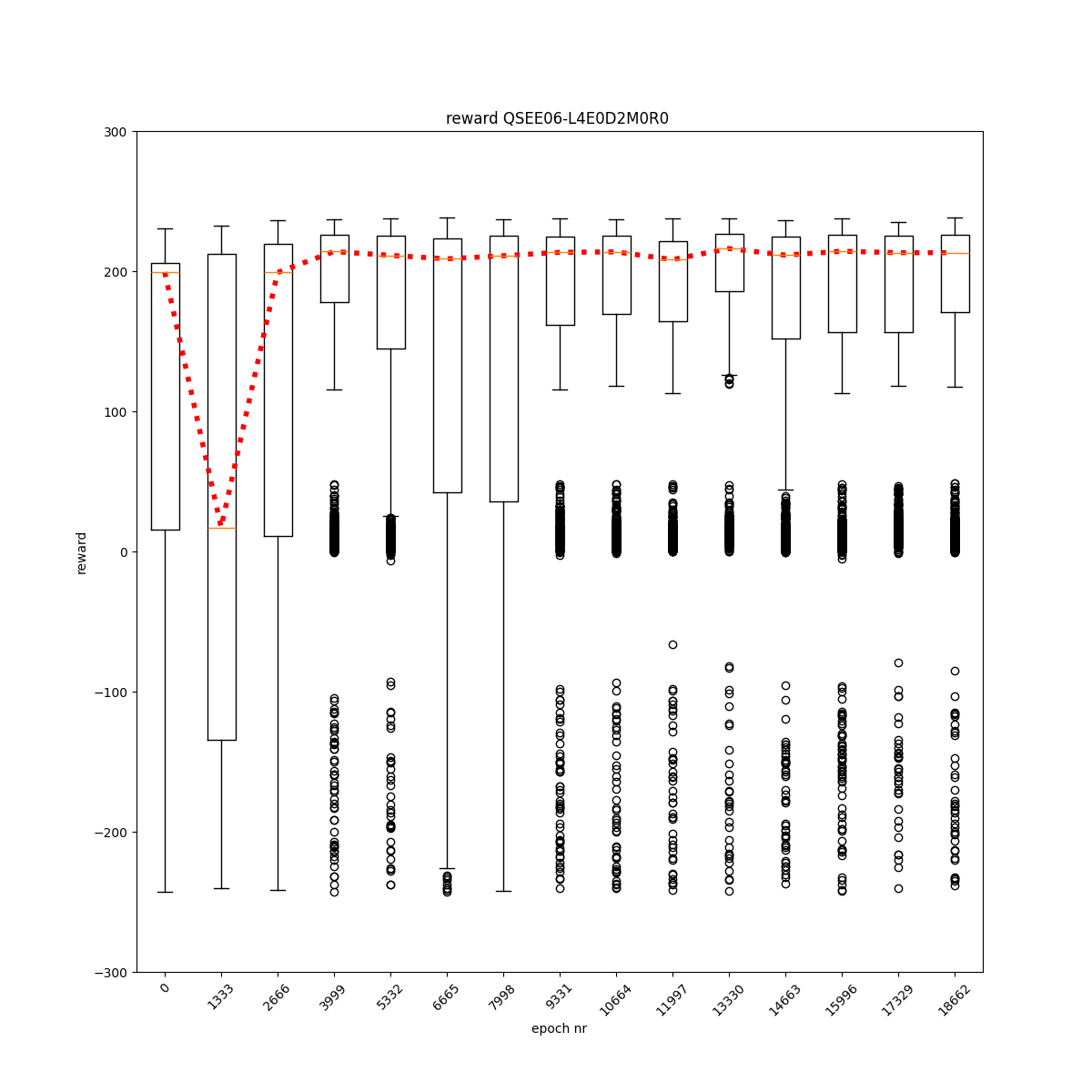

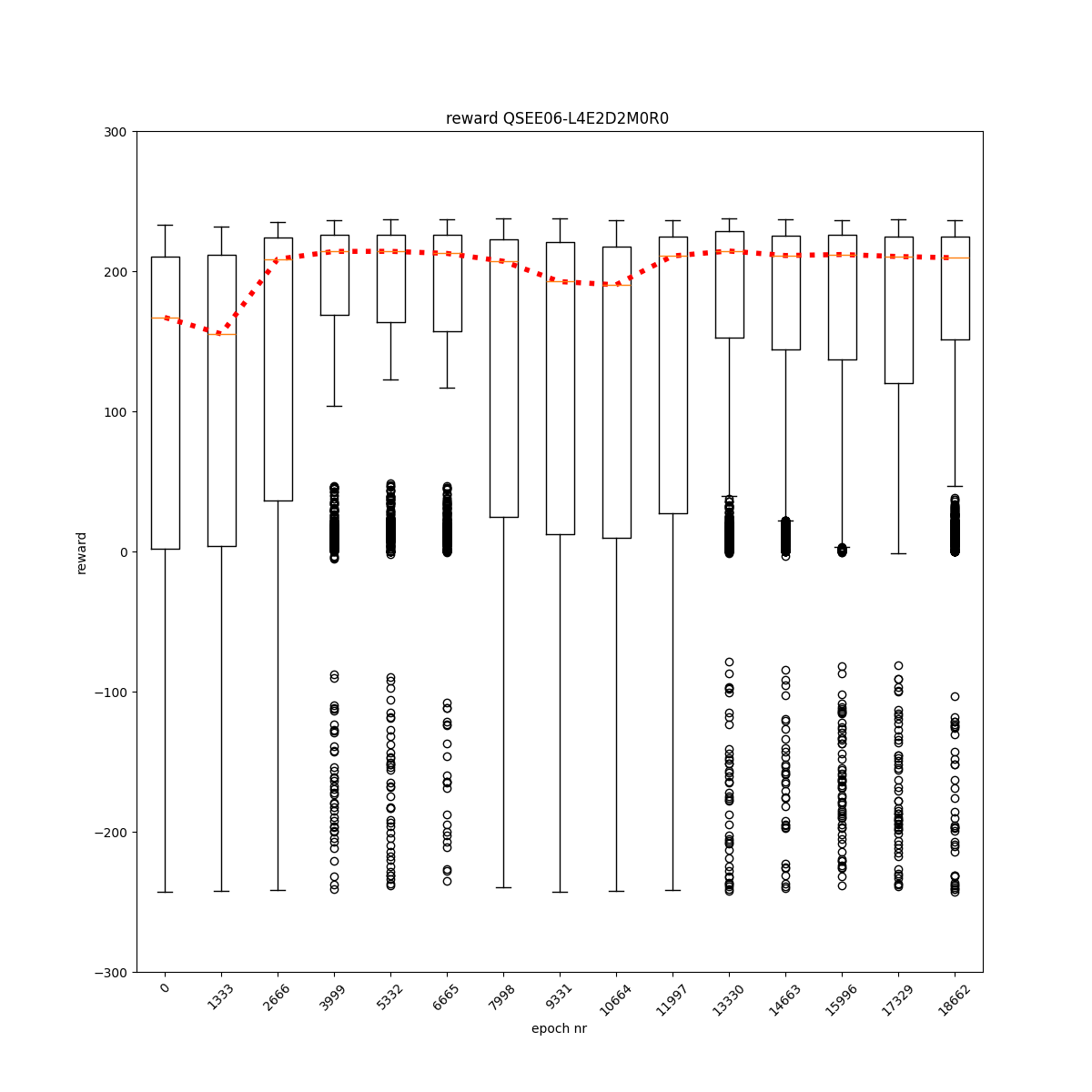

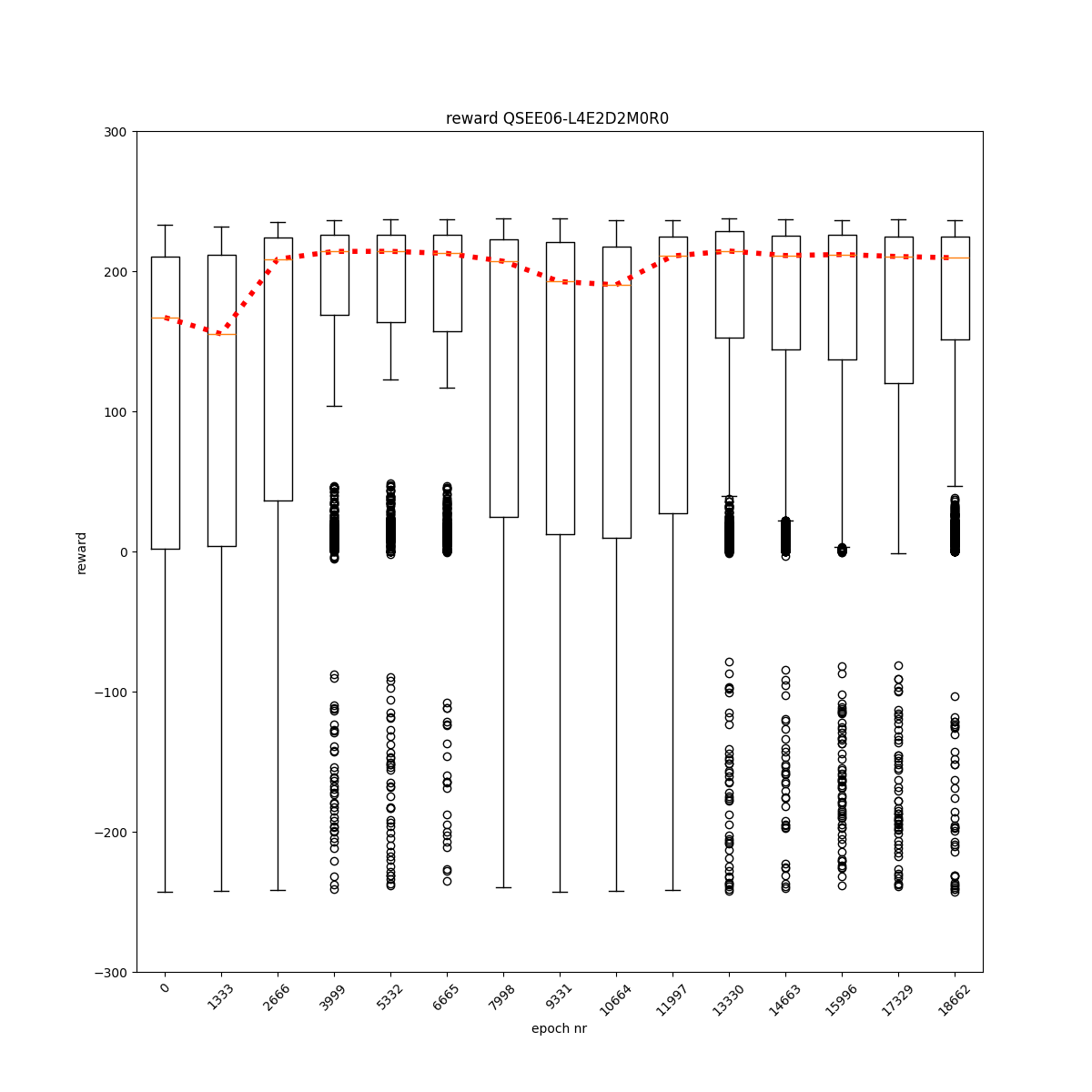

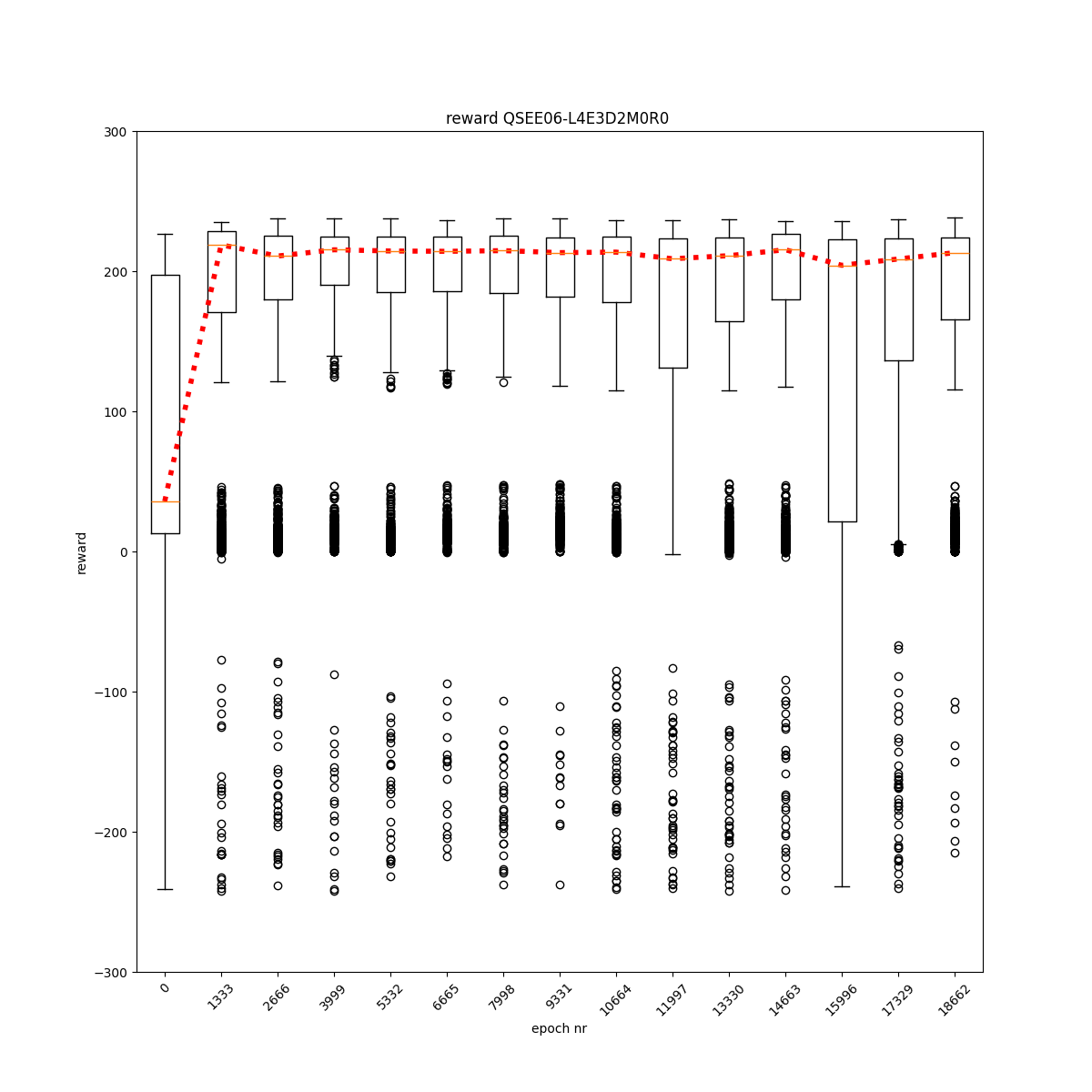

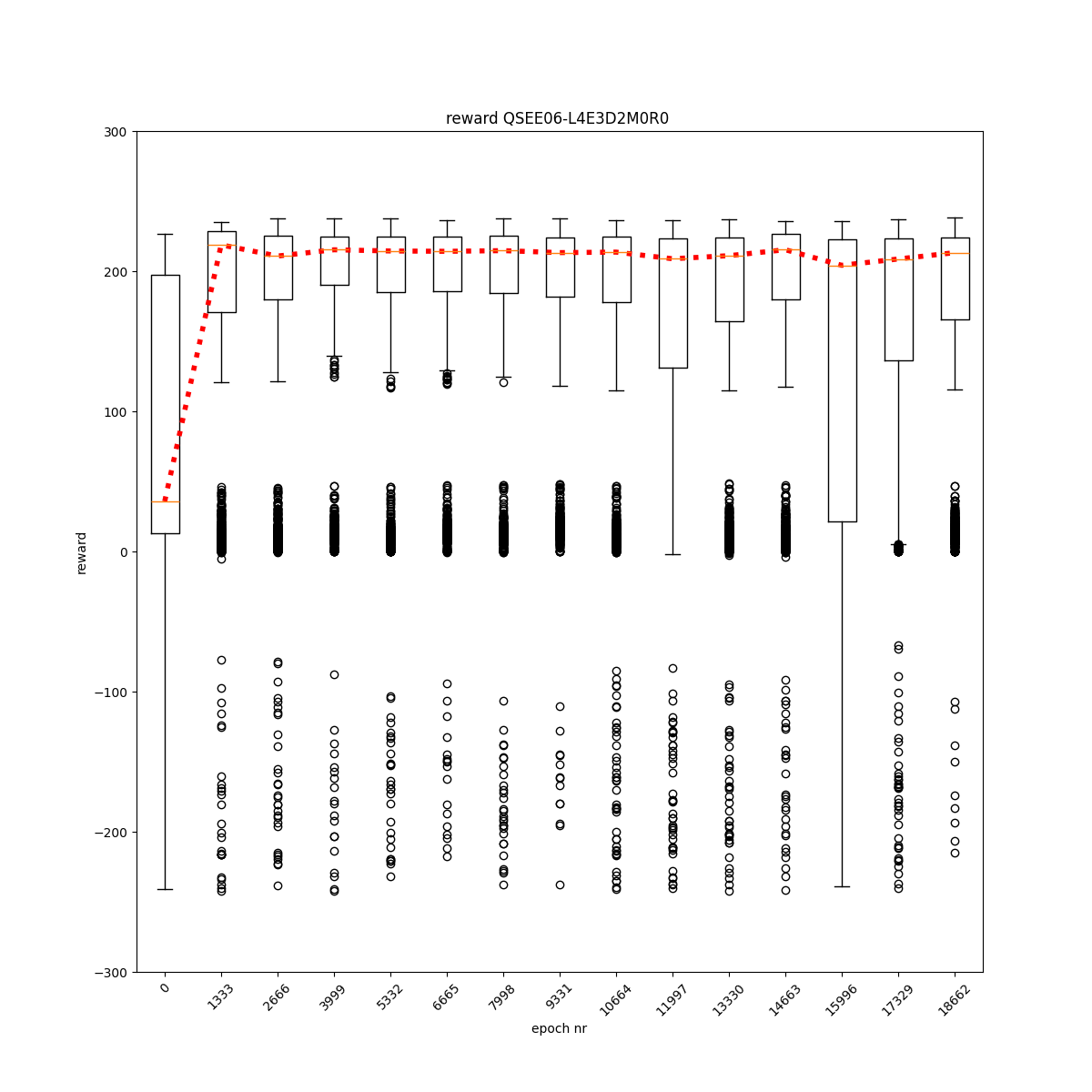

L4 E0 D2 M0 R0

q-values: videos 0-11 (two panels)

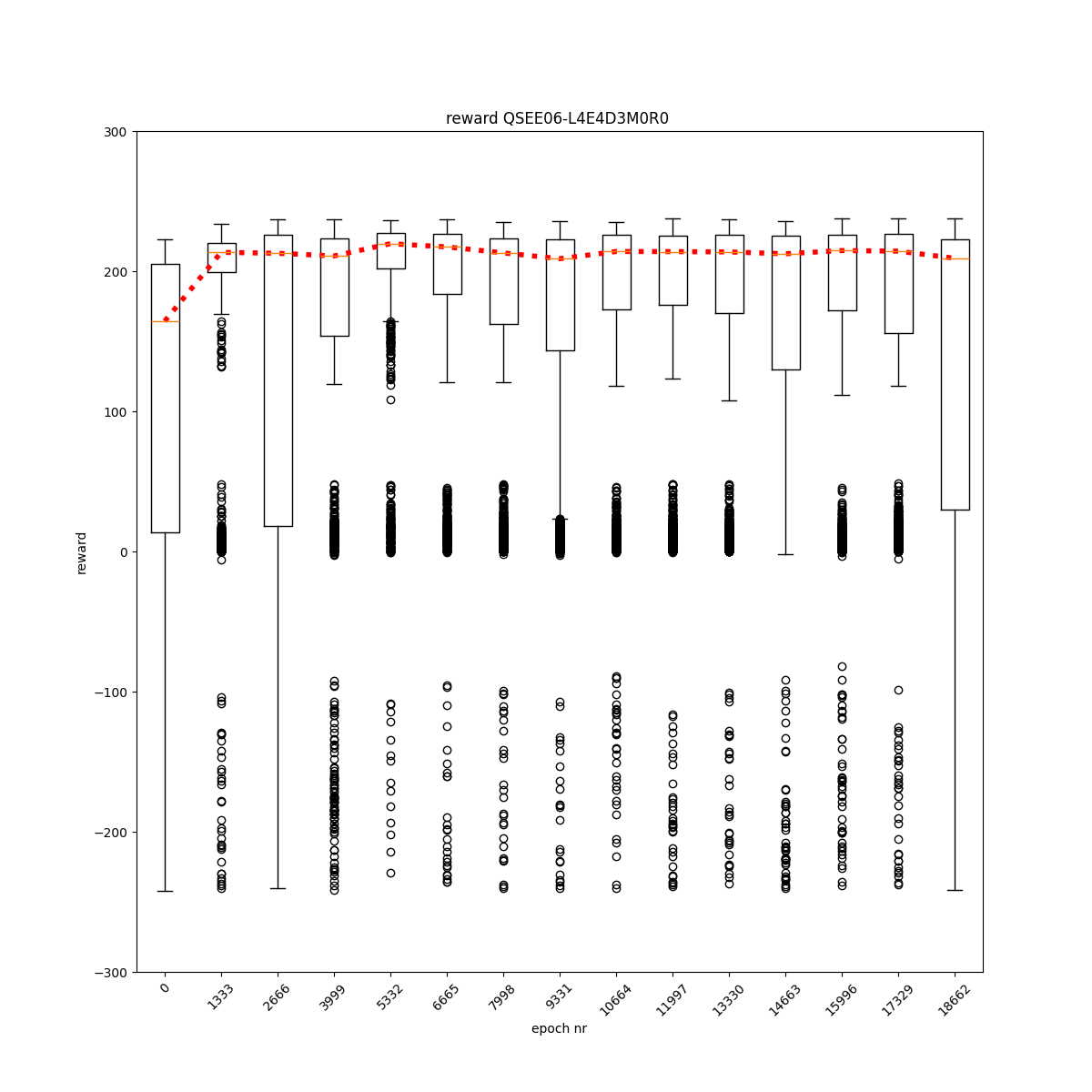

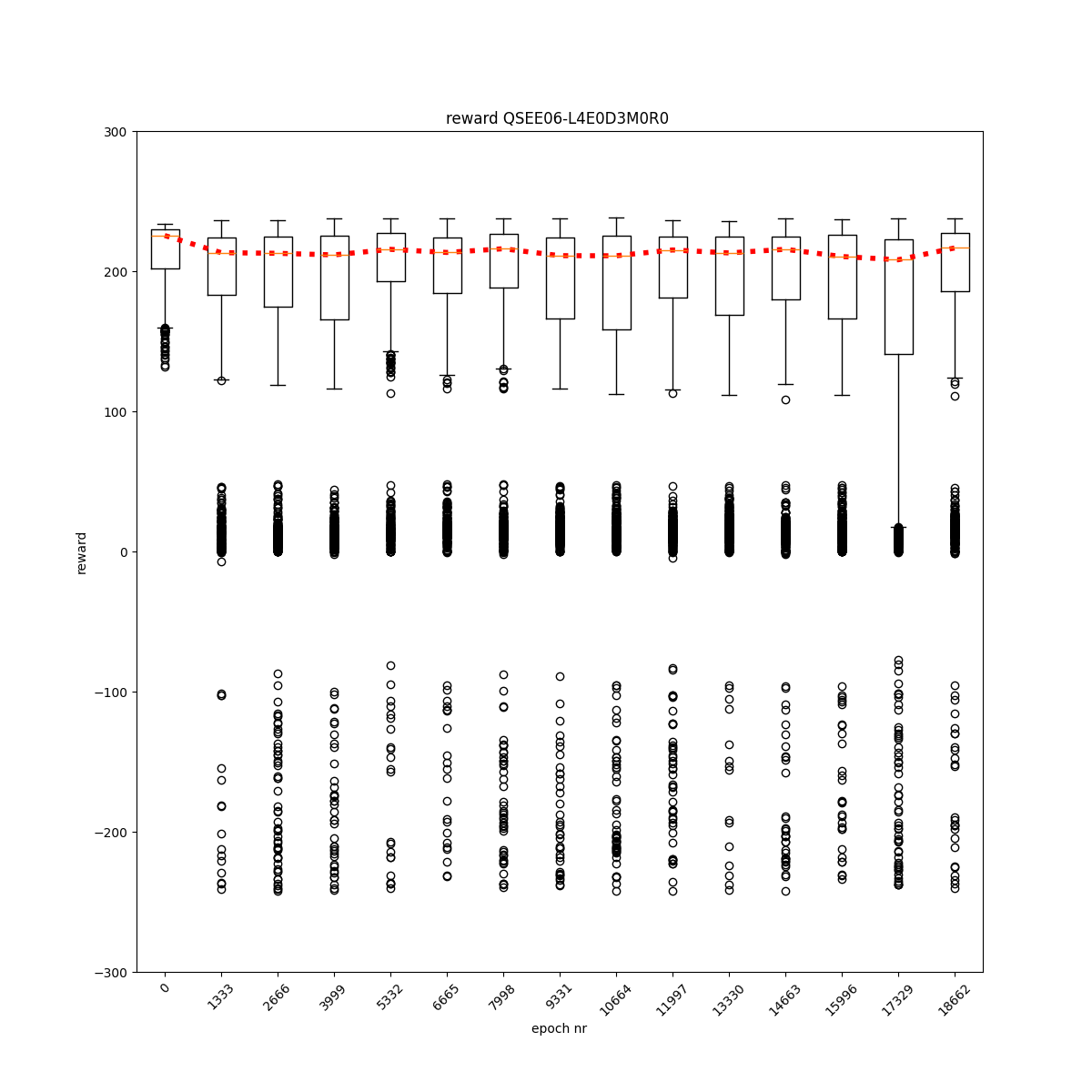

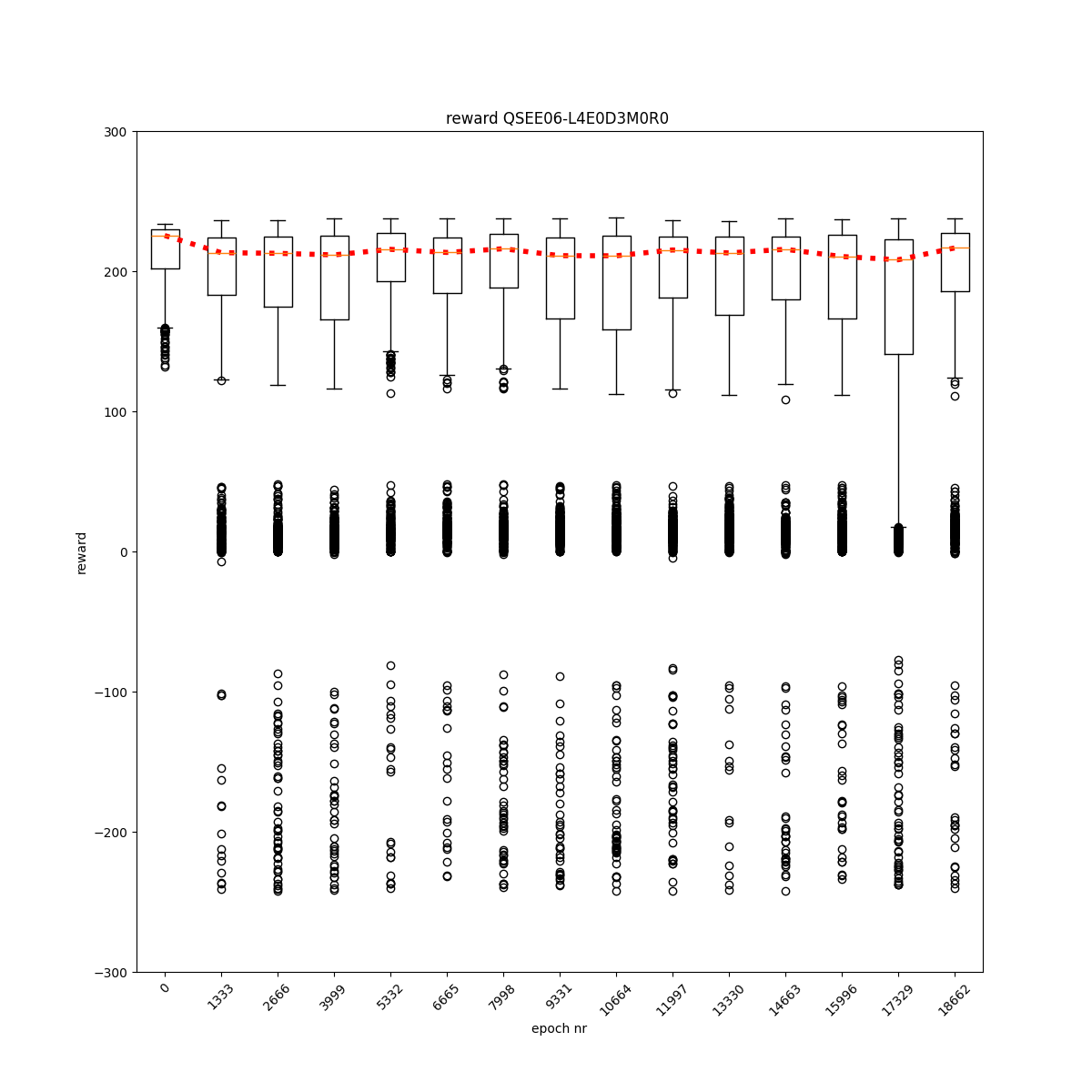

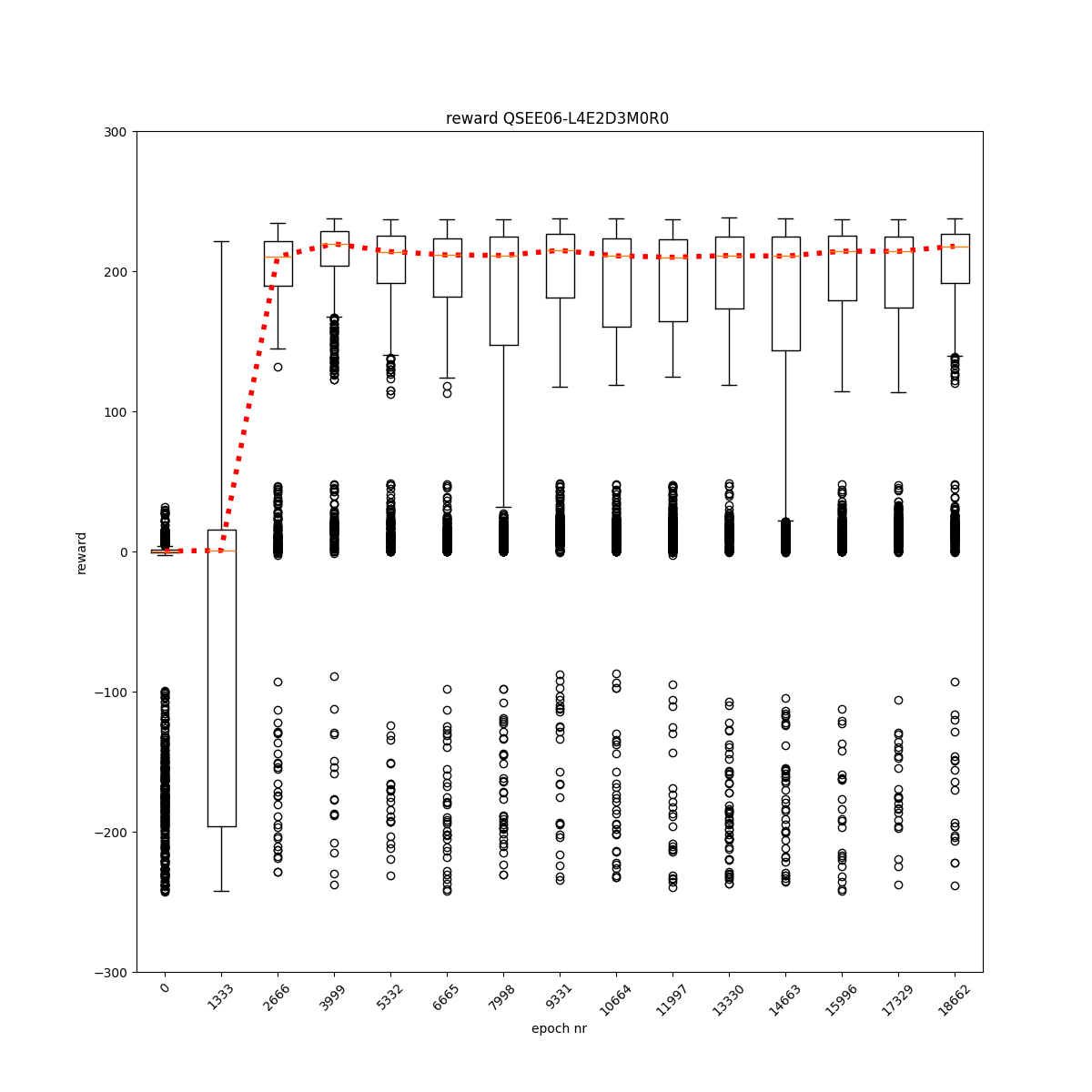

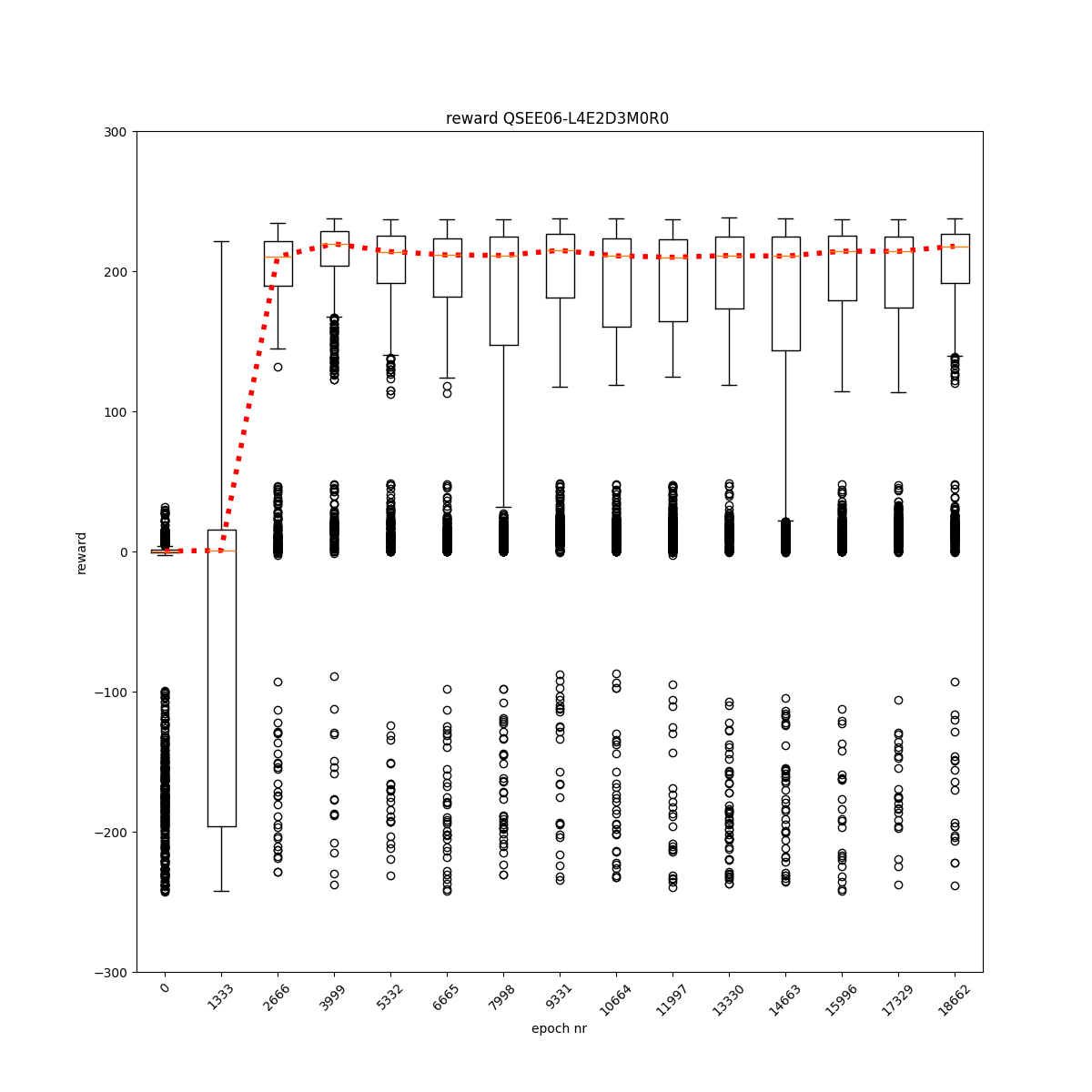

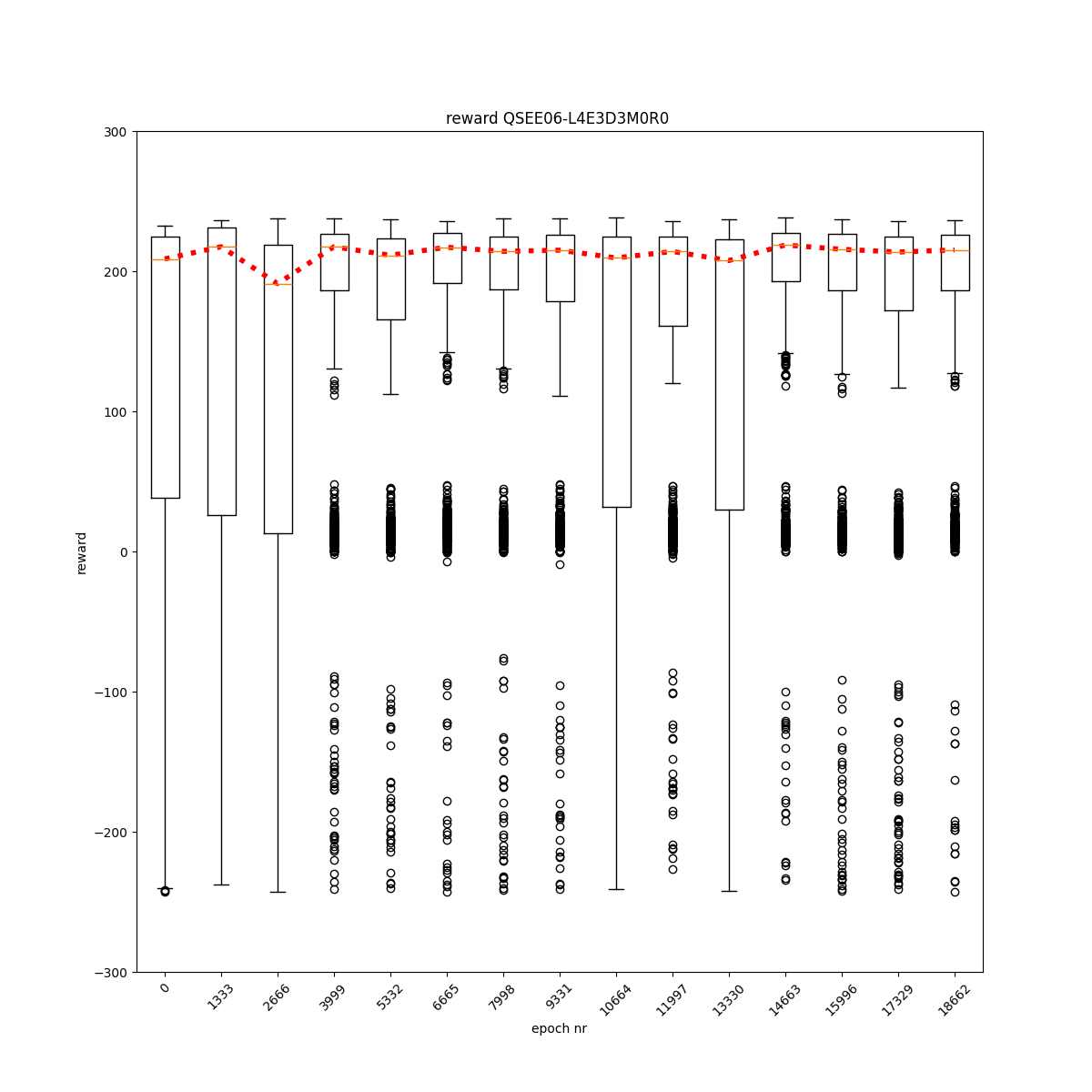

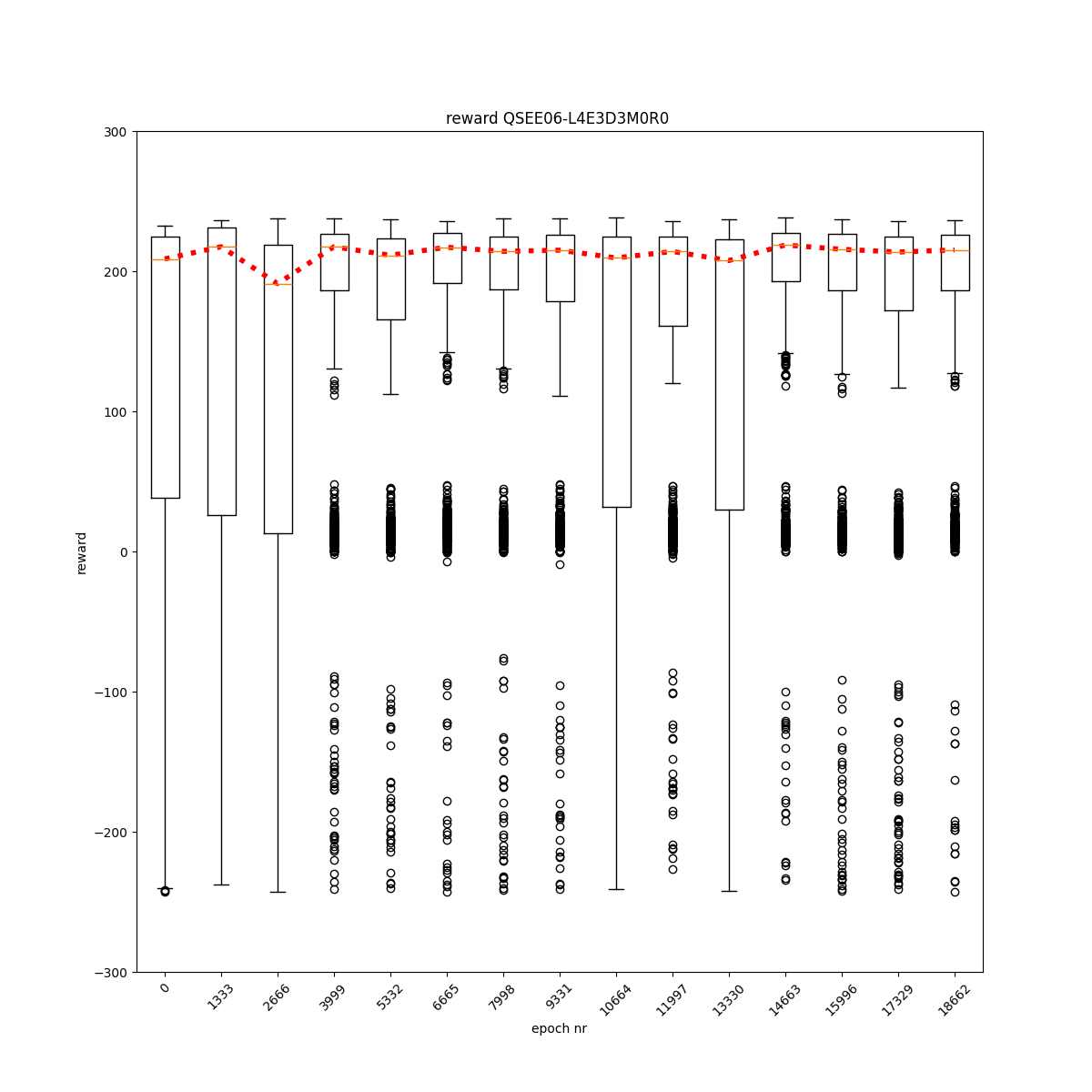

L4 E0 D3 M0 R0

q-values: videos 0-11 (two panels)

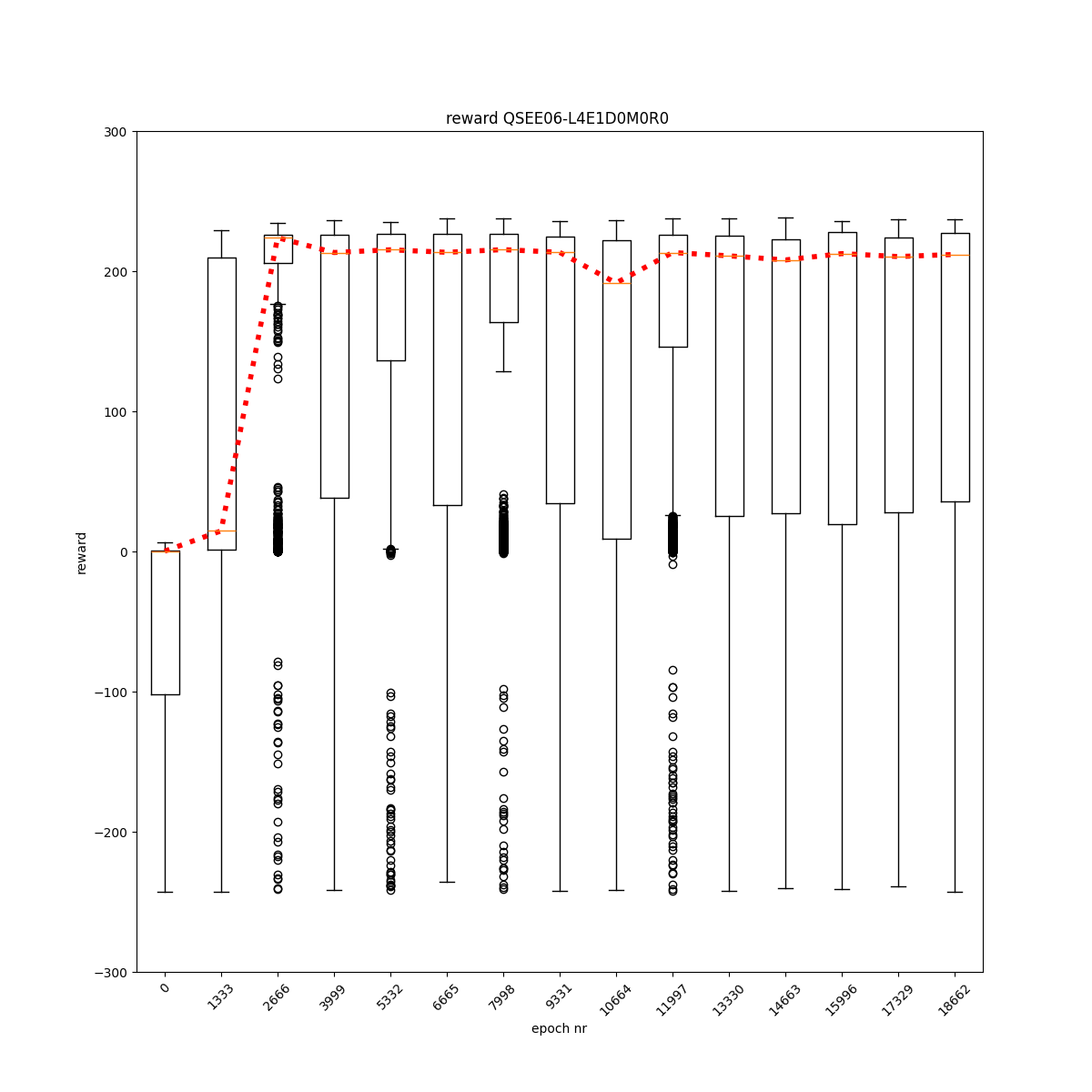

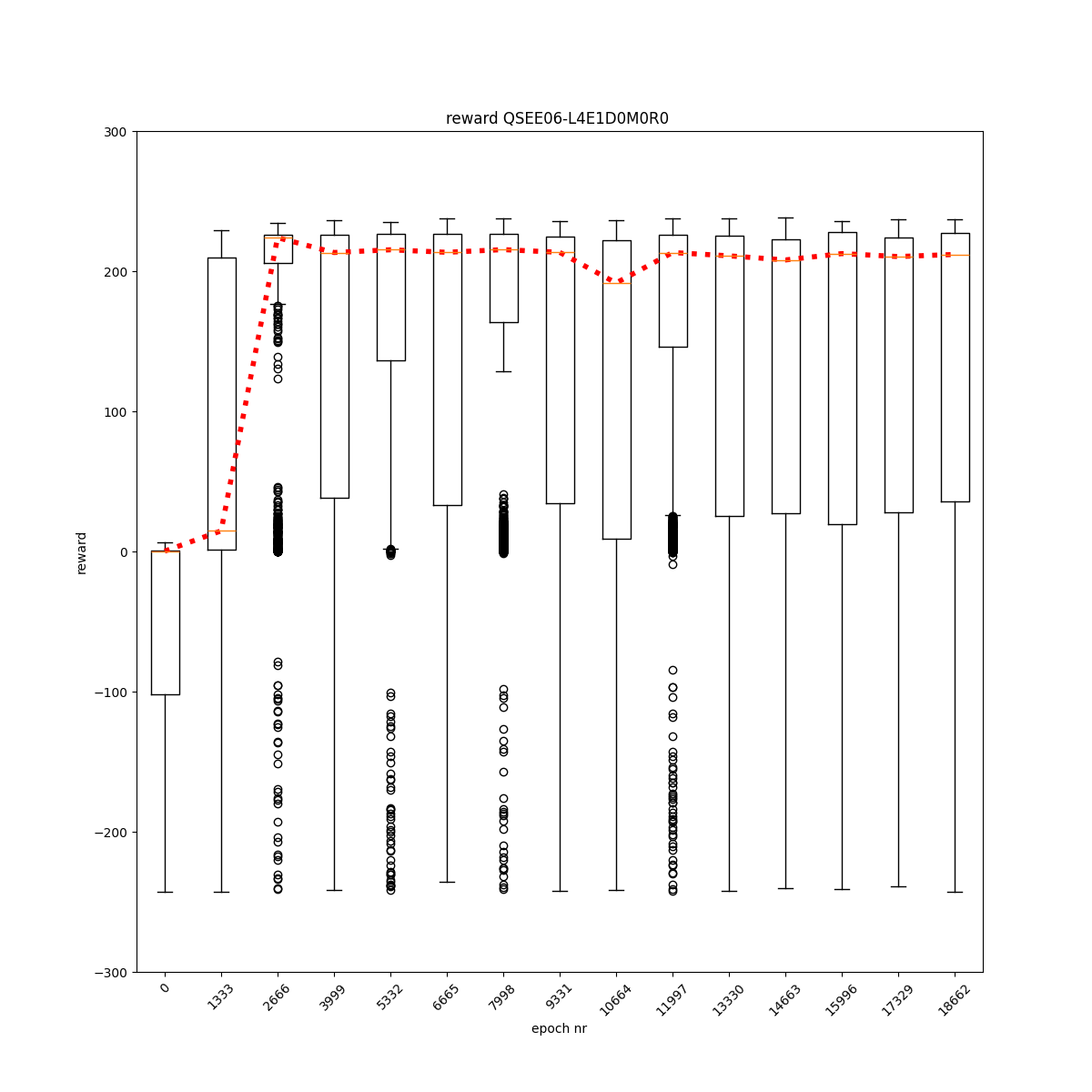

L4 E1 D0 M0 R0

q-values: videos 0-11 (two panels)

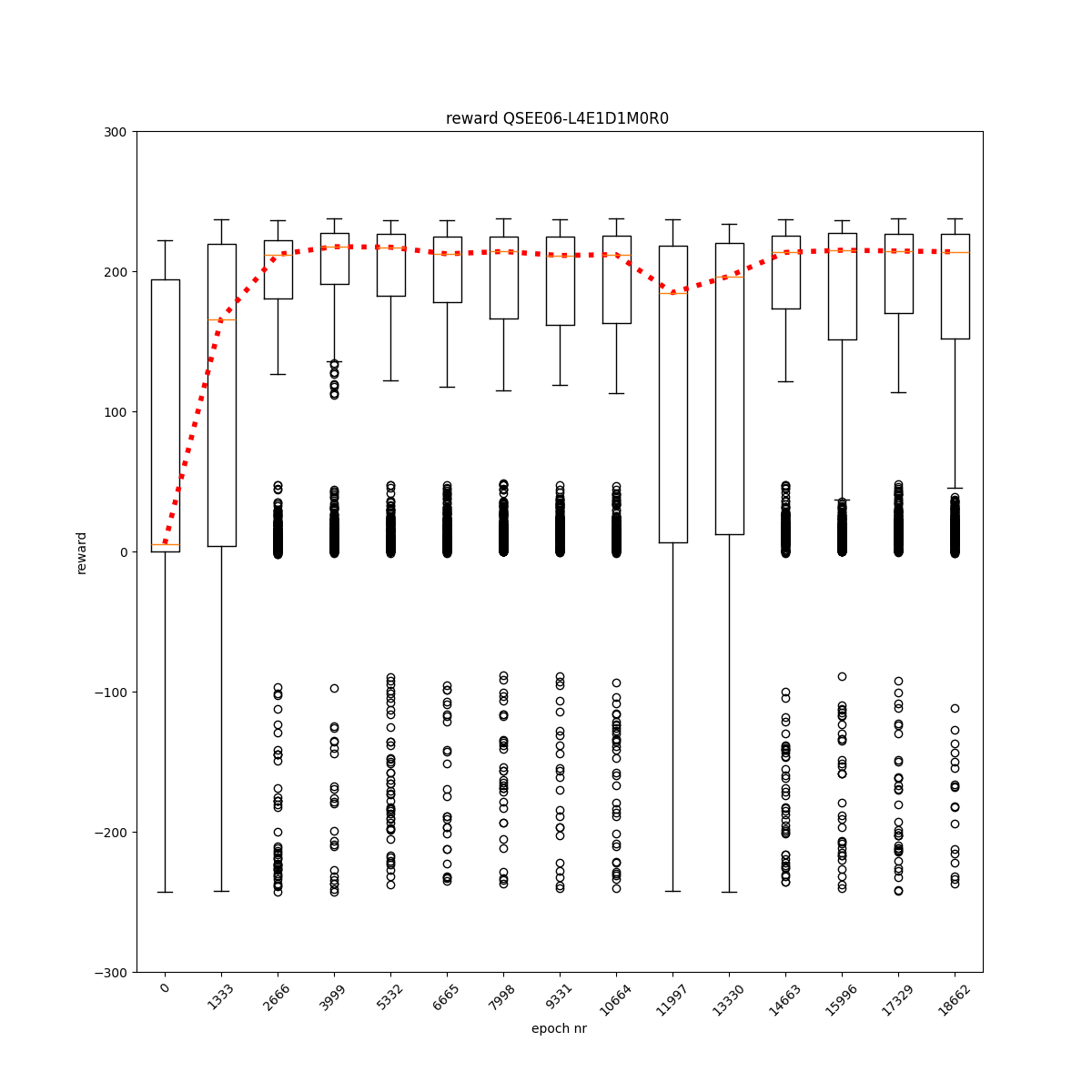

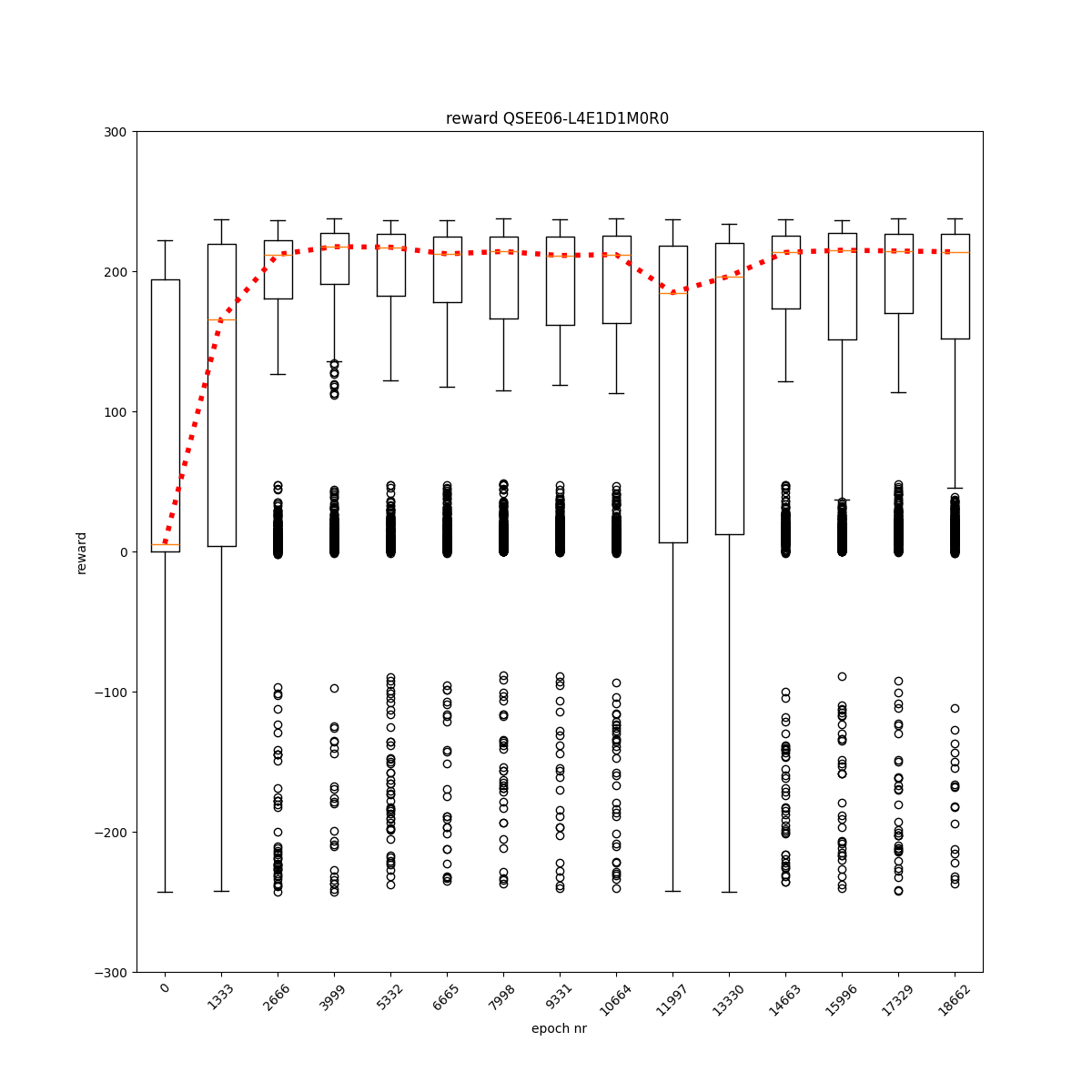

L4 E1 D1 M0 R0

q-values: videos 0-11 (two panels)

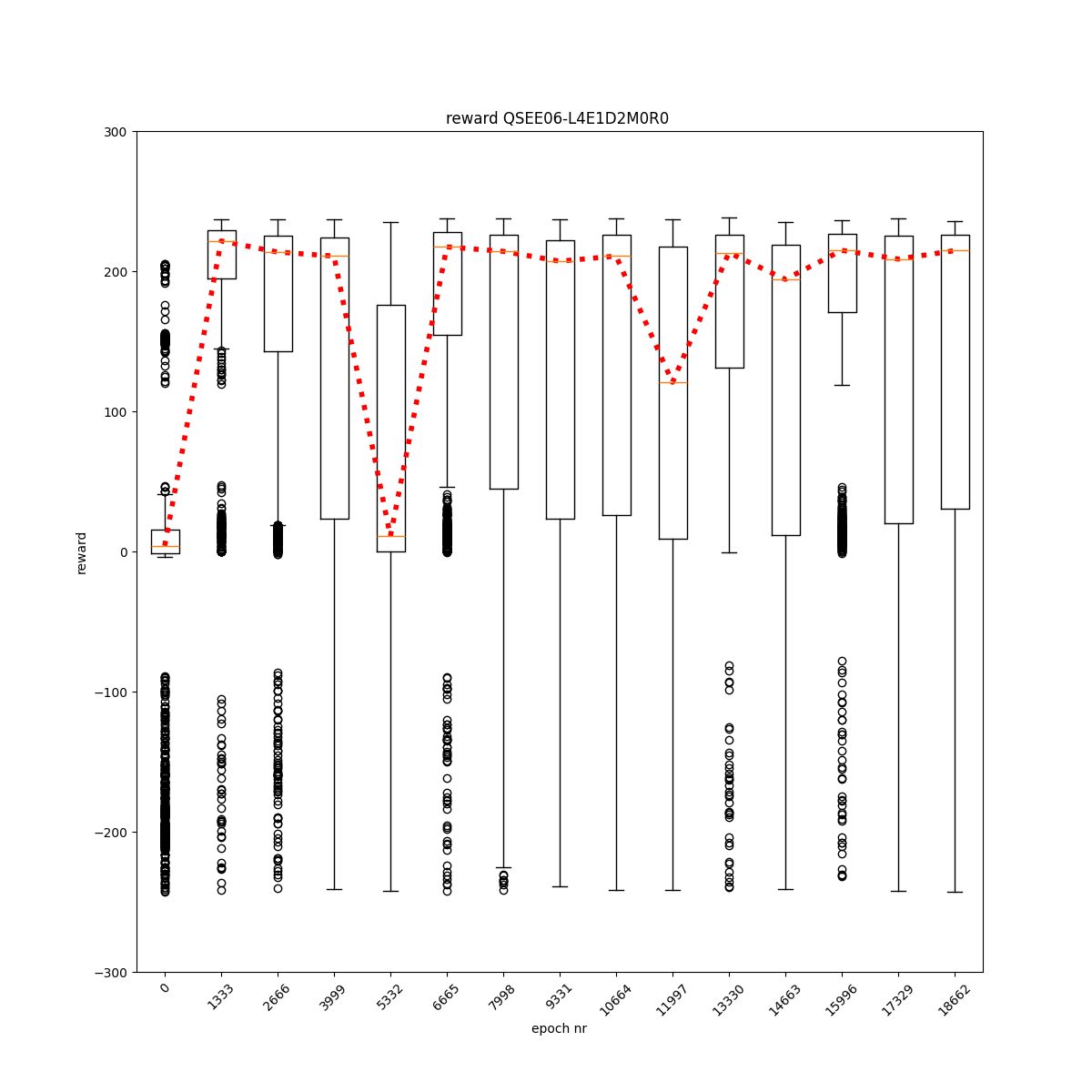

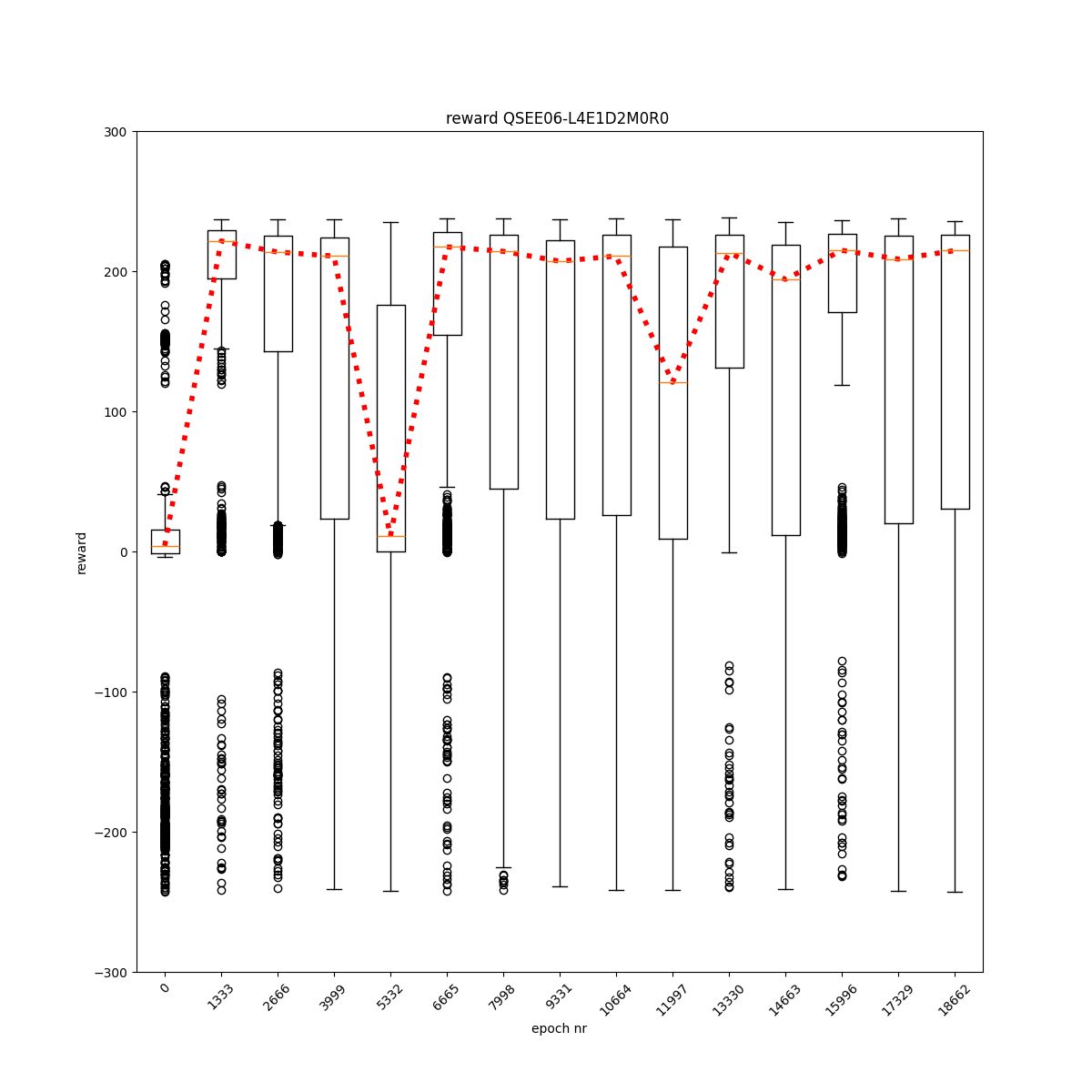

L4 E1 D2 M0 R0

q-values: videos 0-11 (two panels)

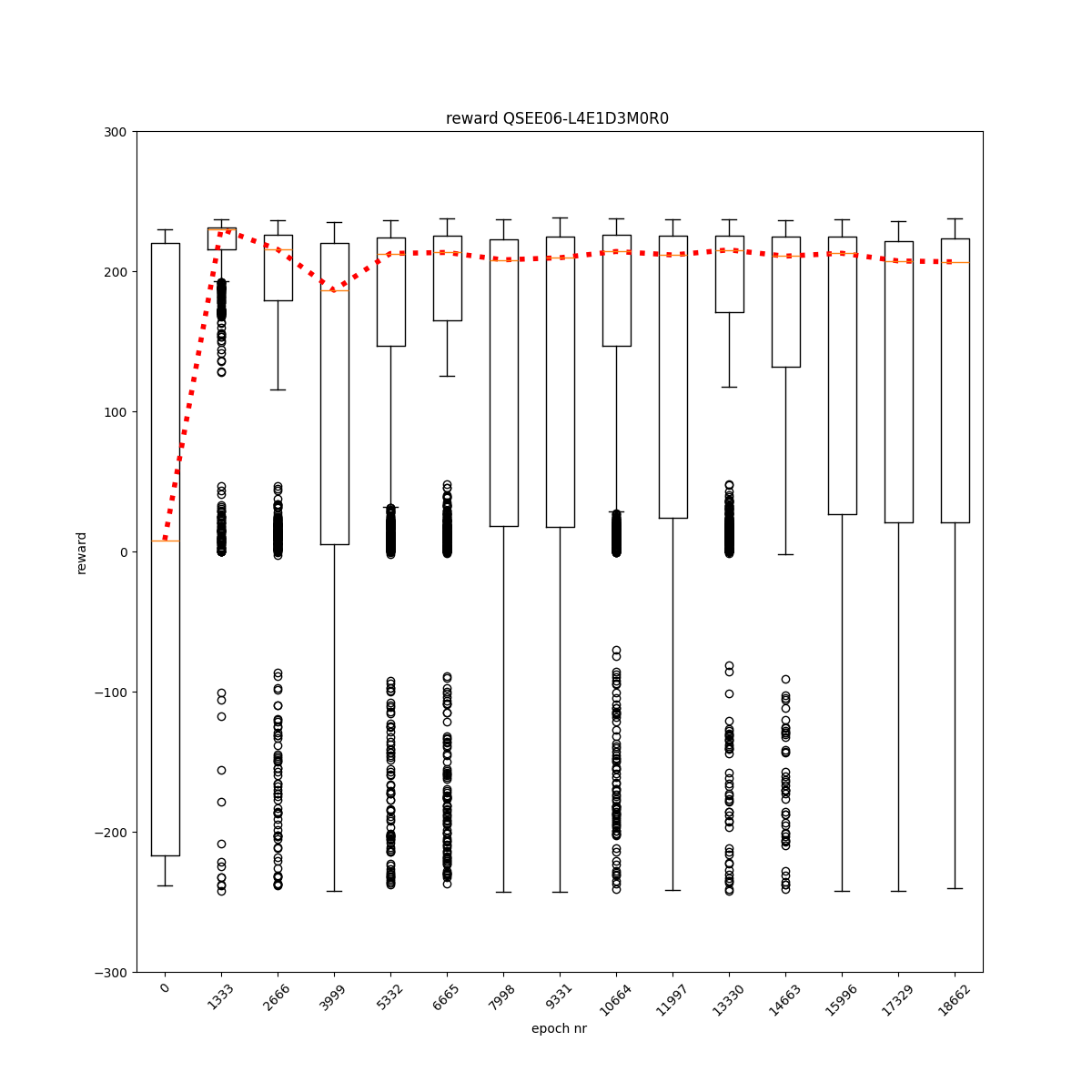

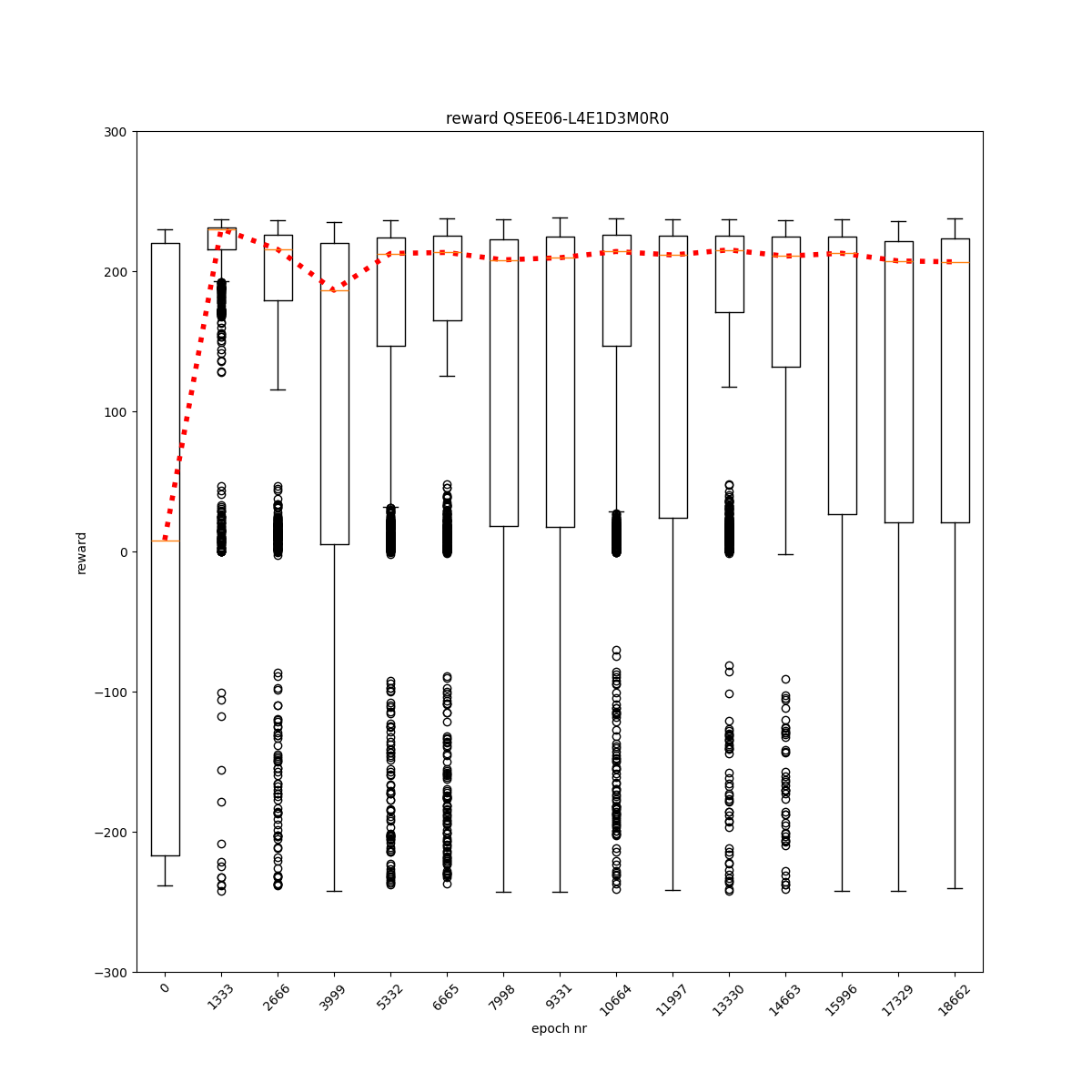

L4 E1 D3 M0 R0

q-values: videos 0-11 (two panels)

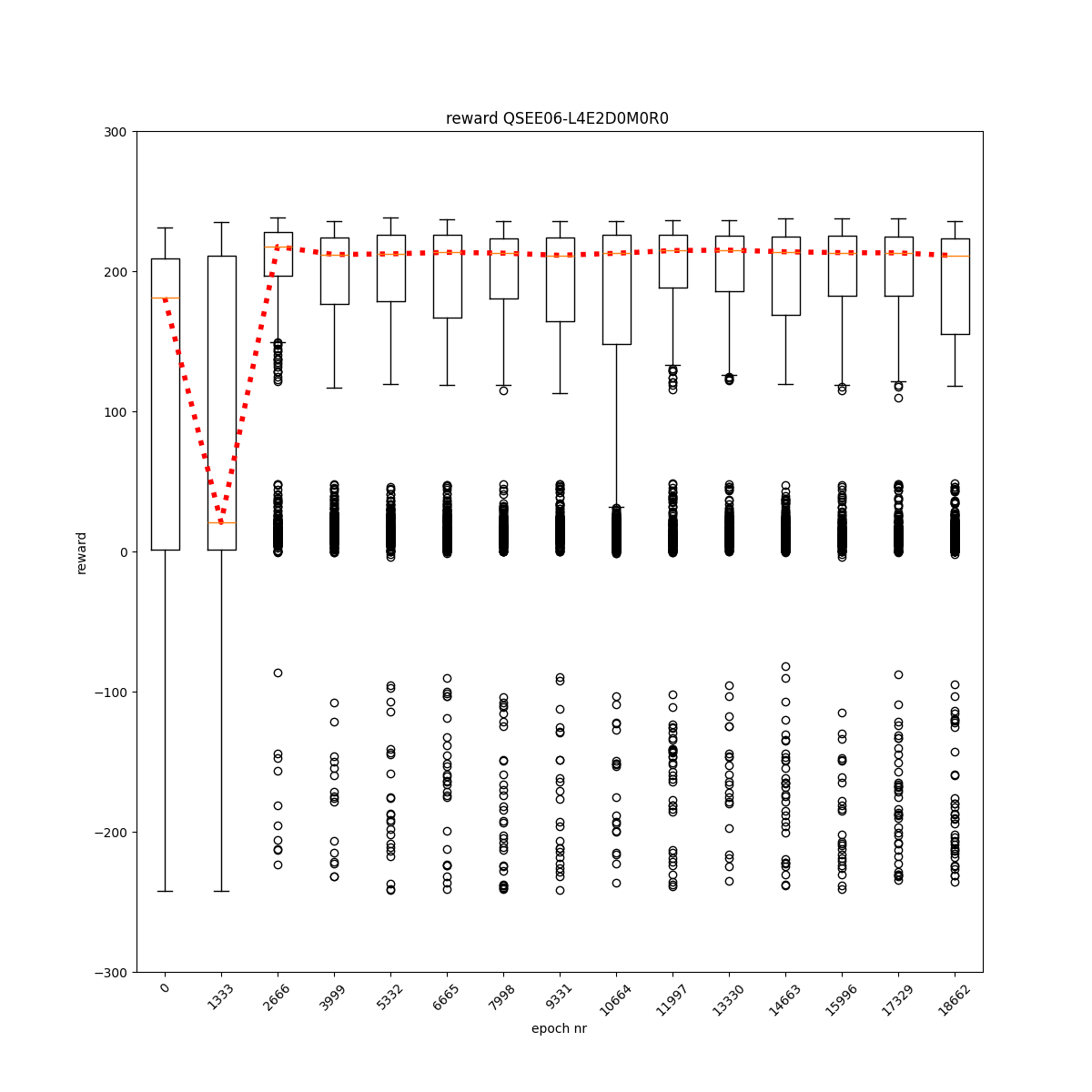

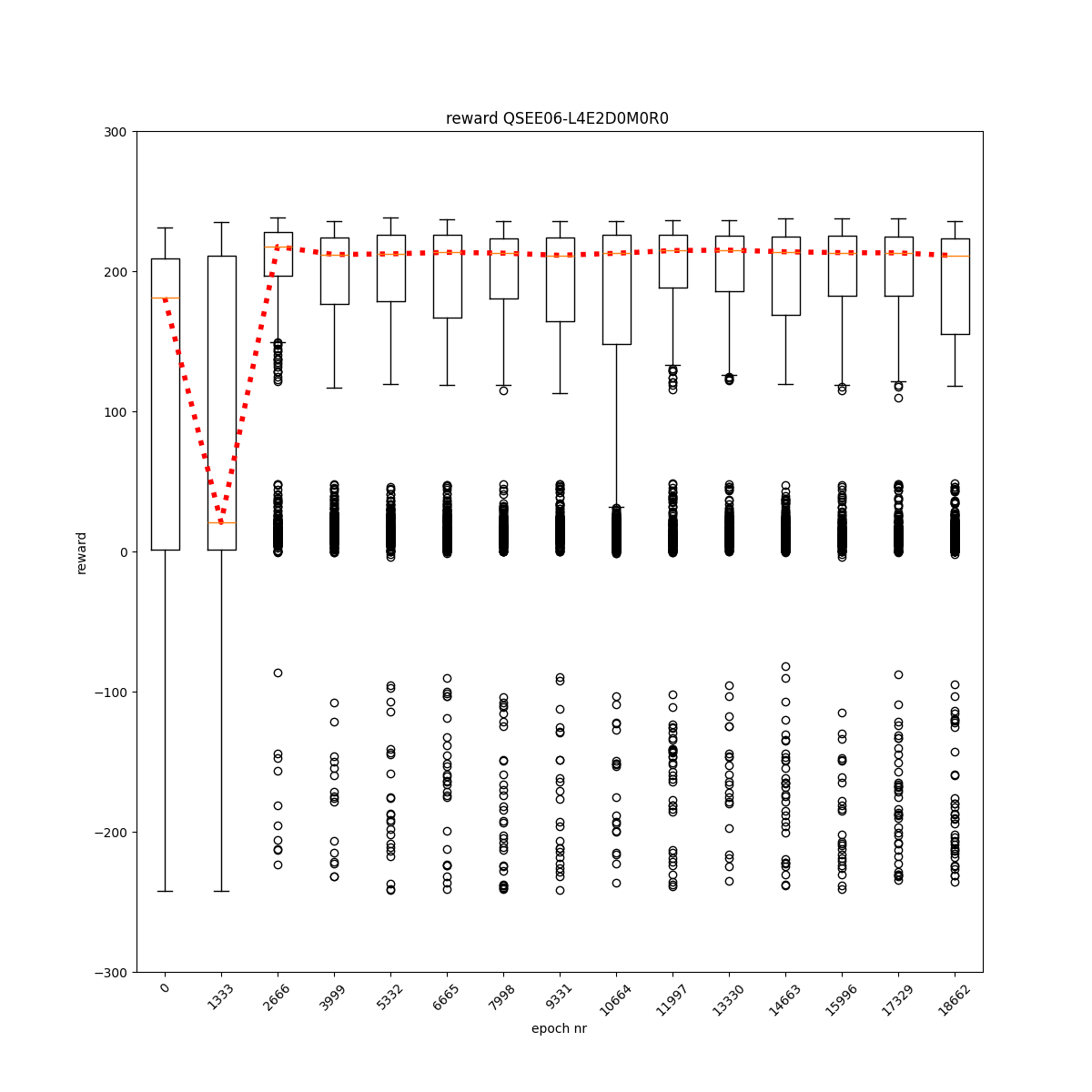

L4 E2 D0 M0 R0

q-values: videos 0-11 (two panels)

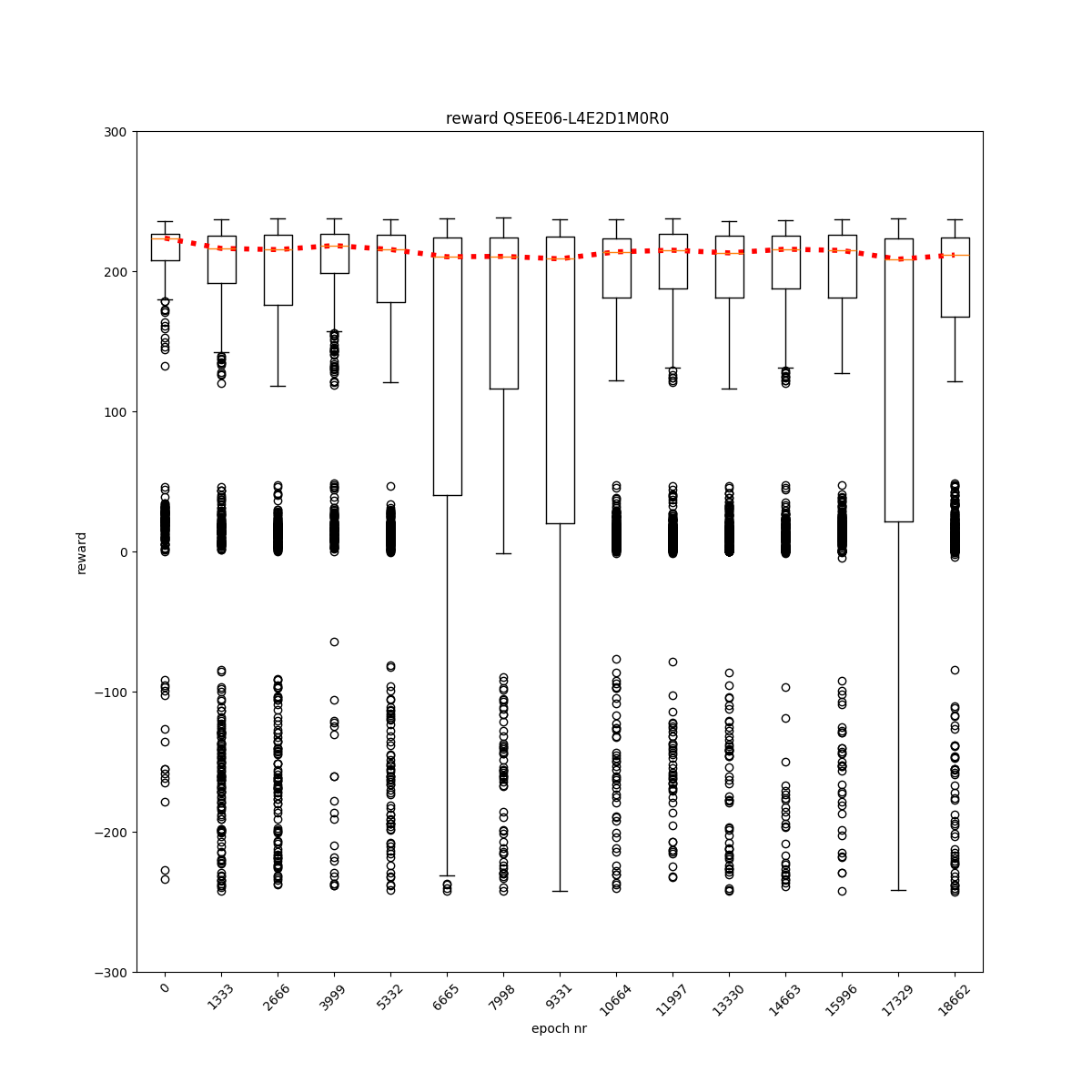

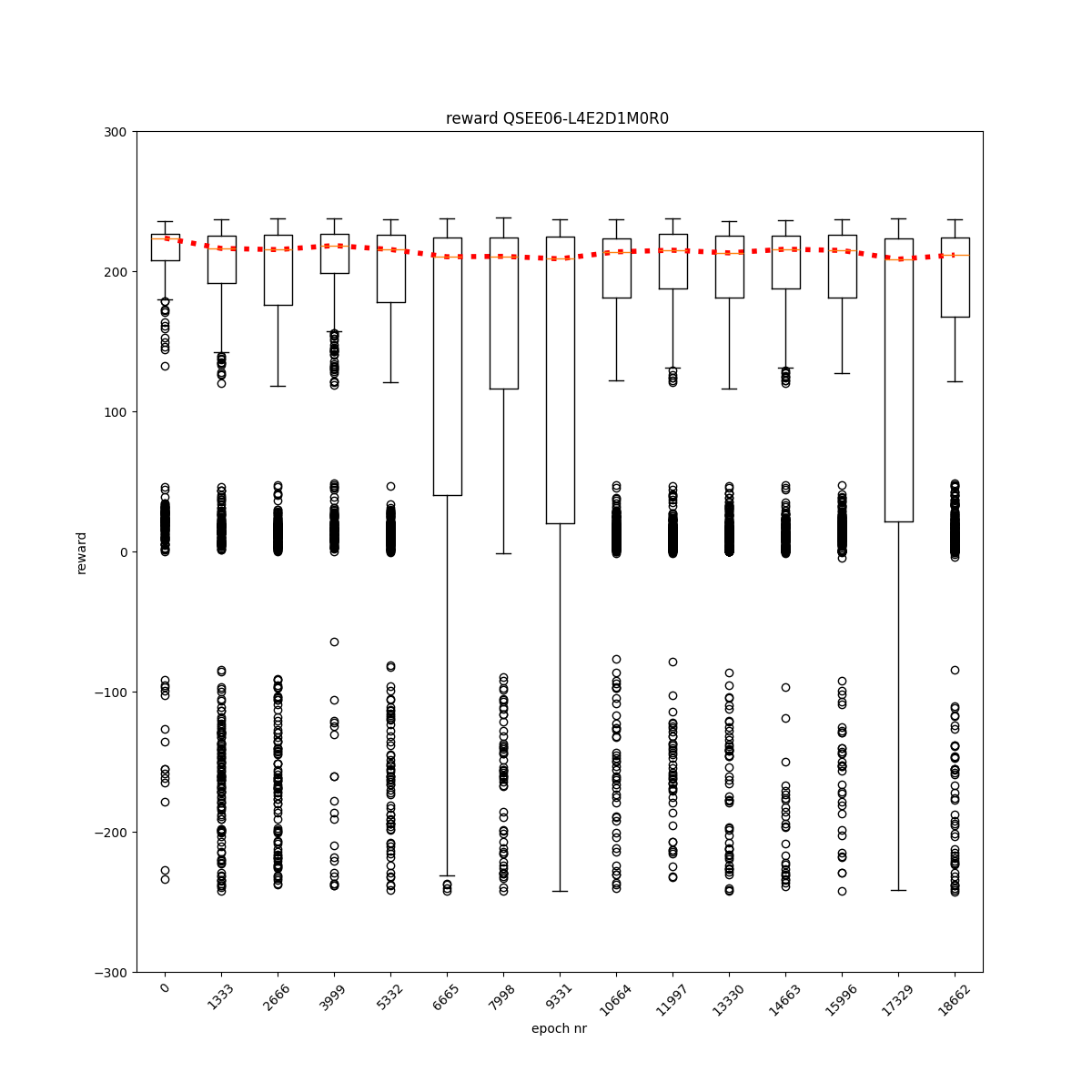

L4 E2 D1 M0 R0

q-values: videos 0-11 (two panels)

L4 E2 D2 M0 R0

q-values: videos 0-11 (two panels)

L4 E2 D3 M0 R0

q-values: videos 0-11 (two panels)

L4 E3 D0 M0 R0

q-values: videos 0-11 (two panels)

L4 E3 D1 M0 R0

q-values: videos 0-11 (two panels)

L4 E3 D2 M0 R0

q-values: videos 0-11 (two panels)

L4 E3 D3 M0 R0

q-values: videos 0-11 (two panels)

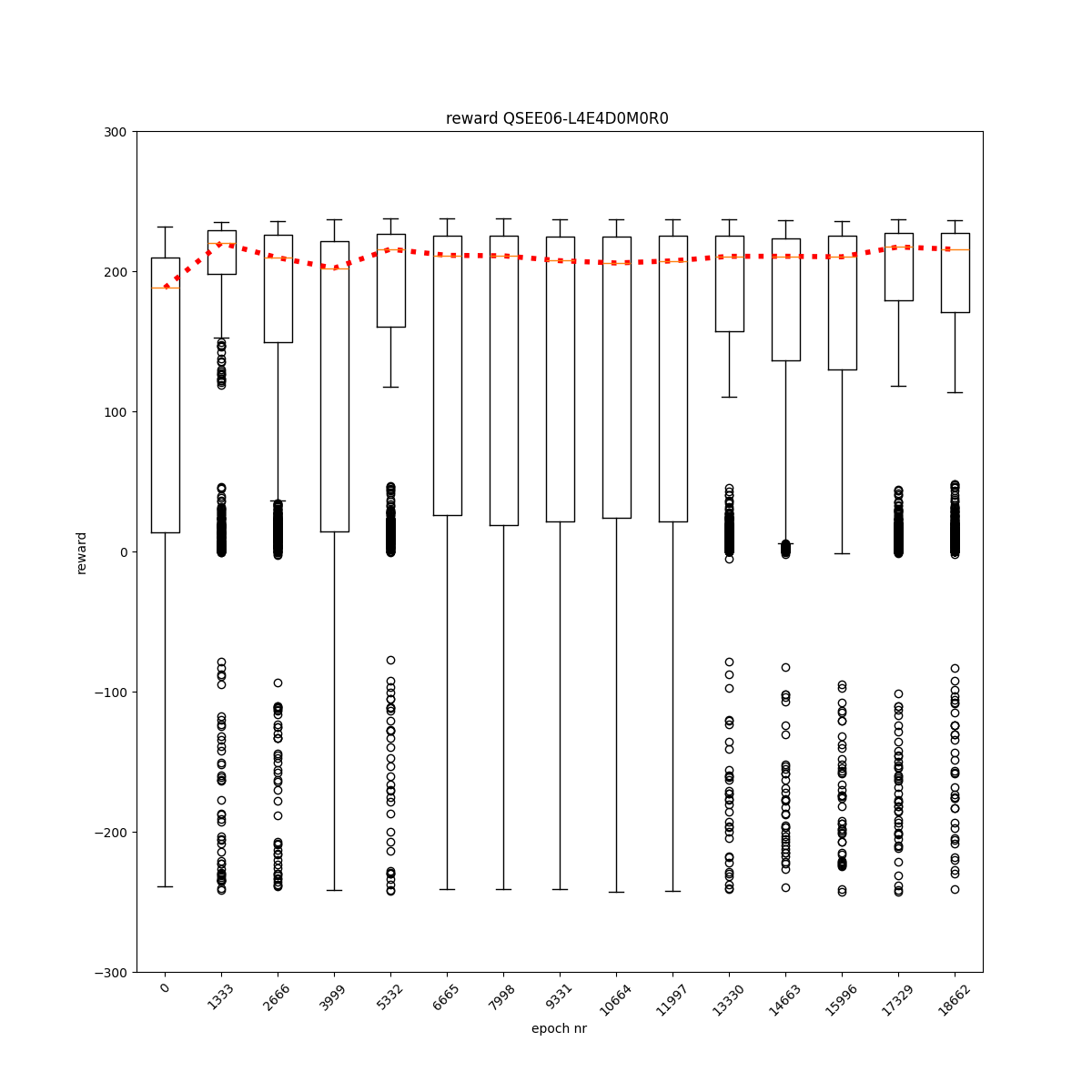

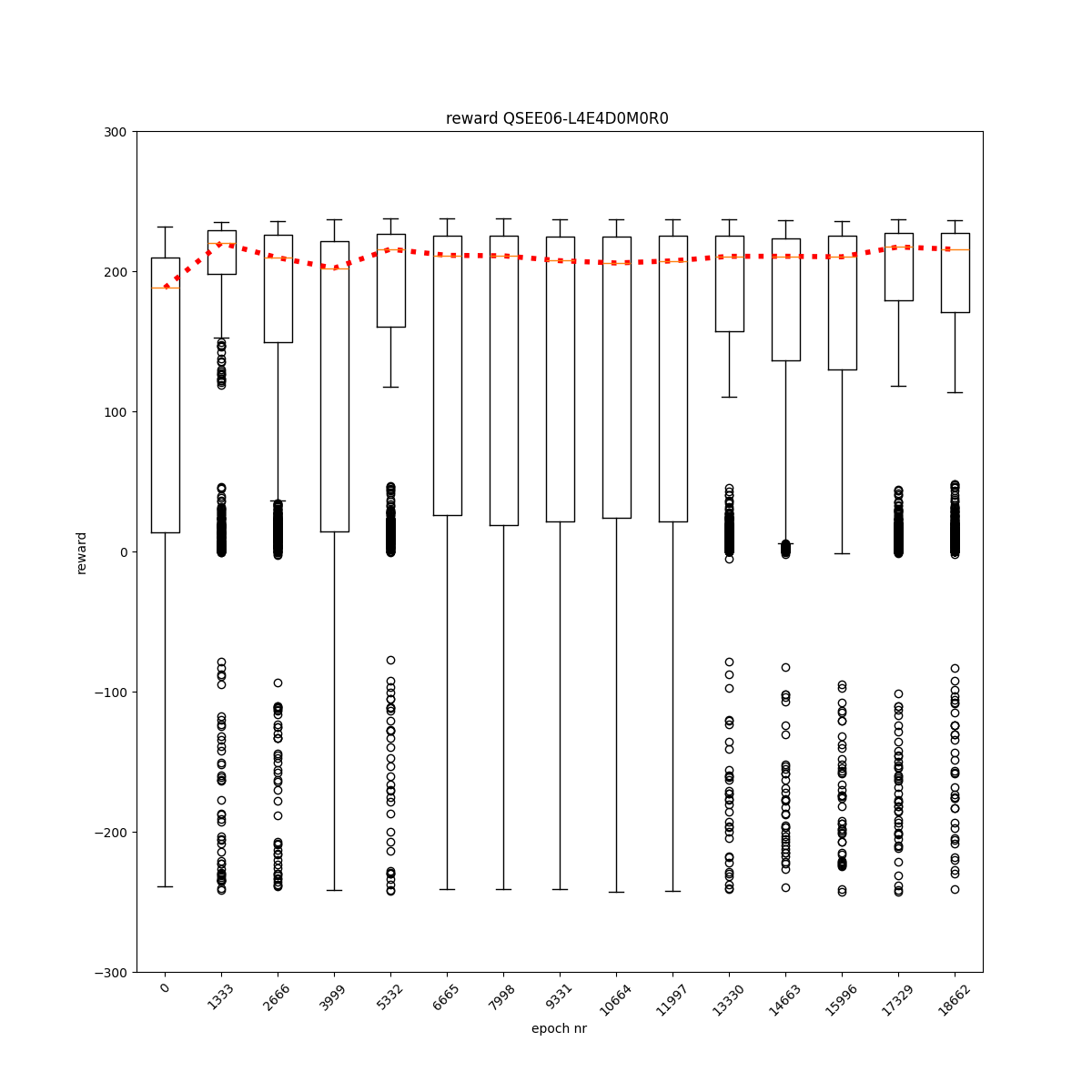

L4 E4 D0 M0 R0

q-values: videos 0-11 (two panels)

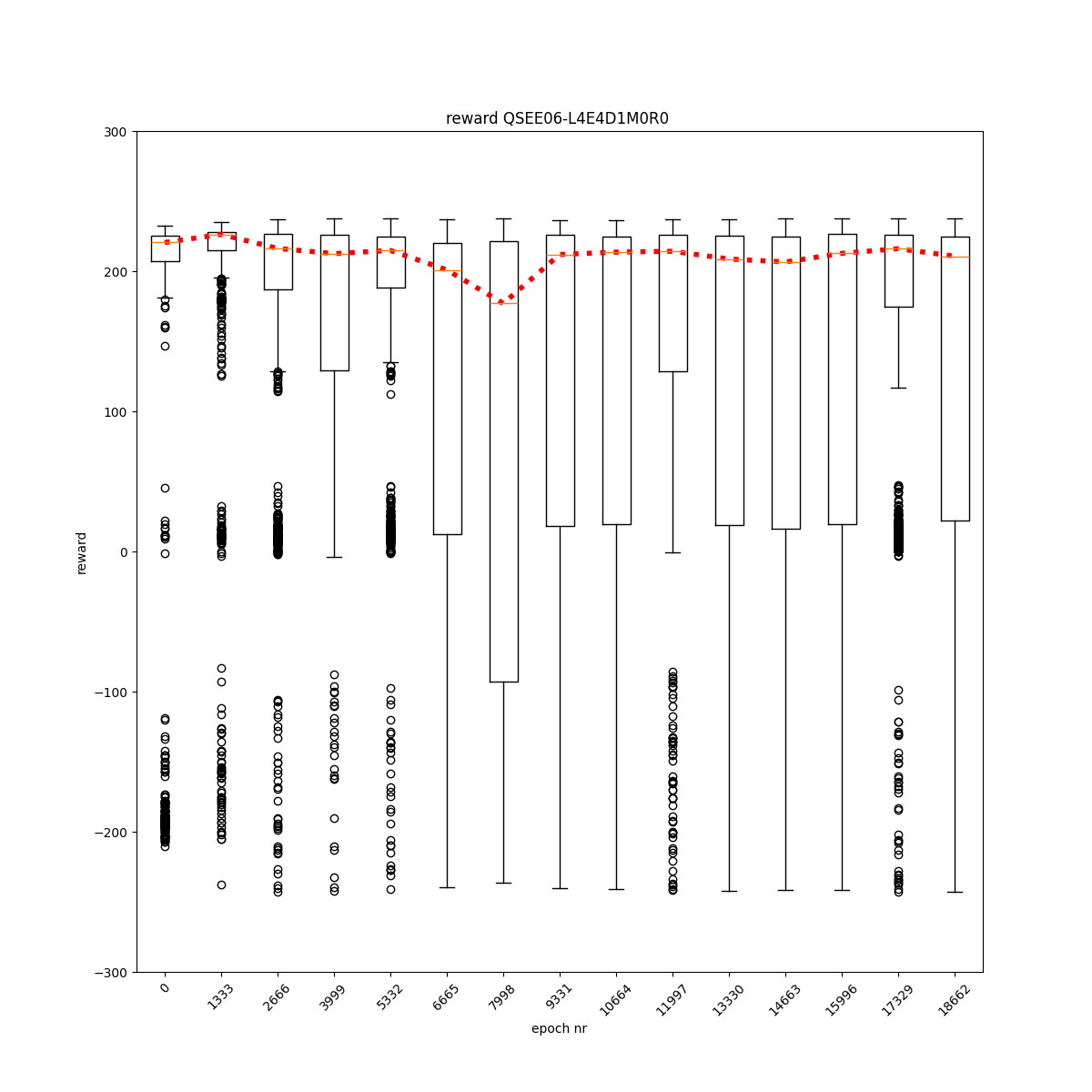

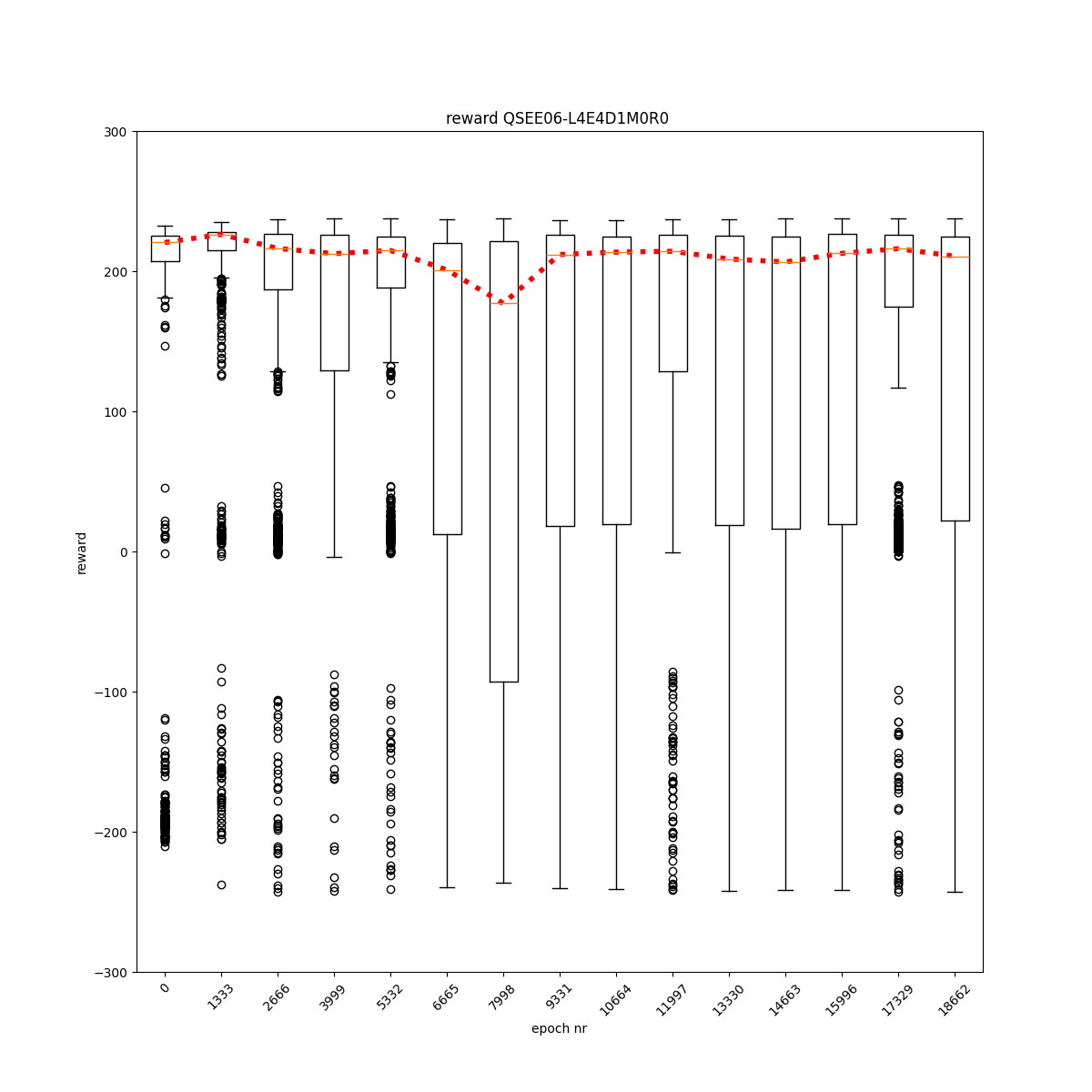

L4 E4 D1 M0 R0

q-values: videos 0-11 (two panels)